Discover the top 10 pro tools essentials every data scientist needs in 2025. Master cloud platforms, Python ecosystems, MLOps tools, and LLM frameworks to boost your data science career and deliver maximum impact.

Introduction: The Evolving Data Science Toolkit

In the rapidly advancing field of data science, mastering the right Pro Tools Essentials has become increasingly critical for professional success. As we navigate through 2025, the landscape of data science tools has evolved significantly, with new platforms and technologies emerging while established tools have matured into more sophisticated versions. The Pro Tools Essentials that data scientists need to master extend far beyond basic programming languages and statistical software—they encompass a comprehensive ecosystem of platforms that handle everything from data ingestion and processing to model deployment and monitoring. Understanding these Pro Tools Essentials is no longer optional for data scientists who aim to deliver maximum value in today’s competitive environment.

The current generation of Pro Tools Essentials reflects several important shifts in how data science work is conducted. There’s been a marked movement toward cloud-native platforms that offer unprecedented scalability and collaboration capabilities. Automation features have become more sophisticated, handling routine tasks while allowing data scientists to focus on higher-value analytical work. Integration between different tools has improved dramatically, creating more seamless workflows across the entire data science lifecycle. Perhaps most importantly, the modern Pro Tools Essentials prioritize not just analytical capability but also deployment efficiency, model management, and business impact measurement.

This guide explores the ten most critical Pro Tools Essentials that every data scientist should master in 2025. These tools represent the foundation upon which successful data science careers are built, enabling professionals to work more efficiently, collaborate more effectively, and deliver more impactful results. From data processing platforms to machine learning operations frameworks, these Pro Tools Essentials cover the complete spectrum of modern data science work, providing the capabilities needed to transform raw data into actionable business intelligence.

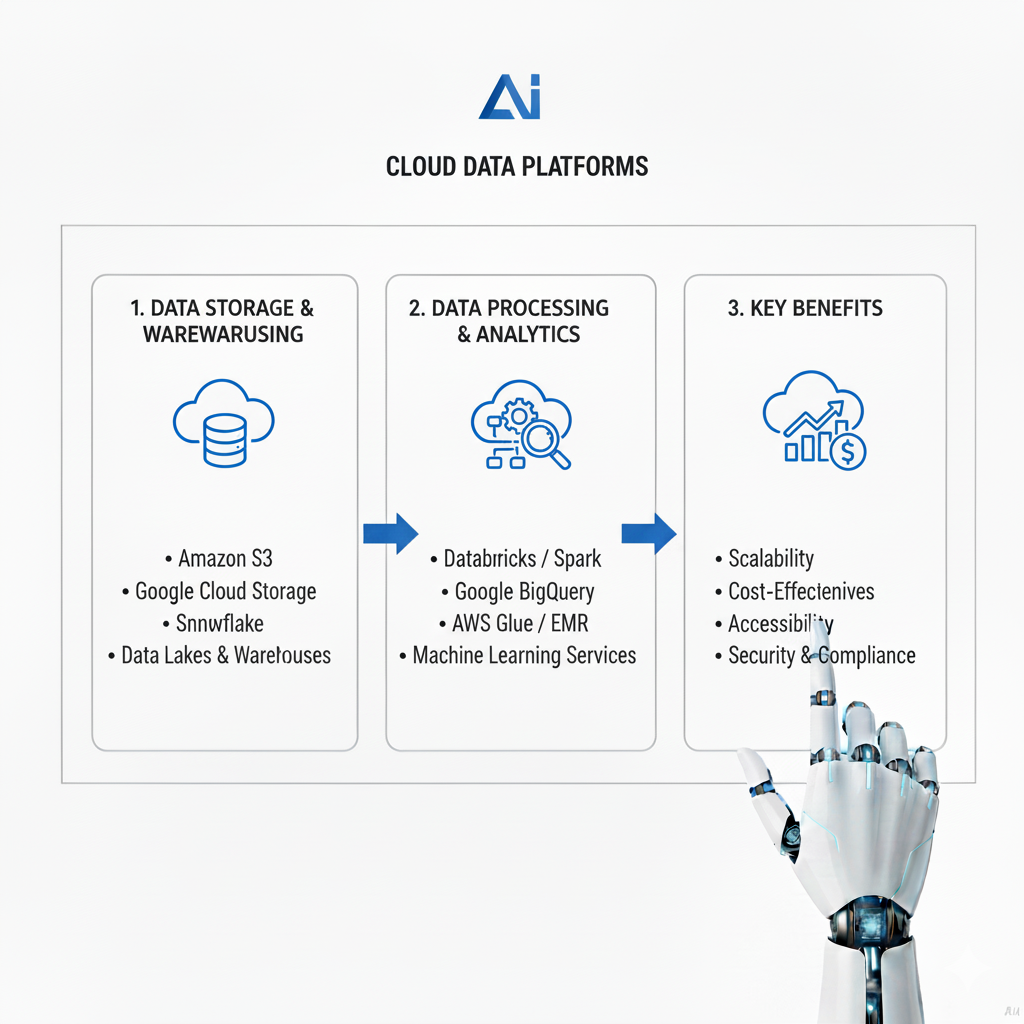

1. Cloud Data Platforms: The Foundational Infrastructure

Modern data science begins with robust data infrastructure, which makes cloud data platforms the most foundational of the Pro Tools Essentials for any data scientist working in 2025. Platforms like Snowflake, Databricks, and Google BigQuery have grown into comprehensive analytical environments that handle everything from data ingestion and transformation to advanced analytics and machine learning. Mastering these platforms is now fundamental to conducting data science at scale.

The strength of modern cloud data platforms lies in handling massive datasets while integrating with the other Pro Tools Essentials in the data science toolkit. Snowflake's data cloud architecture, for instance, lets data scientists work with structured and semi-structured data across multiple cloud providers without managing the underlying infrastructure. Databricks' Lakehouse Platform combines data engineering and data science in a unified environment, using Apache Spark for distributed computing alongside built-in machine learning tooling. Google BigQuery offers a serverless architecture that scales automatically with query demand, which makes it well suited to exploratory analysis on very large datasets.

The practical mastery of these platforms involves understanding not just how to query data but how to optimize performance, manage costs, and implement security best practices. Data scientists need to know how to use features like Snowflake’s zero-copy cloning for creating development environments, Databricks’ Delta Lake for reliable data pipelines, and BigQuery’s ML integration for building models directly within the data warehouse. Understanding these capabilities allows data scientists to work more efficiently, reducing the time between data access and insight generation while ensuring that their work aligns with organizational data governance standards.
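As a concrete illustration of two of these features, the Python sketch below only assembles the SQL text for a Snowflake zero-copy clone and a BigQuery ML training statement; executing it would require each vendor's client library and credentials, which are left out. The helper names and every database, dataset, table, and column name are hypothetical placeholders.

```python
# Hypothetical helpers that build SQL for two warehouse features.
# All identifiers passed in below are placeholders, not a real schema.


def snowflake_clone_sql(source_db: str, dev_db: str) -> str:
    """Zero-copy clone: the new database shares storage with the source until either side changes."""
    return f"CREATE DATABASE {dev_db} CLONE {source_db};"


def bigquery_ml_sql(dataset: str, model: str, table: str, label_col: str) -> str:
    """Train a logistic regression model inside BigQuery using BigQuery ML."""
    return (
        f"CREATE OR REPLACE MODEL `{dataset}.{model}`\n"
        f"OPTIONS(model_type='logistic_reg', input_label_cols=['{label_col}']) AS\n"
        f"SELECT * FROM `{dataset}.{table}`;"
    )


if __name__ == "__main__":
    print(snowflake_clone_sql("analytics_prod", "analytics_dev"))
    print(bigquery_ml_sql("marketing", "churn_model", "customers", "churned"))
```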

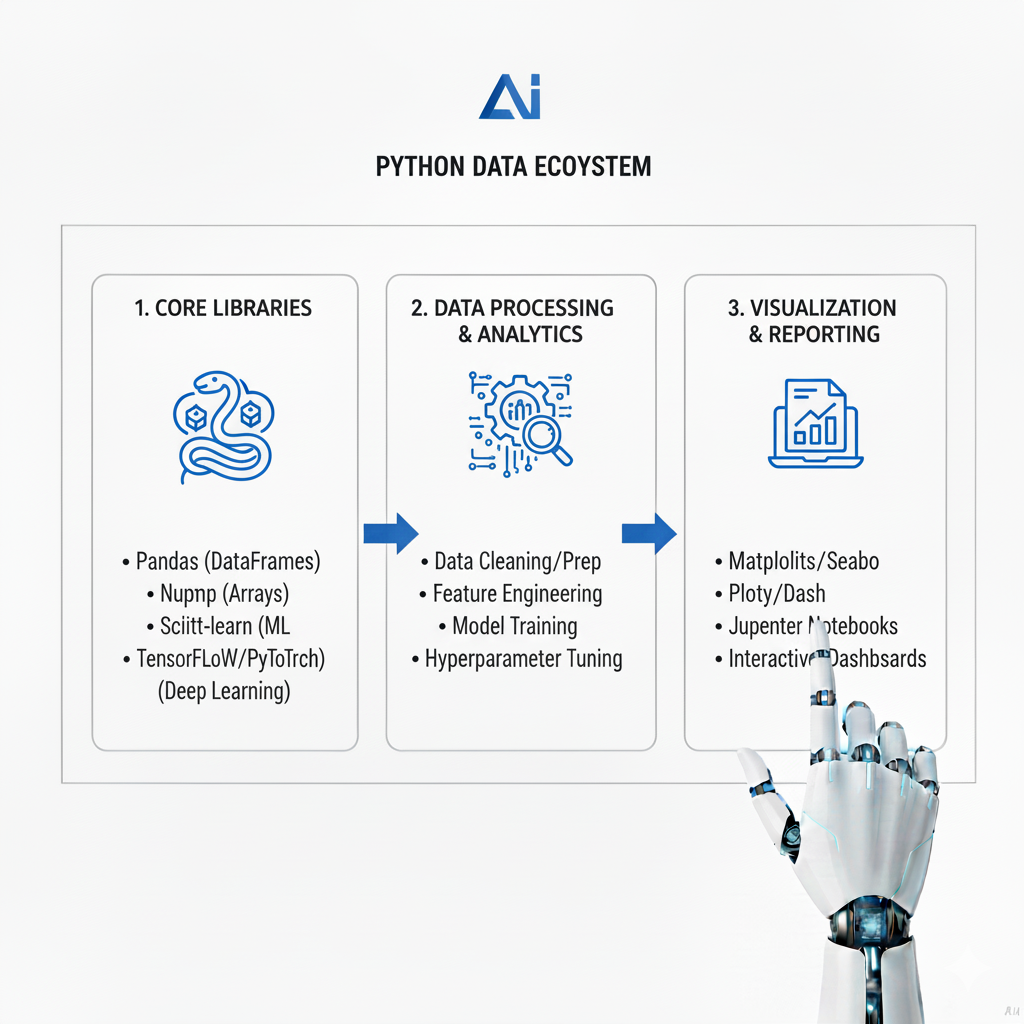

2. Python Data Ecosystem: The Analytical Core

The Python data science ecosystem remains one of the most critical Pro Tools Essentials, though its composition and best practices have evolved significantly by 2025. While familiar libraries like Pandas, NumPy, and Scikit-learn continue to be fundamental, the ecosystem has expanded to include new libraries and frameworks that address emerging challenges in scalability, performance, and specialized analytical tasks. Mastery of this ecosystem involves not just understanding individual libraries but knowing how to combine them effectively to solve complex data science problems.

The modern Python data scientist's toolkit includes several essential components beyond the traditional basics. Polars has emerged as a powerful alternative to Pandas for large datasets, offering higher speed and better memory efficiency on many workloads thanks to its Rust-based, multithreaded engine. Scikit-learn remains the go-to library for traditional machine learning, supplemented by XGBoost and LightGBM for gradient boosting and Optuna for hyperparameter optimization. For deep learning, PyTorch has solidified its position as the preferred framework for most research and development work, though TensorFlow remains important for production deployments in certain environments.

The advanced application of these Pro Tools Essentials involves understanding not just their basic functionality but their performance characteristics, integration patterns, and deployment considerations. Data scientists need to know when to use Polars versus Pandas based on dataset size and operation complexity. They should understand how to use PyTorch with distributed training frameworks for large models, and how to optimize Scikit-learn pipelines for production deployment. Perhaps most importantly, they need to master the art of combining these libraries into cohesive analytical workflows that leverage the strengths of each component while maintaining code quality and reproducibility.
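A minimal scikit-learn sketch of the pipeline idea: bundling preprocessing and the model into one `Pipeline` keeps training-time and serving-time transformations identical, which is much of what makes Scikit-learn code ready for production. The synthetic dataset and the chosen estimators are illustrative assumptions.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic data stands in for a real dataset.
X, y = make_classification(n_samples=500, n_features=10, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=0)

pipeline = Pipeline([
    ("scale", StandardScaler()),                   # fitted on training data only
    ("model", LogisticRegression(max_iter=1000)),
])
pipeline.fit(X_train, y_train)

accuracy = pipeline.score(X_test, y_test)
print(f"held-out accuracy: {accuracy:.3f}")
```

Because the scaler is fitted inside the pipeline, the whole object can be serialized (for example with `joblib.dump`) and served as a single artifact.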

3. Containerization Platforms: The Deployment Foundation

Containerization has moved from a DevOps specialty to one of the Pro Tools Essentials that every data scientist must understand. Docker and Kubernetes form the foundation of modern model deployment, enabling data scientists to create reproducible environments, scale applications efficiently, and ensure consistent behavior across development, testing, and production environments. In 2025, containerization knowledge is no longer optional for data scientists who want to see their models deployed and delivering business value.

The practical application of containerization in data science involves several key competencies. Data scientists need to understand how to create Docker images that encapsulate their models and dependencies, write Dockerfiles that balance fast development iteration with production performance, and manage container registries for versioning and deployment. With Kubernetes, they should understand basic concepts like pods, services, and deployments, even if infrastructure management is primarily handled by dedicated platform teams. This knowledge enables data scientists to collaborate more effectively with engineering teams and take greater ownership of the full model lifecycle.
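To make pods, services, and deployments less abstract, here is a hedged sketch that builds a Kubernetes Deployment and Service as Python dictionaries and prints them as JSON, which `kubectl apply -f` accepts just as it accepts YAML. The app name, image path, port numbers, probe path, and resource figures are placeholders, not recommendations.

```python
import json

# Hypothetical values: app name, image, port, and resource sizes are placeholders.
APP = "churn-model"
PORT = 8080

deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": APP},
    "spec": {
        "replicas": 2,  # two identical pods for availability
        "selector": {"matchLabels": {"app": APP}},  # must match the pod template labels
        "template": {
            "metadata": {"labels": {"app": APP}},
            "spec": {
                "containers": [{
                    "name": APP,
                    "image": "registry.example.com/churn-model:1.0.0",
                    "ports": [{"containerPort": PORT}],
                    "resources": {
                        "requests": {"cpu": "500m", "memory": "1Gi"},
                        "limits": {"memory": "2Gi"},
                    },
                    "readinessProbe": {"httpGet": {"path": "/healthz", "port": PORT}},
                }],
            },
        },
    },
}

# A Service gives the pods one stable address and load-balances across them.
service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": APP},
    "spec": {
        "selector": {"app": APP},
        "ports": [{"port": 80, "targetPort": PORT}],
    },
}

if __name__ == "__main__":
    manifest = {"apiVersion": "v1", "kind": "List", "items": [deployment, service]}
    print(json.dumps(manifest, indent=2))
```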

The advanced use of these Pro Tools Essentials involves understanding patterns and best practices specific to machine learning workloads. This includes strategies for building lean container images that minimize security vulnerabilities and deployment times, implementing health checks and graceful shutdowns for model serving containers, and configuring resource requests and limits to ensure stable performance under varying loads. Data scientists who master these containerization fundamentals can significantly accelerate the path from model development to production deployment while reducing the operational burden on engineering teams.
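The health-check and graceful-shutdown patterns can be sketched with only the Python standard library. This toy model server rests on stated assumptions: `predict` is a hypothetical placeholder model, and the `/healthz` and `/predict` paths and port 8080 are illustrative choices. On SIGTERM, which Kubernetes sends before stopping a pod, the server stops its accept loop and waits for in-flight requests to finish before exiting.

```python
import json
import signal
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


def predict(features):
    # Hypothetical placeholder model: returns the sum of the inputs.
    return sum(features)


class ModelHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Probe target for Kubernetes liveness/readiness checks.
        if self.path == "/healthz":
            self._send(200, {"status": "ok"})
        else:
            self._send(404, {"error": "not found"})

    def do_POST(self):
        if self.path != "/predict":
            self._send(404, {"error": "not found"})
            return
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length))
        self._send(200, {"prediction": predict(payload["features"])})

    def _send(self, status, body):
        data = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def log_message(self, format, *args):
        pass  # silence per-request logging in this sketch


class GracefulServer(ThreadingHTTPServer):
    # Non-daemon request threads let server_close() wait for in-flight requests.
    daemon_threads = False


def serve(port=8080):
    server = GracefulServer(("0.0.0.0", port), ModelHandler)

    def on_sigterm(signum, frame):
        # shutdown() blocks until serve_forever() exits, and this handler runs on the
        # same (main) thread, so trigger it from a helper thread to avoid a deadlock.
        threading.Thread(target=server.shutdown).start()

    signal.signal(signal.SIGTERM, on_sigterm)
    server.serve_forever()  # returns once shutdown() completes
    server.server_close()   # closes the socket and joins request threads
```

In a container image, the entrypoint would simply call `serve()`; production deployments usually rely on a dedicated serving framework rather than `http.server`.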

4. Machine Learning Operations Platforms

MLOps platforms represent some of the most transformative Pro Tools Essentials for data scientists in 2025, addressing the critical challenge of moving models from experimentation to production reliably and efficiently. Platforms like MLflow, Kubeflow, and Domino Data Lab provide integrated environments for experiment tracking, model management, and deployment automation. Mastering these platforms is essential for data scientists who want to ensure their work delivers consistent, measurable business impact.

The core value of MLOps platforms lies in their ability to bring engineering discipline to the machine learning lifecycle. MLflow’s experiment tracking capabilities allow data scientists to systematically log parameters, metrics, and artifacts from every model training run, creating a searchable repository of experimentation history. Its model registry provides version control and stage management for models moving from development to staging to production. Kubeflow extends these concepts to Kubernetes environments, enabling scalable model training and serving, while platforms like Domino Data Lab offer enterprise-grade collaboration and reproducibility features.
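MLflow's tracking API handles this bookkeeping for you, but the underlying idea is simple enough to sketch in plain Python. The `RunLog` class below is a hypothetical toy, not MLflow's interface: it appends one JSON record of parameters, metrics, and artifact paths per run and ranks runs by a chosen metric.

```python
import json
import time
import uuid
from pathlib import Path


class RunLog:
    """Hypothetical toy experiment tracker (not MLflow's API): one JSON line per run."""

    def __init__(self, path):
        self.path = Path(path)

    def log_run(self, params, metrics, artifacts=()):
        """Append one run's parameters, metrics, and artifact paths; return its id."""
        record = {
            "run_id": uuid.uuid4().hex,
            "timestamp": time.time(),
            "params": dict(params),
            "metrics": dict(metrics),
            "artifacts": list(artifacts),
        }
        with self.path.open("a", encoding="utf-8") as f:
            f.write(json.dumps(record) + "\n")
        return record["run_id"]

    def search(self, metric, minimize=True):
        """Return every run that logged `metric`, best first."""
        if not self.path.exists():
            return []
        with self.path.open(encoding="utf-8") as f:
            runs = [json.loads(line) for line in f if line.strip()]
        runs = [r for r in runs if metric in r["metrics"]]
        return sorted(runs, key=lambda r: r["metrics"][metric], reverse=not minimize)
```

For example, `RunLog("runs.jsonl").search("rmse")[0]` returns the logged run with the lowest RMSE.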

Advanced mastery of these Pro Tools Essentials involves understanding how to integrate them into complete machine learning pipelines. Data scientists need to know how to configure MLflow to track experiments across distributed training jobs, implement automated model validation checks before promotion to production, and set up monitoring for data quality and model performance degradation. They should understand how to use these platforms to enable collaboration across teams, sharing experiments, models, and insights in a structured way that accelerates organizational learning and improvement.
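An automated validation check before promotion can be as simple as a function that compares a candidate's metrics with fixed floors and with the current production model. The sketch below is hypothetical: the metric names (`auc`, `p95_latency_ms`) and threshold values are assumptions, and in practice such a gate would run in a CI job before a model-registry stage transition.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Thresholds:
    # Hypothetical policy values; real ones depend on the business problem.
    min_auc: float = 0.75             # absolute quality floor
    max_auc_drop: float = 0.01        # tolerated regression vs. the production model
    max_p95_latency_ms: float = 50.0  # serving-time budget


def can_promote(candidate: dict, production: Optional[dict], t: Thresholds = Thresholds()):
    """Return (approved, reasons) for promoting `candidate` to production."""
    reasons = []
    if candidate["auc"] < t.min_auc:
        reasons.append(f"AUC {candidate['auc']:.3f} is below the floor of {t.min_auc}")
    if production is not None and candidate["auc"] < production["auc"] - t.max_auc_drop:
        reasons.append("AUC regressed relative to the production model")
    if candidate["p95_latency_ms"] > t.max_p95_latency_ms:
        reasons.append(
            f"p95 latency {candidate['p95_latency_ms']} ms exceeds {t.max_p95_latency_ms} ms"
        )
    return (not reasons, reasons)
```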

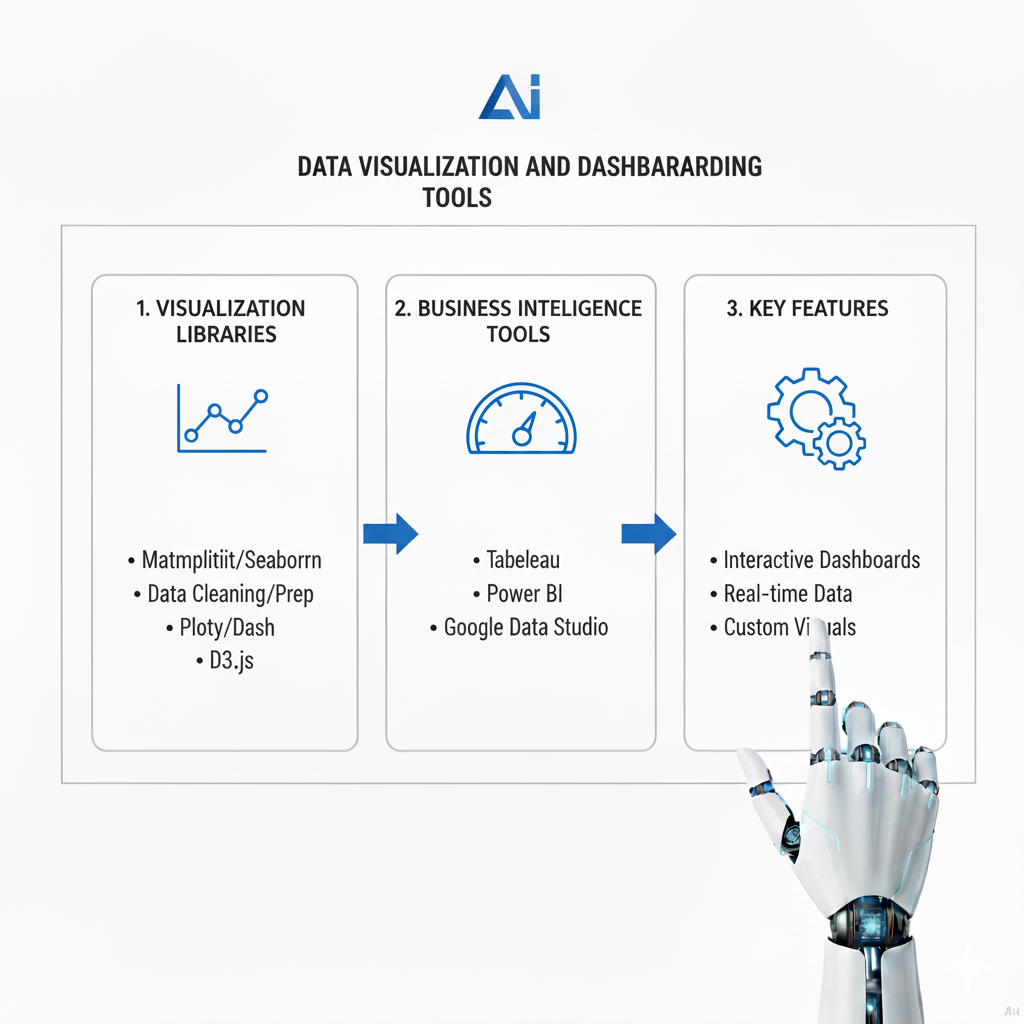

5. Data Visualization and Dashboarding Tools

Effective communication of insights remains a critical data science competency, and modern data visualization tools are the Pro Tools Essentials for this purpose. While traditional tools like Matplotlib and Seaborn continue to be valuable for exploratory analysis, the landscape of production visualization and dashboarding has evolved significantly. Tools like Streamlit, Plotly Dash, and Tableau have become standard for creating interactive applications and dashboards that enable stakeholders to explore data and understand model behavior.

The modern approach to data visualization emphasizes interactivity, reproducibility, and deployment efficiency. Streamlit has emerged as a particularly powerful tool for data scientists, enabling them to create sophisticated web applications using only Python scripts. Its simplicity and flexibility make it ideal for building prototype applications, model debugging interfaces, and internal tools quickly. Plotly Dash offers similar capabilities with more fine-grained control over application layout and behavior, while Tableau continues to dominate enterprise environments for business intelligence and reporting.

Mastering these Pro Tools Essentials involves understanding not just how to create visualizations but how to design effective data stories and user experiences. Data scientists need to know how to choose the right chart types for different data relationships, implement interactive elements that enable deeper exploration, and design dashboard layouts that guide users through analytical narratives. They should understand performance optimization techniques for large datasets and accessibility considerations for diverse audiences. Perhaps most importantly, they need to develop the design thinking skills that transform raw analytical outputs into compelling, actionable insights.

6. Version Control Systems: The Collaboration Backbone

Version control systems, particularly Git, remain foundational Pro Tools Essentials for data scientists in 2025, though their application has expanded beyond code management to include data, models, and experiments. Git proficiency is no longer optional—it’s a fundamental requirement for collaborative data science work, enabling teams to track changes, manage parallel development efforts, and maintain reproducibility across projects. The modern data scientist’s Git skills must extend beyond basic commit and push operations to encompass sophisticated branching strategies, conflict resolution, and integration with other tools in the data science ecosystem.

The advanced application of Git in data science involves several specialized practices. Data scientists need to understand how to structure repositories to separate code, data, configuration, and documentation appropriately. They should master branching strategies like GitFlow that enable parallel experimentation while maintaining stable main branches for production models. Understanding how to write effective commit messages that document not just what changed but why it changed is crucial for maintaining project history and facilitating collaboration. Integration with platforms like GitHub, GitLab, or Bitbucket enables code review processes, continuous integration pipelines, and project management workflows that improve code quality and team coordination.

Beyond traditional code versioning, data scientists must understand how Git integrates with other Pro Tools Essentials for managing the complete machine learning lifecycle. This includes using Git with DVC (Data Version Control) for tracking large datasets and model artifacts, implementing Git hooks for automated code quality checks, and understanding how Git workflows integrate with MLOps platforms for automated model training and deployment. Data scientists who master these advanced Git practices can significantly improve their productivity, collaboration effectiveness, and the overall quality and reproducibility of their work.
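A Git hook is any executable placed in `.git/hooks`, and a `pre-commit` hook that exits non-zero aborts the commit. The Python sketch below, with an assumed 5 MiB limit, flags staged files that are too large and suggests tracking them with `dvc add` instead. To install it, save it as `.git/hooks/pre-commit`, append a final line `sys.exit(main())`, and make the file executable. It checks working-tree file sizes, a simplification of what is actually staged.

```python
#!/usr/bin/env python3
"""Hypothetical pre-commit hook: block large files that belong in DVC, not Git."""
import os
import subprocess
import sys

MAX_BYTES = 5 * 1024 * 1024  # assumed 5 MiB limit; tune per repository


def staged_files():
    # Paths that are added, copied, or modified in the index.
    out = subprocess.run(
        ["git", "diff", "--cached", "--name-only", "--diff-filter=ACM"],
        capture_output=True, text=True, check=True,
    ).stdout
    return [line for line in out.splitlines() if line]


def oversized(paths, max_bytes=MAX_BYTES):
    # Working-tree size is used as an approximation of the staged blob size.
    return [p for p in paths if os.path.isfile(p) and os.path.getsize(p) > max_bytes]


def main():
    too_big = oversized(staged_files())
    for path in too_big:
        print(f"{path} exceeds {MAX_BYTES} bytes; consider `dvc add {path}` instead.",
              file=sys.stderr)
    return 1 if too_big else 0
```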

7. Automated Machine Learning Platforms

Automated Machine Learning (AutoML) platforms have evolved from experimental curiosities into Pro Tools Essentials that every data scientist should understand and leverage appropriately. While fears of AutoML replacing data scientists have proven unfounded, these platforms have become invaluable for accelerating certain parts of the machine learning workflow. Platforms like H2O.ai, DataRobot, and Google Cloud AutoML (now part of Vertex AI) provide automated feature engineering, model selection, and hyperparameter tuning that can significantly reduce time-to-insight for common machine learning problems.

The sophisticated use of AutoML platforms involves understanding both their capabilities and their limitations. These tools excel at generating strong baseline models quickly, exploring large model and hyperparameter spaces efficiently, and handling routine aspects of model development that don’t require deep domain expertise. However, they typically struggle with problems requiring specialized feature engineering, complex model architectures, or deep integration of domain knowledge. Masterful data scientists know when to leverage AutoML for efficiency and when to apply custom approaches for maximum performance or interpretability.
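AutoML platforms search model and hyperparameter spaces with more sophisticated strategies (Bayesian optimization, early stopping, ensembling), but the basic loop behind exploring a large space for a strong baseline can be illustrated with plain random search. In this hypothetical sketch, `toy_objective` stands in for training a model and returning a validation score.

```python
import random


def random_search(objective, space, n_trials=50, seed=0):
    """Sample configurations uniformly from `space`; return the best (score, params)."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_trials):
        params = {name: rng.choice(values) for name, values in space.items()}
        score = objective(params)
        if best is None or score > best[0]:
            best = (score, params)
    return best


def toy_objective(params):
    # Hypothetical stand-in for "train a model, return validation score" (higher is better).
    return -(params["learning_rate"] - 0.1) ** 2 - (params["max_depth"] - 6) ** 2 / 100


space = {
    "learning_rate": [0.01, 0.03, 0.1, 0.3],
    "max_depth": [3, 4, 6, 8, 10],
}
```

With only 20 combinations in this toy space, `random_search(toy_objective, space, n_trials=500)` should find the optimum of a learning rate of 0.1 and depth 6; real AutoML systems matter when the space is far too large to cover this way.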

Integrating AutoML into a comprehensive data science workflow represents an advanced skill that separates competent practitioners from true experts. This involves using AutoML not as a replacement for traditional data science but as a component within a broader analytical strategy. Data scientists might use AutoML platforms for initial exploration and baseline establishment before applying more sophisticated techniques for final model development. They might leverage AutoML’s feature importance analyses to inform manual feature engineering efforts, or use AutoML-generated models as benchmarks for evaluating custom approaches. Understanding these integration patterns allows data scientists to harness the efficiency of AutoML while maintaining the flexibility and sophistication of traditional data science methods.

8. Specialized Hardware Acceleration Tools

The increasing computational demands of modern machine learning, particularly for deep learning and large-scale data processing, have made hardware acceleration tools part of the Pro Tools Essentials for data scientists. While hardware configuration was traditionally the domain of infrastructure teams, the performance implications are so significant that data scientists now need to understand how to leverage specialized hardware effectively. This includes GPUs, TPUs, and other accelerators, along with the software frameworks that enable their efficient use.

The practical knowledge required extends beyond simply knowing how to access accelerated hardware to understanding how to optimize code to leverage it fully. For GPU acceleration, data scientists need to understand CUDA programming concepts, how to use GPU-accelerated libraries like RAPIDS, and how to structure data and computations to maximize parallel processing. For TPUs, they need to understand the specific model architectures and data pipeline patterns that achieve optimal performance. Even without deep hardware expertise, data scientists should understand how to profile their code to identify bottlenecks and select the appropriate hardware configuration for different types of workloads.
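Profiling before reaching for accelerators shows where time actually goes. Here is a minimal sketch using the standard library's `cProfile` and `pstats`; `load_rows`, `featurize`, and `pipeline` are hypothetical stand-ins for a real workload.

```python
import cProfile
import io
import pstats


def load_rows(n):
    # Hypothetical data-loading step.
    return [list(range(20)) for _ in range(n)]


def featurize(rows):
    # Hypothetical pure-Python feature step, a typical CPU bottleneck.
    return [sum(x * x for x in row) for row in rows]


def pipeline(n=20_000):
    return featurize(load_rows(n))


def profile_top(func, limit=5):
    """Run func under cProfile and return the report sorted by cumulative time."""
    profiler = cProfile.Profile()
    profiler.enable()
    func()
    profiler.disable()
    buffer = io.StringIO()
    pstats.Stats(profiler, stream=buffer).sort_stats("cumulative").print_stats(limit)
    return buffer.getvalue()


if __name__ == "__main__":
    print(profile_top(pipeline))
```

If the report is dominated by pure-Python loops like `featurize`, vectorizing them or moving them to GPU-backed libraries may pay off; if it is dominated by I/O, a faster accelerator will mostly sit idle.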

Advanced application of these Pro Tools Essentials involves understanding trade-offs between different acceleration strategies and how to implement them in production environments. Data scientists should know when to use multiple GPUs for data parallelism versus model parallelism, how to optimize data loading to keep accelerators fully utilized, and how to manage memory constraints when working with large models or datasets. They should understand how acceleration considerations influence model architecture choices and training strategies, and how to balance computational efficiency against other considerations like model accuracy, interpretability, and deployment complexity.
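Keeping an accelerator fully utilized largely means preparing the next batch while the current one is being processed. The generator below sketches that overlap with a background thread and a bounded queue; it is an illustration, not a replacement for tuned loaders such as PyTorch's `DataLoader` with its `num_workers` and `prefetch_factor` options. Threads help here only when loading is I/O-bound or releases the GIL; CPU-heavy preprocessing usually calls for processes.

```python
import queue
import threading


def prefetch(batches, buffer_size=4):
    """Yield items from `batches` while a background thread prepares upcoming ones."""
    q = queue.Queue(maxsize=buffer_size)  # bounded, so memory use stays capped

    def producer():
        try:
            for batch in batches:
                q.put(("item", batch))
        except Exception as exc:  # hand loader errors to the consumer
            q.put(("error", exc))
        else:
            q.put(("done", None))

    # Daemon thread: if the consumer stops early, the blocked producer won't keep the process alive.
    threading.Thread(target=producer, daemon=True).start()
    while True:
        kind, value = q.get()
        if kind == "done":
            return
        if kind == "error":
            raise value
        yield value
```

A training loop would then read `for batch in prefetch(read_batches()): train_step(batch)`, where `read_batches` and `train_step` are hypothetical.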

9. Large Language Model Interfaces and Frameworks

The explosive growth of large language models has created a new category of Pro Tools Essentials that every data scientist must understand in 2025. While not every data scientist will train foundation models from scratch, virtually all will need to interact with them through APIs or adapt them for specific applications. Understanding how to work effectively with LLMs has become as fundamental as traditional statistical modeling skills for many data science roles.

The practical skills required include understanding how to interact with API-based models like GPT-4 and Claude, how to fine-tune open-source models using frameworks like Hugging Face Transformers, and how to implement retrieval-augmented generation patterns that combine LLMs with external data sources. Data scientists need to understand prompt engineering techniques that maximize model performance for specific tasks, evaluation methodologies for assessing LLM output quality, and cost management strategies for working with expensive API calls. They should also understand the ethical considerations and limitations of these models, including their tendencies toward hallucination, bias amplification, and reasoning errors.
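The retrieval-augmented generation pattern can be sketched in a few lines: rank stored documents against the question, then place the best matches into the prompt. This is a hedged illustration that assumes a bag-of-words cosine similarity in place of the embedding model and vector store a real system would use; the call to an LLM API is omitted.

```python
import math
import re
from collections import Counter


def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    # Cosine similarity between two term-count vectors.
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, documents, k=2):
    """Return up to k documents with nonzero similarity, most similar first."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(doc))), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:k] if score > 0]


def build_prompt(query, documents, k=2):
    # Ground the model in retrieved context and ask it to admit gaps.
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer using only the context below. If the answer is not there, say so.\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )
```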

Beyond basic interaction, advanced data scientists understand how to integrate LLMs into broader data science workflows and applications. This includes implementing caching and optimization strategies to manage latency and cost, designing evaluation frameworks that measure LLM performance on task-specific metrics, and creating guardrails and validation systems that ensure reliable operation in production environments. Data scientists who master these Pro Tools Essentials can leverage the remarkable capabilities of large language models while mitigating their weaknesses and limitations.
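One of the simplest cost and latency controls is an exact-match response cache keyed on the model name, prompt, and generation parameters. In this sketch, `generate` is a hypothetical callable standing in for a provider's client. Caching like this suits deterministic settings (for example, temperature 0) and repeated prompts; a production version would add expiry and a shared store.

```python
import hashlib
import json


class CachedLLM:
    """Wrap a text-generation callable with an in-memory exact-match cache."""

    def __init__(self, generate):
        self.generate = generate  # hypothetical: generate(model, prompt, **params) -> str
        self.cache = {}
        self.hits = 0
        self.misses = 0

    def _key(self, model, prompt, **params):
        # Parameters are part of the key, so a different temperature gets its own entry.
        payload = json.dumps({"model": model, "prompt": prompt, "params": params}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def __call__(self, model, prompt, **params):
        key = self._key(model, prompt, **params)
        if key in self.cache:
            self.hits += 1
            return self.cache[key]
        self.misses += 1
        result = self.generate(model, prompt, **params)
        self.cache[key] = result
        return result
```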

10. Data Governance and Security Platforms

As data science has become more integrated into core business operations, understanding data governance and security platforms has transitioned from a niche concern to one of the Pro Tools Essentials for all data scientists. Regulations like GDPR and CCPA, along with growing consumer expectations around data privacy, have made compliance non-negotiable. Platforms like Collibra, Alation, and Immuta provide the capabilities needed to manage data access, ensure privacy compliance, and maintain audit trails, all crucial considerations for responsible data science practice.

The practical knowledge required includes understanding how to work within data governance frameworks without sacrificing analytical productivity. Data scientists need to know how to discover and request access to appropriate data sources, how to handle personally identifiable information appropriately, and how to implement privacy-preserving techniques like differential privacy or federated learning when necessary. They should understand how to use data catalog tools to understand data lineage and quality, and how to implement security best practices in their development workflows to prevent accidental data exposure.
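Differential privacy is easiest to see with the Laplace mechanism: add noise whose scale is the query's sensitivity divided by the privacy budget ε. The sketch below releases an ε-differentially private count. It is illustrative only; floating-point Laplace sampling has known pitfalls, so production work should rely on a vetted differential privacy library.

```python
import random


def laplace_noise(scale, rng):
    # The difference of two i.i.d. exponentials with mean `scale` is Laplace(0, scale).
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(values, predicate, epsilon, rng=None):
    """Epsilon-differentially private count via the Laplace mechanism.

    Adding or removing one record changes a count by at most 1, so the
    sensitivity is 1 and the noise scale is 1 / epsilon.
    """
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1 / epsilon, rng)
```

Smaller ε means stronger privacy and noisier answers; repeated queries consume additional budget.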

Advanced mastery of these Pro Tools Essentials involves understanding how to design data science workflows that are secure and compliant by design, rather than treating governance as an afterthought. This includes implementing automated data masking for development environments, building model monitoring that detects potential privacy violations or biased outcomes, and creating documentation and audit trails that demonstrate compliance with relevant regulations. Data scientists who develop these skills become invaluable partners to legal and compliance teams, enabling their organizations to derive maximum value from data while minimizing regulatory risk.
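Automated masking for development copies often uses keyed pseudonymization: each PII value is replaced with an HMAC token, so the same input always yields the same token and joins across tables still work, while the original value cannot be recovered without the key. The field names below are hypothetical, and the key would come from a secrets manager rather than source code. Pseudonymized data can still count as personal data under GDPR, so this reduces exposure rather than removing compliance obligations.

```python
import hashlib
import hmac
import re

# Simple email pattern for scrubbing free-text fields (not a full RFC 5322 validator).
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def pseudonymize(value, key):
    """Deterministic keyed token: equal inputs map to equal tokens."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"tok_{digest[:16]}"


def mask_record(record, key, pii_fields=("email", "name", "phone")):
    """Copy a record for a dev environment, tokenizing PII fields and emails in free text."""
    masked = {}
    for field, value in record.items():
        if field in pii_fields and value is not None:
            masked[field] = pseudonymize(str(value), key)
        elif isinstance(value, str):
            masked[field] = EMAIL_RE.sub(lambda m: pseudonymize(m.group(0), key), value)
        else:
            masked[field] = value
    return masked
```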

Conclusion: Building a Comprehensive Professional Toolkit

The Pro Tools Essentials that data scientists need to master in 2025 reflect the continued maturation and professionalization of the field. No longer can data scientists focus exclusively on statistical modeling and algorithm development—they must understand the complete lifecycle from data to deployment, including the infrastructure, collaboration, and governance considerations that determine whether their work will deliver real business impact. The ten categories of tools explored in this guide provide a comprehensive foundation for modern data science practice.

The most successful data scientists in 2025 will be those who view these Pro Tools Essentials not as isolated technologies but as interconnected components of a complete analytical ecosystem. They will understand how to select the right tool for each task, how to integrate different tools into cohesive workflows, and how to adapt their toolkit as technologies continue to evolve. Perhaps most importantly, they will maintain a learning mindset, continuously updating their skills and exploring new tools that emerge to address the ever-changing challenges of data science.

Mastering these Pro Tools Essentials requires a significant investment of time and effort, but the returns in productivity, impact, and career advancement make that investment well worth it. Data scientists who develop comprehensive proficiency across these tool categories position themselves not just as technical specialists but as strategic partners who can drive meaningful business outcomes through data. In the competitive landscape of 2025, this comprehensive tool mastery may well be the defining characteristic that separates adequate data scientists from exceptional ones.