Master Data ethics with our complete guide. Learn to implement ethical AI frameworks, ensure algorithmic fairness, build trust in analytics, and create responsible data practices for sustainable business success.

Introduction: The Critical Imperative of Data Ethics in the Digital Age

In an era defined by data-driven decision-making and artificial intelligence, Data ethics has emerged as one of the most critical considerations for organizations worldwide. Data ethics represents the moral framework that governs the collection, storage, analysis, and application of data, ensuring that technological advancements serve humanity rather than exploit it. The significance of Data ethics extends far beyond legal compliance—it represents the foundation of trust between organizations and their stakeholders, the safeguard against algorithmic discrimination, and the moral compass guiding innovation in an increasingly datafied world.

The rapid evolution of data collection capabilities and AI sophistication has created an urgent need for robust Data ethics frameworks. Consider that by 2025, the global datasphere is projected to grow to 175 zettabytes, while AI adoption in enterprises has increased by 270% over the past four years. This exponential growth brings profound ethical questions: How do we prevent algorithms from perpetuating societal biases? What responsibilities do organizations have regarding data they collect? How do we balance innovation with individual privacy? These questions lie at the heart of Data ethics, making it not just a theoretical concern but a practical necessity for sustainable business operations.

The consequences of neglecting Data ethics are both severe and well-documented. Organizations that have failed to prioritize ethical considerations have faced massive financial penalties (Meta’s $1.3 billion GDPR fine), reputational damage (Equifax’s data breach), public backlash (Google’s Project Maven), and legislative scrutiny (TikTok’s congressional hearings). Conversely, companies that champion Data ethics demonstrate higher customer loyalty, stronger employee retention, and greater innovation capacity. A 2023 study by the Ethics & Compliance Initiative found that organizations with strong ethical data practices experienced 40% higher customer trust and 25% greater employee engagement.

This comprehensive guide explores the multifaceted landscape of Data ethics, providing a practical framework for building trustworthy AI systems and responsible analytics practices. We’ll examine the core principles of ethical data handling, implementation strategies for organizations of all sizes, real-world case studies, and future trends that will shape the evolution of Data ethics. Whether you’re a data scientist developing machine learning models, a business leader making data-driven decisions, or a policymaker crafting regulations, understanding and implementing Data ethics is no longer optional—it’s essential for building sustainable success in the digital economy.

The Foundation of Data Ethics: Core Principles and Frameworks

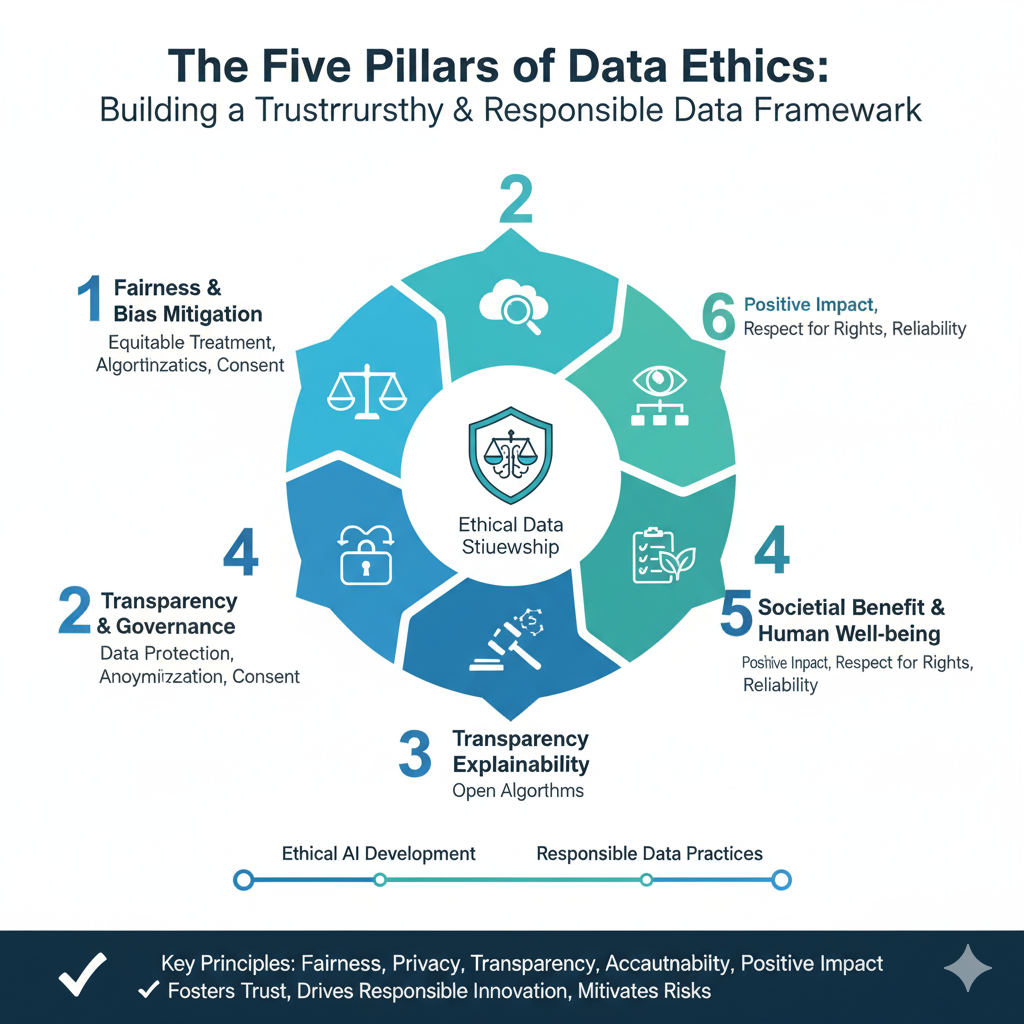

The Five Pillars of Data Ethics

Data ethics rests on five fundamental principles that collectively form a comprehensive moral framework for responsible data practices. These principles work in concert to guide ethical decision-making from data collection through analysis and application, ensuring that technological progress aligns with human values and social good.

Privacy and Consent forms the bedrock of Data ethics, emphasizing individuals’ fundamental right to control their personal information. This principle extends far beyond mere legal compliance with regulations like GDPR or CCPA to encompass respectful data handling practices that honor the spirit of privacy protection. In practical terms, this means implementing privacy-by-design architectures where data protection measures are embedded into systems from their initial conception rather than bolted on as an afterthought.

It requires organizations to practice data minimization, collecting only the information strictly necessary for specified purposes, and to establish clear retention policies that determine how long data is kept before secure destruction. Most importantly, privacy and consent demand transparent communication with data subjects about how their information will be used, coupled with mechanisms for obtaining meaningful, informed consent that can be as easily withdrawn as it was given.

Transparency and Explainability represents the commitment to openness about data practices and the ability to make algorithmic decisions understandable to stakeholders. In the context of Data ethics, transparency means clearly communicating what data is being collected, for what purposes, and how it will be processed and shared. For AI systems, this extends to explaining how algorithms arrive at their decisions in language that is accessible to the people affected by those decisions.

This is particularly crucial in high-stakes domains like healthcare, criminal justice, and financial services, where algorithmic decisions can significantly impact human lives. Explainability involves deploying techniques like LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) to illuminate the “black box” of complex machine learning models, enabling stakeholders to understand which factors influenced specific outcomes. This principle acknowledges that trust cannot exist in darkness—organizations build confidence by being open about their data practices and decision-making processes.
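To make the idea behind Shapley-based explanations concrete, here is a minimal sketch that computes exact Shapley values for a toy scoring function by averaging each feature's marginal contribution over every feature ordering. It does not use the SHAP library; the model `toy_credit_score`, the feature names, and the all-zero reference point are hypothetical choices made only for illustration.

```python
from itertools import permutations
from math import factorial


def shapley_values(model, instance, baseline):
    """Exact Shapley values by enumerating every feature ordering.

    Features not yet revealed in an ordering keep their baseline value.
    The cost grows factorially, so this only works for a handful of features.
    """
    features = list(instance)
    contributions = {name: 0.0 for name in features}
    for order in permutations(features):
        current = dict(baseline)
        previous_output = model(current)
        for name in order:
            current[name] = instance[name]
            output = model(current)
            # Marginal contribution of revealing this feature at this position
            contributions[name] += output - previous_output
            previous_output = output
    n_orderings = factorial(len(features))
    return {name: total / n_orderings for name, total in contributions.items()}


# Hypothetical toy model with an interaction term (assumption for illustration)
def toy_credit_score(x):
    return 2.0 * x["income"] + 1.0 * x["tenure"] + 0.5 * x["income"] * x["tenure"]


applicant = {"income": 3.0, "tenure": 2.0}
reference = {"income": 0.0, "tenure": 0.0}
phi = shapley_values(toy_credit_score, applicant, reference)
print(phi)
```

The attributions sum to the difference between the applicant's score and the reference score, which is what lets a reviewer read them as a breakdown of one specific decision. Production tools rely on approximations because exact enumeration becomes infeasible beyond a few features.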

Fairness and Non-Discrimination ensures that data systems do not perpetuate or amplify existing societal biases, protecting against algorithmic discrimination that could disadvantage vulnerable populations. This principle of Data ethics requires proactive measures to identify and mitigate biases throughout the entire data lifecycle, from collection and preparation through model development and deployment. Technical approaches include implementing fairness metrics to measure disparate impact across different demographic groups, using techniques like adversarial debiasing during model training, and conducting regular audits to detect bias drift over time.

Beyond technical solutions, fairness demands diverse teams that can identify potential biases that might be overlooked by homogeneous groups, and stakeholder engagement processes that include voices from communities likely to be affected by algorithmic systems. This principle recognizes that data and algorithms are not neutral—they reflect the values and assumptions of their creators, making conscious effort necessary to ensure they promote equity rather than undermine it.
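One simple way to measure disparate impact across groups is the ratio of the lowest group selection rate to the highest. The sketch below assumes binary decisions (1 means a favorable outcome) and a single group label per record; the function names and sample data are illustrative, and the 0.8 cutoff mentioned afterwards is a common rule of thumb rather than a universal standard.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Share of favorable decisions (1) within each group."""
    favorable = defaultdict(int)
    total = defaultdict(int)
    for decision, group in zip(decisions, groups):
        total[group] += 1
        favorable[group] += decision
    return {g: favorable[g] / total[g] for g in total}


def disparate_impact_ratio(decisions, groups):
    """Lowest group selection rate divided by the highest."""
    rates = selection_rates(decisions, groups)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so there is no disparity to measure
    return min(rates.values()) / highest


# Illustrative data: group "a" is selected far more often than group "b"
decisions = [1, 1, 1, 0, 1, 0, 0, 0, 1, 0]
groups = ["a"] * 5 + ["b"] * 5
ratio = disparate_impact_ratio(decisions, groups)
print(selection_rates(decisions, groups), ratio)
```

A ratio well below 0.8 (the "four-fifths" rule of thumb) is often treated as a signal that a system deserves closer review, not as proof of discrimination on its own.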

Accountability and Governance establishes clear responsibility for ethical outcomes and implements robust oversight structures to ensure Data ethics principles are consistently applied. This principle moves beyond abstract commitments to create concrete mechanisms for ethical accountability, including designated roles like Data Ethics Officers, ethics review boards for high-risk projects, and clear escalation paths for ethical concerns. Effective governance frameworks define processes for ethical impact assessments before system deployment, ongoing monitoring of live systems, and protocols for addressing ethical issues when they arise.

Accountability also encompasses documentation requirements that create audit trails demonstrating how ethical considerations influenced system design and decision-making, and remediation processes for when systems cause harm despite preventive measures. This principle acknowledges that ethical data practices require more than good intentions—they need structured governance that embeds responsibility throughout the organization.

Beneficence and Social Responsibility emphasizes that data practices should actively promote social good and consider broader societal impacts beyond immediate organizational benefits. This forward-looking principle of Data ethics encourages organizations to consider how their data initiatives might affect various stakeholders, including vulnerable populations who may not directly interact with their systems but could be impacted by secondary effects.

It involves conducting systemic risk assessments to identify potential negative consequences at scale, engaging in ethical horizon scanning to anticipate how technologies might be misused, and proactively designing systems to maximize positive social impact while minimizing potential harms. This principle also encompasses environmental considerations, such as the carbon footprint of large AI models, and economic considerations, such as the impact of automation on employment patterns. By adopting a beneficence mindset, organizations align their data practices with the broader goal of human flourishing rather than narrow self-interest.

Implementing Ethical Frameworks in Organizations

Translating Data ethics principles into practice requires structured approaches that embed ethical considerations into organizational culture, processes, and decision-making. Successful implementation begins with developing an organizational ethics charter that clearly articulates the company’s commitment to ethical data practices and provides specific guidance for common ethical dilemmas.

This charter should be co-created with diverse stakeholders, including representatives from affected communities, to ensure it reflects multiple perspectives rather than just internal organizational priorities. The charter must be a living document that evolves with technological changes and societal expectations, regularly reviewed and updated through inclusive processes that incorporate lessons from both successes and failures.

Establishing ethics governance structures creates the organizational capacity to consistently apply Data ethics principles across all data initiatives. This typically involves forming a cross-functional ethics committee with representation from technical teams, business units, legal compliance, and external advisors to provide oversight and guidance on ethical matters. Many organizations appoint dedicated Data Ethics Officers who have the authority and resources to implement ethics programs, conduct training, and review high-risk projects. Effective governance also includes creating clear reporting lines for ethical concerns, protection for whistleblowers, and integration with existing risk management frameworks to ensure Data ethics receives appropriate executive attention and resource allocation.

Developing ethics assessment tools enables systematic evaluation of data projects throughout their lifecycle. These tools include algorithmic impact assessments that evaluate potential biases and disparate impacts before system deployment, data collection reviews that ensure proper consent mechanisms and privacy protections, and ongoing monitoring frameworks that track ethical performance indicators once systems are operational. These assessments should be proportional to the potential risks involved, with more rigorous scrutiny applied to high-impact systems in sensitive domains like healthcare, criminal justice, and financial services. The assessment process must be transparent, with findings documented and addressed rather than treated as checkboxes to be completed, creating organizational learning about ethical risks and mitigation strategies.

Data Ethics in Practice: Implementation Strategies

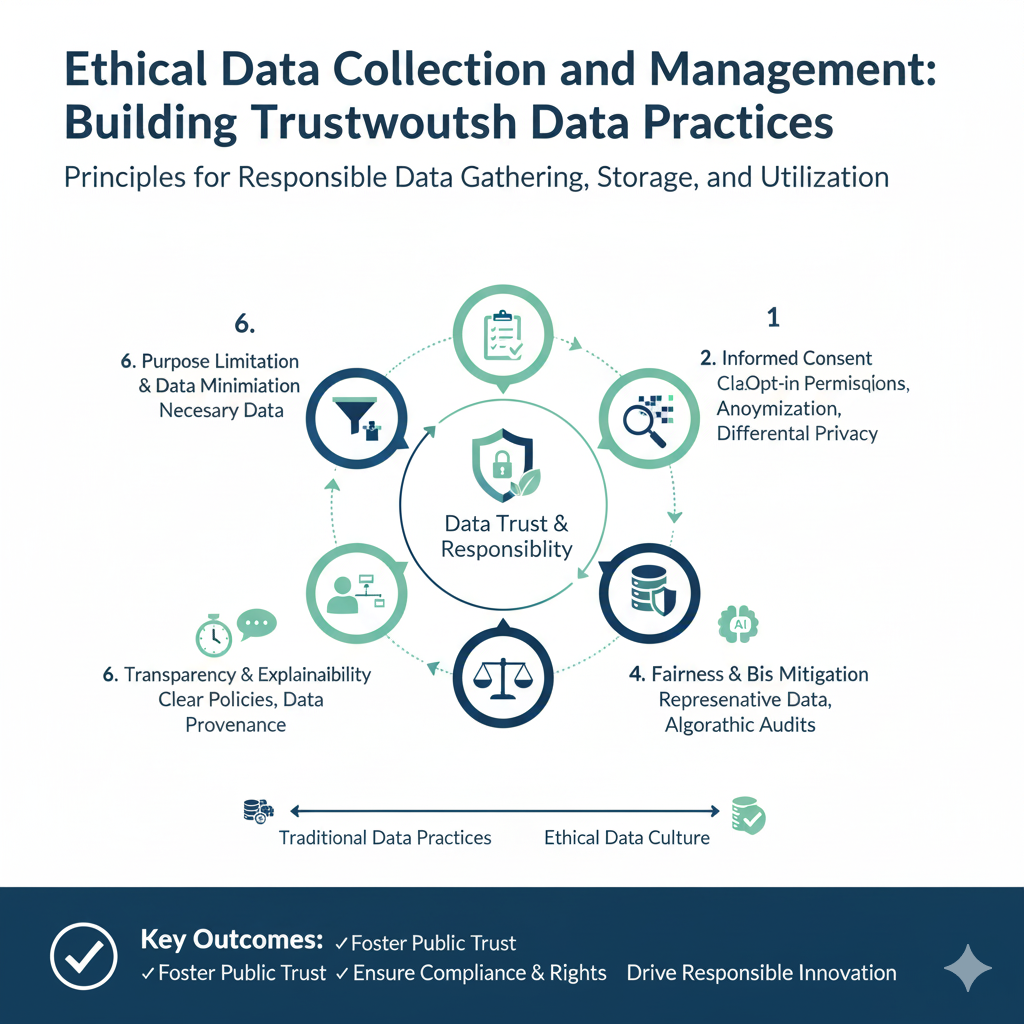

Ethical Data Collection and Management

Responsible data sourcing represents the first practical application of Data ethics in the data lifecycle. Organizations must establish clear standards for how data is acquired, ensuring proper consent mechanisms are in place and that data collection methods respect individual autonomy and privacy. This includes conducting due diligence on third-party data suppliers to verify their ethical practices, implementing provenance tracking to document data origins and transformations, and ensuring that data usage remains consistent with the context in which it was originally collected. Ethical sourcing also involves critical assessment of whether certain types of data should be collected at all, even when legally permissible, if their collection might enable harmful applications or violate social norms.
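Provenance tracking can take many forms. As one possible sketch, the code below keeps a tamper-evident log in which each step's hash covers its own content plus the previous step's hash, so later edits to the recorded history become detectable. The step names and detail fields are hypothetical, and a real system would also need secure storage and access control.

```python
import hashlib
import json


def _digest(body):
    """SHA-256 over a canonical JSON encoding of the step body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def add_provenance_step(chain, action, details):
    """Return a new chain with one more step linked to the previous step's hash."""
    previous_hash = chain[-1]["hash"] if chain else ""
    body = {"action": action, "details": details, "previous_hash": previous_hash}
    return chain + [{**body, "hash": _digest(body)}]


def verify_chain(chain):
    """Check that every step's hash matches its content and links to its predecessor."""
    previous_hash = ""
    for step in chain:
        body = {key: step[key] for key in ("action", "details", "previous_hash")}
        if step["previous_hash"] != previous_hash or step["hash"] != _digest(body):
            return False
        previous_hash = step["hash"]
    return True


# Hypothetical lineage for one dataset
chain = add_provenance_step([], "collected", {"source": "signup_form", "consent": "v2"})
chain = add_provenance_step(chain, "transformed", {"step": "dropped_email_column"})
print(verify_chain(chain))
```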

Implementing privacy-by-design architectures embeds Data ethics into the technical foundation of data systems. This approach involves integrating privacy protections into system architecture from the initial design phase rather than adding them as an afterthought. Technical implementations include data minimization features that collect only necessary information, purpose limitation mechanisms that restrict data usage to specified objectives, and security-by-default configurations that protect data through encryption, access controls, and comprehensive logging. Privacy-by-design also encompasses data anonymization techniques that reduce identifiability while preserving utility, and retention management systems that automatically delete or archive data according to predefined schedules aligned with ethical and legal requirements.
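The following sketch shows how three of these controls might look in code: an allowlist for data minimization, a keyed hash for pseudonymizing identifiers, and a retention check. The field names, the 365-day period, and the key handling are assumptions for illustration. Note that keyed hashing is pseudonymization rather than full anonymization, since anyone holding the key can re-link records.

```python
import hashlib
import hmac
from datetime import datetime, timedelta, timezone

ALLOWED_FIELDS = {"user_id", "country", "signup_date"}  # hypothetical purpose-specific allowlist
RETENTION = timedelta(days=365)                         # hypothetical retention period


def minimize(record):
    """Keep only the fields needed for the stated purpose."""
    return {key: value for key, value in record.items() if key in ALLOWED_FIELDS}


def pseudonymize(value, secret_key):
    """Replace an identifier with a keyed hash (stable for joins, not reversible without the key)."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def is_expired(collected_at, now):
    """True once a record has outlived the retention period."""
    return now - collected_at > RETENTION


raw = {"user_id": "u-123", "email": "a@example.com", "country": "DE", "signup_date": "2024-06-01"}
stored = minimize(raw)
stored["user_id"] = pseudonymize(stored["user_id"], secret_key=b"demo-key-not-for-production")
print(stored)
```

In practice the key would live in a secrets manager, and the expiry check would run as a scheduled job that deletes or archives matching records.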

Creating meaningful consent frameworks moves beyond legalistic checkboxes to establish genuine understanding and choice for data subjects. Modern Data ethics requires layered consent approaches that provide clear, concise summaries of key information alongside opportunities to access more detailed explanations. Contextual consent mechanisms request permission when specific data uses become relevant rather than seeking blanket authorization upfront, while easy-to-use preference centers enable ongoing control over data practices.

Meaningful consent also includes straightforward withdrawal mechanisms that are as simple to execute as initial agreement, avoiding dark patterns that make opt-out difficult. These approaches recognize that consent is an ongoing process rather than a one-time event, requiring continuous communication and choice rather than static permissions.
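Treating consent as an ongoing process suggests storing it as a history of events per purpose rather than a single flag. Below is a minimal sketch under that assumption: an append-only ledger where the most recent event for a subject and purpose decides, withdrawal is a single call just like granting, and the absence of any record means no consent. The class and purpose names are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ConsentLedger:
    """Append-only log of consent events; the latest event per (subject, purpose) wins."""
    events: list = field(default_factory=list)

    def _record(self, subject_id, purpose, granted):
        self.events.append({
            "subject_id": subject_id,
            "purpose": purpose,
            "granted": granted,
            "at": datetime.now(timezone.utc),
        })

    def grant(self, subject_id, purpose):
        self._record(subject_id, purpose, True)

    def withdraw(self, subject_id, purpose):
        # Withdrawal is as simple as granting: one call, no extra conditions
        self._record(subject_id, purpose, False)

    def is_permitted(self, subject_id, purpose):
        for event in reversed(self.events):
            if event["subject_id"] == subject_id and event["purpose"] == purpose:
                return event["granted"]
        return False  # no record means no consent


ledger = ConsentLedger()
ledger.grant("u-123", "product_analytics")
print(ledger.is_permitted("u-123", "product_analytics"))  # consent given for this purpose only
ledger.withdraw("u-123", "product_analytics")
print(ledger.is_permitted("u-123", "product_analytics"))
```

Keeping the full event history, instead of overwriting a flag, also gives auditors a record of when consent was given and withdrawn.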

Building Fair and Unbiased AI Systems

Implementing comprehensive bias detection represents a critical technical application of Data ethics in machine learning systems. This process begins with identifying potential sources of bias, including historical biases in training data, representation biases in data collection methods, measurement biases in how outcomes are defined, and aggregation biases in how diverse groups are combined in analysis.

Technical teams then deploy a suite of fairness metrics to quantify different types of bias, such as demographic parity, which measures whether outcomes are independent of protected attributes; equal opportunity, which compares true positive rates (equivalently, false negative rates) across groups; and predictive equality, which compares false positive rates across groups. These assessments must be conducted throughout the model lifecycle (during development, before deployment, and continuously during operation), since biases can emerge or evolve over time as data distributions change.
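As a minimal sketch of how such metrics can be computed, the following derives per-group selection rates, true positive rates, and false positive rates from labels and predictions, then reports the largest gap between groups for each. The function names and toy data are illustrative assumptions and do not come from any particular fairness library.

```python
def group_rates(y_true, y_pred, groups):
    """Per-group selection rate, true positive rate, and false positive rate."""
    stats = {}
    for group in sorted(set(groups)):
        rows = [(t, p) for t, p, g in zip(y_true, y_pred, groups) if g == group]
        positives = [p for t, p in rows if t == 1]  # predictions for truly positive cases
        negatives = [p for t, p in rows if t == 0]  # predictions for truly negative cases
        stats[group] = {
            "selection_rate": sum(p for _, p in rows) / len(rows),
            "tpr": sum(positives) / len(positives) if positives else float("nan"),
            "fpr": sum(negatives) / len(negatives) if negatives else float("nan"),
        }
    return stats


def max_gap(stats, metric):
    """Largest difference in a metric between any two groups."""
    values = [group_stats[metric] for group_stats in stats.values()]
    return max(values) - min(values)


# Illustrative labels and predictions for two groups
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

stats = group_rates(y_true, y_pred, groups)
for metric in ("selection_rate", "tpr", "fpr"):
    print(metric, max_gap(stats, metric))
```

These criteria generally cannot all be satisfied at once when base rates differ between groups, so teams usually need to decide which gap matters most for the decision at hand.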

Developing bias mitigation strategies addresses identified fairness issues through technical and procedural interventions. Pre-processing techniques transform training data to reduce biases before model development, through approaches like reweighting samples from different groups, generating synthetic data to improve representation of underrepresented populations, or removing proxy variables that indirectly encode protected characteristics.
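Sample reweighting can be sketched as follows. Each record receives the weight P(group) × P(label) / P(group, label), which makes group membership and label statistically independent in the weighted data. This is one common reweighing scheme, shown on toy data; the function name and the data are assumptions for illustration.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Weight each sample by P(group) * P(label) / P(group, label)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(groups, labels, weights, group):
    """Weighted share of positive labels within one group."""
    members = [(y, w) for g, y, w in zip(groups, labels, weights) if g == group]
    return sum(w for y, w in members if y == 1) / sum(w for _, w in members)


# Toy data: group "a" has mostly positive labels, group "b" mostly negative
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]
weights = reweighing_weights(groups, labels)
print(weighted_positive_rate(groups, labels, weights, "a"))
print(weighted_positive_rate(groups, labels, weights, "b"))
```

The resulting weights can be passed to any training routine that accepts per-sample weights. Under-represented combinations, such as positive labels in group "b", count for more during training.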

In-processing techniques modify learning algorithms themselves to incorporate fairness constraints during training, using methods like adversarial debiasing that pits the main model against an adversary trying to predict protected attributes, or regularization approaches that penalize models for making predictions correlated with sensitive features. Post-processing techniques adjust model outputs after prediction, such as calibrating decision thresholds differently across groups to ensure equitable outcomes, or implementing rejection options that refer uncertain cases for human review.
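The two post-processing ideas can be combined in a few lines. In this sketch, each group has its own decision threshold, and any score that falls within a small margin of the threshold is referred to a human reviewer instead of being decided automatically. The threshold values, the margin, and the outcome labels are hypothetical.

```python
def decide(score, group, thresholds, review_margin=0.05):
    """Return 'approve', 'deny', or 'human_review' for one scored case."""
    threshold = thresholds[group]
    if abs(score - threshold) < review_margin:
        return "human_review"  # too close to the boundary to decide automatically
    return "approve" if score >= threshold else "deny"


# Hypothetical per-group thresholds, e.g. calibrated on validation data
thresholds = {"a": 0.60, "b": 0.50}

for score, group in [(0.70, "a"), (0.58, "a"), (0.58, "b"), (0.30, "b")]:
    print(score, group, decide(score, group, thresholds))
```

Whether group-specific thresholds are permissible depends on the legal context, so this kind of adjustment normally goes through the same ethics and legal review as the model itself.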

Ensuring algorithmic transparency and explainability makes AI systems understandable to various stakeholders, a fundamental requirement of Data ethics. This involves creating global explanations that describe overall model behavior, such as feature importance rankings that identify which factors most influence predictions, and decision rules that outline general patterns the model has learned.

Local explanations illuminate individual decisions, showing how specific factors contributed to a particular outcome for a specific case. Model documentation approaches like model cards and datasheets provide comprehensive information about system capabilities, limitations, and appropriate use cases. Crucially, explanations must be tailored to different audiences—technical teams need detailed implementation specifics, business users require understanding of functional capabilities and limitations, affected individuals deserve clear reasons for decisions that impact them, and regulators need evidence of compliance with legal requirements.

Organizational Implementation: Building an Ethics-First Culture

Ethics Governance Structures

Establishing effective oversight mechanisms creates the organizational infrastructure needed to implement Data ethics consistently across all operations. This begins with strategic governance bodies like ethics committees or advisory boards that include diverse perspectives, including external ethicists, community representatives, and subject matter experts alongside internal leadership.

These groups provide high-level guidance, review significant ethical dilemmas, approve organizational policies, and oversee the overall ethics program. At the operational level, dedicated Data Ethics Officers or similar roles coordinate day-to-day activities, conduct training, consult on projects, and monitor compliance with ethical standards. Distributed networks of ethics champions embedded within business units and technical teams help scale ethical awareness and provide localized guidance, creating a comprehensive governance ecosystem that connects strategic direction with practical implementation.

Implementing ethics training and capability building develops the knowledge and skills needed to apply Data ethics principles in daily work. Foundational training should reach all employees who handle data, covering basic principles, organizational policies, and regulatory requirements through engaging formats that connect abstract concepts to practical decisions.

Role-specific training provides deeper technical knowledge for different functions—data scientists need expertise in fairness metrics and bias detection techniques, product managers require skills in ethical design and impact assessment, executives need understanding of strategic ethics and crisis management. Continuous learning opportunities keep ethical knowledge current as technologies and standards evolve, through mechanisms like ethics newsletters discussing recent developments, case study sessions analyzing real-world examples, and participation in industry forums that share best practices and emerging challenges.

Creating accountability frameworks ensures that Data ethics responsibilities are clearly defined, measured, and reinforced. This includes integrating ethical considerations into performance management systems, with specific goals and metrics related to ethical data practices for relevant roles. Clear escalation paths enable employees to raise ethical concerns without fear of retaliation, with protections for whistleblowers and transparent processes for investigating and addressing reported issues. Regular ethics audits assess how well the organization is implementing its stated principles, identifying gaps and improvement opportunities through systematic review of processes, systems, and outcomes. Consequences for ethical violations must be consistently applied and commensurate with the seriousness of the breach, demonstrating that the organization takes its ethical commitments seriously rather than treating them as optional guidelines.

Ethics Assessment and Auditing

Conducting pre-implementation ethical impact assessments evaluates potential ethical issues before systems are deployed, a proactive approach that aligns with the precautionary principle in Data ethics. These structured assessments examine multiple dimensions of potential impact, including purpose evaluation that scrutinizes the problem being solved and who benefits from the solution, data assessment that reviews collection methods, consent mechanisms, and privacy protections, algorithmic review that analyzes potential biases, transparency levels, and explainability approaches, and deployment consideration that examines user controls, oversight mechanisms, and remediation processes.

Assessments should be proportional to the potential risks involved, with more thorough scrutiny applied to high-impact systems in sensitive domains. The assessment process must be documented, with findings addressed through design changes, additional safeguards, or in some cases, decisions to not proceed with projects whose ethical risks cannot be adequately mitigated.

Implementing continuous ethics monitoring ensures that ethical performance is tracked throughout system operation, recognizing that ethical risks can emerge or evolve over time. Technical monitoring tracks fairness metrics to detect bias drift as data distributions change, privacy compliance through audits of data handling practices, and performance indicators that might signal emerging ethical issues.
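Bias drift monitoring can be sketched as a periodic comparison against a baseline. The example below recomputes the gap in selection rates between groups for each time window and flags windows where the gap exceeds the baseline by more than a tolerance. The window labels, the baseline value, and the 0.10 tolerance are assumptions chosen for illustration.

```python
def parity_gap(decisions, groups):
    """Difference between the highest and lowest group selection rates."""
    rates = {}
    for group in set(groups):
        member_decisions = [d for d, g in zip(decisions, groups) if g == group]
        rates[group] = sum(member_decisions) / len(member_decisions)
    return max(rates.values()) - min(rates.values())


def drift_alerts(windows, baseline_gap, tolerance=0.10):
    """Labels of windows whose parity gap exceeds the baseline by more than the tolerance."""
    alerts = []
    for label, decisions, groups in windows:
        if parity_gap(decisions, groups) - baseline_gap > tolerance:
            alerts.append(label)
    return alerts


# Hypothetical monthly windows of (label, decisions, groups)
windows = [
    ("2024-01", [1, 0, 1, 0], ["a", "a", "b", "b"]),  # both groups selected at the same rate
    ("2024-02", [1, 1, 0, 0], ["a", "a", "b", "b"]),  # only group "a" selected
]
alerts = drift_alerts(windows, baseline_gap=0.05)
print(alerts)
```

An alert of this kind is a trigger for investigation, feeding the incident response process rather than replacing it.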

External monitoring tracks regulatory developments that might affect ethical requirements, industry standards that represent evolving best practices, and stakeholder perceptions through trust surveys and feedback mechanisms. Incident response protocols establish clear procedures for detecting, escalating, and addressing ethical issues when they occur, including communication plans, remediation steps, and processes for implementing preventive measures based on lessons learned. This ongoing monitoring acknowledges that ethical data management is not a one-time certification but a continuous commitment that requires vigilance throughout system lifecycles.

Developing ethical auditing frameworks creates systematic approaches for evaluating compliance with Data ethics principles and identifying improvement opportunities. Internal audits conducted by independent examiners assess whether data practices align with stated policies, ethical impact assessments are properly conducted, governance mechanisms are functioning effectively, and training programs are achieving their objectives.

External audits by third-party validators provide objective verification of ethical claims, enhancing credibility with stakeholders and potentially qualifying for ethical certifications that differentiate organizations in the marketplace. Audit findings should drive continuous improvement through action plans that address identified gaps, process refinements that incorporate lessons learned, and strategy updates that reflect new understanding of ethical risks and mitigation approaches. Comprehensive documentation of audit processes and results creates transparency about ethical performance and demonstrates serious commitment to accountability.

Real-World Applications and Case Studies

Successful Data Ethics Implementations

The financial services industry provides compelling case studies of Data ethics in action, particularly in fair lending practices. Traditional credit scoring models have often disadvantaged marginalized communities through proxies that indirectly encode racial or socioeconomic factors, such as zip codes correlating with historically redlined neighborhoods or educational institutions reflecting privilege rather than creditworthiness. Forward-thinking financial institutions are implementing ethical credit assessment systems that deploy sophisticated bias detection techniques to identify these problematic correlations, apply fairness constraints during model development to ensure equitable outcomes across demographic groups, and use alternative data sources like rental payment history or utility bills to expand credit access while carefully validating that these new variables actually predict creditworthiness without introducing new biases. These institutions complement technical solutions with transparent communication, providing specific explanations when credit is denied and offering guidance for improving credit profiles, thereby using Data ethics to both comply with fair lending laws and create business value through expanded, responsible lending.

Healthcare AI applications demonstrate how Data ethics becomes particularly crucial when algorithmic decisions directly impact human health and wellbeing. Ethical implementation in this domain requires rigorous clinical validation that demonstrates AI systems meet stringent performance standards before deployment, maintaining human oversight through clinician-in-the-loop designs where AI provides decision support rather than autonomous operation, and ensuring patient consent through clear disclosure about AI involvement in diagnostic or treatment processes.

The healthcare context also highlights the importance of specialized ethical considerations, such as handling particularly sensitive health information with heightened privacy protections, addressing potential biases that could affect care quality for different demographic groups, and establishing clear accountability frameworks that define responsibility when AI-assisted decisions have negative outcomes. These implementations show how Data ethics must be adapted to domain-specific risks and requirements rather than applied as a one-size-fits-all framework.

Retail and marketing applications of Data ethics illustrate how ethical practices can create competitive advantage while avoiding reputational damage. Companies facing public scrutiny over privacy violations and manipulative practices have transformed their approaches by implementing ethical personalization that provides genuine value to customers rather than exploiting psychological vulnerabilities, transparent data practices that clearly explain what information is collected and how it benefits users, and meaningful opt-out mechanisms that respect customer preferences without penalty.

These organizations have found that ethical data use actually enhances customer relationships and loyalty, as consumers increasingly prefer to engage with companies that demonstrate respect for their privacy and autonomy. The retail context also highlights the business case for Data ethics, as ethical missteps can trigger consumer backlash, regulatory action, and lasting brand damage, while ethical practices can differentiate companies in crowded markets and build trust that translates into sustainable customer relationships.

Lessons from Ethical Failures

Examining cases where Data ethics failures caused significant harm provides crucial lessons for organizations seeking to avoid similar pitfalls. The well-documented case of racial bias in healthcare algorithms that systematically privileged white patients over sicker black patients for special care programs demonstrates how technical solutions can perpetuate societal inequities when ethical considerations are not thoroughly integrated into development processes.

This failure resulted from using healthcare costs as a proxy for health needs without recognizing that unequal access to care meant black patients generated lower costs despite greater health needs—a bias that went undetected because the development team lacked diversity and because rigorous fairness testing was not conducted. The case underscores that Data ethics requires critical examination of the assumptions embedded in problem formulation and variable selection, not just technical implementation.

The controversy surrounding facial recognition technologies illustrates how Data ethics failures can occur when technologies are deployed without adequate consideration of context and potential misuse. Systems trained primarily on light-skinned male faces performed poorly on women and people with darker skin, leading to misidentification with serious consequences in law enforcement applications. Additionally, use of these technologies without proper regulatory frameworks raised concerns about mass surveillance and erosion of privacy rights.

These issues emerged because development focused on technical capabilities without sufficient ethical assessment of potential societal impacts, and because deployment decisions prioritized functionality over human rights considerations. The case highlights that Data ethics requires looking beyond immediate use cases to consider broader societal implications, and establishing strong governance frameworks before deploying powerful technologies in sensitive contexts.

Corporate data breaches and privacy violations demonstrate how Data ethics failures can destroy stakeholder trust and incur massive costs. Instances where companies collected extensive personal data without adequate security protections, used data in ways inconsistent with user expectations, or failed to provide meaningful control over personal information have resulted in regulatory penalties, class-action lawsuits, and lasting reputational damage.

These cases often stem from treating data protection as a compliance issue rather than an ethical imperative, prioritizing short-term business objectives over long-term trust preservation, and implementing consent mechanisms designed to obtain permission rather than ensure understanding. The pattern reveals that Data ethics requires cultural commitment that permeates organizational decision-making, not just technical safeguards or legal compliance, and that ethical data stewardship is essential for sustainable business operations in the digital economy.

Future Trends and Evolving Standards

Emerging Challenges in Data Ethics

The rapid evolution of artificial intelligence capabilities presents new Data ethics challenges that require continuous adaptation of ethical frameworks. Generative AI systems that create realistic text, images, and video raise concerns about misinformation, intellectual property rights, and authentication of digital content. These technologies can generate convincing fake content at scale, potentially undermining trust in digital information, while training processes that ingest copyrighted material without permission create legal and ethical questions about fair use and compensation.

The democratization of AI capabilities through accessible tools lowers barriers to creating potentially harmful applications, requiring new approaches to responsible innovation that anticipate misuse while preserving beneficial uses. These developments challenge existing Data ethics frameworks that were designed for predictive analytics rather than generative capabilities, necessitating evolution of principles and practices to address novel ethical questions.

Increasing regulatory attention to algorithmic systems signals a shifting landscape where Data ethics is becoming formalized in legal requirements rather than remaining voluntary best practice. The European Union’s AI Act establishes a risk-based regulatory framework with strict requirements for high-risk AI systems, including conformity assessments, fundamental rights impact evaluations, and post-market monitoring. Similar initiatives are emerging globally, from the proposed Algorithmic Accountability Act in the United States to AI governance frameworks in Singapore and Canada.

These regulatory developments create compliance imperatives that reinforce ethical commitments, but also raise challenges around jurisdictional differences, compliance costs for smaller organizations, and potential innovation constraints. Organizations must navigate this evolving landscape by building adaptable Data ethics programs that can accommodate different regulatory requirements while maintaining consistent ethical principles across operations.

Growing public awareness and expectations around data practices create both pressure and opportunity for organizations to differentiate through ethical leadership. High-profile incidents involving data misuse, algorithmic bias, and privacy violations have increased consumer skepticism about how organizations handle personal information, while employee activism has pushed companies to reconsider controversial contracts and applications. Simultaneously, investors are increasingly considering ethical factors in investment decisions, with ESG (Environmental, Social, and Governance) frameworks incorporating data ethics criteria.

This environment rewards organizations that demonstrate genuine commitment to Data ethics through transparent practices, accountable governance, and positive social impact, while penalizing those that treat ethics as a public relations exercise. The trend suggests that ethical data stewardship is becoming a business imperative rather than an optional enhancement, integrated into core operations and strategy rather than siloed as a compliance function.

Building Adaptive Ethics Frameworks

Creating learning-oriented Data ethics programs enables organizations to continuously improve their ethical practices as technologies and societal expectations evolve. This involves establishing systematic feedback mechanisms that capture lessons from both ethical successes and failures, through processes like after-action reviews following project completion, ethical incident analysis that identifies root causes and preventive measures, and regular stakeholder engagement that surfaces concerns and expectations.

Horizon scanning activities monitor emerging technologies, regulatory developments, and social trends that might create new ethical challenges or opportunities, allowing proactive adaptation rather than reactive response. Framework evolution processes ensure that ethics policies, assessment tools, and governance structures are regularly reviewed and updated based on new learning, maintaining relevance and effectiveness in a changing landscape. This adaptive approach acknowledges that Data ethics is not a fixed destination but a continuous journey requiring ongoing learning and improvement.

Developing participatory approaches to Data ethics recognizes that ethical perspectives are diverse and that affected communities should have a voice in decisions that impact them. This involves creating inclusive processes for developing ethical frameworks, with representation from different demographic groups, socioeconomic backgrounds, and cultural perspectives to avoid embedding narrow worldviews into ethical standards.

Stakeholder engagement mechanisms enable ongoing dialogue with people affected by data systems, through approaches like community review boards for high-impact applications, user panels that provide feedback on data practices, and collaborative design sessions that include diverse perspectives in system development. Transparency initiatives build trust by openly sharing ethical frameworks, assessment processes, and performance metrics, enabling external validation and accountability. These participatory approaches counteract the risk of Data ethics becoming an internal technical exercise disconnected from societal values and concerns.

Building interdisciplinary capacity strengthens Data ethics by integrating diverse knowledge domains rather than treating it as solely a technical or philosophical concern. Effective ethical practice requires combining technical understanding of how data systems work with philosophical grounding in ethical theories, legal knowledge of regulatory requirements, sociological insight into how technologies affect social structures, psychological understanding of human-computer interaction, and domain expertise in specific application contexts.

Organizations can develop this capacity through cross-functional teams that bring together diverse perspectives, hiring practices that value ethical reasoning alongside technical skills, training programs that build literacy across disciplines, and partnerships with academic institutions that provide access to cutting-edge research and thought leadership. This interdisciplinary approach enriches Data ethics by drawing on the full range of human knowledge rather than limiting it to any single perspective.

Conclusion: The Path Forward for Data Ethics

Data ethics has evolved from a niche concern to a central business imperative that underpins sustainable success in the digital economy. Organizations that embrace Data ethics as a core competency rather than a compliance burden will build stronger customer relationships, foster greater innovation, and create lasting competitive advantages. The implementation of robust ethical frameworks enables organizations to navigate complex data landscapes while maintaining public trust and social license to operate. The journey toward ethical data practices requires ongoing commitment, but the rewards—trust, reputation, and the ability to innovate responsibly—are invaluable assets in today’s transparent business environment.

The business case for Data ethics continues to strengthen as consumers, employees, investors, and regulators increasingly reward ethical behavior and penalize missteps. Research consistently shows that organizations with strong ethical practices experience higher customer loyalty, better employee engagement and retention, reduced regulatory risk, and enhanced capacity for innovation.

Ethical data stewardship has become a market differentiator that helps organizations attract and retain top talent who want to work for companies that align with their values, build deeper customer relationships through transparent and respectful practices, and access partnership opportunities that require demonstrated ethical commitment. Beyond these business benefits, Data ethics represents an essential component of corporate citizenship in the digital age, contributing to societal wellbeing rather than extracting value at social expense.

Looking forward, Data ethics will continue to evolve alongside technological advancements and societal expectations. Emerging challenges like generative AI, autonomous systems, and quantum computing will require new ethical frameworks and approaches, while increasing regulatory attention will formalize ethical requirements that are currently voluntary.

Organizations that thrive in this environment will be those that treat ethics not as a destination but as an ongoing journey—one that requires continuous learning, adaptation, and commitment to doing what’s right. By making Data ethics a foundational element of organizational culture and operations, businesses can harness the power of data and AI while building the trust that is essential for long-term success in an increasingly transparent and accountable world.