Master R for Data Science in 2025 with expert insights. Learn advanced statistical modeling, visualization techniques, and strategic career development for pharmaceutical research, academia, and specialized analytics roles.

Introduction: The Evolving Landscape of Statistical Computing

In the rapidly changing world of data science, R continues to hold a crucial position as a specialized tool for statistical analysis and research. As we progress through 2025, its role has evolved from a general-purpose data tool to a specialized instrument for specific domains and use cases. Industry experts consistently emphasize that understanding where and how to apply R effectively has become more important than ever for data professionals seeking to build comprehensive analytical capabilities.

The ecosystem surrounding R for Data Science has matured in distinctive ways, with new packages and methodologies emerging to address the unique challenges of statistical computing, academic research, and specific industry applications. What sets R for Data Science apart in the current landscape is not just its statistical capabilities, but its deeply integrated approach to reproducible research, visualization excellence, and domain-specific applications. The continued evolution of R for Data Science ensures that professionals who master this toolset possess unique capabilities that complement rather than compete with other data science tools.

This comprehensive guide examines the current state of R for Data Science through the lens of industry experts, providing critical insights and strategic approaches for leveraging this powerful tool in modern data workflows. Whether you’re building statistical models, creating publication-quality visualizations, or implementing reproducible research practices, understanding the evolving role of R for Data Science will be essential for maximizing your impact in data-driven organizations.

The 2025 R Ecosystem: Strategic Positioning and Competitive Advantages

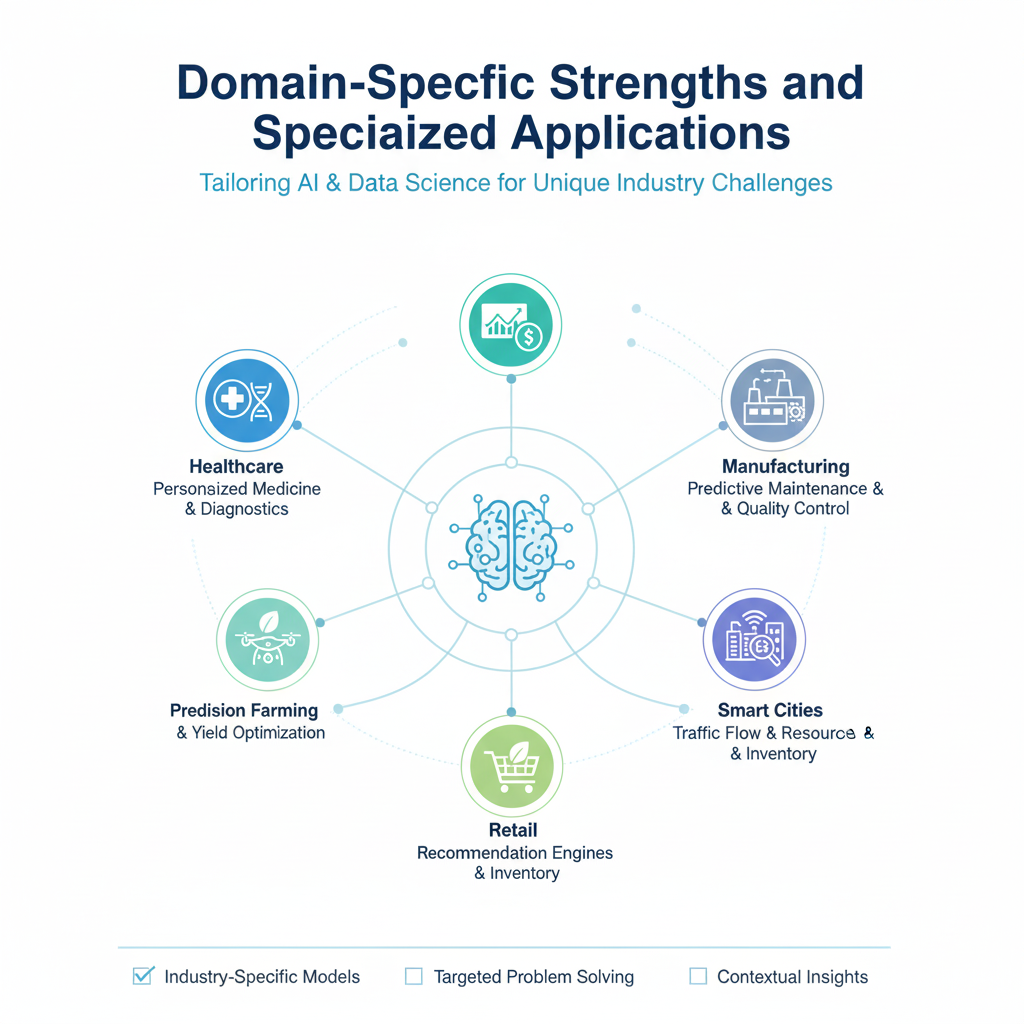

Domain-Specific Strengths and Specialized Applications

The R for Data Science ecosystem has solidified its position in several key domains where its statistical capabilities provide undeniable advantages. In pharmaceutical research and clinical trials, R for Data Science remains the gold standard for statistical analysis, with packages like survival, lme4, and clinicaltables enabling sophisticated modeling that meets rigorous regulatory requirements. Financial institutions continue to rely heavily on R for Data Science for risk modeling, time series analysis, and econometric research, leveraging packages such as quantmod, PerformanceAnalytics, and rugarch for specialized financial computations.
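As an illustration of the kind of clinical-trial modeling described above, here is a minimal sketch using the survival package and its bundled `lung` dataset. The choice of dataset and covariates is an assumption made for illustration, not a regulatory-grade analysis.

```r
library(survival)  # ships with R as a recommended package

# `lung` is the NCCTG lung cancer dataset bundled with survival.
# status: 1 = censored, 2 = dead; sex: 1 = male, 2 = female.
km_fit <- survfit(Surv(time, status) ~ sex, data = lung)
print(km_fit)  # per-group n, events, and median survival with 95% CI

# Cox proportional hazards model for sex, adjusting for age.
cox_fit <- coxph(Surv(time, status) ~ sex + age, data = lung)
summary(cox_fit)$conf.int  # hazard ratios with 95% confidence limits

# Check the proportional hazards assumption via scaled Schoenfeld residuals.
cox.zph(cox_fit)
```

The Kaplan-Meier fit gives a nonparametric view of each group, while the Cox model quantifies the adjusted effect; running `cox.zph()` afterwards is a common sanity check before reporting hazard ratios.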

Academic and research institutions have deepened their commitment to R for Data Science, with the tidyverse ecosystem becoming the default environment for statistical computing education and research. The integration of R Markdown and Quarto has transformed how researchers document and share their work, making reproducible research accessible to domains beyond traditional statistics. This emphasis on documentation and reproducibility represents a key differentiator for R for Data Science in environments where methodological transparency is paramount.

The biomedical and public health sectors have seen particularly strong growth in R for Data Science applications, with Bioconductor providing comprehensive tools for genomic analysis, and specialized packages emerging for epidemiological modeling and health economics. Experts note that the community-driven development model of R for Data Science packages enables rapid innovation in these specialized domains, often outpacing alternatives in addressing niche analytical requirements.

Integration and Interoperability Advances

Seamless Cross-Language Integration

The evolution of cross-language interoperability represents one of the most significant advancements in statistical computing environments. The maturation of packages like reticulate has fundamentally transformed how statistical programmers collaborate with broader data science teams. This integration enables a “best tool for the job” approach, where teams can use R’s statistical capabilities while also accessing Python’s extensive machine learning libraries and production deployment frameworks.

The practical implementation of this integration allows for sophisticated hybrid workflows. For instance, a data team might use R for initial data exploration and statistical modeling, leveraging its advanced visualization capabilities and comprehensive statistical tests. They can then seamlessly pass these analyzed datasets to Python environments to utilize deep learning frameworks like TensorFlow or PyTorch for complex pattern recognition tasks. Conversely, Python-developed features can be imported into R for rigorous statistical validation and hypothesis testing. This bidirectional data exchange eliminates previous barriers between different analytical paradigms, enabling more comprehensive and robust analytical workflows.

The integration extends beyond simple data passing to include object conversion. Users can call Python functions directly from R scripts, with reticulate translating automatically between language-specific data structures: a pandas DataFrame becomes an R data frame, and an R matrix becomes a NumPy array. The exchange happens in memory within a single process, which avoids the cumbersome export/import steps through intermediate files that such workflows previously required, although some conversions still copy the data.

Enhanced Database Connectivity and Big Data Integration

The revolution in database connectivity has dramatically expanded the scope of statistical programming in enterprise environments. The dbplyr package represents a paradigm shift in how analysts interact with databases, translating familiar dplyr syntax into efficient SQL queries automatically. This means analysts can work with database tables using the same verbs and patterns they use for local data frames, while the system handles query optimization and execution. The abstraction layer allows statistical programmers to focus on analysis rather than database-specific syntax, while still generating production-quality SQL.
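To make the dplyr-to-SQL translation concrete, the sketch below uses dbplyr's `lazy_frame()` with a simulated PostgreSQL backend, so no real database is needed. The table and column names (`orders`, `customer_id`, `amount`, `status`) are hypothetical.

```r
library(dplyr)
library(dbplyr)

# lazy_frame() builds a stand-in table with no database connection;
# simulate_postgres() tells dbplyr which SQL dialect to target.
# The column names here are hypothetical.
orders <- lazy_frame(
  customer_id = integer(),
  amount = numeric(),
  status = character(),
  con = simulate_postgres()
)

# Ordinary dplyr verbs; nothing is executed yet.
query <- orders |>
  filter(status == "paid") |>
  group_by(customer_id) |>
  summarise(total = sum(amount, na.rm = TRUE)) |>
  arrange(desc(total))

# Print the SQL that dbplyr would send to the database.
show_query(query)
sql_text <- as.character(sql_render(query))
```

Against a live connection the same pipeline would end with `collect()` to pull the result into a local data frame; until then the work stays in the database.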

The DBI package provides a unified interface for connecting to various database systems, from traditional SQL servers to modern cloud data warehouses. This standardization means that code for connecting to PostgreSQL, MySQL, SQL Server, or Snowflake follows consistent patterns, reducing the learning curve when moving between different data infrastructure environments. DBI’s transaction management, together with connection pooling from the companion pool package, helps statistical applications operate reliably in production environments with multiple concurrent users.
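The consistent DBI pattern can be sketched with an in-memory SQLite database, which is an assumption chosen so the example runs without a server. With another backend only the `dbConnect()` call would change.

```r
library(DBI)

# An in-memory SQLite database stands in for a production server here.
# For PostgreSQL you would swap the driver (e.g. RPostgres::Postgres())
# and pass host and credentials; the calls below stay the same.
con <- dbConnect(RSQLite::SQLite(), ":memory:")

dbWriteTable(con, "cars", mtcars[, c("mpg", "cyl", "wt")])

# Parameterised query: the value is bound, not pasted into the SQL string.
heavy <- dbGetQuery(
  con,
  "SELECT cyl, AVG(mpg) AS avg_mpg FROM cars WHERE wt > ? GROUP BY cyl",
  params = list(3)
)
print(heavy)

# dbWithTransaction() commits on success and rolls back on error.
dbWithTransaction(con, {
  dbExecute(con, "UPDATE cars SET mpg = mpg + 1 WHERE cyl = 4")
  dbExecute(con, "DELETE FROM cars WHERE cyl = 8")
})

n_left <- dbGetQuery(con, "SELECT COUNT(*) AS n FROM cars")$n
dbDisconnect(con)
```

Wrapping related writes in `dbWithTransaction()` keeps the table consistent if any statement fails partway through.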

The introduction of the arrow package has been particularly transformative for working with large-scale data. By implementing the Apache Arrow memory format, this package enables zero-copy data sharing between different programming languages and tools. Its dataset interface can also query data that is larger than available memory, reading only the columns and rows a query needs, which relaxes the memory limits that previously constrained statistical programming environments. The columnar data format not only improves performance but also maintains compatibility with other data tools in the modern stack, including Spark, pandas, and various business intelligence platforms.

Cloud-Native Deployment and Scalability

The cloud integration landscape for statistical programming has matured dramatically, addressing what was historically a significant limitation. Packages like googleCloudRunner and AzureContainers provide native interfaces for deploying analytical workflows to cloud platforms. These tools abstract the underlying infrastructure complexity, allowing statisticians and data scientists to focus on their analytical work while still leveraging scalable cloud resources.

Containerization has been a game-changer for production deployment. The ability to package statistical applications using Docker creates portable, reproducible environments that can run consistently across development, testing, and production systems. This containerization approach solves the longstanding challenge of dependency management and environment consistency that often plagued statistical applications in production. A complex analytical workflow with specific package versions and system dependencies can be reliably deployed across different environments without modification.

The scalability improvements extend to distributed computing frameworks. Integration with Spark through packages like sparklyr enables statistical programmers to work with datasets that far exceed local memory limitations. The familiar dplyr syntax translates to distributed Spark operations, allowing analysts to apply their existing skills to big data problems. This integration maintains the expressive power of statistical programming while leveraging the scalability of enterprise big data infrastructure.

API Integration and Microservices Architecture

Modern statistical programming environments have embraced API-driven workflows and microservices architecture patterns. The ability to create and consume RESTful APIs means that statistical models and analyses can be integrated into broader business applications. The plumber package enables R code to be exposed as web services, allowing other systems to programmatically access statistical functionality. This API-first approach transforms statistical code from isolated analyses into reusable business services.
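A minimal sketch of exposing a model as a web service with plumber might look like the following. The endpoint path `/predict`, the handler name `predict_mpg`, and the toy linear model are all hypothetical choices for illustration.

```r
# A toy model to serve; a real service would load a validated model object.
model <- lm(mpg ~ wt, data = mtcars)

# Hypothetical handler: query parameters arrive as character strings,
# so convert before predicting. The returned list is serialised to JSON.
predict_mpg <- function(wt) {
  wt <- as.numeric(wt)
  pred <- predict(model, newdata = data.frame(wt = wt))
  list(wt = wt, predicted_mpg = unname(pred))
}

# Start the API only in an interactive session with plumber installed,
# because pr_run() blocks until the server is stopped.
if (interactive() && requireNamespace("plumber", quietly = TRUE)) {
  plumber::pr() |>
    plumber::pr_get("/predict", predict_mpg) |>
    plumber::pr_run(port = 8000)
}
```

With the server running, a request such as `GET /predict?wt=3` would return the prediction as JSON. Keeping the handler an ordinary function means it can be unit-tested without starting the server.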

The microservices architecture enables sophisticated composition of analytical capabilities. A single business process might involve multiple specialized statistical services (perhaps one for forecasting, another for anomaly detection, and another for optimization), all orchestrated through API calls. This modular approach allows organizations to maintain and scale different analytical capabilities independently while still presenting a unified interface to consumers.

Event-driven architectures have also become more accessible, with packages providing interfaces to message queues and streaming platforms. This enables statistical applications to respond to real-time data streams, moving beyond batch processing to support operational decision-making. The ability to process streaming data opens new use cases in areas like real-time monitoring, dynamic pricing, and immediate fraud detection.

Integrated Development and Collaboration Tools

The interoperability advances extend to development tools and collaboration platforms. Integration with Jupyter notebooks provides a familiar interface for mixed-language development, while RStudio’s evolved capabilities support both R and Python workflows within a single environment. Version control integration through Git has become more seamless, with better support for collaborative development workflows and code review processes.

The interoperability with document generation systems has also improved significantly. Integration with Quarto enables creation of dynamic documents that combine code, results, and narrative in multiple output formats. This capability is particularly valuable in regulated industries where audit trails and methodological transparency are essential. The same analysis code can generate everything from internal technical reports to regulatory submissions to executive presentations, all maintaining consistency and reproducibility.

These integration capabilities collectively transform statistical programming from an isolated specialty into an integral component of modern data infrastructure. The bridges built between different tools and platforms enable statistical experts to contribute their unique skills to broader business initiatives while leveraging the scalability and robustness of enterprise technology stacks. This interoperability ensures that statistical rigor can be maintained even as analytical workflows scale to meet enterprise demands.

Core Technical Excellence: Advanced Methodologies for Modern Challenges

Statistical Modeling and Inference

The statistical modeling capabilities of R for Data Science continue to set the standard for rigorous analytical work. Mixed effects models, survival analysis, and Bayesian inference represent areas where R for Data Science maintains significant advantages over alternatives. The brms package has democratized sophisticated Bayesian modeling, while tidymodels has created a cohesive framework for machine learning that aligns with tidy data principles.

Experimental design and analysis remain cornerstone applications for R for Data Science, with experts emphasizing the importance of proper statistical methodology over algorithmic sophistication in many business contexts. The ability to implement designed experiments, analyze variance components, and compute exact rather than approximate p-values provides crucial advantages in research and development settings.
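The points about designed experiments and exact p-values can be sketched in base R. The datasets are illustrative assumptions: `PlantGrowth` is built into R, and the 2x2 table follows Fisher's classic tea-tasting design.

```r
# One-way ANOVA on a designed experiment: PlantGrowth records dried
# plant weight under a control and two treatment conditions.
fit <- aov(weight ~ group, data = PlantGrowth)
summary(fit)

# Pairwise comparisons with family-wise error control.
TukeyHSD(fit)

# Exact inference for a small 2x2 table (tea-tasting design):
# rows are the taster's guess, columns are the truth.
tea <- matrix(c(3, 1, 1, 3), nrow = 2,
              dimnames = list(Guess = c("Milk", "Tea"),
                              Truth = c("Milk", "Tea")))
exact <- fisher.test(tea, alternative = "greater")
exact$p.value  # exact hypergeometric tail probability, 17/70
```

With only eight cups, a chi-squared approximation would be unreliable, which is why the exact test is the appropriate choice here.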

Causal inference has emerged as a particularly strong application area for R for Data Science, with packages like causaleffect, ggdag, and MatchIt enabling sophisticated approaches to estimating treatment effects from observational data. Industry experts highlight that the rigorous statistical foundation of these packages, combined with comprehensive documentation, makes R for Data Science the preferred tool for causal analysis in academic and regulated industry settings.

Data Visualization and Communication

The visualization capabilities of R for Data Science continue to evolve, with ggplot2 maintaining its position as the gold standard for statistical graphics. Recent developments in the ggplot2 ecosystem have introduced improved animation capabilities, interactive features through plotly integration, and specialized extensions for domain-specific visualization needs.
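For readers new to ggplot2, the layered grammar can be sketched as follows. The dataset (`mpg`, bundled with ggplot2), the aesthetic choices, and the output path are assumptions for illustration.

```r
library(ggplot2)

# mpg ships with ggplot2: fuel economy data for popular car models.
p <- ggplot(mpg, aes(x = displ, y = hwy, colour = class)) +
  geom_point(alpha = 0.7) +
  # One overall trend line rather than one per vehicle class.
  geom_smooth(aes(group = 1), method = "loess", formula = y ~ x,
              colour = "grey30") +
  labs(x = "Engine displacement (L)", y = "Highway miles per gallon",
       colour = "Vehicle class") +
  theme_minimal()

# Save a vector file at publication size; the path is a temporary placeholder.
out_file <- file.path(tempdir(), "fuel-economy.pdf")
ggsave(out_file, plot = p, width = 7, height = 4.5)
```

Because the plot is an ordinary object, it can be passed on to other tools; for example, `plotly::ggplotly(p)` would produce an interactive version if plotly is installed.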

The gt package has revolutionized table creation within R for Data Science, enabling the production of publication-quality tables with sophisticated formatting and conditional styling. When combined with Quarto documents, these visualization and table capabilities create comprehensive reporting workflows that seamlessly integrate analysis, visualization, and narrative.

Dashboard creation has advanced significantly through the Shiny ecosystem, with tools like shinydashboard, fresh, and bslib providing professional-grade interface components. Experts note that the ability to create interactive analytical applications has expanded the reach of R for Data Science beyond individual analysis to organizational decision support, though they caution that production deployment requires careful consideration of performance and scalability.

Industry Applications: Where Statistical Computing Delivers Unique Value

Pharmaceutical and Healthcare Analytics

The pharmaceutical industry represents one of the strongest domains for statistical computing applications, with R deeply embedded in clinical trial analysis and regulatory submissions. The use of this environment in creating analysis datasets, generating tables, figures, and listings (TFLs), and performing statistical tests has become standardized practice across major pharmaceutical companies. The comprehensive ecosystem of packages specifically designed for clinical research enables researchers to implement complex statistical methodologies with confidence and precision.

Regulatory acceptance of analytical outputs has increased significantly, with agencies like the FDA and EMA providing guidance on submitting analyses conducted using validated statistical software. This regulatory comfort, combined with the tool’s statistical rigor, makes it essential for professionals working in drug development and clinical research. The ability to generate fully reproducible analysis pipelines ensures that results can be verified and validated throughout the regulatory review process.

Real-world evidence generation has emerged as another growth area in healthcare analytics. The ability to analyze electronic health records, claims data, and registry information using sophisticated statistical methods has positioned R as a critical tool for health economics and outcomes research. Advanced survival analysis, propensity score matching, and complex survey analysis capabilities enable researchers to draw meaningful insights from observational data while properly accounting for confounding factors and selection biases.

Academic Research and Public Policy

Academic institutions continue to favor open-source statistical tools for research and teaching, with the zero-cost basis and comprehensive statistical capabilities making them ideal for educational environments. The reproducibility features of literate programming approaches align perfectly with academic values of transparency and verifiability. Students and researchers can share complete analytical workflows, enabling peers to verify results and build upon existing work with confidence.

Public policy organizations and government agencies have increasingly adopted statistical programming for program evaluation, policy analysis, and official statistics. The audit trail provided by script-based approaches ensures that analytical decisions are documented and reproducible, which is crucial for public accountability. When policy decisions depend on statistical findings, the ability to trace every calculation back to its source code provides essential transparency.

Social science research remains heavily dependent on specialized statistical software, with packages for psychometrics, network analysis, and spatial statistics enabling sophisticated methodological approaches. The flexibility to implement novel statistical methods quickly makes these tools particularly valuable in research domains where methodology evolves rapidly. Researchers can prototype new analytical techniques and immediately apply them to their data without waiting for commercial software updates.

Expert Insights: Strategic Career Development

Building Complementary Skill Sets

Industry experts consistently emphasize that statistical programming proficiency should be viewed as part of a broader analytical toolkit rather than a standalone capability. Professionals who combine deep statistical knowledge with implementation skills and domain expertise position themselves for specialized roles where methodological rigor is valued over deployment scale. Understanding the theoretical foundations of statistical methods enables practitioners to select appropriate techniques and interpret results correctly.

The integration of statistical programming with other technologies has created opportunities for professionals who can work across tool boundaries. Understanding how to leverage statistical capabilities within larger data engineering workflows, or how to productionize statistical models through APIs and containers, represents a valuable skill combination. These hybrid skills allow organizations to maintain statistical rigor while leveraging the scalability of modern data platforms.

Domain specialization has emerged as a key strategy for professionals building careers around statistical computing. Developing deep expertise in specific application areas like biostatistics, econometrics, or psychometrics, combined with programming proficiency, creates career opportunities that are less susceptible to automation or outsourcing. Deep domain knowledge enables professionals to ask better questions, select more appropriate methods, and provide more meaningful interpretations of analytical results.

Navigating the Evolving Job Market

The demand for statistical programming skills has become more specialized but also more stable, with consistent need in pharmaceutical research, academic institutions, government agencies, and financial services. Experts note that while the percentage of general data science roles requiring these skills has decreased, the specialized roles that do require them often offer greater job security and compensation. These positions typically involve complex analytical challenges where methodological correctness is paramount.

The remote work revolution has benefited professionals with statistical expertise, as organizations can now access specialized talent regardless of geographic location. This has created opportunities for experts in regions with strong statistical training but limited local job markets. The ability to work effectively in distributed teams has become as important as technical skills for many statistical programming roles.

Consulting and freelance work represents a growing opportunity for statistical programming professionals, particularly those with domain expertise. The ability to provide specialized statistical analysis as a service has become increasingly viable as organizations seek external expertise for specific projects rather than maintaining large internal teams. Successful consultants combine technical excellence with strong communication skills and business acumen.

Future Directions and Strategic Considerations

Emerging Technical Capabilities

The statistical programming ecosystem continues to evolve, with several technical developments shaping its future trajectory. The adoption of modern language features has improved code readability and performance, making statistical programming more accessible to new users while maintaining its power for advanced applications. These improvements help bridge the gap between pedagogical use and production applications.
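The text does not name specific language features; as one example, the native pipe `|>` and the `\(x)` lambda shorthand introduced in R 4.1 let a short analysis read top to bottom in base R. The sketch below assumes R 4.1 or later and uses the built-in `mtcars` data.

```r
# Requires R >= 4.1 for |>, \(x), and the formula form of split().
by_cyl <- mtcars |>
  subset(hp > 100, select = c(mpg, cyl, hp)) |>  # keep higher-powered cars
  split(~ cyl) |>                                # one data frame per cylinder count
  lapply(\(d) round(mean(d$mpg), 1))             # mean mpg within each group

by_cyl
```

The same steps written as nested calls would have to be read inside out, which is the readability gain the pipe provides.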

Performance improvements have addressed historical limitations, with enhanced memory management, parallel processing capabilities, and just-in-time compilation expanding applicability to larger datasets and more complex computations. These advancements mean that statistical programming environments can now handle problems that previously required specialized high-performance computing tools, making sophisticated analyses more accessible to researchers and analysts.
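As a small illustration of the parallel processing mentioned above, the sketch below bootstraps a median across two local worker processes using the base `parallel` package. The number of workers, the seed, and the replicate count are arbitrary assumptions.

```r
library(parallel)  # part of base R

# Each bootstrap replicate is independent, so the work splits
# cleanly across worker processes. The index i is unused.
boot_median <- function(i, x) median(sample(x, replace = TRUE))

cl <- makeCluster(2)                 # two local workers (an arbitrary choice)
clusterSetRNGStream(cl, iseed = 42)  # reproducible parallel random streams
reps <- parSapply(cl, seq_len(2000), boot_median, x = mtcars$mpg)
stopCluster(cl)

quantile(reps, c(0.025, 0.975))  # percentile bootstrap interval
```

For a task this small the cluster start-up cost outweighs the gain; the pattern pays off when each replicate involves refitting a model or simulating a large dataset.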

Machine learning integration has progressed, with cohesive frameworks such as tidymodels providing structured approaches to model training, tuning, and evaluation. While traditional statistical programming environments may not compete with Python for deep learning applications, their capabilities for traditional machine learning, particularly with structured data, have become increasingly sophisticated. The integration of machine learning techniques with traditional statistical methods creates powerful hybrid approaches.

Strategic Positioning in the Data Ecosystem

The most successful applications of statistical programming leverage unique strengths while acknowledging limitations. Experts recommend using these tools for statistical modeling, exploratory analysis, and research prototyping, while employing other tools for data engineering, deployment, and deep learning. This pragmatic approach maximizes value without forcing tools into roles where alternatives are more appropriate.

The emphasis on reproducibility and documentation positions statistical programming ideally for regulated industries and research contexts where methodological transparency is crucial. As organizations increasingly focus on responsible AI and ethical data practices, the reproducible research paradigm becomes increasingly valuable. The ability to demonstrate exactly how results were obtained is essential for building trust in analytical findings.

The open-source nature of popular statistical programming tools ensures continued evolution and accessibility. As commercial software vendors increase prices and restrict functionality, the zero-cost basis becomes increasingly attractive, particularly for academic institutions, non-profits, and organizations with limited budgets. The vibrant community surrounding open-source statistical software drives continuous improvement and innovation.

Conclusion: The Enduring Value of Statistical Excellence

The journey through the evolving landscape of statistical computing reveals tools that have successfully navigated the transformation of the data science field by focusing on core strengths. Rather than attempting to compete across all domains, these environments have deepened capabilities in statistical computing, reproducible research, and specialized applications where methodological rigor is paramount.

The critical lessons from industry experts consistently emphasize that the value of statistical programming lies not in universal applicability but in exceptional capability within specific domains. Professionals who master these tools while understanding appropriate application contexts position themselves for successful careers in research, regulated industries, and organizations where statistical excellence drives decision-making.

As the data science field continues to mature, the importance of rigorous methodology, reproducible research, and statistical sophistication will only increase. The investments in statistical programming capabilities today will yield returns throughout careers, providing the foundation for tackling increasingly complex analytical challenges with confidence and competence. The future belongs to professionals who can leverage the right tools for the right problems, and for statistical excellence, modern programming environments remain essential components of the data professional’s toolkit.