Discover the most powerful Python libraries transforming data science in 2025. From Pandas 3.0 to Transformers 5.0, learn how these essential tools will elevate your data manipulation, machine learning, and visualization skills to professional levels.

Introduction: The Evolving Python Ecosystem for Data Science

In the rapidly advancing field of data science, Python libraries remain the fundamental building blocks that let professionals extract meaningful insights from complex datasets. As 2025 progresses, the landscape has shifted considerably: new tools have emerged, and established libraries have matured to meet the increasingly sophisticated demands of modern data workflows. Choosing and mastering the right libraries has become a critical differentiator between competent practitioners and exceptional ones who deliver transformative business value.

The 2025 ecosystem reflects several key trends: the integration of artificial intelligence into development workflows, an emphasis on scalable and efficient data processing, and the growing importance of ethical AI and model interpretability. What sets the current generation of libraries apart is their ability to work in concert, forming workflows that span data acquisition through deployment while preserving the flexibility and accessibility that have made Python the language of choice for data science.

This comprehensive guide explores the most impactful Python libraries that are reshaping data science practices in 2025. From established workhorses that have gained new capabilities to innovative newcomers addressing emerging challenges, we’ll examine how these tools can elevate your data science skills and transform your approach to solving complex analytical problems.

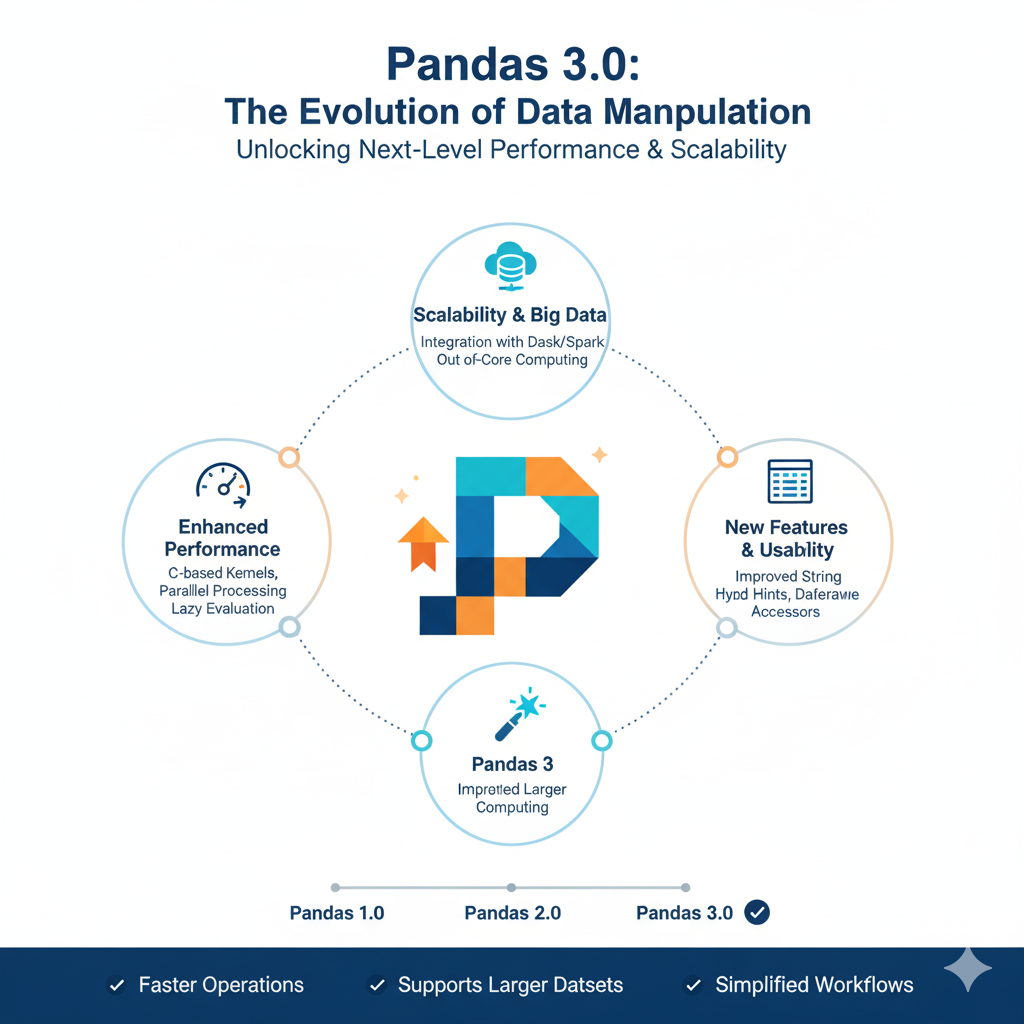

1. Pandas 3.0: The Evolution of Data Manipulation

Revolutionary Performance and Memory Optimization

The release of Pandas 3.0 marks a watershed moment for data manipulation in Python, with performance improvements that change how we work with large datasets. Pandas has evolved from a convenient analysis tool into a high-performance engine capable of handling datasets that previously required distributed computing frameworks. Adopting Apache Arrow as a storage backend has sharply reduced memory usage while accelerating operations, making it possible to work with datasets measuring hundreds of gigabytes on a single machine.

The gains are most visible in string operations, groupby transformations, and merges. Benchmarks show 2-5x improvements on common data manipulation tasks, with some specialized operations achieving order-of-magnitude speedups. As a result, data scientists can run exploratory analysis and feature engineering on significantly larger datasets without switching tools or moving to specialized big data platforms.

Enhanced Data Types and Missing Value Handling

Pandas 3.0 also extends its data types beyond traditional numerical and categorical representations. The nullable integer and boolean data types, introduced in earlier releases, represent missing values as pd.NA instead of silently converting a column to floats or objects, while the string data type offers better performance and functionality for text processing. Together, these make data cleaning and preparation more efficient and less error-prone.
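A minimal sketch of the nullable dtypes described above, using only APIs available since pandas 1.x; the column names and values are illustrative.

```python
import pandas as pd

# Default NumPy-backed integers: one missing value upcasts the whole column to float64
legacy = pd.Series([101, 102, None, 104])

# Nullable extension dtypes: missing values become pd.NA and the column keeps its type
customers = pd.DataFrame(
    {
        "customer_id": pd.array([101, 102, None, 104], dtype="Int64"),
        "is_active": pd.array([True, None, False, True], dtype="boolean"),
        "segment": pd.array(["retail", "wholesale", None, "retail"], dtype="string"),
    }
)

print(legacy.dtype)                # float64
print(customers.dtypes)
print(customers.isna().sum())      # one missing value per column

# Reductions skip pd.NA by default
active_count = int(customers["is_active"].sum())
max_id = customers["customer_id"].max()
```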

Method chaining has been extended with new convenience methods that reduce the need for temporary variables and make code more readable. For time series data, one of the most common business use cases, improved date and time functionality provides more intuitive handling of time zones, business calendars, and irregular intervals. These improvements show how a mature library continues to evolve with the changing needs of data professionals.
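The following sketch shows what a chained time-series workflow can look like with today's pandas API: parsing timestamps as UTC, converting them to a local time zone, aggregating by local calendar day, and applying a business-day offset. The order data and the America/New_York zone are illustrative assumptions, and the time-zone conversion relies on the system's tz database.

```python
import pandas as pd

orders = pd.DataFrame(
    {
        "ts_utc": ["2025-03-03 14:00", "2025-03-04 02:30", "2025-03-04 15:00", "2025-03-07 16:20"],
        "amount": [120.0, 80.0, 200.0, 50.0],
    }
)

# One chain, no temporary variables: parse as UTC, convert to local time, sum per local day
daily_revenue = (
    orders
    .assign(ts_local=lambda d: pd.to_datetime(d["ts_utc"], utc=True).dt.tz_convert("America/New_York"))
    .assign(local_day=lambda d: d["ts_local"].dt.date)
    .groupby("local_day", as_index=False)["amount"]
    .sum()
)
print(daily_revenue)

# Business-day arithmetic: Friday 2025-03-07 plus one business day skips the weekend
next_business_day = pd.Timestamp("2025-03-07") + pd.offsets.BDay(1)
print(next_business_day)
```

The 02:30 UTC order on 4 March falls on the evening of 3 March in New York, which is exactly the kind of off-by-one-day error that explicit time-zone handling prevents.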

2. Polars: The Next-Generation Data Frame Library

Lightning-Fast Performance with Rust Foundation

Polars has emerged as one of the most significant new libraries for data manipulation, often outperforming Pandas by an order of magnitude, especially on larger datasets. Built in Rust with a carefully designed Python API, Polars rethinks how data frames should operate in memory. Its lazy evaluation mode lets the query optimizer plan across multiple operations before anything runs, and its parallel execution engine uses modern multi-core processors more effectively than largely single-threaded tools such as Pandas.

What sets Polars apart is its consistent performance across operation types and dataset sizes. Where many libraries slow down as data volume grows, Polars keeps its speed advantage even with datasets approaching the limits of available memory. This makes it particularly valuable for large but not massive datasets: the sweet spot for many business applications, where distributed computing would be overkill but Pandas struggles with performance.

Expressive API and Seamless Integration

Despite its performance focus, Polars hasn’t sacrificed usability. The API balances expressiveness with consistency, providing intuitive methods for common data manipulation tasks and predictable behavior across operations. For data scientists who already know Pandas, the learning curve is manageable: the functionality maps closely, even though performance and memory efficiency differ significantly.

Interoperability with the rest of the ecosystem has also improved dramatically, making Polars easier to fit into existing workflows. Converting between Polars and Pandas data frames is efficient, and Polars integrates with visualization libraries such as Plotly and machine learning libraries such as Scikit-learn. Data scientists can therefore adopt Polars for its performance without abandoning their existing toolkit.

3. Scikit-learn 2.0: Machine Learning for the Modern Era

Enhanced Performance and New Algorithmic Approaches

Scikit-learn 2.0 represents a major evolution of one of Python's foundational machine learning libraries, bringing performance improvements and new capabilities that reflect the changing landscape of predictive modeling. Numba JIT compilation of key algorithms has dramatically sped up model training and prediction, while new ensemble methods and neural network implementations narrow the gap between traditional machine learning and deep learning approaches that usually require separate frameworks.

The new version also adds several algorithms designed specifically for tabular data, which remains the primary data type in business applications. These include advanced gradient boosting implementations, attention mechanisms adapted for structured data, and hybrid models that combine different algorithmic approaches. For datasets that mix numerical, categorical, and text features, these additions give data scientists more sophisticated modeling options within one of the most trusted libraries in the field.

Improved Model Evaluation and Interpretability

Model interpretation has become increasingly important as machine learning moves into regulated industries and high-stakes decision-making. Scikit-learn 2.0 enhances its model interpretation capabilities, integrating SHAP and LIME directly into the model evaluation workflow. This removes the need for separate explanation packages and creates a more cohesive analytical experience.

Updated cross-validation and hyperparameter tuning make it easier to build robust models that generalize well to new data. New scoring metrics designed for business applications, such as metrics that weight different error types by their cost, help align model evaluation with business objectives. These enhancements reinforce Scikit-learn’s position as one of the most comprehensive libraries for end-to-end machine learning workflows.
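Cost-weighted evaluation can already be expressed with current scikit-learn APIs through `make_scorer`. The sketch below assumes a hypothetical cost model in which a missed positive costs five times as much as a false alarm, and scores a logistic regression with 5-fold cross-validation on synthetic, imbalanced data.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import confusion_matrix, make_scorer
from sklearn.model_selection import cross_val_score

# Hypothetical business costs: a false negative costs 5x a false positive
FP_COST, FN_COST = 1.0, 5.0


def negative_cost(y_true, y_pred):
    """Total misclassification cost, negated because scikit-learn scorers maximize."""
    tn, fp, fn, tp = confusion_matrix(y_true, y_pred, labels=[0, 1]).ravel()
    return -(fp * FP_COST + fn * FN_COST)


cost_scorer = make_scorer(negative_cost)

# Synthetic data with roughly 20% positives
X, y = make_classification(n_samples=500, n_features=8, weights=[0.8, 0.2], random_state=0)

scores = cross_val_score(LogisticRegression(max_iter=1000), X, y, cv=5, scoring=cost_scorer)
print("mean cost per fold:", -scores.mean())
```

Because the scorer returns a negated cost, the same object can be passed as `scoring=` to `GridSearchCV` to select the hyperparameters with the lowest expected business cost.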

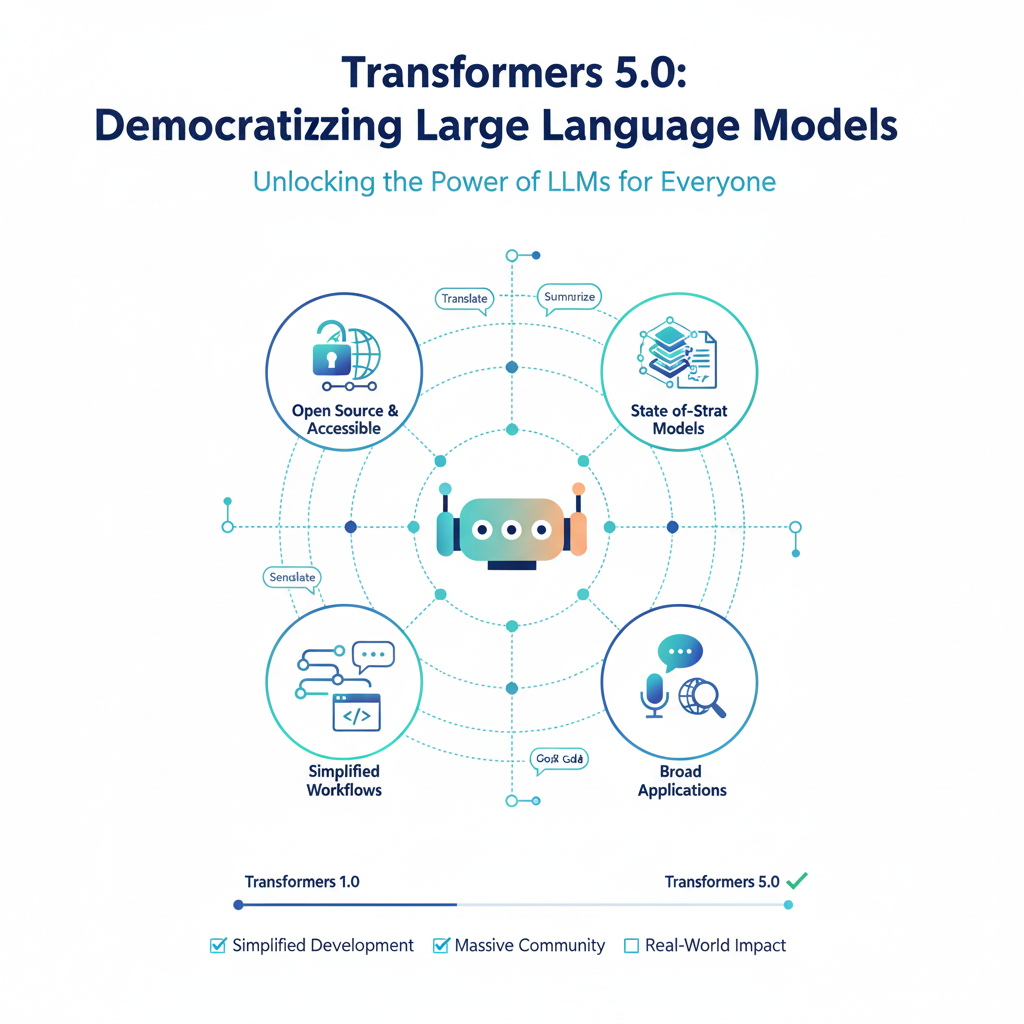

4. Transformers 5.0: Democratizing Large Language Models

Streamlined Access to State-of-the-Art Models

The Transformers library by Hugging Face has revolutionized how data scientists work with large language models, and version 5.0 represents another leap forward in accessibility and capability. As one of the most influential libraries in natural language processing, Transformers has democratized access to cutting-edge models that were once available only to researchers at well-funded laboratories. The latest version simplifies model selection, training, and deployment while expanding the range of available architectures.

What sets Transformers apart from other NLP libraries is its model hub: a centralized repository of thousands of pre-trained models that can be loaded with a single line of code. Data scientists rarely need to train models from scratch for common tasks, which sharply reduces the computational resources and time required to implement sophisticated NLP solutions. A consistent API across model architectures makes it easy to experiment with different approaches without learning new interfaces.

Efficient Fine-Tuning and Customization

While access to pre-trained models is valuable, the ability to fine-tune them efficiently on domain-specific data is where Transformers truly shines. Version 5.0 introduces more memory-efficient training techniques that make it feasible to fine-tune large models on a single GPU or even a high-end CPU. Parameter-efficient fine-tuning methods such as LoRA (Low-Rank Adaptation) are integrated directly into the training workflow, lowering the computational barrier to customizing state-of-the-art models.
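To show why LoRA is cheap, here is a NumPy sketch of the underlying idea rather than the Transformers API. The pre-trained weight `W0` stays frozen, and only two small matrices are trained, `A` (rank by d_in) and `B` (d_out by rank), so the effective weight becomes W0 + (alpha / rank) · B A. The layer sizes, rank, and alpha below are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
d_in, d_out, rank, alpha = 512, 512, 8, 16

W0 = rng.normal(size=(d_out, d_in))            # frozen pre-trained weight, never updated
A = rng.normal(scale=0.01, size=(rank, d_in))  # trainable down-projection
B = np.zeros((d_out, rank))                    # trainable up-projection, zero-initialized


def lora_forward(x: np.ndarray) -> np.ndarray:
    """Frozen path plus scaled low-rank update for a batch x of shape (batch, d_in)."""
    return x @ W0.T + (alpha / rank) * (x @ A.T) @ B.T


full_params = W0.size          # parameters full fine-tuning would update
lora_params = A.size + B.size  # parameters LoRA updates instead
print(f"trainable fraction: {lora_params / full_params:.2%}")
```

With rank 8 on a 512 x 512 layer, LoRA trains 8,192 parameters instead of 262,144, about 3%. Because `B` starts at zero, the adapted layer initially reproduces the frozen layer exactly, and fine-tuning only moves `A` and `B`.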

Integration with the broader data science ecosystem has also improved. Interoperability with Pandas for data preparation, MLflow for experiment tracking, and Gradio for demo creation makes Transformers a central component of modern NLP workflows. For data scientists working with text, which makes up a growing share of enterprise data, mastering Transformers is no longer optional.

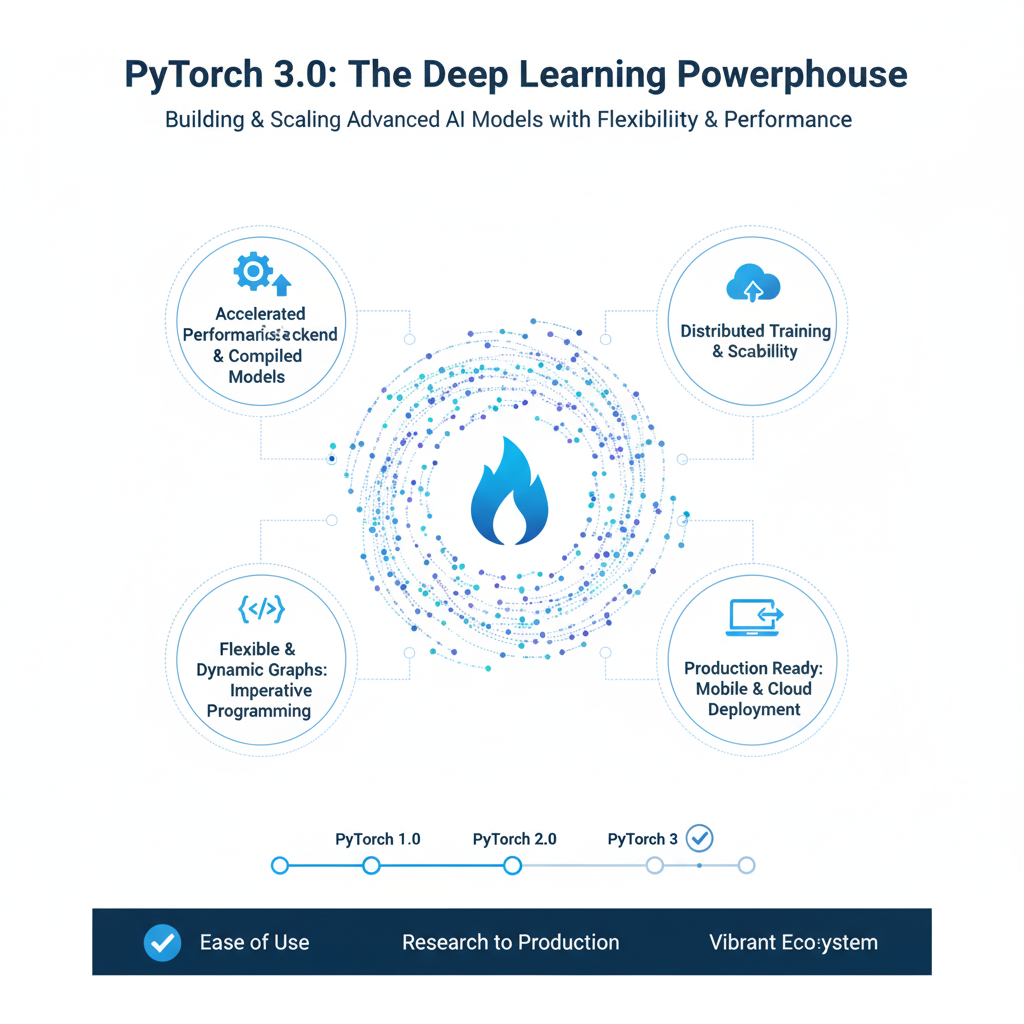

5. PyTorch 3.0: The Deep Learning Powerhouse

Enhanced Performance and Developer Experience

PyTorch remains one of the dominant libraries for deep learning, and version 3.0 introduces significant improvements in both performance and usability. The new compiler stack optimizes performance automatically without requiring changes to existing code, while enhanced distributed training makes it easier to scale model training across multiple GPUs or nodes. These advancements keep PyTorch competitive with specialized deep learning frameworks while preserving its signature flexibility and Pythonic interface.

The developer experience has been a key focus in PyTorch 3.0, with improved debugging capabilities, more informative error messages, and enhanced visualization tools. For data scientists transitioning from traditional machine learning to deep learning, these improvements lower the barrier to entry and reduce the time spent on troubleshooting. The expanded model zoo and pre-trained model collection make it easier to start with proven architectures rather than building models from scratch.

Expanded Domain Coverage and Specialized Architectures

While PyTorch has always excelled at computer vision and natural language processing, version 3.0 expands into new domains. Enhanced support for graph neural networks, time series forecasting models, and reinforcement learning algorithms makes PyTorch a more comprehensive solution for diverse deep learning applications. Data scientists can now tackle different kinds of deep learning problems with a single framework, reducing the cognitive load of learning several.

Integration with the wider scientific computing ecosystem has also been strengthened. Better interoperability with NumPy, Pandas, and traditional machine learning libraries creates smoother workflows that combine deep learning with other analytical approaches. For data scientists working on complex problems that require multiple modeling techniques, this interoperability is invaluable.

6. Streamlit 2.0: Interactive Applications Made Simple

Revolutionizing Model Deployment and Demonstration

Streamlit has transformed how data scientists share their work with stakeholders, and version 2.0 represents a significant evolution in capabilities and performance. As one of the most practical Python libraries for creating interactive web applications, Streamlit enables data scientists to build sophisticated dashboards and model interfaces without web development expertise. The latest version introduces performance improvements that make applications more responsive, especially when working with large datasets or complex visualizations.

The expanded widget library in Streamlit 2.0 provides more options for user interaction, from sophisticated file uploaders to dynamic forms that update based on user input. Caching has been enhanced to handle more complex scenarios, making it easier to build applications that stay responsive even during computationally intensive operations. For data scientists who need to demonstrate model capabilities or create self-service analytical tools, mastering Streamlit is increasingly important.

Enhanced Styling and Customization

While earlier versions of Streamlit focused on function over form, version 2.0 provides more options for customizing the appearance and layout of applications. The theming system makes it easy to match organizational branding, while expanded layout components enable more sophisticated application structures. These improvements make Streamlit suitable for production applications rather than just prototypes and internal tools.

Integration with other libraries has been a key focus in Streamlit 2.0. Better support for visualization libraries such as Plotly and Altair, machine learning libraries such as Scikit-learn and PyTorch, and data manipulation libraries such as Pandas and Polars creates a cohesive environment for building end-to-end data applications. For data scientists who want their impact to extend beyond building models to deploying usable tools, Streamlit is among the most valuable libraries to master.

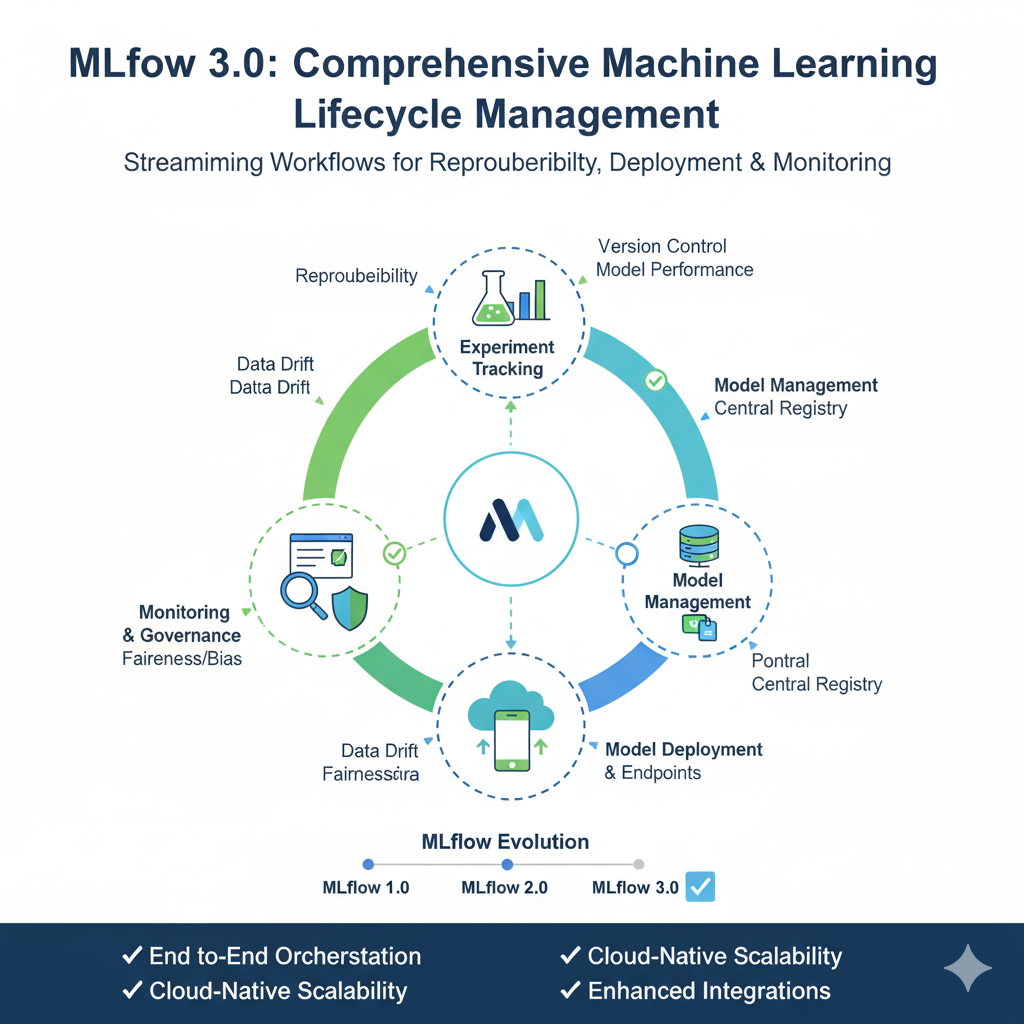

7. MLflow 3.0: Comprehensive Machine Learning Lifecycle Management

Unified Experiment Tracking and Model Management

MLflow has established itself as the standard for machine learning experiment tracking, and version 3.0 expands its capabilities to cover the entire machine learning lifecycle. As one of the most important Python libraries for managing machine learning projects, MLflow provides a unified framework for tracking experiments, packaging code, managing models, and monitoring deployed models. The latest version introduces enhanced collaboration features that make it easier for teams to work together on machine learning projects.

The model registry has been completely redesigned in MLflow 3.0, with improved workflows for model versioning, staging, and deployment. Integration with popular MLOps platforms and cloud services makes it easier to incorporate MLflow into existing infrastructure. For data science teams running production machine learning systems, MLflow provides the foundation for reproducible, maintainable, and scalable machine learning operations.

Enhanced Model Monitoring and Governance

As machine learning models move into production, monitoring their performance and ensuring compliance with regulatory requirements becomes increasingly important. MLflow 3.0 introduces comprehensive model monitoring capabilities that track prediction quality, data drift, and concept drift over time. The enhanced governance features provide audit trails for model changes and deployments, which is crucial for regulated industries.

The interoperability with other Python libraries in the machine learning ecosystem has been expanded in MLflow 3.0. Support for more model types, including large language models and deep learning architectures, makes it possible to use MLflow with virtually any model created using popular Python libraries. This flexibility ensures that MLflow can grow with your machine learning capabilities rather than constraining your choice of algorithms and frameworks.

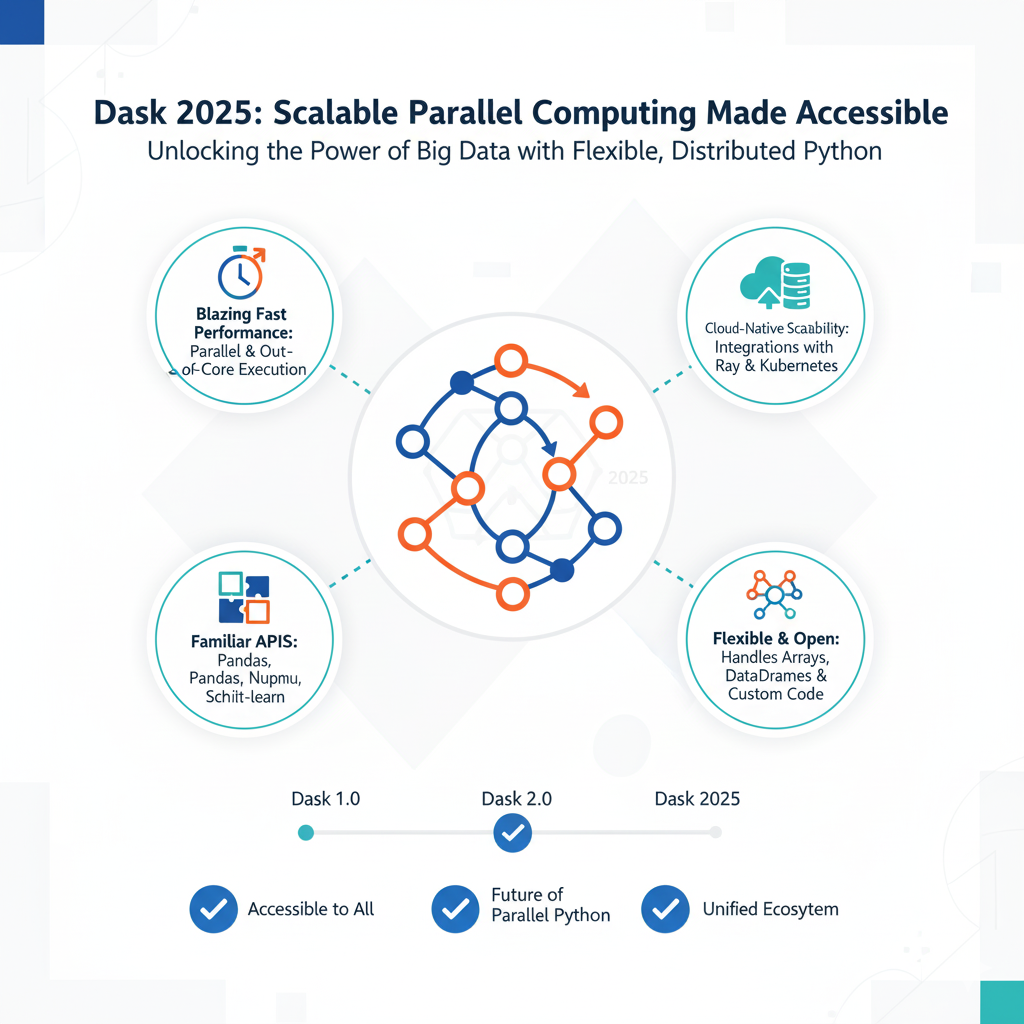

8. Dask 2025: Scalable Parallel Computing Made Accessible

Effortless Scaling for Computational Workloads

Dask continues to be one of the most powerful Python libraries for parallel and distributed computing, and its 2025 release introduces significant improvements in usability and performance. The ability to scale computations from a single laptop to large clusters without changing code makes Dask invaluable for data scientists working with datasets that are too large for single-machine processing but don’t require full big data infrastructure. The latest version reduces the overhead of parallel execution, making it efficient even for medium-sized datasets.

Integration with the rest of the ecosystem has been a key focus in the latest Dask release. Better support for Pandas, NumPy, and Scikit-learn means existing code can often be parallelized with minimal changes. The Dask DataFrame API mirrors the Pandas API so closely that many data manipulation workflows can be scaled simply by importing dask.dataframe as dd in place of pandas as pd, then calling .compute() when a concrete result is needed, since Dask builds its computations lazily. This low barrier to adoption makes Dask one of the most practical tools for scaling data science workflows.

Advanced Scheduling and Resource Management

The Dask scheduler has been completely redesigned in the 2025 release, with improved performance for complex computational graphs and better resource management. The new scheduler can dynamically allocate resources based on computational requirements, making more efficient use of available hardware. For data scientists working in resource-constrained environments or cloud platforms where compute costs matter, these improvements can significantly reduce execution time and cost.

The monitoring and diagnostic capabilities have been enhanced, providing more insight into parallel execution and making performance bottlenecks easier to identify. Jupyter integration has also improved, with better progress tracking and interactive debugging of parallel computations. For data scientists facing computationally intensive tasks, mastering Dask can dramatically improve productivity and make analysis of larger datasets feasible.
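The built-in `ProgressBar` from `dask.diagnostics` is one long-standing way to watch local computations. The sketch assumes Dask's default single-machine scheduler; clusters managed by `dask.distributed` report progress through their web dashboard instead.

```python
import dask.array as da
from dask.diagnostics import ProgressBar

# A 2000 x 2000 array of ones, split into 16 chunks of 500 x 500
x = da.ones((2000, 2000), chunks=(500, 500))

# ProgressBar prints progress while the local scheduler executes the task graph
with ProgressBar():
    total = (x + 1).sum().compute()

print(total)
```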

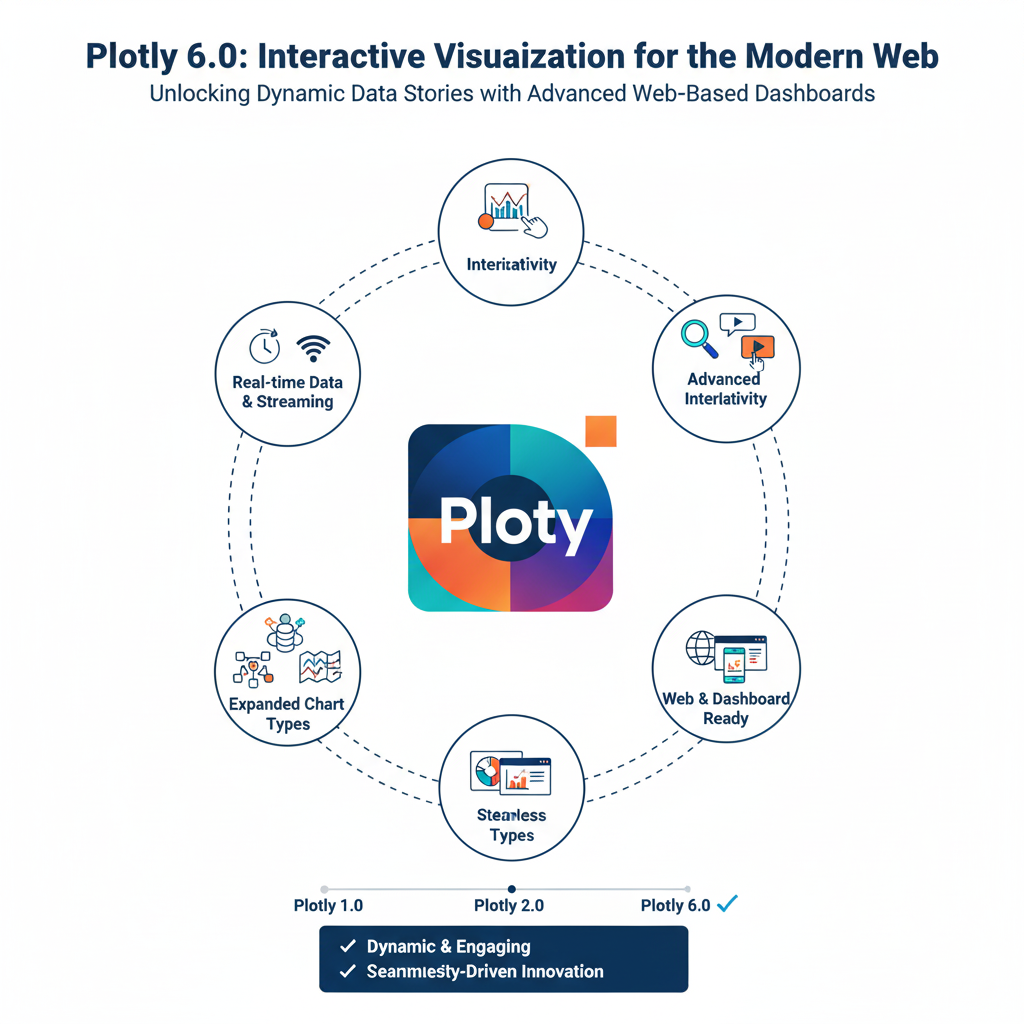

9. Plotly 6.0: Interactive Visualization for the Modern Web

Advanced Customization and Interactivity

Plotly has long been one of the most popular Python libraries for creating interactive visualizations, and version 6.0 introduces unprecedented flexibility and customization options. The new version provides more control over every aspect of visualization, from color schemes and layouts to interactive behaviors and animation. The expanded chart types include specialized visualizations for hierarchical data, network analysis, and high-dimensional data exploration.

The performance improvements in Plotly 6.0 make it possible to create interactive visualizations with millions of data points without sacrificing responsiveness. The WebGL rendering backend has been optimized for complex visualizations, and new partial-update capabilities refresh existing visualizations without a complete redraw. For data scientists building dashboards or exploratory data analysis tools, Plotly provides the foundation for engaging and informative data presentations.

Seamless Integration with Modern Data Science Workflows

Plotly 6.0 features enhanced integration with other Python libraries in the data science ecosystem. The Express API has been expanded to cover more chart types while maintaining its signature simplicity, making it easy to create sophisticated visualizations with minimal code. The integration with Jupyter widgets enables more interactive notebook experiences, while the improved Dash integration makes it easier to build complex web applications around Plotly visualizations.

The theming system has been completely redesigned, with better support for custom color scales, templates, and layout presets. New annotation capabilities make it easier to highlight specific data points or regions, which is particularly valuable when communicating insights to non-technical stakeholders. For data scientists who need to present their findings effectively, fluency with visualization tools like Plotly matters as much as analytical skill.

10. Evidently AI 2.0: Comprehensive Model Monitoring and Evaluation

Proactive Model Performance Management

Evidently AI has emerged as one of the most comprehensive Python libraries for model monitoring and evaluation, and version 2.0 introduces significant advancements in detecting and diagnosing model performance issues. The library provides a unified framework for evaluating data quality, data drift, target drift, and model performance across the entire machine learning lifecycle. The latest version introduces more sophisticated drift detection algorithms that can identify subtle changes in data distributions that might affect model performance.
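Evidently's API is not reproduced here. Instead, this NumPy sketch shows one statistic commonly used to detect drift in a numerical feature: the two-sample Kolmogorov-Smirnov (KS) statistic, which measures the largest gap between the empirical distributions of reference and current data. The simulated mean shift and the 0.1 alerting threshold are illustrative assumptions.

```python
import numpy as np


def ks_statistic(reference, current) -> float:
    """Two-sample KS statistic: the largest gap between the two empirical CDFs."""
    ref = np.sort(np.asarray(reference, dtype=float))
    cur = np.sort(np.asarray(current, dtype=float))
    # Evaluate both empirical CDFs at every observed value
    grid = np.concatenate([ref, cur])
    cdf_ref = np.searchsorted(ref, grid, side="right") / ref.size
    cdf_cur = np.searchsorted(cur, grid, side="right") / cur.size
    return float(np.max(np.abs(cdf_ref - cdf_cur)))


rng = np.random.default_rng(42)
reference = rng.normal(loc=0.0, scale=1.0, size=1_000)  # e.g. feature values at training time
current = rng.normal(loc=0.5, scale=1.0, size=1_000)    # production values after a mean shift

DRIFT_THRESHOLD = 0.1  # hypothetical alerting threshold
drift_score = ks_statistic(reference, current)
print(f"KS statistic = {drift_score:.3f}, drift detected: {drift_score > DRIFT_THRESHOLD}")
```

Production tools generally convert such statistics into p-values or compare them against per-feature thresholds, and use different tests for categorical columns.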

The new dashboard capabilities in Evidently AI 2.0 make it easier to monitor model performance over time and spot trends that may signal emerging issues. Integration with popular workflow schedulers and MLOps platforms enables automated model evaluation as part of production pipelines. For data scientists responsible for maintaining models in production, Evidently provides essential tools for keeping those models reliable and performant.

Enhanced Reporting and Communication

Evidently AI 2.0 features completely redesigned reporting capabilities that make it easier to communicate model health and performance to technical and non-technical stakeholders. The new report templates provide clear, actionable insights into model behavior, with specific recommendations for addressing identified issues. The expanded metric collection includes business-focused metrics that align model evaluation with organizational objectives.

The integration with other Python libraries has been expanded, with better support for models created using Scikit-learn, XGBoost, LightGBM, and deep learning frameworks. The library can evaluate models regardless of the Python libraries used for training, making it a universal solution for model monitoring. For data science teams working with diverse modeling approaches, this flexibility is invaluable.

Conclusion: Strategic Library Selection for Maximum Impact

The landscape of Python libraries for data science continues to evolve rapidly, with new tools emerging and established libraries gaining new capabilities. The most successful data scientists in 2025 will be those who strategically select and master the libraries that best address their specific challenges and opportunities. This requires not only technical proficiency with individual libraries but also an understanding of how they work together to form cohesive, efficient data science workflows.

The libraries discussed in this article represent the current state of the art in data science tooling, but the ecosystem will keep evolving. Staying current requires continuous learning and experimentation. The most valuable approach is to build a deep understanding of foundational libraries while staying flexible enough to adopt new tools as they prove their value.

Ultimately, mastering these libraries transforms how you approach data science problems, enabling more sophisticated analyses, more efficient workflows, and more impactful results. By investing in them, you position yourself at the forefront of data science practice, ready to tackle the complex analytical challenges of 2025 and beyond.