Master Probability and Distributions with our comprehensive guide. Learn 7 essential concepts that form the foundation of data science, from conditional probability to information theory, with practical examples and applications.

Introduction: The Bedrock of Data Science

In the rapidly evolving landscape of data science, where new algorithms and techniques emerge almost daily, one fundamental truth remains constant: a deep understanding of Probability and Distributions forms the unshakable foundation upon which all reliable data analysis is built. The year 2024 has seen unprecedented growth in artificial intelligence and machine learning applications, yet the principles governing Probability and Distributions remain as relevant as ever. These mathematical concepts are not just academic exercises; they are the essential tools that enable data scientists to make sense of uncertainty, draw meaningful conclusions from data, and build models that genuinely work in the real world.

The journey through Probability and Distributions represents a critical path every aspiring data scientist must travel. Whether you’re conducting A/B tests for a tech giant, building recommendation systems for e-commerce platforms, or developing sophisticated neural networks, the principles of Probability and Distributions will be your constant companions. This comprehensive guide explores seven powerful concepts in Probability and Distributions that separate competent data scientists from exceptional ones, providing both theoretical understanding and practical applications that you can immediately implement in your work.

The Critical Importance of Probability and Distributions in Modern Data Science

Before diving into specific concepts, it’s crucial to understand why Probability and Distributions maintain such a central position in data science. In an era dominated by big data and complex algorithms, one might assume that raw computational power could overcome theoretical limitations. However, the opposite has proven true: as our datasets grow larger and our models more complex, the principles of Probability and Distributions become increasingly important for several reasons.

First, Probability and Distributions provide the language and framework for dealing with uncertainty. In the real world, data is never perfect, measurements contain errors, and future outcomes are inherently unpredictable. The mathematical framework of Probability and Distributions gives us the tools to quantify this uncertainty, to measure our confidence in predictions, and to make decisions despite incomplete information. Without this framework, data science would be reduced to pattern matching without understanding.

Second, modern machine learning is fundamentally built upon concepts from Probability and Distributions. From the loss functions we optimize to the regularization techniques we apply, from Bayesian neural networks to generative adversarial networks, nearly every advanced concept in machine learning has deep roots in probability theory. Understanding Probability and Distributions isn’t just helpful for passing interviews; it’s essential for innovating and advancing the field itself.

Third, as data science becomes more integrated into critical decision-making processes in healthcare, finance, and public policy, the ability to properly quantify and communicate uncertainty becomes a professional and ethical imperative. Misunderstanding or misapplying concepts from Probability and Distributions can lead to catastrophic consequences, whether in medical diagnoses, investment strategies, or policy decisions.

Throughout this article, we’ll explore how mastering Probability and Distributions enables data scientists to build better models, draw more reliable conclusions, and communicate findings more effectively. Each concept will be illustrated with practical examples and real-world applications that demonstrate why these fundamentals remain indispensable in an age of increasingly sophisticated AI systems.

Concept 1: Conditional Probability and Bayes’ Theorem – The Foundation of Modern Inference

The Mathematical Framework

Conditional probability forms the cornerstone of statistical inference and represents one of the most crucial concepts in Probability and Distributions. Formally, the conditional probability of event A given event B is defined as:

P(A|B) = P(A ∩ B) / P(B), provided P(B) > 0

This simple formula belies profound implications. Bayes’ Theorem, which follows directly from the definition of conditional probability, provides a systematic framework for updating beliefs in light of new evidence:

P(A|B) = [P(B|A) * P(A)] / P(B)

Where:

- P(A|B) is the posterior probability

- P(B|A) is the likelihood

- P(A) is the prior probability

- P(B) is the evidence or marginal probability

Real-World Applications and Examples

In practical data science, conditional probability and Bayes’ Theorem find applications across numerous domains. Consider spam email classification, where we want to calculate the probability that an email is spam given that it contains certain words. Using Bayes’ Theorem, we can update our belief about an email being spam as we analyze its content.

Another compelling application lies in medical diagnostics. Suppose a disease affects 1% of the population, and a test for this disease has 99% sensitivity and 99% specificity. In a group of 10,000 people, about 100 have the disease and 99 of them test positive, while about 99 of the 9,900 healthy people also test positive. Bayes’ Theorem therefore gives a positive result only a 50% chance of indicating actual disease, a result that often surprises those unfamiliar with conditional probability.
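The diagnostic example can be checked numerically. The sketch below applies Bayes’ Theorem to the figures quoted above; the function name `posterior_positive` is an illustrative choice, not something from a library.

```python
def posterior_positive(prevalence: float, sensitivity: float, specificity: float) -> float:
    """Return P(disease | positive test) using Bayes' Theorem."""
    # Numerator: P(positive | disease) * P(disease)
    true_pos = sensitivity * prevalence
    # False positives: P(positive | no disease) * P(no disease)
    false_pos = (1.0 - specificity) * (1.0 - prevalence)
    # Denominator is the evidence P(positive) = true_pos + false_pos
    return true_pos / (true_pos + false_pos)


ppv = posterior_positive(prevalence=0.01, sensitivity=0.99, specificity=0.99)
print(f"P(disease | positive) = {ppv:.3f}")
```

Raising the prevalence argument shows how strongly the posterior depends on the prior, which is the point of the example.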

In recommendation systems, conditional probability enables platforms to suggest products based on what similar users have purchased. The probability that a user will buy product B given that they purchased product A can be calculated using historical purchase data, forming the basis for collaborative filtering algorithms.
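As a rough illustration of the purchase example, P(B|A) can be estimated as the share of baskets containing A that also contain B. The basket data and the helper `conditional_purchase_prob` below are made up for this sketch.

```python
# Hypothetical purchase history: each set is one customer's basket.
baskets = [
    {"laptop", "mouse"},
    {"laptop", "mouse", "bag"},
    {"laptop"},
    {"mouse"},
    {"laptop", "bag"},
]


def conditional_purchase_prob(baskets, given, target):
    """Estimate P(target bought | given bought) from co-occurrence counts."""
    with_given = [b for b in baskets if given in b]
    if not with_given:
        raise ValueError(f"no basket contains {given!r}, so P(B) = 0")
    both = sum(1 for b in with_given if target in b)
    return both / len(with_given)


p_mouse_given_laptop = conditional_purchase_prob(baskets, "laptop", "mouse")
print(f"P(mouse | laptop) = {p_mouse_given_laptop:.2f}")
```

Real collaborative filtering systems add smoothing and similarity weighting on top of counts like these; this only shows the underlying conditional probability.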

Advanced Implications for Machine Learning

The principles of conditional probability extend far beyond these basic applications into the core of modern machine learning. Bayesian neural networks represent a sophisticated application where weights are treated as probability distributions rather than fixed values. This approach allows for better uncertainty quantification and improved performance on small datasets.

In natural language processing, concepts from Probability and Distributions enable language models to generate coherent text by calculating the probability of each subsequent word given the previous context. The entire field of probabilistic graphical models builds upon the foundation of conditional probability to represent complex relationships between variables.

Understanding conditional probability also helps data scientists avoid common pitfalls like the prosecutor’s fallacy in statistical reasoning, where P(A|B) is mistakenly equated with P(B|A). This misunderstanding has led to numerous errors in both legal proceedings and data analysis, highlighting the practical importance of mastering this fundamental concept.

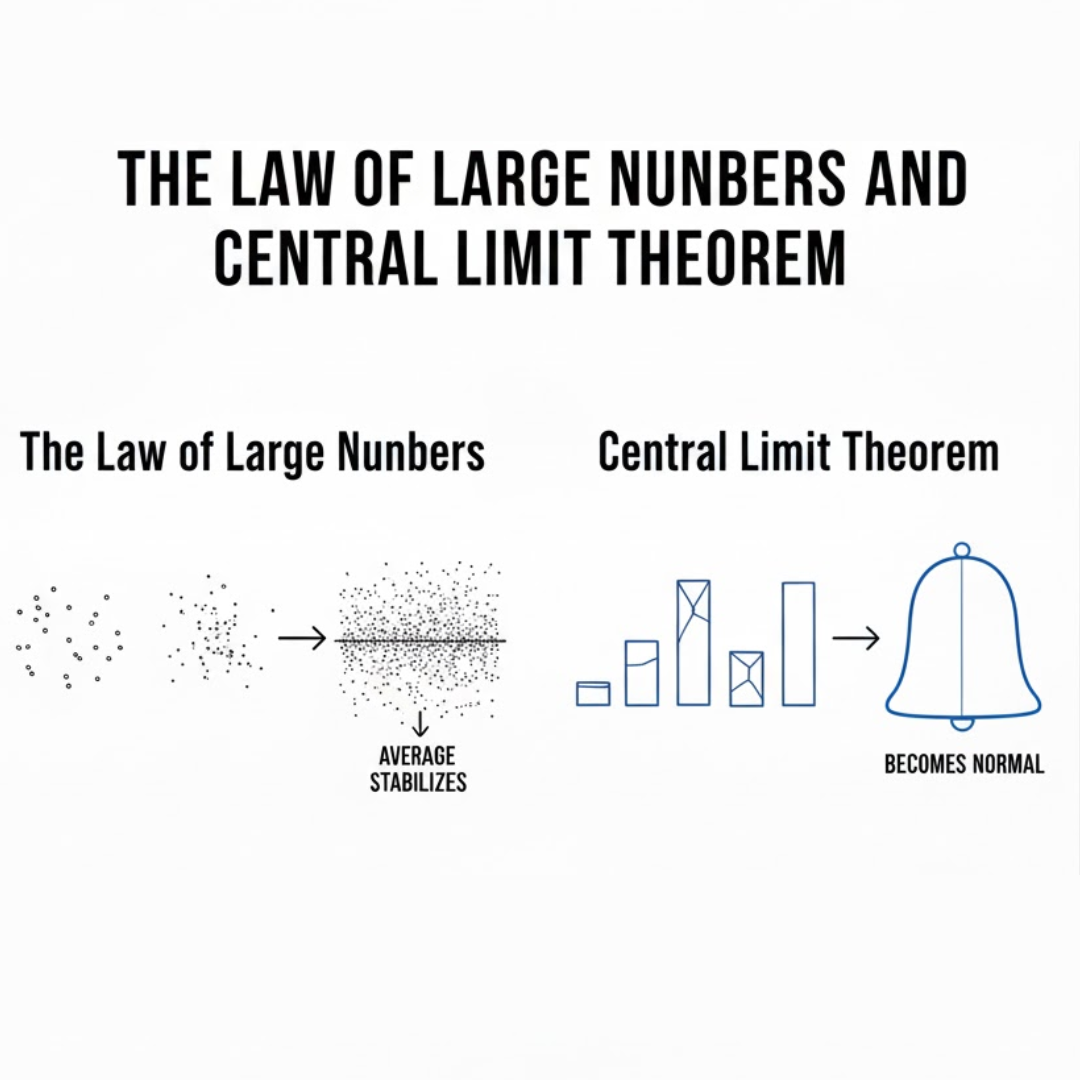

Concept 2: The Law of Large Numbers and Central Limit Theorem – The Pillars of Statistical Stability

Mathematical Foundations

The Law of Large Numbers (LLN) and Central Limit Theorem (CLT) represent two of the most important convergence theorems in Probability and Distributions. The LLN states that, for independent and identically distributed observations with a finite expected value, the sample mean converges to that expected value as the sample size increases:

(1/n) * ΣX_i → E[X] as n → ∞

The CLT provides even more powerful insights: for independent, identically distributed observations with finite variance σ², the standardized sample mean converges in distribution to a standard normal as the sample size increases, whatever the shape of the underlying distribution:

√n(X̄ - μ) / σ → N(0,1) in distribution as n → ∞

Practical Applications in Data Science

These theorems have profound implications for practical data science. The LLN justifies why we can trust that larger samples will provide better estimates of population parameters. This principle underpins the validity of Monte Carlo methods, where we approximate complex integrals or expectations through random sampling.
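A classic way to see the LLN at work in a Monte Carlo method is estimating π from random points in the unit square. This is a minimal sketch using only the standard library; the sample sizes and seed are arbitrary choices.

```python
import math
import random


def estimate_pi(n_samples: int, seed: int = 0) -> float:
    """Monte Carlo estimate of pi: 4 * fraction of points inside the quarter circle."""
    rng = random.Random(seed)
    inside = 0
    for _ in range(n_samples):
        x, y = rng.random(), rng.random()
        if x * x + y * y <= 1.0:
            inside += 1
    return 4.0 * inside / n_samples


for n in (100, 10_000, 200_000):
    estimate = estimate_pi(n)
    print(f"n={n:>7}: estimate={estimate:.4f}, abs error={abs(estimate - math.pi):.4f}")
```

The error tends to shrink roughly like 1/√n, so each extra digit of accuracy costs about 100 times more samples.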

In A/B testing, the CLT ensures that even if our underlying metric (like conversion rate) follows a non-normal distribution, the distribution of sample means will be approximately normal for sufficiently large samples. This normality allows us to use familiar statistical tests and construct confidence intervals using z-scores.

Consider a practical example from e-commerce: when estimating the average transaction value for a website, individual transactions might follow a highly skewed distribution with most transactions being small but a few being very large. Thanks to the CLT, the sampling distribution of the mean transaction value becomes approximately normal once the sample is large enough, enabling reliable inference about the population mean. The familiar rule of thumb of 30 to 50 observations works for mildly skewed data; highly skewed transaction data can require hundreds or thousands of observations.

Advanced Considerations and Limitations

While these theorems are powerful, understanding their limitations is equally important. The rate of convergence depends on the underlying distribution—heavily skewed or fat-tailed distributions require larger sample sizes for the CLT to provide good approximations.
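The dependence on skewness can be seen in a small simulation. The sketch below draws repeated samples from an Exponential(1) distribution (skewness 2) and measures how skewed the resulting sample means still are; the repeat count and sample sizes are arbitrary assumptions.

```python
import random


def skewness(values):
    """Sample skewness: third central moment divided by variance ** 1.5."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((v - mean) ** 2 for v in values) / n
    m3 = sum((v - mean) ** 3 for v in values) / n
    return m3 / m2 ** 1.5


def skew_of_sample_means(sample_size, n_repeats=3000, seed=0):
    """Skewness of the distribution of means of Exponential(1) samples."""
    rng = random.Random(seed)
    means = [
        sum(rng.expovariate(1.0) for _ in range(sample_size)) / sample_size
        for _ in range(n_repeats)
    ]
    return skewness(means)


skew_by_n = {n: skew_of_sample_means(n) for n in (5, 30, 200)}
for n, s in skew_by_n.items():
    # For i.i.d. draws the skewness of the mean is (population skewness) / sqrt(n).
    print(f"n={n:>3}: skewness of sample means = {s:.3f} (theory: {2 / n ** 0.5:.3f})")
```

A skewness near zero indicates the normal approximation is becoming reasonable; for a more skewed population the same level is reached only at larger n.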

In Bayesian statistics, arguments built on the LLN explain why posterior distributions typically become increasingly concentrated around the true parameter values as more data is observed, provided the model is well specified and the prior does not rule those values out. This principle forms the basis for Bayesian learning and updating of beliefs.

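Modern applications of these theorems extend to stochastic gradient descent in deep learning. A mini-batch gradient is an average over randomly sampled examples, so the LLN explains why it approximates the full gradient, and the CLT suggests its noise is roughly Gaussian with variance shrinking in proportion to the batch size, enabling efficient training of large neural networks.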

Concept 3: Key Probability Distributions and Their Applications

The Discrete Distribution Family

Understanding the characteristics and applications of specific probability distributions is essential for proper model selection and interpretation. The Bernoulli distribution models binary outcomes and forms the foundation for logistic regression:

P(X = k) = p^k * (1-p)^(1-k) for k ∈ {0,1}

The Binomial distribution extends this to multiple independent trials, crucial for modeling counts of successes in fixed numbers of trials. The Poisson distribution, characterized by its single parameter λ, models counts of events occurring in fixed intervals of time or space and finds applications in queueing theory, website traffic modeling, and rare event analysis.

The Continuous Distribution Spectrum

The Normal distribution, with its familiar bell curve, appears throughout statistics and machine learning due to the CLT. Its properties are well-understood, and it serves as the default assumption for many statistical tests:

f(x) = (1/√(2πσ²)) * e^(-(x-μ)²/(2σ²))

The Exponential distribution models time between events in Poisson processes and possesses the memoryless property, making it suitable for reliability engineering and survival analysis. The Beta distribution, defined on [0,1], serves as the conjugate prior for Bernoulli and Binomial distributions, playing a crucial role in Bayesian analysis.
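The memoryless property says that P(X > s + t | X > s) = P(X > t). A quick numerical check, using the Exponential survival function P(X > t) = e^(-λt) with arbitrarily chosen values for the rate and the two times:

```python
import math


def exp_survival(t: float, rate: float) -> float:
    """P(X > t) for X ~ Exponential(rate)."""
    return math.exp(-rate * t)


rate, s, t = 0.5, 3.0, 2.0

# P(X > s + t | X > s) = P(X > s + t) / P(X > s)
conditional = exp_survival(s + t, rate) / exp_survival(s, rate)
unconditional = exp_survival(t, rate)

print(f"P(X > s+t | X > s) = {conditional:.6f}")
print(f"P(X > t)           = {unconditional:.6f}")
```

Having already waited s units does not change the distribution of the remaining wait, which is why the two numbers agree.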

Specialized Distributions for Advanced Applications

Beyond these fundamental distributions, data scientists frequently encounter specialized distributions tailored to specific domains. The Power Law distribution models phenomena where small events are common but large events are rare, appearing in social networks, word frequency, and wealth distribution.

In financial modeling, the Log-Normal distribution is a common model for stock prices, as it ensures values remain positive while allowing for multiplicative growth. The Gamma distribution is a flexible model for waiting times and is also the conjugate prior for the rate parameter of the Poisson distribution.

Understanding the relationships between distributions provides data scientists with intuition for selecting appropriate models and understanding their limitations. Two useful examples: the Poisson approximates the Binomial when the number of trials is large and the success probability is small (with λ = np), and the t-distribution approaches the Normal as degrees of freedom increase.
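The Binomial and Poisson relationship is easy to verify numerically. In this sketch, n = 1000 and p = 0.003 are arbitrary values chosen so that n is large and p is small; the Poisson rate is set to λ = np.

```python
import math


def binomial_pmf(k: int, n: int, p: float) -> float:
    """P(X = k) for X ~ Binomial(n, p)."""
    return math.comb(n, k) * p ** k * (1 - p) ** (n - k)


def poisson_pmf(k: int, lam: float) -> float:
    """P(X = k) for X ~ Poisson(lam)."""
    return math.exp(-lam) * lam ** k / math.factorial(k)


n, p = 1000, 0.003
lam = n * p  # matching the means of the two distributions

for k in range(0, 7):
    print(f"k={k}: binomial={binomial_pmf(k, n, p):.5f}  poisson={poisson_pmf(k, lam):.5f}")

# Largest pointwise gap between the two pmfs over k = 0..20
max_gap = max(abs(binomial_pmf(k, n, p) - poisson_pmf(k, lam)) for k in range(21))
print(f"max gap: {max_gap:.6f}")
```

Increasing p while keeping n fixed makes the gap grow, which shows where the approximation stops being safe.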

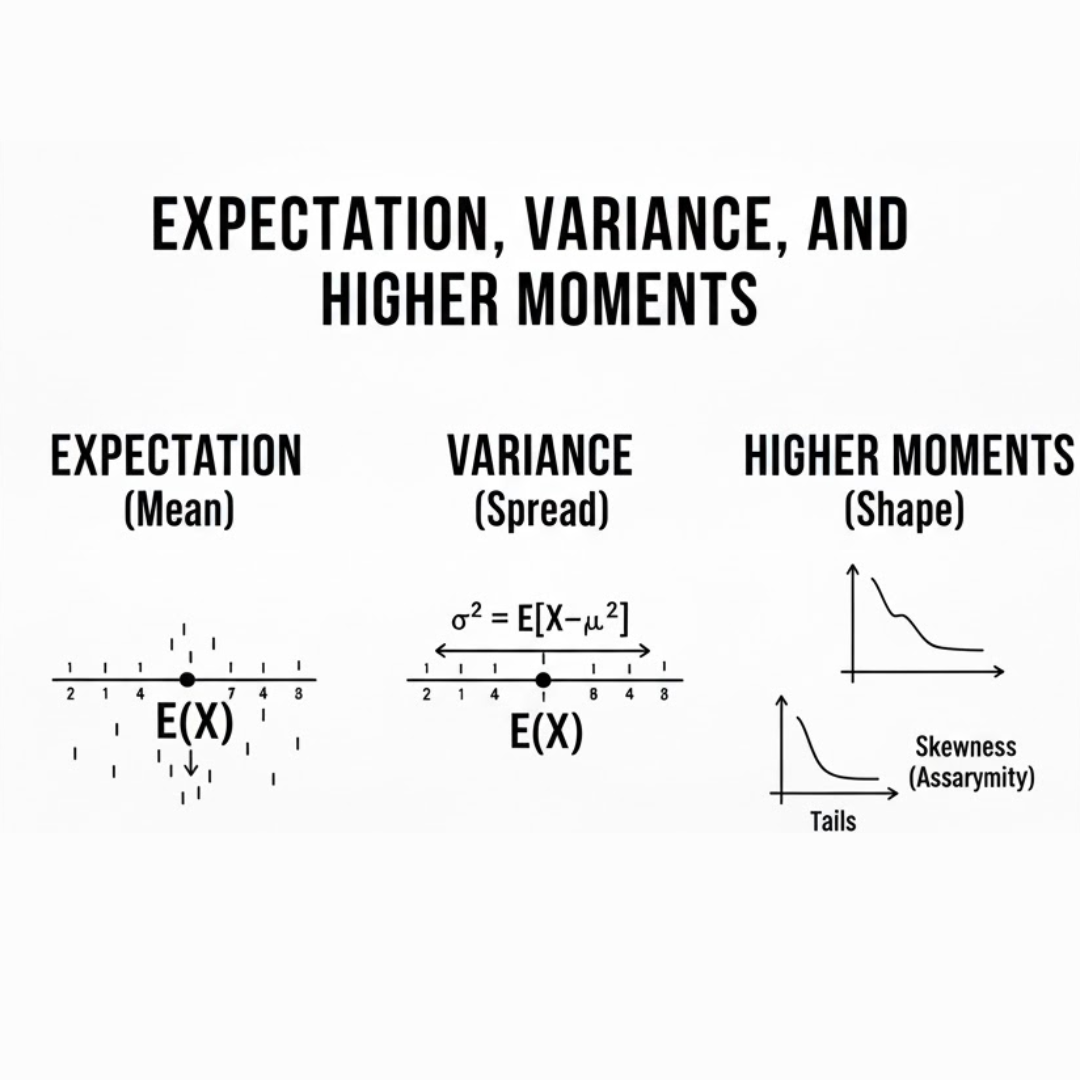

Concept 4: Expectation, Variance, and Higher Moments – Characterizing Distributions

Fundamental Concepts and Properties

The expected value (mean) of a random variable provides a measure of central tendency, while variance measures dispersion around this mean. For a discrete random variable:

E[X] = Σx_i * p(x_i)

Var(X) = E[(X - E[X])²] = E[X²] - (E[X])²

These concepts extend to continuous random variables through integration. Understanding the linearity of expectation—that E[aX + bY] = aE[X] + bE[Y] regardless of whether X and Y are independent—proves incredibly useful in derivations and calculations.
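These definitions can be worked through exactly for a fair six-sided die. The sketch uses `fractions.Fraction` to avoid rounding, and picks Y = 7 - X as a deliberately dependent variable to illustrate that linearity of expectation does not need independence.

```python
from fractions import Fraction

# pmf of a fair six-sided die: each face has probability 1/6
die = {face: Fraction(1, 6) for face in range(1, 7)}


def expectation(pmf, g=lambda x: x):
    """E[g(X)] = sum of g(x) * p(x) over the support."""
    return sum(g(x) * p for x, p in pmf.items())


def variance(pmf):
    """Var(X) = E[X^2] - (E[X])^2."""
    mean = expectation(pmf)
    return expectation(pmf, lambda x: x * x) - mean ** 2


mean_x = expectation(die)   # 7/2
var_x = variance(die)       # 35/12

# Y = 7 - X is completely determined by X, yet linearity still holds:
lhs = expectation(die, lambda x: 2 * x + 3 * (7 - x))           # E[2X + 3Y]
rhs = 2 * mean_x + 3 * expectation(die, lambda x: 7 - x)        # 2E[X] + 3E[Y]

print(f"E[X] = {mean_x}, Var(X) = {var_x}, E[2X + 3Y] = {lhs}")
```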

Practical Significance in Model Evaluation

In machine learning, these moments play crucial roles in model development and evaluation. The bias-variance tradeoff, fundamental to understanding model performance, directly relates to these concepts. Bias measures how far the expected model predictions are from the true values, while variance measures how much these predictions vary across different training sets.

Regularization techniques in machine learning explicitly manipulate this tradeoff, reducing variance at the cost of increased bias. Understanding this balance helps data scientists select appropriate model complexity and regularization strength.

In financial applications, expected return and variance form the basis of modern portfolio theory, while higher moments like skewness and kurtosis provide insights into the asymmetry and tail risk of investment returns.

Advanced Applications and Extensions

Conditional expectation extends these ideas to more complex scenarios. In reinforcement learning, the value function represents the expected cumulative reward from a given state, guiding optimal decision-making.

The law of total variance (Var(Y) = E[Var(Y|X)] + Var(E[Y|X])) provides insights into variance decomposition, useful in analysis of variance (ANOVA) and understanding sources of variability in hierarchical models.
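The decomposition can be verified exactly on a small joint distribution. The joint pmf below is invented for illustration; X plays the role of a group label and Y the measured outcome.

```python
from fractions import Fraction as F

# Hypothetical joint pmf P(X = x, Y = y)
joint = {(0, 1): F(1, 4), (0, 3): F(1, 4), (1, 2): F(1, 8), (1, 10): F(3, 8)}


def mean_var(pmf):
    """Return (mean, variance) of a pmf given as {value: probability}."""
    mean = sum(v * p for v, p in pmf.items())
    var = sum((v - mean) ** 2 * p for v, p in pmf.items())
    return mean, var


# Marginal distributions of Y and X
marg_y, marg_x = {}, {}
for (x, y), p in joint.items():
    marg_y[y] = marg_y.get(y, 0) + p
    marg_x[x] = marg_x.get(x, 0) + p

overall_mean, total_var = mean_var(marg_y)

# Conditional mean and variance of Y within each value of X
cond = {}
for x, px in marg_x.items():
    cond_pmf = {y: p / px for (xx, y), p in joint.items() if xx == x}
    cond[x] = mean_var(cond_pmf)

within = sum(px * cond[x][1] for x, px in marg_x.items())                          # E[Var(Y|X)]
between = sum(px * (cond[x][0] - overall_mean) ** 2 for x, px in marg_x.items())   # Var(E[Y|X])

print(f"Var(Y) = {total_var}, E[Var(Y|X)] = {within}, Var(E[Y|X]) = {between}")
```

The "within" term is the variability left inside each group and the "between" term is the variability of the group means, the same split that ANOVA relies on.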

Covariance and correlation, as extensions of variance to multiple variables, form the foundation for understanding relationships between features and play crucial roles in dimensionality reduction techniques like principal component analysis.

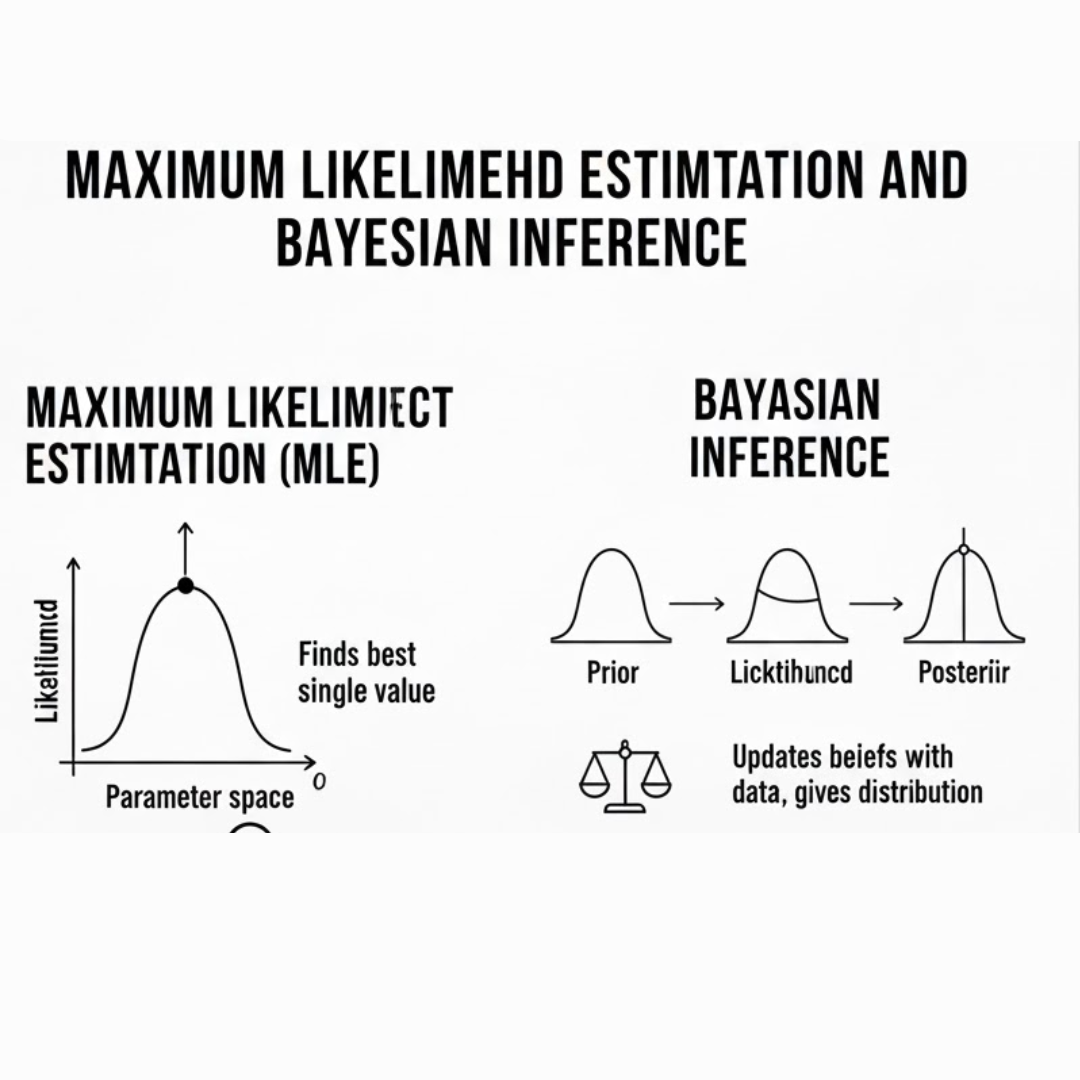

Concept 5: Maximum Likelihood Estimation and Bayesian Inference – The Two Paradigms of Statistical Learning

Frequentist Approach: Maximum Likelihood Estimation

Maximum Likelihood Estimation (MLE) represents the cornerstone of frequentist statistics. Given observed data and a model parameterized by θ, the likelihood function L(θ|x) is the probability (or probability density, for continuous data) of the observed data under the parameters, viewed as a function of θ. The MLE principle selects the parameter values that maximize this likelihood:

θ_MLE = argmax_θ L(θ|x)

In practice, we often work with the log-likelihood, which transforms products into sums and is mathematically more convenient. The exponential family of distributions, which includes many common distributions like Normal, Poisson, and Bernoulli, possesses particularly nice properties for MLE: the log-likelihood is concave in the natural parameters, so any maximum found is a global one, and it is unique when the parameterization is minimal.
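For a concrete case, the sketch below maximizes the Bernoulli log-likelihood over a grid and compares the result with the closed-form MLE, the sample mean. The ten observations and the grid resolution are arbitrary assumptions; a real implementation would use a numerical optimizer or the closed form directly.

```python
import math

# Hypothetical binary outcomes (1 = success)
data = [1, 0, 1, 1, 0, 1, 1, 1, 0, 1]


def bernoulli_log_likelihood(p: float, data) -> float:
    """Sum of log P(x | p) over observations; products become sums in log space."""
    return sum(math.log(p) if x == 1 else math.log(1 - p) for x in data)


# Grid search over p in (0, 1); endpoints are excluded because log(0) is undefined
grid = [i / 1000 for i in range(1, 1000)]
p_grid = max(grid, key=lambda p: bernoulli_log_likelihood(p, data))

# Closed-form MLE for a Bernoulli parameter is the sample mean
p_closed_form = sum(data) / len(data)

print(f"grid-search MLE = {p_grid:.3f}, closed-form MLE = {p_closed_form:.3f}")
```

Dividing the negated log-likelihood by the number of observations gives the binary cross-entropy loss, which is why minimizing that loss is a form of maximum likelihood.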

Bayesian Approach: Incorporating Prior Knowledge

Bayesian inference takes a different philosophical approach, treating parameters as random variables with associated probability distributions. Bayes’ Theorem provides the mechanism for updating prior beliefs about parameters in light of observed data:

P(θ|x) = [P(x|θ) * P(θ)] / P(x)

The posterior distribution P(θ|x) combines prior knowledge with observed data, providing a complete summary of our updated beliefs about the parameters. This approach naturally incorporates uncertainty in parameter estimates and enables sequential updating as new data arrives.
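Sequential updating is simplest to see with a conjugate pair. The sketch below assumes a Beta(1, 1) prior on a Bernoulli success probability and two made-up batches of observations; with a Beta prior, the posterior is again a Beta whose parameters are the prior counts plus the observed successes and failures.

```python
def update_beta(alpha, beta, observations):
    """Posterior Beta parameters after observing a list of 0/1 outcomes."""
    successes = sum(observations)
    failures = len(observations) - successes
    return alpha + successes, beta + failures


prior = (1, 1)                 # uniform prior on the success probability
batch_1 = [1, 0, 1, 1, 0]      # 3 successes, 2 failures
batch_2 = [1, 1, 1, 0, 1]      # 4 successes, 1 failure

after_1 = update_beta(*prior, batch_1)      # (4, 3)
after_2 = update_beta(*after_1, batch_2)    # (8, 4)

# Updating once with all the data gives the same posterior
all_at_once = update_beta(*prior, batch_1 + batch_2)

posterior_mean = after_2[0] / sum(after_2)
print(f"posterior: Beta{after_2}, mean = {posterior_mean:.3f}")
```

Yesterday's posterior serves as today's prior, so the order and batching of the data do not change the final result.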

Practical Implementation and Tradeoffs

In practice, MLE often proves computationally simpler and is the default approach for many machine learning algorithms. For example, minimizing cross-entropy loss in a classification problem is equivalent to maximizing the likelihood of the observed labels under the model’s predicted class probabilities.

Bayesian methods, while computationally more intensive, provide several advantages: they naturally handle small datasets, incorporate domain knowledge through priors, and provide full posterior distributions rather than point estimates. Modern computational techniques like Markov Chain Monte Carlo (MCMC) and variational inference have made Bayesian methods practical for complex models.

The choice between these paradigms depends on the problem context, available prior information, computational resources, and how the results will be used. Understanding both approaches equips data scientists with a more complete toolkit for different scenarios.

Concept 6: Stochastic Processes and Time Series Analysis – Modeling Temporal Dependence

Foundational Stochastic Processes

Many real-world phenomena involve data collected over time, where observations are not independent. Stochastic processes provide the mathematical framework for modeling such dependent data. The Poisson process models events occurring randomly in time, finding applications in queueing systems, insurance claims, and website visits.

Markov chains model systems where the future state depends only on the current state, not the entire history. This Markov property enables efficient computation and forms the basis for many algorithms in reinforcement learning and natural language processing.
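A two-state chain makes the Markov property concrete. The transition probabilities below are invented for illustration (think "sunny" and "rainy"); repeatedly applying the transition matrix shows the state distribution settling to a stationary distribution.

```python
# Hypothetical transition matrix: P[i][j] = P(next state = j | current state = i)
P = [
    [0.9, 0.1],   # from state 0
    [0.5, 0.5],   # from state 1
]


def step(dist, P):
    """One step of the chain: new_dist[j] = sum_i dist[i] * P[i][j]."""
    n = len(P)
    return [sum(dist[i] * P[i][j] for i in range(n)) for j in range(n)]


dist = [1.0, 0.0]   # start in state 0 with certainty
for _ in range(100):
    dist = step(dist, P)

print(f"distribution after 100 steps: {dist[0]:.4f}, {dist[1]:.4f}")
```

Solving π = πP by hand for this matrix gives π = (5/6, 1/6), which matches the iterated result; only the current distribution is needed at each step, never the full history.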

Time Series Models and Applications

Autoregressive (AR) models express the current value of a series as a linear combination of previous values plus noise. Moving average (MA) models represent the current value as a linear combination of previous noise terms. Combining these gives us ARMA models, while incorporating differencing to handle non-stationarity leads to ARIMA models—workhorses of traditional time series analysis.
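As a minimal sketch of the simplest autoregressive case, the code below simulates an AR(1) series x_t = φ·x_(t-1) + noise and recovers φ by least squares. The coefficient 0.7, the series length, and the seed are arbitrary assumptions; production work would normally use a dedicated time series library.

```python
import random


def simulate_ar1(phi: float, n: int, sigma: float = 1.0, seed: int = 0):
    """Simulate x_t = phi * x_{t-1} + e_t with Gaussian noise e_t ~ N(0, sigma^2)."""
    rng = random.Random(seed)
    x = [0.0]
    for _ in range(n - 1):
        x.append(phi * x[-1] + rng.gauss(0.0, sigma))
    return x


def estimate_phi(series):
    """Least-squares estimate of phi: regress x_t on x_{t-1} without an intercept."""
    num = sum(curr * prev for prev, curr in zip(series[:-1], series[1:]))
    den = sum(prev * prev for prev in series[:-1])
    return num / den


series = simulate_ar1(phi=0.7, n=5000)
phi_hat = estimate_phi(series)
print(f"true phi = 0.7, estimated phi = {phi_hat:.3f}")
```

The series is stationary only when |φ| < 1; at φ = 1 it becomes a random walk, which is the situation differencing in ARIMA is designed to handle.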

In financial applications, ARCH and GARCH models capture the volatility clustering phenomenon, where periods of high volatility tend to persist. These models are essential for risk management and option pricing.

Modern Developments and Machine Learning Integration

Recent years have seen deep learning approaches applied to time series forecasting. Recurrent Neural Networks (RNNs), Long Short-Term Memory (LSTM) networks, and Transformer architectures have demonstrated impressive performance on complex temporal patterns.

State space models and Kalman filtering provide a unified framework for dealing with time series containing measurement error, with applications in signal processing, economics, and control systems.

Understanding the theoretical foundations of stochastic processes enables data scientists to select appropriate models, properly evaluate forecasts, and understand the limitations of different approaches to temporal data.

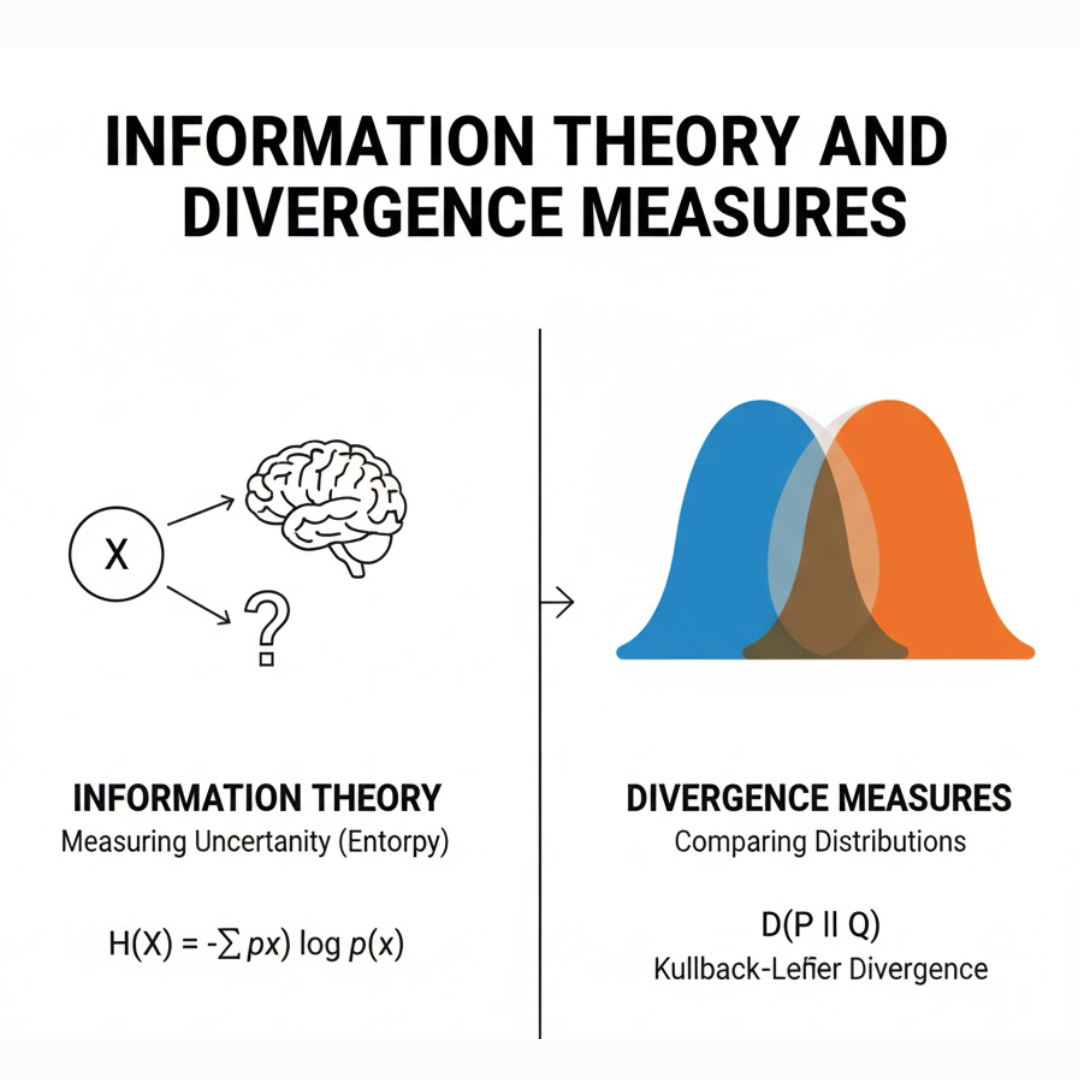

Concept 7: Information Theory and Divergence Measures – Quantifying Information and Difference

Core Concepts of Information Theory

Information theory, pioneered by Claude Shannon, provides powerful tools for quantifying information and measuring differences between distributions. Entropy measures the uncertainty or average information content of a random variable:

H(X) = -Σp(x)log p(x)

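Cross-entropy measures the average number of bits (when logarithms are taken in base 2) needed to encode data from distribution p using a code optimized for distribution q. Kullback-Leibler (KL) divergence quantifies how one probability distribution differs from another; it is non-negative, equals zero only when the two distributions match, and is not symmetric in P and Q: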

D_KL(P||Q) = Σp(x)log(p(x)/q(x))
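The three quantities are linked by the identity H(p, q) = H(p) + D_KL(P||Q). The sketch below computes them in bits for two small distributions chosen so the arithmetic comes out in round numbers.

```python
import math


def entropy(p):
    """H(p) = -sum p(x) log2 p(x), in bits."""
    return -sum(pi * math.log2(pi) for pi in p if pi > 0)


def cross_entropy(p, q):
    """H(p, q) = -sum p(x) log2 q(x), in bits."""
    return -sum(pi * math.log2(qi) for pi, qi in zip(p, q) if pi > 0)


def kl_divergence(p, q):
    """D_KL(P || Q) = sum p(x) log2(p(x) / q(x)), in bits."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)


p = [0.5, 0.25, 0.25]   # "true" distribution
q = [0.25, 0.25, 0.5]   # model distribution

h_p = entropy(p)                 # 1.5 bits
h_pq = cross_entropy(p, q)       # 1.75 bits
kl_pq = kl_divergence(p, q)      # 0.25 bits

print(f"H(p) = {h_p}, H(p, q) = {h_pq}, KL(p || q) = {kl_pq}")
```

Because H(p) does not depend on the model, minimizing cross-entropy with respect to q is the same as minimizing the KL divergence from p to q.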

Applications in Machine Learning and Model Evaluation

These concepts find numerous applications in machine learning. Cross-entropy serves as the most common loss function for classification problems, while KL divergence appears in variational autoencoders and Bayesian neural networks as part of the loss function, encouraging the learned distribution to match a prior distribution.

Mutual information, which measures the dependence between two random variables, finds applications in feature selection and independent component analysis. The data processing inequality formalizes the intuition that processing cannot increase information.

Advanced Applications and Recent Developments

In reinforcement learning, policy gradient methods often add an entropy regularization term to the objective to encourage exploration. In natural language processing, perplexity, the exponential of the average per-token cross-entropy, serves as a standard metric for evaluating language models.
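Perplexity can be computed directly from the probabilities a model assigned to the tokens that actually occurred. The probabilities below are made up; the function assumes natural logarithms, so the result is the exponential of the average negative log-probability.

```python
import math


def perplexity(token_probs):
    """exp of the average negative log-probability assigned to the observed tokens."""
    avg_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_nll)


# A model that spreads probability evenly over 4 choices at every step
uniform_ppl = perplexity([0.25, 0.25, 0.25, 0.25])

# A model that is sometimes confident and sometimes not
mixed_ppl = perplexity([0.5, 0.125])

print(f"uniform: {uniform_ppl:.2f}, mixed: {mixed_ppl:.2f}")
```

A perplexity of 4 can be read as the model being, on average, as uncertain as if it were choosing uniformly among 4 tokens; lower is better.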

Recent research has explored f-divergences beyond KL divergence, each with different properties suitable for various applications. The Wasserstein distance from optimal transport theory has gained popularity in generative models like Wasserstein GANs due to its better behavior when distributions have non-overlapping support.

Understanding these information-theoretic concepts enables data scientists to select appropriate loss functions, evaluate model performance more deeply, and develop novel algorithms based on solid theoretical foundations.

Conclusion: Integrating Probability and Distributions into Your Data Science Practice

The seven concepts explored in this article represent essential knowledge areas within Probability and Distributions that every competent data scientist must master. However, true mastery comes not from understanding these concepts in isolation, but from recognizing their interconnections and knowing how to apply them synergistically to solve real-world problems.

The journey through Probability and Distributions is ongoing. As new challenges emerge in areas like causal inference, federated learning, and ethical AI, the fundamental principles of Probability and Distributions will continue to provide the analytical tools needed to address these challenges. The data scientist who deeply understands these foundations positions themselves not just to apply existing techniques, but to develop new methods and advance the field itself.

Ultimately, mastery of Probability and Distributions transforms data science from a collection of techniques into a disciplined approach to reasoning under uncertainty. It enables practitioners to build more robust models, draw more reliable conclusions, and communicate findings with appropriate humility about what the data can and cannot tell us. In an increasingly data-driven world, these skills have never been more valuable.