Dive into the fundamentals of machine learning. This beginner’s guide explains what ML is, how it works, and key concepts like supervised and unsupervised learning.

Introduction: Welcome to the World of Machine Learning

You’ve likely heard the term “Machine Learning” (ML) buzzing around in tech news, business conferences, and even in everyday conversations. From Netflix’s uncannily accurate recommendations to the voice assistant in your smartphone and the fraud detection systems protecting your bank account, machine learning is no longer a futuristic concept—it’s a core part of our present digital landscape.

But what exactly is machine learning? Is it just a fancy name for statistics? Is it the same as artificial intelligence? And most importantly, how can you, regardless of your technical background, begin to understand its core principles?

This comprehensive guide is designed to demystify machine learning. We will break down this complex field into digestible, foundational concepts. By the end of this article, you will have a solid grasp of what machine learning is, how it works, the different types it encompasses, and the real-world problems it solves. We’ll journey from the basic definition to the very algorithms that power this technological revolution, all without assuming any prior knowledge. Let’s begin.

What is Machine Learning? A Definition Beyond the Hype

At its simplest, Machine Learning (ML) is a subset of artificial intelligence (AI) that provides systems the ability to automatically learn and improve from experience without being explicitly programmed.

The classic, more technical definition comes from Arthur Samuel, a pioneer in the field, who in 1959 described it as a “field of study that gives computers the ability to learn without being explicitly programmed.” Tom Mitchell, another leading figure, provided a more precise definition that is widely used today:


“A computer program is said to learn from experience E with respect to some class of tasks T and performance measure P, if its performance at tasks in T, as measured by P, improves with experience E.”

Let’s unpack this with a practical example: Email Spam Filtering.

- Task (T): Classifying emails as “spam” or “not spam” (also called “ham”).

- Performance Measure (P): The accuracy of classification. For example, the percentage of emails correctly identified as spam or not spam.

- Experience (E): The process of the ML model learning from a large dataset of emails that have already been labeled by users as “spam” or “not spam.”

So, a spam filter learns from your labeled emails (E) to improve its ability to classify new emails (T), thereby increasing its accuracy (P).

Machine Learning vs. Traditional Programming

To further clarify, it’s helpful to contrast Machine Learning with traditional programming.

- Traditional Programming: A human programmer writes explicit rules (an algorithm) in a language like Python or Java. The computer follows these rules to transform input data into a desired output.

- Input -> Rules/Program -> Output

- Example: A program to calculate employee salary. The rule is Salary = Hours Worked * Hourly Rate. The programmer defines the logic explicitly.

- Machine Learning: Instead of writing the rules, the programmer (or data scientist) provides the computer with a large amount of input data and the corresponding correct outputs. The Machine Learning algorithm then infers the rules or patterns that connect the inputs to the outputs. Once learned, these rules can be applied to new, unseen data.

- Input + Output -> ML Algorithm -> Model (Inferred Rules) -> Prediction on New Input

- Example: A program to identify cats in images. Instead of writing rules like “if it has whiskers and pointy ears, it’s a cat,” you show the algorithm thousands of pictures labeled “cat” and “not cat.” The algorithm learns the complex patterns of pixels that constitute “cat-ness” on its own.

This fundamental shift—from explicit programming to learning from data—is what makes machine learning so powerful for tasks where defining the rules is too difficult, complex, or constantly changing.
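The contrast is easier to see in code. Below is a minimal Python sketch that assumes NumPy; the article does not name a library at this point. The first function is the hand-written salary rule. The second part never sees that rule. Instead, it infers the hourly rate from a few hypothetical (hours, salary) examples using least squares. The function names and data are illustrative only.

```python
import numpy as np

# Traditional programming: a human writes the rule explicitly.
def salary_rule(hours_worked: float, hourly_rate: float) -> float:
    return hours_worked * hourly_rate

# Machine learning: we only have example inputs and their correct outputs.
# (Hypothetical data, generated with an hourly rate of 20.)
hours = np.array([10.0, 20.0, 35.0, 40.0])
salaries = np.array([200.0, 400.0, 700.0, 800.0])

# Infer the coefficient w in "salary = w * hours" by least squares.
# lstsq expects a 2-D input matrix, so reshape hours into a single column.
w, _, _, _ = np.linalg.lstsq(hours.reshape(-1, 1), salaries, rcond=None)
learned_rate = float(w[0])

def salary_model(hours_worked: float) -> float:
    """The 'model': a rule inferred from data rather than written by hand."""
    return learned_rate * hours_worked

print(salary_rule(30, 20))  # 600
print(round(learned_rate, 6))
print(round(salary_model(30), 6))
```

The learned rate should come out at 20, which matches the rate used to generate the toy data. With real, noisy data, the inferred rule would only be an approximation.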

How Machine Learning Works: The Core Process

While different types of Machine Learning have their nuances, most follow a generalized workflow. Understanding this process is key to grasping the mechanics behind the magic.

Step 1: Data Collection

This is the foundational step. An ML model is only as good as the data it’s trained on. Data can be collected from various sources: databases, log files, sensors, online repositories, or through web scraping. The data can be structured (like a neat Excel spreadsheet), unstructured (like text, images, and videos), or semi-structured (like JSON files).

Step 2: Data Preparation and Preprocessing

Raw data is almost never ready for consumption by a Machine Learning algorithm. This step involves cleaning and organizing the data, which includes:

- Handling Missing Values: Deciding whether to remove rows with missing data or fill them in with a statistic like the mean or median.

- Encoding Categorical Data: Converting text labels (like “red,” “blue,” “green”) into numerical values that algorithms can understand (like 0, 1, 2).

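- Normalization/Standardization: Putting numerical features on a common scale. Normalization rescales values to a fixed range such as 0 to 1, and standardization shifts and scales them to zero mean and unit variance. Either way, no single feature dominates the model simply because of its larger scale.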

- Data Splitting: Dividing the entire dataset into at least two sets:

- Training Set: The subset of data used to train the model (typically 70-80%).

- Testing Set: The subset of data used to evaluate the final model’s performance (typically 20-30%). This data is never seen by the model during training, providing an unbiased assessment.
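The preparation steps above can be sketched with pandas and scikit-learn. This assumes both libraries are installed, and the toy dataset is hypothetical. `SimpleImputer`, `OrdinalEncoder`, `MinMaxScaler`, and `train_test_split` are real scikit-learn tools. This particular way of combining them is just one reasonable arrangement.

```python
import numpy as np
import pandas as pd
from sklearn.impute import SimpleImputer
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import MinMaxScaler, OrdinalEncoder

# Hypothetical toy dataset: a numeric feature with one missing value,
# a categorical feature, and a binary label.
df = pd.DataFrame({
    "size": [50.0, np.nan, 80.0, 120.0, 65.0, 90.0, 110.0, 70.0, 100.0, 60.0],
    "color": ["red", "blue", "green", "red", "blue",
              "green", "red", "blue", "green", "red"],
    "label": [0, 1, 0, 1, 0, 1, 1, 0, 1, 0],
})
X = df[["size", "color"]]
y = df["label"].to_numpy()

# Data splitting: hold out 20% as a test set the model never trains on.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

# Handling missing values: fill gaps with the median of the training data.
imputer = SimpleImputer(strategy="median")
size_train = imputer.fit_transform(X_train[["size"]])
size_test = imputer.transform(X_test[["size"]])

# Normalization: rescale sizes to the 0-1 range observed in the training data.
scaler = MinMaxScaler()
size_train = scaler.fit_transform(size_train)
size_test = scaler.transform(size_test)

# Encoding categorical data: map each color to an integer code.
# Colors not seen during training become -1 instead of raising an error.
encoder = OrdinalEncoder(handle_unknown="use_encoded_value", unknown_value=-1)
color_train = encoder.fit_transform(X_train[["color"]])
color_test = encoder.transform(X_test[["color"]])

# Combine the prepared columns into purely numeric feature matrices.
X_train_ready = np.hstack([size_train, color_train])
X_test_ready = np.hstack([size_test, color_test])
print(X_train_ready.shape, X_test_ready.shape)  # (8, 2) (2, 2)
```

This sketch splits the data before imputing and scaling. As a result, the median and the min/max come from the training set only. Fitting them on the full dataset would leak information about the test set into training and make the evaluation less trustworthy.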

Step 3: Choosing a Model

An ML model is a mathematical representation of the patterns in the data. There are dozens of algorithms to choose from, each with its strengths and weaknesses. The choice depends on the problem type (e.g., prediction, classification, clustering), the size and nature of the data, and the desired outcome. We will explore specific models later in this article.

Step 4: Training the Model

This is the heart of the learning process. The training dataset (inputs and correct outputs) is fed into the chosen algorithm. The algorithm iteratively makes predictions on the training data and adjusts its internal parameters (its “rules”) to minimize the difference between its predictions and the actual correct answers. This process of adjustment is often driven by an optimization technique called Gradient Descent.
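Here is a minimal sketch of this predict-compare-adjust loop. It assumes a linear model `y = X @ w + b` and uses mean squared error as the measure of "difference". It implements plain gradient descent in NumPy. The function name, learning rate, and iteration count are illustrative choices, not prescribed values.

```python
import numpy as np

def train_linear_gd(X, y, learning_rate=0.1, n_iters=2000):
    """Fit y ≈ X @ w + b by gradient descent on the mean squared error."""
    n_samples, n_features = X.shape
    w = np.zeros(n_features)  # internal parameters start at zero
    b = 0.0
    for _ in range(n_iters):
        y_pred = X @ w + b                           # 1. make predictions
        error = y_pred - y                           # 2. compare with the correct answers
        grad_w = (2.0 / n_samples) * (X.T @ error)   # 3. gradient of the MSE w.r.t. w
        grad_b = (2.0 / n_samples) * error.sum()     #    ... and w.r.t. b
        w -= learning_rate * grad_w                  # 4. step "downhill"
        b -= learning_rate * grad_b
    return w, b

# Hypothetical noiseless data generated from y = 3x + 2.
rng = np.random.default_rng(0)
X = rng.uniform(0.0, 1.0, size=(100, 1))
y = 3.0 * X[:, 0] + 2.0

w, b = train_linear_gd(X, y)
print(w, b)  # should be close to [3.] and 2.
```

Here, `learning_rate` and `n_iters` are hyperparameters in the sense of Step 6. They are set before training rather than learned from the data.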

Step 5: Evaluating the Model

Once training is complete, the model’s performance is evaluated using the testing set. Since the model has never seen this data before, this evaluation gives a realistic estimate of how it will perform in the real world. Common evaluation metrics include accuracy, precision, recall, and F1-score for classification problems, and Mean Absolute Error or R-squared for regression problems.
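Each of these metrics can be computed directly from its definition. The dependency-free sketch below does this for a binary classifier and a regressor, using made-up predictions. In practice you would more likely call the equivalent scikit-learn functions (`accuracy_score`, `precision_score`, `recall_score`, `f1_score`, `mean_absolute_error`, `r2_score` in `sklearn.metrics`).

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1-score for a binary classifier."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)  # false positives
    fn = sum(t == positive and p != positive for t, p in pairs)  # false negatives
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return accuracy, precision, recall, f1

def regression_metrics(y_true, y_pred):
    """Mean Absolute Error and R-squared for a regressor."""
    n = len(y_true)
    mae = sum(abs(t - p) for t, p in zip(y_true, y_pred)) / n
    mean_true = sum(y_true) / n
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))  # unexplained error
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)          # total variation
    r2 = 1 - ss_res / ss_tot
    return mae, r2

# Hypothetical spam-filter output: 1 = "spam", 0 = "not spam".
acc, prec, rec, f1 = classification_metrics([1, 0, 1, 1, 0, 1, 0, 0],
                                            [1, 1, 0, 1, 0, 1, 1, 0])
# Hypothetical house prices (actual vs. predicted), in $100k.
mae, r2 = regression_metrics([3.0, 5.0, 2.0, 7.0], [2.5, 5.0, 3.0, 8.0])
print(acc, prec, rec, f1)
print(mae, r2)
```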

Step 6: Hyperparameter Tuning

Most Machine Learning algorithms have hyperparameters—configuration settings that are not learned from the data but are set prior to the training process. Think of them as the “dials” of the algorithm. Tuning these hyperparameters (e.g., learning rate, network depth) can significantly improve model performance.
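A common way to tune a hyperparameter is a grid search with cross-validation. The sketch below uses scikit-learn's `GridSearchCV` (assumed installed) to choose `n_neighbors`, the "k" of the k-Nearest Neighbors algorithm described later in this article. It uses the Iris dataset bundled with scikit-learn. The candidate values of k are an arbitrary illustrative grid.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.neighbors import KNeighborsClassifier

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

# The "dial" being tuned: k, the number of neighbors that vote.
param_grid = {"n_neighbors": [1, 3, 5, 7, 9, 11]}

# Try every value with 5-fold cross-validation on the training set only,
# so the test set stays unseen until the final check.
search = GridSearchCV(KNeighborsClassifier(), param_grid, cv=5)
search.fit(X_train, y_train)

best_k = search.best_params_["n_neighbors"]
test_accuracy = search.score(X_test, y_test)  # uses the refit best model
print("best k:", best_k)
print("test accuracy:", test_accuracy)
```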

Step 7: Making Predictions (Inference)

After the model is trained, evaluated, and tuned to satisfaction, it is deployed into a production environment where it can start making predictions or decisions on new, real-world data. This is the ultimate goal of the entire process.

The Three Paradigms of Machine Learning

Machine learning can be broadly categorized into three main types, based on the “learning signal” or feedback available to the system.

1. Supervised Learning: Learning with Labeled Data

Supervised learning is the most common and well-studied type of Machine Learning. In this paradigm, the training data consists of input-output pairs. The “supervision” comes from the fact that the dataset includes the correct answers (called labels).

The goal is to learn a mapping function from the input (X) to the output (Y), such that when new input data is presented, the model can predict the corresponding output.

Key Concepts:

- Label: The “answer” or output part of the training data.

- Feature: An individual measurable property or characteristic of the data being observed. For example, in a housing price prediction model, features could be square footage, number of bedrooms, and zip code.

Supervised learning problems are generally divided into two categories:

A) Classification

The goal is to predict a discrete categorical label. The output is a choice from a predefined set of categories.

Real-World Examples:

- Email Spam Detection: Input: email text; Output: “spam” or “not spam.”

- Image Recognition: Input: image pixels; Output: “cat,” “dog,” “car,” etc.

- Medical Diagnosis: Input: patient symptoms and test results; Output: “disease A,” “disease B,” or “healthy.”

- Loan Approval: Input: applicant’s credit history, income; Output: “approved” or “denied.”

Common Algorithms: Logistic Regression, k-Nearest Neighbors (k-NN), Support Vector Machines (SVM), Decision Trees, Random Forests, and Neural Networks.

B) Regression

The goal is to predict a continuous quantitative value. The output is a number on a continuous scale.

Real-World Examples:

- House Price Prediction: Input: features of a house; Output: predicted price (a continuous number).

- Stock Market Forecasting: Input: historical stock prices, volume; Output: predicted future price.

- Weather Prediction: Input: atmospheric data; Output: predicted temperature or rainfall amount.

- Sales Forecasting: Input: historical sales data, marketing spend; Output: predicted sales for the next quarter.

Common Algorithms: Linear Regression, Polynomial Regression, Decision Trees, Random Forests, and Support Vector Regression.

2. Unsupervised Learning: Finding Hidden Patterns

In unsupervised learning, the training data is unlabeled. There are no correct answers provided. The system is given a dataset and tasked with finding inherent patterns, structures, or groupings within the data on its own.

Key Concept:

- No Labels: The algorithm must make sense of the data without any guidance.

Unsupervised learning problems are commonly divided into three main categories:

A) Clustering

The goal is to group a set of objects in such a way that objects in the same group (called a cluster) are more similar to each other than to those in other groups.

Real-World Examples:

- Customer Segmentation: Grouping customers based on purchasing behavior for targeted marketing campaigns.

- Gene Expression Analysis: Finding patterns in gene expression data to group patients for biological analysis.

- Social Network Analysis: Identifying communities of friends or influencers within a network.

- Image Segmentation: Grouping pixels in an image to identify objects or regions.

Common Algorithms: k-Means Clustering, Hierarchical Clustering, DBSCAN.

B) Association

The goal is to discover rules that describe large portions of your data, such as “people who buy X also tend to buy Y.”

Real-World Examples:

- Market Basket Analysis: Uncovering relationships between products in a supermarket. The classic example is the “beer and diapers” association rule.

- Recommendation Systems: (Used in conjunction with other methods) “Users who watched movie A also watched movie B.”

Common Algorithms: Apriori, Eclat.
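Association rules are usually scored with two measures. Support is how often the items appear together. Confidence is how often the rule holds when its left-hand side appears. The plain-Python sketch below scores a single hypothetical "diapers → beer" rule by brute force over made-up baskets. It does not implement Apriori's or Eclat's strategies for searching many candidate itemsets efficiently.

```python
# Hypothetical shopping baskets, one set of items per transaction.
transactions = [
    {"bread", "milk"},
    {"bread", "diapers", "beer", "eggs"},
    {"milk", "diapers", "beer", "cola"},
    {"bread", "milk", "diapers", "beer"},
    {"bread", "milk", "diapers", "cola"},
]

def support(itemset, transactions):
    """Fraction of transactions that contain every item in `itemset`."""
    return sum(itemset <= basket for basket in transactions) / len(transactions)

def confidence(antecedent, consequent, transactions):
    """Fraction of transactions containing `antecedent` that also contain `consequent`."""
    return support(antecedent | consequent, transactions) / support(antecedent, transactions)

rule_support = support({"diapers", "beer"}, transactions)
rule_confidence = confidence({"diapers"}, {"beer"}, transactions)
print(f"support={rule_support:.2f}, confidence={rule_confidence:.2f}")  # support=0.60, confidence=0.75
```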

C) Dimensionality Reduction

The goal is to reduce the number of random variables (features) under consideration by deriving a smaller set of principal variables. It compresses the data while retaining as much of the relevant information as possible.

Real-World Examples:

- Data Visualization: Projecting high-dimensional data onto 2D or 3D planes so humans can visualize it.

- Noise Reduction: Removing irrelevant features to improve the performance of other Machine Learning models.

- Feature Extraction: Creating new, smaller sets of features that are more informative than the original raw data.

Common Algorithms: Principal Component Analysis (PCA), t-Distributed Stochastic Neighbor Embedding (t-SNE).
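To show what PCA does, here is a minimal NumPy sketch. It centers the data, takes a singular value decomposition, and projects onto the top directions. It also reports what fraction of the variance each kept component explains. The 3-D data is synthetic and constructed to vary mostly along one direction. In practice, `sklearn.decomposition.PCA` provides the same technique with more options.

```python
import numpy as np

def pca(X, n_components):
    """Project X onto its top principal components (a minimal PCA sketch)."""
    X_centered = X - X.mean(axis=0)  # PCA operates on mean-centered data
    # Rows of Vt are the principal directions, ordered by explained variance.
    _, singular_values, Vt = np.linalg.svd(X_centered, full_matrices=False)
    components = Vt[:n_components]
    explained_variance = singular_values**2 / (len(X) - 1)
    ratio = explained_variance / explained_variance.sum()
    return X_centered @ components.T, ratio[:n_components]

# Hypothetical 3-D data that mostly varies along a single direction.
rng = np.random.default_rng(0)
t = rng.normal(size=200)
X = np.column_stack([t, 2 * t, -t]) + 0.05 * rng.normal(size=(200, 3))

X_2d, ratio = pca(X, n_components=2)
print(X_2d.shape)  # (200, 2)
print(ratio)       # the first component should carry almost all the variance
```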

3. Reinforcement Learning: Learning by Interaction

Reinforcement Learning (RL) is a different paradigm inspired by behavioral psychology. Here, an agent learns to make decisions by performing actions in an environment to maximize a cumulative reward.

Think of it like training a dog. The agent (dog) interacts with the environment (your house). It performs an action (sitting). You give it a reward (a treat). Over time, the dog learns which sequence of actions leads to the most treats.

Key Concepts:

- Agent: The learner or decision-maker.

- Environment: The world the agent interacts with.

- Action: A move the agent can make.

- State: The current situation of the agent.

- Reward: Feedback from the environment, a score that the agent tries to maximize over time.

Real-World Examples:

- Game Playing: AlphaGo and OpenAI’s Dota 2 bots learned to beat top human professionals, largely by playing millions of games against themselves.

- Robotics: Teaching a robot to walk by rewarding it for moving forward without falling.

- Autonomous Vehicles: The driving policy (when to accelerate, brake, turn) can be learned through RL in simulated environments.

- Resource Management: Managing resources in a data center to minimize energy costs while maintaining performance.

Common Algorithms: Q-Learning, Deep Q-Networks (DQN), Policy Gradient methods.
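The agent, state, action, and reward loop can be sketched with tabular Q-learning. The environment here is hypothetical: a five-cell corridor where the agent starts at the left end and earns a reward of 1 only when it reaches the rightmost cell. The learning rate (`ALPHA`), discount factor (`GAMMA`), and exploration rate (`EPSILON`) are illustrative values, not recommendations.

```python
import random

N_STATES = 5          # corridor cells 0..4; cell 4 is the goal
ACTIONS = [-1, +1]    # action 0 moves left, action 1 moves right
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.1

def step(state, action):
    """Hypothetical environment: reward 1 for reaching the goal, else 0."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done

def train(episodes=500, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action index]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: usually exploit the best-known action, sometimes explore
            # (also explore when both actions currently look equally good).
            if rng.random() < EPSILON or q[state][0] == q[state][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, ACTIONS[a])
            # Q-learning update: move toward reward + discounted best future value.
            target = reward if done else reward + GAMMA * max(q[next_state])
            q[state][a] += ALPHA * (target - q[state][a])
            state = next_state
    return q

q = train()
policy = ["left" if q[s][0] > q[s][1] else "right" for s in range(N_STATES - 1)]
print(policy)
```

After training, the greedy policy should be to move right from every non-goal cell, which is the shortest path to the reward.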

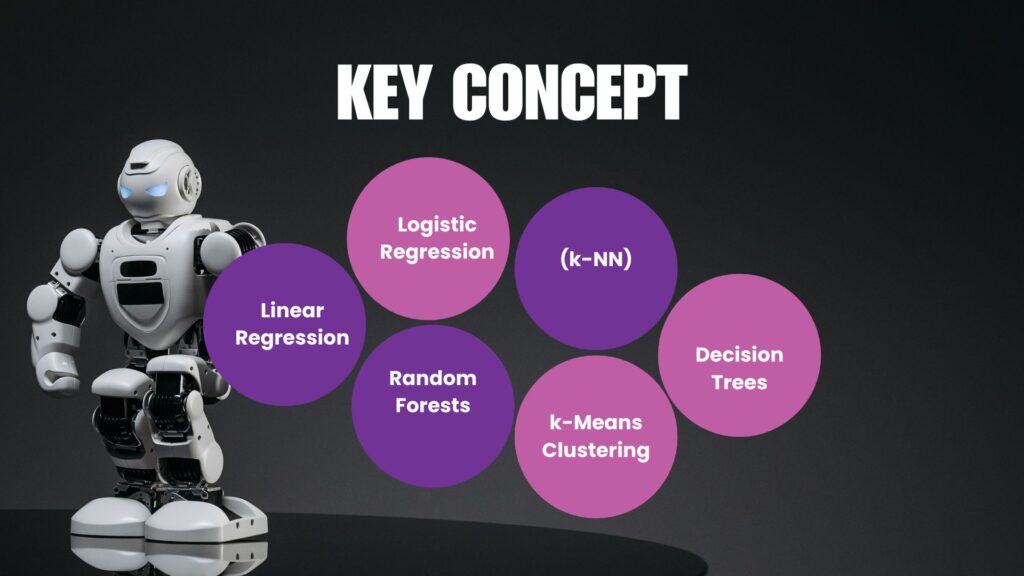

Deep Dive into Key Machine Learning Algorithms

Now that we understand the types, let’s briefly look at a few foundational algorithms that bring these concepts to life.

1. Linear Regression

A simple yet powerful algorithm for regression tasks. It assumes a linear relationship between the input features (X) and the single output value (Y). It tries to draw a straight line (or a hyperplane in higher dimensions) that best fits the data points. The “best” line is the one that minimizes the sum of the squared differences between the predicted values and the actual values.
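For a single feature, the line that minimizes the sum of squared differences has a closed form. The slope is the covariance of x and y divided by the variance of x, and the line passes through the point (mean of x, mean of y). The plain-Python sketch below applies those formulas to hypothetical size/price data in arbitrary units.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one feature; returns (slope, intercept)."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    # slope = cov(x, y) / var(x) minimizes the sum of squared errors.
    cov_xy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    var_x = sum((x - x_mean) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = y_mean - slope * x_mean  # the best line passes through the means
    return slope, intercept

def sum_squared_errors(xs, ys, slope, intercept):
    return sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))

# Hypothetical data: house size vs. price, in arbitrary units.
sizes = [1.0, 2.0, 3.0, 4.0, 5.0]
prices = [2.1, 3.9, 6.2, 7.8, 10.1]
slope, intercept = fit_line(sizes, prices)
print(slope, intercept)  # approximately 1.99 and 0.05
```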

2. Logistic Regression

Despite its name, it’s used for classification, not regression. It’s used to estimate the probability that an instance belongs to a particular class (e.g., “spam”). Its output is always between 0 and 1, representing a probability. It uses a special “sigmoid” function to squeeze the output of a linear equation into this range.
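The "linear score, then sigmoid" idea fits in a few lines. In this sketch the weights are hand-picked and the two email features (suspicious-word count and link count) are hypothetical. A real logistic regression model would learn its weights from labeled data, for example by gradient descent. The 0.5 threshold is a common default, not a requirement.

```python
import math

def sigmoid(z: float) -> float:
    """Squeeze any real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(features, weights, bias):
    """Logistic regression: a linear score passed through the sigmoid."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return sigmoid(z)

# Hypothetical, hand-picked weights for two features:
# number of suspicious words and number of links in the email.
weights, bias = [0.8, 0.5], -3.0
p_spam = predict_proba([4, 2], weights, bias)
label = "spam" if p_spam >= 0.5 else "not spam"
print(round(p_spam, 3), label)
```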

3. k-Nearest Neighbors (k-NN)

A simple, intuitive algorithm used for both classification and regression. For classification, it works by finding the ‘k’ training examples that are closest (most similar) to the new example and having them “vote” on the class label. For example, if k=3, and the three closest points are two cats and one dog, the new point is classified as a cat.
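The voting procedure can be sketched in plain Python with Euclidean distance. The 2-D features, which could stand for something like ear pointiness and snout length, are hypothetical. The query point is chosen so that its three nearest neighbors are two cats and one dog, mirroring the example above.

```python
import math
from collections import Counter

def knn_classify(train_points, train_labels, query, k=3):
    """Label `query` by majority vote among its k nearest training points."""
    # Sort the training examples by Euclidean distance to the query.
    by_distance = sorted(
        zip(train_points, train_labels),
        key=lambda pair: math.dist(pair[0], query),
    )
    k_nearest_labels = [label for _, label in by_distance[:k]]
    return Counter(k_nearest_labels).most_common(1)[0][0]

# Hypothetical 2-D features for six labeled animals.
points = [(9, 2), (8, 3), (7, 2), (2, 8), (3, 7), (1, 9)]
labels = ["cat", "cat", "cat", "dog", "dog", "dog"]
print(knn_classify(points, labels, (5, 4), k=3))  # cat
```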

4. Decision Trees

This model makes predictions by asking a series of True/False questions about the features, traversing a tree-like structure until it reaches a leaf node (a prediction). It’s highly interpretable—you can literally see the decision path. For instance, “Is the age > 30? If yes, is the income > $50k? If yes, then ‘approve loan’.”
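Instead of writing the questions by hand, a tree can learn them from examples. The sketch below fits scikit-learn's `DecisionTreeClassifier` (assumed installed) to a handful of hypothetical loan applications. It then prints the learned rules with `export_text`. On data like this, the learned thresholds and the order of the questions may differ from the hand-written example above.

```python
from sklearn.tree import DecisionTreeClassifier, export_text

# Hypothetical loan applications: [age, income in $1000s]; 1 = approve, 0 = deny.
X = [[25, 40], [28, 80], [35, 30], [40, 60], [45, 90], [52, 20], [33, 55], [60, 75]]
y = [0, 0, 0, 1, 1, 0, 1, 1]

# Limit the depth so the tree stays small and easy to read.
tree = DecisionTreeClassifier(max_depth=2, random_state=0)
tree.fit(X, y)

# Print the learned True/False questions as readable if/else rules.
rules = export_text(tree, feature_names=["age", "income"])
print(rules)
print(tree.predict([[38, 70]]))  # a new applicant: age 38, income $70k
```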

5. Random Forests

An “ensemble” method that builds upon Decision Trees. The core idea is that a large number of relatively uncorrelated models (trees) operating as a committee tends to outperform any of the individual constituent models. It creates a “forest” of decision trees. Each tree is trained on a random bootstrap sample of the data and typically considers only a random subset of features at each split. The forest makes its final prediction by averaging the predictions of all the trees (for regression) or taking a majority vote (for classification). Compared with a single decision tree, this approach reduces overfitting and is generally very robust and accurate.
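A quick way to try this is to compare one tree against a forest with scikit-learn (assumed installed) on its bundled breast-cancer dataset. This sketch does not report scores, since they depend on the library version. Note one implementation detail: scikit-learn's `RandomForestClassifier` combines trees by averaging their predicted class probabilities, a "soft" form of the majority vote.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)

single_tree = DecisionTreeClassifier(random_state=0)
# 200 trees, each fit on a bootstrap sample with random feature subsets per split.
forest = RandomForestClassifier(n_estimators=200, random_state=0)

# 5-fold cross-validated accuracy for each model.
tree_scores = cross_val_score(single_tree, X, y, cv=5)
forest_scores = cross_val_score(forest, X, y, cv=5)
print(f"single tree:   {tree_scores.mean():.3f}")
print(f"random forest: {forest_scores.mean():.3f}")
```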

6. Support Vector Machines (SVM)

A powerful and versatile algorithm used primarily for classification. It finds the “maximum margin separator”—the optimal boundary (a line or a hyperplane) that separates classes with the widest possible margin. It is particularly effective in high-dimensional spaces.

7. k-Means Clustering

One of the most widely used clustering algorithms. The goal is to partition data into ‘k’ distinct clusters. It works by:

1. Randomly initializing ‘k’ cluster centers (centroids).

2. Assigning each data point to the nearest centroid.

3. Updating the centroids to be the mean of the points assigned to them.

4. Repeating steps 2 and 3 until the centroids no longer change significantly.
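The four steps translate almost directly into NumPy. In this minimal sketch, "random initialization" means picking k distinct data points as the starting centroids, which is one common choice among several. The two-blob dataset is synthetic. For real work, `sklearn.cluster.KMeans` adds smarter initialization and multiple restarts.

```python
import numpy as np

def assign_to_nearest(X, centroids):
    """Index of the closest centroid for every point (Euclidean distance)."""
    distances = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
    return distances.argmin(axis=1)

def k_means(X, k, n_iters=100, seed=0):
    rng = np.random.default_rng(seed)
    # Step 1: initialize the k centroids as k distinct, randomly chosen data points.
    centroids = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(n_iters):
        # Step 2: assign each data point to the nearest centroid.
        labels = assign_to_nearest(X, centroids)
        # Step 3: move each centroid to the mean of its assigned points
        # (an empty cluster keeps its previous centroid).
        new_centroids = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        # Step 4: stop once the centroids no longer change significantly.
        converged = np.allclose(new_centroids, centroids)
        centroids = new_centroids
        if converged:
            break
    return centroids, assign_to_nearest(X, centroids)

# Synthetic data: two well-separated blobs of 50 points each.
rng = np.random.default_rng(1)
X = np.vstack([
    rng.normal(loc=[0.0, 0.0], scale=0.5, size=(50, 2)),
    rng.normal(loc=[5.0, 5.0], scale=0.5, size=(50, 2)),
])
centroids, labels = k_means(X, k=2)
print(np.round(centroids, 2))
```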

8. Neural Networks and Deep Learning

Inspired by the human brain, neural networks consist of interconnected layers of nodes (neurons). Each connection can transmit a signal, and each neuron processes its input signals and passes on an output.

- Input Layer: Receives the raw data.

- Hidden Layers: Intermediate layers where the complex computations and feature detections happen.

- Output Layer: Produces the final prediction.

Deep Learning refers to neural networks with many hidden layers (hence “deep”). These models are exceptionally good at learning complex, hierarchical patterns from vast amounts of data and are the driving force behind modern AI advancements in computer vision, natural language processing, and speech recognition.
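To make the layer structure concrete, here is a forward pass through a tiny untrained network in NumPy. Several choices are assumptions made for illustration: the layer sizes, the ReLU activation in the hidden layers, the softmax output, and the random weights. In a real network, the weights would be learned with gradient descent via backpropagation, typically in a library such as TensorFlow or PyTorch.

```python
import numpy as np

def relu(z):
    return np.maximum(0.0, z)

def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)  # subtract the max for numerical stability
    exp_z = np.exp(z)
    return exp_z / exp_z.sum(axis=-1, keepdims=True)

rng = np.random.default_rng(0)
# Hypothetical sizes: 4 input features, two hidden layers of 8 neurons, 3 output classes.
layer_sizes = [4, 8, 8, 3]
weights = [rng.normal(0.0, 0.5, size=(m, n))
           for m, n in zip(layer_sizes[:-1], layer_sizes[1:])]
biases = [np.zeros(n) for n in layer_sizes[1:]]

def forward(x):
    """Pass a batch of inputs through the network, layer by layer."""
    a = x                                          # input layer: the raw features
    for W, b in zip(weights[:-1], biases[:-1]):
        a = relu(a @ W + b)                        # hidden layers: weighted sum + activation
    return softmax(a @ weights[-1] + biases[-1])   # output layer: class probabilities

x = rng.normal(size=(2, 4))  # a batch of two hypothetical examples
probs = forward(x)
print(probs.shape)        # (2, 3)
print(probs.sum(axis=1))  # each row sums to 1
```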

The Machine Learning Toolbox: Languages and Libraries

You don’t need to build these algorithms from scratch. A rich ecosystem of programming languages and libraries has been developed to make Machine Learning accessible.

Python: The Lingua Franca of ML

Python is the undisputed leader in the Machine Learning community due to its simple syntax, vast ecosystem of specialized libraries, and strong community support.

- NumPy: The fundamental package for scientific computing. Provides support for large, multi-dimensional arrays and matrices.

- Pandas: Provides easy-to-use data structures and data analysis tools. Perfect for data manipulation and cleaning.

- Matplotlib & Seaborn: Libraries for creating static, animated, and interactive visualizations.

- Scikit-learn: The go-to library for traditional ML algorithms. It features simple and efficient tools for data mining and data analysis, built on NumPy, SciPy, and Matplotlib. It includes implementations for classification, regression, clustering, dimensionality reduction, and more.

- TensorFlow & PyTorch: The two leading libraries for Deep Learning. They provide the flexibility and tools to build and train complex neural networks.

R: The Statistician’s Choice

R is another popular language, particularly strong in statistical analysis and data visualization. It has a wide array of packages for specific statistical learning techniques.

Challenges and Limitations of Machine Learning

Machine learning is powerful, but it’s not a magic bullet. Being aware of its limitations is crucial for its responsible application.

1. Garbage In, Garbage Out (GIGO)

The performance of a Machine Learning model is heavily dependent on the quality and quantity of the training data. Biased, noisy, or insufficient data will lead to a poor-performing model.

2. Overfitting and Underfitting

- Overfitting: When a model learns the training data too well, including its noise and random fluctuations. It performs excellently on the training data but fails to generalize to new, unseen data. It’s like memorizing the answers to a practice test instead of understanding the subject.

- Underfitting: When a model is too simple to capture the underlying trend in the data. It performs poorly on both the training and testing data. It’s like trying to fit a straight line to a complex, curved pattern.
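Both failure modes can be reproduced by fitting polynomials of increasing degree to a few noisy samples of a curve. The sketch below uses NumPy's `polyfit` on synthetic `sin(2πx)` data. The degrees, noise level, and sample sizes are arbitrary choices. Typically, the straight line (degree 1) shows high error on both sets, which is underfitting. The degree-9 fit usually drives training error close to zero while its test error grows, which is overfitting. The exact numbers depend on the random data.

```python
import numpy as np

rng = np.random.default_rng(0)

def make_data(n):
    """Synthetic noisy samples of a curved pattern: y = sin(2*pi*x) + noise."""
    x = rng.uniform(0.0, 1.0, size=n)
    y = np.sin(2 * np.pi * x) + rng.normal(scale=0.2, size=n)
    return x, y

x_train, y_train = make_data(15)   # small training set
x_test, y_test = make_data(200)    # unseen data for evaluation

def mse(coeffs, x, y):
    return float(np.mean((np.polyval(coeffs, x) - y) ** 2))

results = {}
for degree in (1, 3, 9):
    coeffs = np.polyfit(x_train, y_train, deg=degree)  # least-squares polynomial fit
    results[degree] = (mse(coeffs, x_train, y_train), mse(coeffs, x_test, y_test))
    train_err, test_err = results[degree]
    print(f"degree {degree}: train MSE {train_err:.3f}, test MSE {test_err:.3f}")
```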

3. Bias and Fairness

Machine Learning models can inherit and even amplify biases present in the training data. If a hiring model is trained on historical data from a company that historically favored one demographic group, the model is likely to learn the same preference, perpetuating systemic bias. Ensuring algorithmic fairness is a major area of research and ethical concern.

4. The “Black Box” Problem

Many complex models, especially deep neural networks, are often “black boxes.” It can be difficult or impossible to understand why they made a particular decision. This lack of interpretability is a significant hurdle in high-stakes fields like medicine and finance, where understanding the “why” is as important as the prediction itself.

5. Data Privacy and Security

ML models often require vast amounts of data, which can include sensitive personal information. Ensuring this data is collected, stored, and used ethically and securely is paramount.

The Future of Machine Learning

The field of machine learning is evolving at a breathtaking pace. Here are some key trends shaping its future:

- Automated Machine Learning (AutoML): Automating the end-to-end process of applying machine learning, making it accessible to non-experts.

- Explainable AI (XAI): Developing techniques to make the decisions of “black box” models more transparent and interpretable to humans.

- Federated Learning: A technique for training models across multiple decentralized devices (like smartphones) holding local data samples, without exchanging them. This enhances privacy.

- AI Ethics and Governance: The development of frameworks, regulations, and tools to ensure that AI is developed and used responsibly, fairly, and safely.

- AI and IoT (AIoT): Integrating AI with the Internet of Things to create intelligent, responsive systems in smart homes, cities, and industries.

Conclusion: Your Journey Has Just Begun

Machine learning is a transformative technology that is reshaping industries and our daily lives. We’ve covered the foundational concepts: from its definition and core process to the three main paradigms (Supervised, Unsupervised, and Reinforcement Learning) and key algorithms. We’ve also highlighted the practical tools, the critical challenges, and the exciting future trends.

Understanding these basics is the first step. The field is vast and constantly changing, but the core principles remain the foundation upon which all advanced concepts are built. The journey into machine learning is one of continuous learning and discovery.

Whether you are a business leader looking to leverage AI, a developer aiming to build intelligent applications, or simply a curious mind, grasping these machine learning basics empowers you to participate in one of the most important technological revolutions of our time.