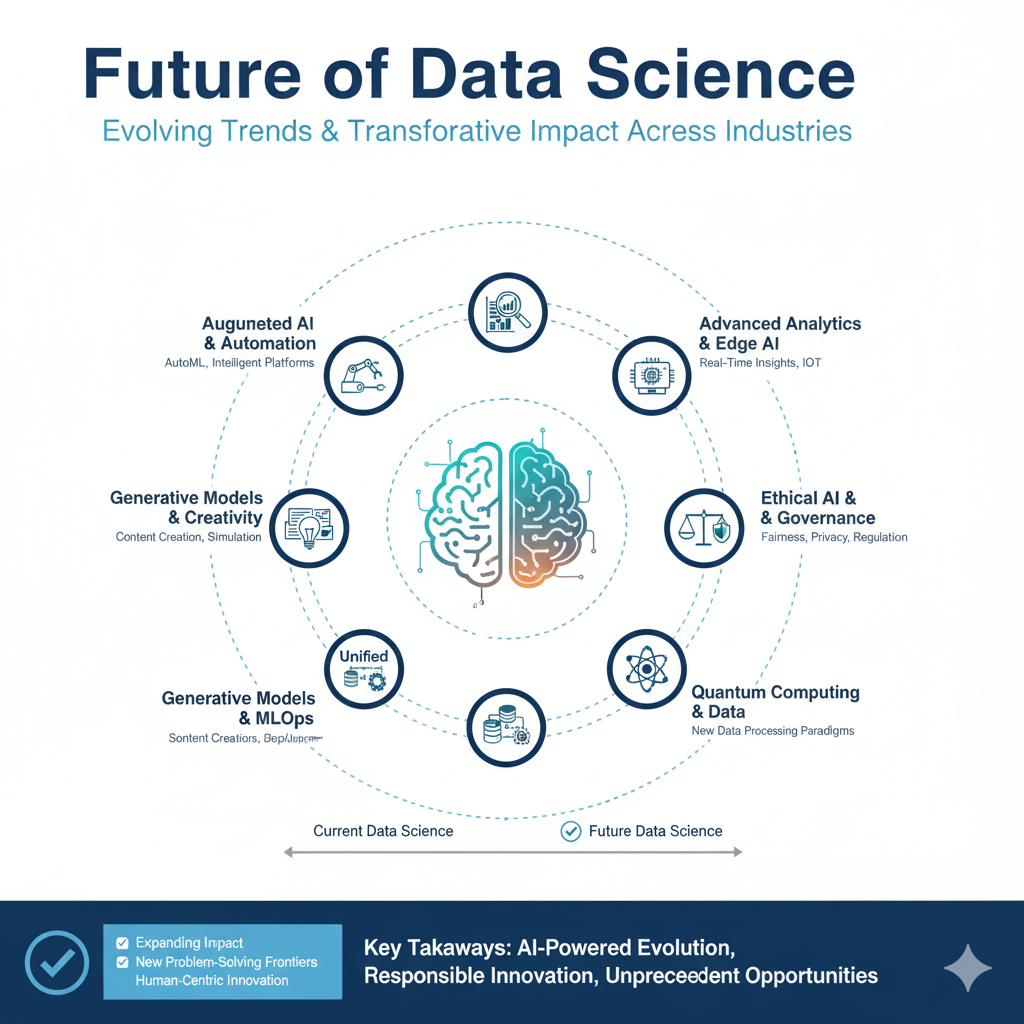

Explore the future of data science with 10 bold predictions for 2025 and beyond. From AI-assisted development to quantum machine learning, discover how data science is evolving and what it means for businesses and professionals.

Introduction: The Dawning of a New Era in Data

The discipline of data science is undergoing a profound metamorphosis. No longer confined to the backend of business intelligence dashboards, it is evolving into the central nervous system of intelligent enterprise and scientific discovery. The Future of Data Science is not merely an incremental improvement on the present; it is a fundamental shift towards a more automated, intuitive, and impactful discipline.

As we stand on the precipice of 2025, the convergence of massive computational power, sophisticated AI, and an ever-expanding digital universe is set to redefine what it means to be a data scientist. This article unveils ten bold predictions that will shape the Future of Data Science, charting a course from the code-heavy present to a concept-driven, strategic future.

1. The Rise of the Citizen Data Scientist: Democratization of Analytics

The Prediction: By 2025, over 80% of data-driven business insights will be generated by “citizen data scientists”—domain experts equipped with low-code/no-code AI platforms, rather than centralized data teams.

The Driving Force: The proliferation of intuitive, visual tools integrated directly into business platforms (like CRM and ERP systems) will empower marketing managers, supply chain analysts, and financial officers to build models, run forecasts, and generate insights without writing a single line of Python or SQL.

Impact on the Future of Data Science:

- Role Evolution: Professional data scientists will transition from hands-on coders to architects, educators, and orchestrators. They will design the robust, scalable platforms and reusable AI components that citizen data scientists use, and will focus on solving the most complex, novel problems that lie beyond the scope of automated tools.

- Cultural Shift: Data-driven decision-making will become deeply embedded in every business function, accelerating innovation and agility. However, this will also place a premium on data governance and literacy to prevent misinterpretation.

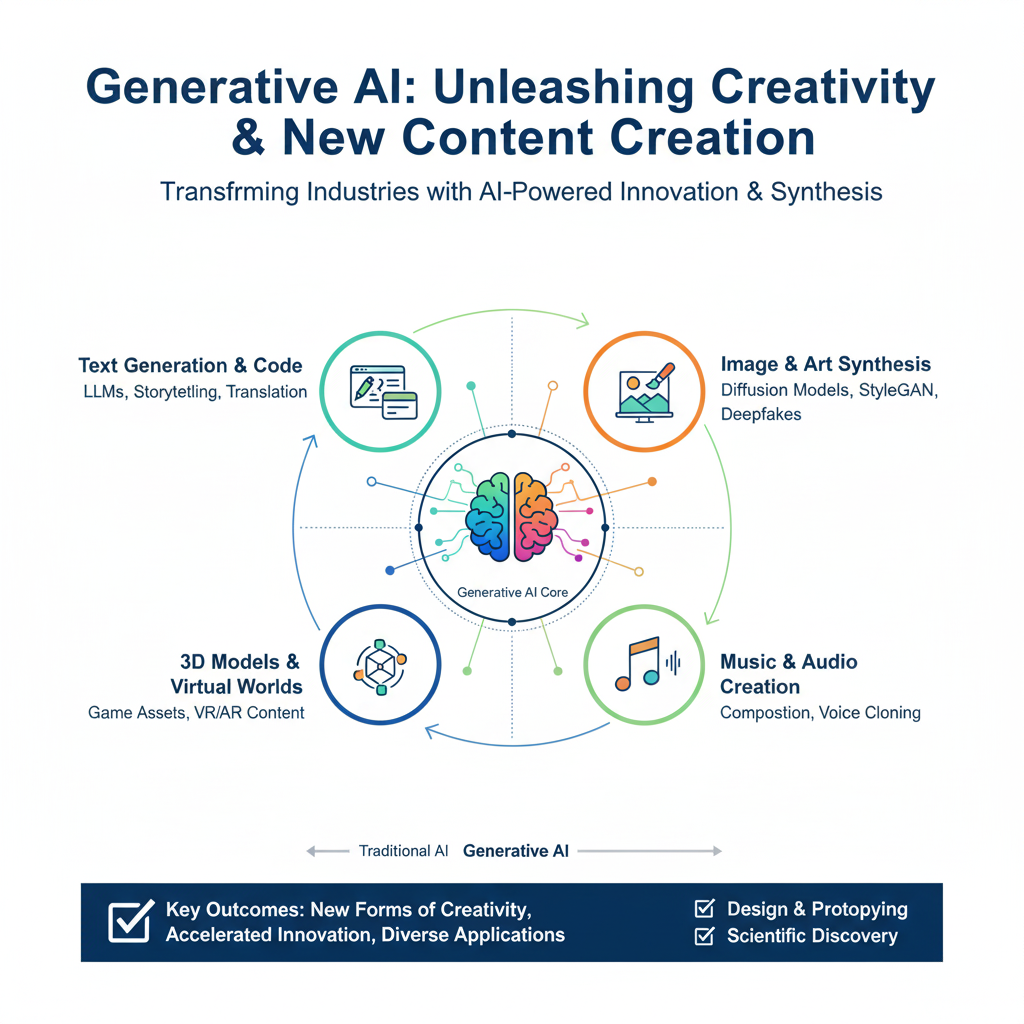

2. Generative AI Becomes the Primary Interface for Data Interaction

The Prediction: Natural language will become the standard interface for data querying, analysis, and visualization. Data scientists will “converse” with their datasets using tools powered by Large Language Models (LLMs).

The Driving Force: Advanced LLMs fine-tuned on SQL, Python, and statistical reasoning will understand complex user intents. A user will be able to type, “Show me a time-series forecast of our top-selling product in Europe for the next quarter, and break it down by marketing channel,” and the system will automatically generate the code, run the analysis, and produce an interactive dashboard.

Impact on the Future of Data Science:

- Productivity Explosion: The tedious, time-consuming tasks of data wrangling, exploratory data analysis (EDA), and basic reporting will be massively accelerated. This frees up data scientists to focus on higher-level strategy, model interpretation, and business storytelling.

- Lowered Barrier to Entry: This further fuels the citizen data scientist movement, making powerful analytics accessible to anyone who can articulate a question in plain English.

3. The Shift from Predictive to Causal AI

The Prediction: The focus of advanced analytics will move beyond correlation-based predictions to understanding cause-and-effect relationships. Causal AI will become a standard tool for strategic decision-making.

The Driving Force: Businesses realize that knowing what will happen is less valuable than knowing why it will happen and how to change it. For instance, a model that predicts customer churn is useful, but a causal model that identifies the specific product feature change that causes churn is transformative.
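The minimal Python sketch below makes the churn example concrete. Every probability in it is invented. In the simulation, a hypothetical "power user" trait (the confounder) makes customers both more likely to adopt a feature and less likely to churn. The naive correlational comparison overstates the feature's benefit. A simple backdoor adjustment compares adopters and non-adopters within each level of the confounder, and it recovers the effect that was built into the simulation.

```python
import numpy as np

def simulate(n=100_000, seed=0):
    """Hypothetical data: z = power user (confounder), t = uses feature, y = churned."""
    rng = np.random.default_rng(seed)
    z = rng.random(n) < 0.5
    # Power users are far more likely to adopt the feature
    t = rng.random(n) < np.where(z, 0.8, 0.2)
    # Power users churn less; the feature itself cuts churn by 5 percentage points
    p_churn = 0.30 - 0.20 * z - 0.05 * t
    y = rng.random(n) < p_churn
    return z, t, y

def naive_effect(t, y):
    """Correlational estimate: churn rate of adopters minus non-adopters."""
    return y[t].mean() - y[~t].mean()

def adjusted_effect(z, t, y):
    """Backdoor adjustment: within-stratum differences weighted by P(z)."""
    effect = 0.0
    for level in (False, True):
        stratum = z == level
        diff = y[stratum & t].mean() - y[stratum & ~t].mean()
        effect += stratum.mean() * diff
    return effect

z, t, y = simulate()
print(f"naive: {naive_effect(t, y):+.3f}  adjusted: {adjusted_effect(z, t, y):+.3f}")
```

With these settings, the naive difference lands around -0.17, more than three times the true effect of -0.05. The adjusted estimate lands close to -0.05. The adjustment only works because we know which variable is the confounder, and that knowledge is exactly what domain-specific causal models supply.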

Impact on the Future of Data Science:

- More Robust Decision-Making: Causal models allow for reliable “what-if” scenario planning. Companies can simulate the impact of a price change, a new ad campaign, or a policy shift with greater confidence, minimizing risk.

- New Skill Sets: Data scientists will need to develop expertise in causal inference frameworks, experimental design, and domain-specific structural causal models, moving beyond pure statistics toward a blend of statistics, econometrics, and domain science.

4. The Ubiquity of Synthetic Data

The Prediction: By 2025, the use of synthetically generated data for training AI models will surpass the use of real-world data in sensitive or data-scarce domains.

The Driving Force: Privacy regulations (like GDPR), the high cost of data labeling, and the scarcity of data for rare events (e.g., fraudulent transactions, rare diseases) are making real-world data problematic. Generative AI models, particularly diffusion models and GANs, can create highly realistic, automatically labeled, privacy-preserving synthetic datasets.
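Production synthetic-data tools rely on GANs, diffusion models or similar generators. The sketch below uses a much simpler stand-in. It fits a multivariate Gaussian to a small set of real records (for example, scarce fraud cases) and samples as many synthetic rows as needed. The function names and the data are hypothetical. A Gaussian preserves only the means and covariances of the original features. Sampling from a fitted model also does not guarantee privacy on its own; formal guarantees require techniques such as differential privacy.

```python
import numpy as np

def fit_gaussian_synthesizer(real):
    """Estimate the mean vector and covariance matrix of the real records."""
    real = np.asarray(real, dtype=float)
    return real.mean(axis=0), np.cov(real, rowvar=False)

def sample_synthetic(mean, cov, n, seed=0):
    """Draw n synthetic records from the fitted multivariate Gaussian."""
    rng = np.random.default_rng(seed)
    return rng.multivariate_normal(mean, cov, size=n)

# Hypothetical stand-in for 50 scarce fraud records:
# columns are amount, transactions per hour, risk score
rng = np.random.default_rng(1)
real_fraud = rng.normal(loc=[100.0, 2.0, 0.5], scale=[20.0, 0.5, 0.1], size=(50, 3))

mean, cov = fit_gaussian_synthesizer(real_fraud)
# Every synthetic row inherits the "fraud" label of the data it was fitted on
synthetic_fraud = sample_synthetic(mean, cov, n=5000)
print(synthetic_fraud.shape)
```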

Impact on the Future of Data Science:

- Privacy by Design: Enables innovation in healthcare and finance without exposing sensitive personal information.

- Solving the “Long-Tail” Problem: Allows for the creation of vast datasets for edge cases, crucial for developing robust autonomous vehicles and diagnostic tools.

- Ethical Considerations: A new field of “synthetic data auditing” will emerge to ensure that synthetic data does not perpetuate or amplify hidden biases from the original data.

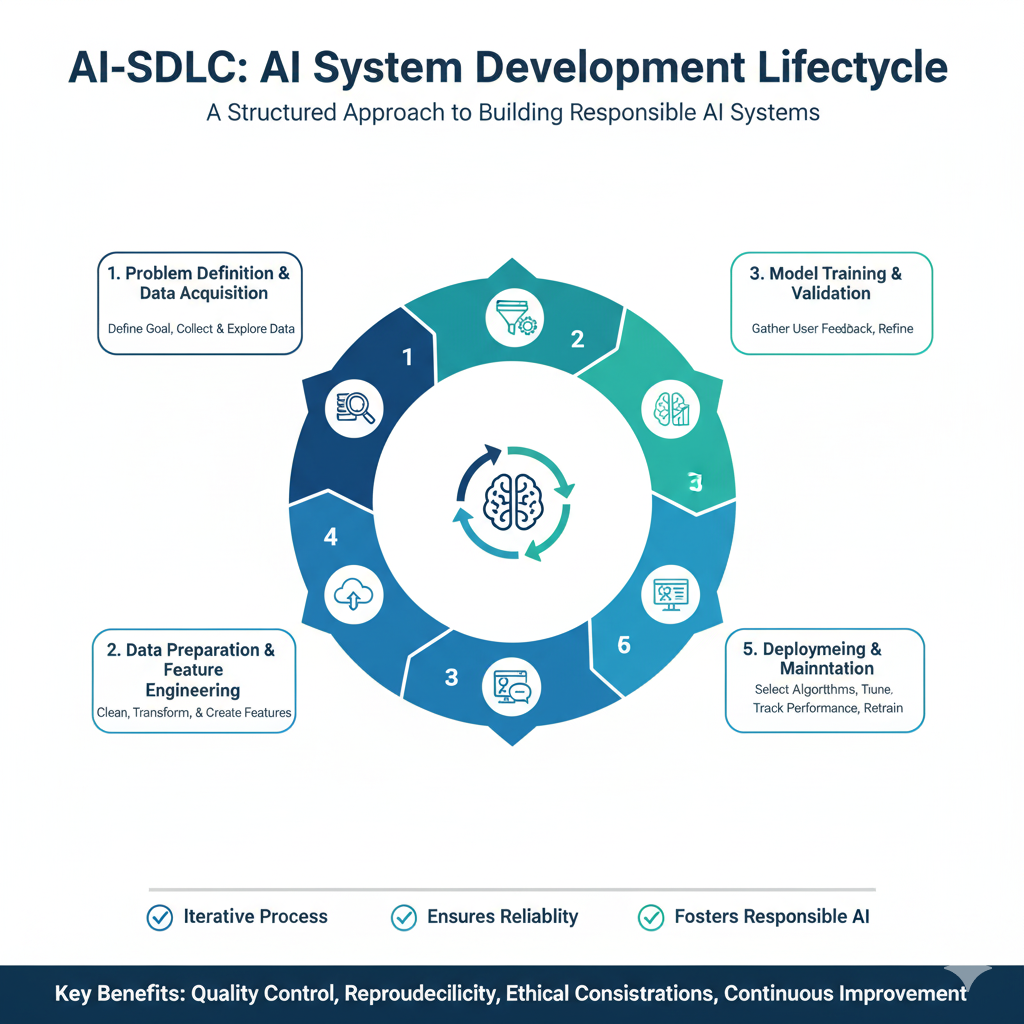

5. AI-Assisted Software Development for Data Scientists (AI-SDLC)

The Prediction: The entire data science lifecycle—from data cleaning and feature engineering to model deployment and monitoring—will be managed by AI-powered co-pilots.

The Driving Force: Tools like GitHub Copilot are just the beginning. Future specialized AI assistants will understand the entire context of a data science project, suggesting optimal feature transformations, recommending the best model architectures, writing deployment scripts, and even identifying data drift in production.
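The Population Stability Index (PSI) offers one way to sketch the kind of drift check such an assistant might automate. PSI is a common metric that compares a feature's distribution in production against the training data. The minimal NumPy version below bins values by the training data's quantiles. The function name, the default of 10 bins and the simulated data are illustrative choices, not part of any particular tool.

```python
import numpy as np

def population_stability_index(reference, current, bins=10, eps=1e-6):
    """PSI between a reference sample (e.g. training data) and a current sample."""
    reference = np.asarray(reference, dtype=float)
    current = np.asarray(current, dtype=float)
    # Interior bin edges at the reference quantiles, so each bin holds ~1/bins of it
    edges = np.quantile(reference, np.linspace(0, 1, bins + 1)[1:-1])
    ref_frac = np.bincount(np.digitize(reference, edges), minlength=bins) / len(reference)
    cur_frac = np.bincount(np.digitize(current, edges), minlength=bins) / len(current)
    # Clip empty bins to avoid log(0)
    ref_frac = np.clip(ref_frac, eps, None)
    cur_frac = np.clip(cur_frac, eps, None)
    return float(np.sum((cur_frac - ref_frac) * np.log(cur_frac / ref_frac)))

rng = np.random.default_rng(42)
training = rng.normal(0.0, 1.0, 10_000)
stable = rng.normal(0.0, 1.0, 10_000)    # production data, same distribution
drifted = rng.normal(1.0, 1.0, 10_000)   # production data, mean shifted by 1 sd
print(population_stability_index(training, stable))
print(population_stability_index(training, drifted))
```

A widely used rule of thumb treats a PSI below 0.1 as stable and above 0.25 as a significant shift. The right thresholds still depend on the feature and the use case.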

Impact on the Future of Data Science:

- Hyper-Efficiency: Data scientists will achieve in hours what currently takes days or weeks. The focus will shift from implementation to problem-framing and validation.

- Standardization and Best Practices: AI co-pilots will encode and enforce best practices, leading to more reliable, production-ready models and reducing technical debt.

6. The Emergence of Data Science as a Strategic Boardroom Function

This prediction signifies the ultimate maturation of data science from a technical support function to a core pillar of corporate governance and strategy.

The Evolution of the Chief Data Officer (CDO):

The role of the CDO is evolving from a manager of data infrastructure and governance to a strategic visionary, sometimes described as a “Chief Value Officer” for data. In the boardroom of 2025 and beyond, the CDO’s responsibilities will expand significantly:

- M&A Due Diligence: The CDO will be central to evaluating acquisition targets. Beyond traditional financial metrics, they will assess the quality, uniqueness, and integrability of the target company’s data assets. A company with a proprietary, well-labeled dataset could be more valuable than its physical assets or even its current revenue stream. The CDO will answer: “What new data moats or market insights does this acquisition provide us?”

- Corporate Strategy Formulation: Instead of just reacting to business questions, the CDO will proactively use data to identify new market opportunities, model the long-term impact of strategic choices (e.g., entering a new region, launching a product line), and identify potential disruptions before they happen. They will use predictive and causal AI models to run sophisticated corporate-level simulations.

- P&L Accountability: Data science will no longer be a cost center buried in the IT budget. The CDO will be responsible for a “Data P&L,” directly tying data initiatives to key financial metrics like customer lifetime value (LTV) optimization, supply chain cost reduction, and revenue from data-driven products and services.

Impact on the Future of Data Science: A Deeper Dive

- From Cost Center to Profit Driver: The performance of data science teams will be measured by business outcomes, not technical metrics. A model’s F1-score will be less important than its demonstrable impact, such as reducing customer churn by 5% or increasing the efficiency of marketing spend by 15%. This forces a cultural shift where every project must start with a clear business hypothesis and a plan for measuring its financial return.

- Broader Skill Profile: The data leaders who succeed in the C-suite will possess a rare blend of skills:

- Technical Depth: To maintain credibility and understand what is possible.

- Business Acumen: To speak the language of the CEO and CFO, understanding core business drivers like unit economics, market share, and competitive positioning.

- Storytelling and Communication: The ability to translate complex analytical findings into a compelling narrative that drives executive action. A dashboard is not enough; a story about what the data means for the company’s future is required.

- Influence and Leadership: To champion a data-driven culture across all departments, breaking down silos and fostering collaboration.

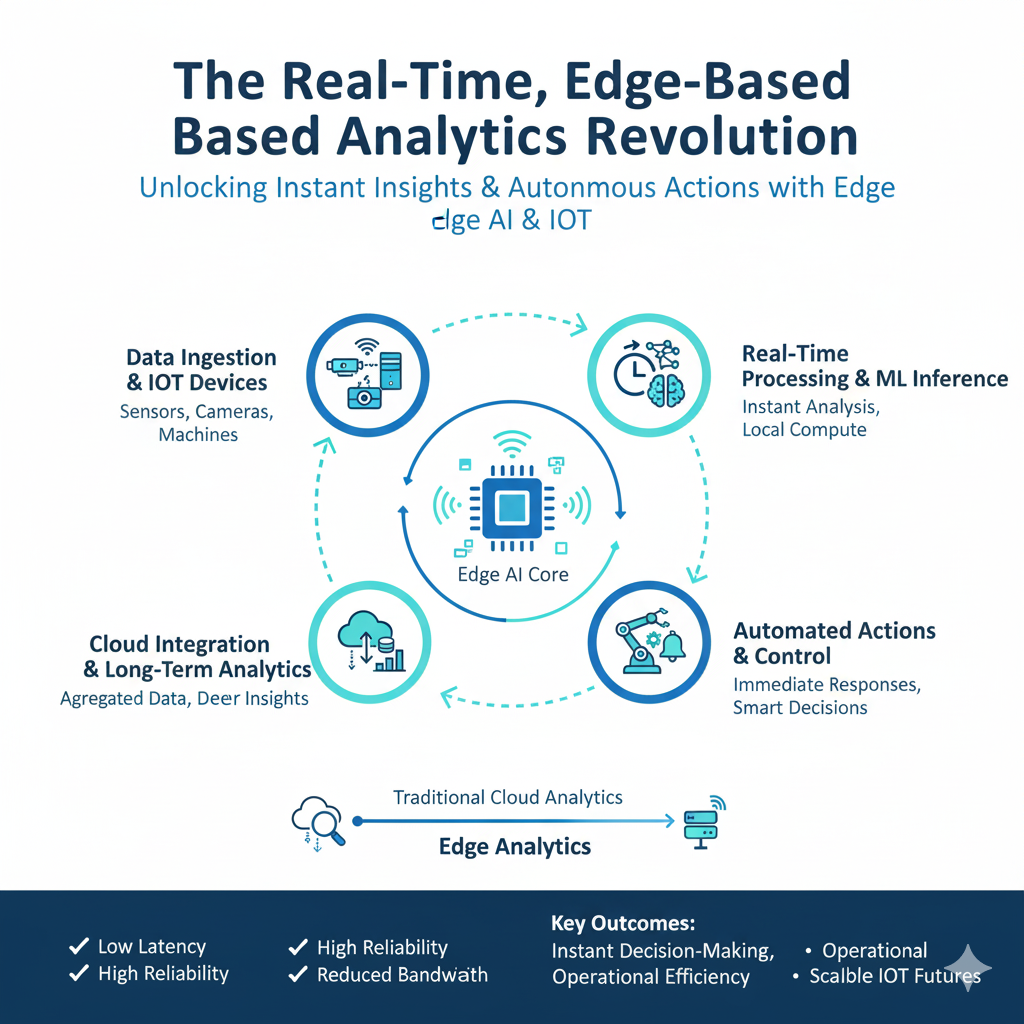

7. The Real-Time, Edge-Based Analytics Revolution

This prediction marks a fundamental architectural shift in how and where data is processed, moving intelligence closer to the source of data generation to overcome the physical limitations of cloud computing.

The “Why” Behind the Shift to the Edge:

The driving forces are latency, bandwidth, and reliability. Sending data to a centralized cloud for processing and waiting for a response takes time, often a hundred milliseconds or more and sometimes several seconds over a congested network. For many modern applications, this is unacceptable.

- Autonomous Vehicles: A car traveling at 70 mph covers about 10 feet in 100 milliseconds. A delay in processing sensor data to identify a pedestrian could be catastrophic. The decision to brake must be made on board, within milliseconds.

- Industrial IoT: A sensor on a manufacturing robot arm detecting a potential failure needs to trigger an immediate shutdown, not wait for a round-trip to the cloud.

- Bandwidth Constraints: A single autonomous vehicle can generate multiple terabytes of data per day. Transmitting all this raw data to the cloud is prohibitively expensive and inefficient. Processing it locally and only sending critical insights (an “anomaly detected” alert) saves immense bandwidth.
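The 10-foot figure follows from a quick unit conversion. The same arithmetic shows how much worse a one-second round trip would be.

```latex
\begin{aligned}
v &= 70\ \tfrac{\text{mi}}{\text{h}} \times \frac{5280\ \text{ft/mi}}{3600\ \text{s/h}} \approx 102.7\ \text{ft/s} \\
d_{100\,\text{ms}} &= 102.7\ \text{ft/s} \times 0.1\ \text{s} \approx 10.3\ \text{ft} \\
d_{1\,\text{s}} &= 102.7\ \text{ft/s} \times 1\ \text{s} \approx 103\ \text{ft}
\end{aligned}
```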

Impact on the Future of Data Science: A New Technical Playbook

- New Architectural Paradigms: “TinyML” and Edge-Optimized Models: Data scientists will need to master a new set of constraints. Edge devices have limited memory, processing power (CPU/GPU), and battery life. This necessitates:

- Model Compression: Techniques like pruning (removing redundant weights or neurons), quantization (reducing numerical precision, e.g., from 32-bit floating point to 8-bit integers), and knowledge distillation (training a small “student” model to mimic a large “teacher” model) become standard practice.

- Hardware-Aware Design: Models will be designed for specific edge hardware accelerators (e.g., Google’s Edge TPU, Intel’s Movidius).

- Focus on Streaming Data: The paradigm shifts from analyzing static, batched datasets to working with infinite, continuous streams of data. This requires expertise in:

- Stream Processing Frameworks: Proficiency in platforms like Apache Kafka (for data ingestion) and Apache Flink (for stateful stream processing) will be as fundamental as knowing Pandas is today.

- Online Machine Learning: Models that can learn and update their parameters incrementally from each new data point, without needing to be retrained on the entire historical dataset. This allows the model to adapt to changing patterns in real time.
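The following NumPy sketch illustrates the quantization technique listed under model compression. It maps a float32 weight tensor to 8-bit integers using a single per-tensor scale and zero point, a scheme known as asymmetric or affine quantization. This is a simplified illustration under assumptions. Frameworks such as TensorFlow Lite and PyTorch support more elaborate schemes, such as per-channel quantization, and they also calibrate activations.

```python
import numpy as np

def quantize_int8(w):
    """Affine (asymmetric) per-tensor quantization of float32 weights to int8."""
    w_min, w_max = float(w.min()), float(w.max())
    scale = (w_max - w_min) / 255.0  # 256 int8 levels span the float range
    if scale == 0.0:                 # constant tensor: any positive scale works
        scale = 1.0
    zero_point = int(round(-128 - w_min / scale))  # offset that maps w_min to -128
    q = np.clip(np.round(w / scale) + zero_point, -128, 127).astype(np.int8)
    return q, scale, zero_point

def dequantize(q, scale, zero_point):
    """Map int8 codes back to approximate float32 values."""
    return (q.astype(np.float32) - zero_point) * scale

rng = np.random.default_rng(0)
weights = rng.normal(0.0, 0.05, size=(256, 128)).astype(np.float32)
q, scale, zero_point = quantize_int8(weights)
restored = dequantize(q, scale, zero_point)
print(weights.nbytes, q.nbytes)  # 131072 32768
print(float(np.abs(restored - weights).max()), scale)
```

The int8 tensor takes a quarter of the memory of the float32 original. Each weight is recovered to within about half a quantization step (`scale / 2`).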
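One way to sketch online learning is a logistic regression that takes one stochastic gradient descent step per incoming example instead of retraining on the full history. In this hypothetical simulation, the labeling rule changes halfway through the stream, and the same per-example updates let the model adapt. The class name, learning rate and data are illustrative assumptions. Libraries such as scikit-learn offer a comparable `partial_fit` method on some estimators.

```python
import numpy as np

class OnlineLogisticRegression:
    """Logistic regression updated with one SGD step per incoming example."""

    def __init__(self, n_features, lr=0.1):
        self.w = np.zeros(n_features)
        self.b = 0.0
        self.lr = lr

    def predict_proba(self, x):
        return 1.0 / (1.0 + np.exp(-(x @ self.w + self.b)))

    def partial_fit(self, x, y):
        # Gradient of the log loss for a single example is (p - y) * x
        error = self.predict_proba(x) - y
        self.w -= self.lr * error * x
        self.b -= self.lr * error

def rule_before(x):
    return x[0] + x[1] > 0

def rule_after(x):
    return x[0] - x[1] > 0  # the underlying pattern changes mid-stream

def accuracy(model, rule, rng, n=1000):
    X = rng.normal(size=(n, 2))
    y = np.array([rule(x) for x in X])
    return float(np.mean((model.predict_proba(X) > 0.5) == y))

rng = np.random.default_rng(0)
model = OnlineLogisticRegression(n_features=2)
accuracies = []
for rule in (rule_before, rule_after):
    for _ in range(5000):  # simulated stream, one example at a time
        x = rng.normal(size=2)
        model.partial_fit(x, float(rule(x)))
    accuracies.append(accuracy(model, rule, rng))
print(accuracies)
```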

8. Quantum-Enhanced Machine Learning Moves from Lab to Niche Application

This prediction highlights the transition of Quantum Machine Learning (QML) from a theoretical field to a practical tool for solving a specific class of problems that are beyond the reach of even the most powerful classical supercomputers.

Understanding “Quantum Advantage”:

The goal is not to replace all classical ML, but to achieve “quantum advantage”: the point where a quantum computer can solve a specific, useful problem faster or more accurately than any known classical algorithm. This will happen first in domains that are naturally quantum mechanical or involve searching vast combinatorial spaces.

Niche Applications on the Horizon:

- Drug Discovery and Materials Science: Simulating molecular interactions is incredibly difficult for classical computers because the behavior of molecules is governed by quantum mechanics. A quantum computer can naturally simulate these quantum systems. QML could be used to discover new pharmaceuticals by simulating how a candidate molecule binds to a protein target, or to design new materials with specific properties, like high-temperature superconductors.

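- Complex Financial Modeling: Portfolio optimization, risk analysis, and option pricing involve calculating outcomes across a staggering number of variables and scenarios. Quantum algorithms may explore these vast possibility spaces more efficiently. For example, quantum amplitude estimation can in principle reach a given pricing accuracy with quadratically fewer samples than classical Monte Carlo simulation, which could lead to more robust financial strategies.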

- Logistics and Supply Chain Optimization: Finding the most efficient routes for a global supply chain or optimizing a complex manufacturing process are combinatorial optimization problems. Quantum algorithms like QAOA (Quantum Approximate Optimization Algorithm) are designed to tackle these “needle-in-a-haystack” problems.

Impact on the Future of Data Science: The Birth of a New Specialty

- Specialization: The “Quantum Data Scientist”: This will be a highly specialized role requiring interdisciplinary knowledge. A professional in this field would need:

- Foundational ML Knowledge: Understanding classical models, loss functions, and training.

- Quantum Mechanics Fundamentals: Knowledge of qubits, superposition, entanglement, and quantum gates.

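- Quantum Algorithm Expertise: Understanding how to map problems onto quantum circuits using variational algorithms such as the Variational Quantum Eigensolver (VQE), widely studied for chemistry simulations, or Quantum Neural Networks (QNNs), parameterized circuits trained much like classical models.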
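The quantum fundamentals listed above can be previewed without quantum hardware. This sketch is a plain NumPy state-vector simulation, not a quantum SDK. It applies a Hadamard gate to put the first qubit into superposition. It then applies a CNOT gate to entangle that qubit with a second one, producing the Bell state (|00> + |11>)/sqrt(2). An n-qubit state vector has 2^n entries, which is why classical simulation becomes intractable as circuits grow.

```python
import numpy as np

# Single-qubit Hadamard gate and identity
H = np.array([[1, 1], [1, -1]]) / np.sqrt(2)
I = np.eye(2)
# Two-qubit CNOT with the first qubit as control (basis order |00>, |01>, |10>, |11>)
CNOT = np.array([[1, 0, 0, 0],
                 [0, 1, 0, 0],
                 [0, 0, 0, 1],
                 [0, 0, 1, 0]])

state = np.array([1.0, 0.0, 0.0, 0.0])  # start in |00>
state = np.kron(H, I) @ state            # superposition: (|00> + |10>)/sqrt(2)
state = CNOT @ state                     # entanglement: (|00> + |11>)/sqrt(2)
probabilities = np.abs(state) ** 2
print(probabilities)  # [0.5 0.  0.  0.5]
```

When either qubit is measured, 0 and 1 are equally likely, but the two results always agree: outcomes 01 and 10 have zero probability.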

- Unlocking New Frontiers: The primary impact is that QML will open up entirely new problem domains for inquiry. It will enable discoveries in fundamental science and complex system design that we can currently only theorize about. For most businesses, this will remain a specialized R&D area, but for those in pharmaceuticals, advanced materials, and finance, it will become a critical competitive edge.

9. The “AutoML 3.0” Ecosystem: Self-Healing and Self-Optimizing Data Pipelines

The Prediction: Automated Machine Learning (AutoML) will evolve beyond model selection to manage the entire end-to-end data pipeline, which will become self-documenting, self-monitoring, and self-optimizing.

The Driving Force: The complexity of modern data stacks is becoming unmanageable for humans. AI-driven systems will automatically detect data quality issues, retrain models upon detecting concept drift, and optimize computational resources for cost and performance—all with minimal human intervention.
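A self-healing pipeline needs, among other things, a monitor that watches a deployed model's live accuracy and triggers retraining when it degrades. The sketch below is hypothetical: the class name, the 500-prediction window and the 0.85 threshold are illustrative. It also assumes ground-truth labels arrive promptly, whereas in many real systems they arrive only after a delay.

```python
from collections import deque
from typing import Callable

class RetrainingMonitor:
    """Tracks rolling accuracy of a deployed model and calls `retrain` when it
    drops below a threshold (a simple proxy for concept drift)."""

    def __init__(self, retrain: Callable[[], None], window: int = 500,
                 threshold: float = 0.85):
        self.retrain = retrain
        self.outcomes = deque(maxlen=window)
        self.threshold = threshold
        self.retrain_count = 0

    def rolling_accuracy(self) -> float:
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else 1.0

    def record(self, prediction, actual) -> None:
        self.outcomes.append(prediction == actual)
        window_full = len(self.outcomes) == self.outcomes.maxlen
        if window_full and self.rolling_accuracy() < self.threshold:
            self.retrain()
            self.retrain_count += 1
            self.outcomes.clear()  # start a fresh window for the retrained model

calls = []
monitor = RetrainingMonitor(retrain=lambda: calls.append("retrain"))
for _ in range(1000):
    monitor.record(1, 1)      # model is accurate
for i in range(300):
    monitor.record(1, i % 2)  # drift: half of the predictions are now wrong
print(monitor.retrain_count)  # 1
```

In this simulation, the monitor fires once, about 150 predictions after the drift begins, and then starts a fresh window for the retrained model.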

Impact on the Future of Data Science:

- Operational Excellence: Data scientists will spend far less time on “firefighting” and maintenance, ensuring that models in production remain accurate and reliable over time.

- Reliability at Scale: This is a prerequisite for the widespread, trustworthy deployment of AI across critical enterprise systems.

10. The Critical Ascendancy of AI Ethics and Governance Platforms

The Prediction: Integrated AI Ethics & Governance platforms will become as mandatory as version control systems, automatically auditing models for fairness, bias, explainability, and compliance before they can be deployed.

The Driving Force: Mounting regulatory pressure, consumer demand for ethical tech, and the tangible financial risks of deploying biased AI will make ethical oversight a core part of the technical workflow, not an afterthought.

Impact on the Future of Data Science:

- Embedded Ethics: Tools that provide “fairness scores,” “explainability reports,” and “adversarial robustness checks” will be integrated directly into the CI/CD pipeline for AI.

- The “Ethical Data Scientist”: Proficiency in using these governance platforms and interpreting their outputs will become a standard requirement for the profession, building trust and ensuring responsible innovation.
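The demographic parity difference is one simple example of such a "fairness score": the largest gap in positive-prediction rates between groups. The sketch below wraps it in a hypothetical deployment gate that a CI/CD job could call. The 0.1 threshold is an illustrative assumption. Demographic parity is also only one of several fairness definitions, and these definitions can conflict with one another.

```python
import numpy as np

def demographic_parity_difference(y_pred, group):
    """Largest gap in positive-prediction rate between any two groups."""
    y_pred = np.asarray(y_pred)
    group = np.asarray(group)
    rates = [y_pred[group == g].mean() for g in np.unique(group)]
    return float(max(rates) - min(rates))

def fairness_gate(y_pred, group, max_gap=0.1):
    """Hypothetical CI/CD check: block deployment if the parity gap is too large."""
    gap = demographic_parity_difference(y_pred, group)
    if gap > max_gap:
        raise ValueError(f"Demographic parity gap {gap:.2f} exceeds {max_gap}")
    return gap

group = ["a", "a", "a", "a", "b", "b", "b", "b"]
biased = [1, 1, 1, 0, 1, 0, 0, 0]  # group a approved 75% of the time, group b 25%
try:
    fairness_gate(biased, group)
except ValueError as err:
    print(err)  # Demographic parity gap 0.50 exceeds 0.1
```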

Conclusion: Navigating the New Frontier

The Future of Data Science is not a distant horizon; it is unfolding now. It paints a picture of a field that is more accessible, more powerful, and more deeply integrated into the fabric of our world than ever before. The role of the data scientist will not become obsolete but will be elevated—from a technical specialist to a strategic visionary, an ethical guardian, and an orchestrator of intelligent systems.

The professionals who will thrive are those who embrace continuous learning, cultivate strategic business understanding, and champion the responsible use of data. The next decade will be defined by our ability to act on these trends, transforming the vast ocean of data into a force for unprecedented progress and insight.