Discover the top ETL tools and techniques of 2025. Learn how to extract, transform, and load data efficiently for analytics and data science success.

Have you ever tried to cook a big, complicated meal for a party? You spend hours beforehand—chopping vegetables, marinating meat, and prepping sauces. You do all this work in your kitchen before your guests arrive, so when it’s time to eat, everything is ready to go.

For decades, this is how companies have handled their data. This process is called ETL, which stands for Extract, Transform, Load.

But what if you had a different option? What if you could just bring all the raw ingredients straight to the table and let your guests assemble their own perfect plates? This new, more flexible approach is called ELT.

In today’s world, data isn’t just for scheduled reports anymore. It’s for real-time dashboards, instant recommendations, and powerful AI. The old ways of moving data are being challenged. In this guide, we’re going to explore both ETL and ELT. We’ll break down what they are, why the shift is happening, and how you can build data workflows that are not just faster, but smarter and more reliable than ever before.

What is ETL? (The Traditional Kitchen)

Let’s start with the classic. ETL is a three-step process for moving data from one place to another.

The ETL Process:

- Extract: You pull data out of its source. This could be a customer database, a CRM like Salesforce, website log files, or a payment processor.

  - Example: Your script connects to your online store’s database and pulls out all the sales transactions from the last 24 hours.

- Transform: This is the “kitchen prep” work. You clean and reshape the data to make it useful. This happens before the data reaches its final destination. Common transformations include:

  - Cleaning: Fixing typos, standardizing formats (e.g., changing “NY,” “New York,” and “N.Y.” to “New York”).

  - Joining: Combining the sales data with your customer table to get a complete view.

  - Aggregating: Calculating daily totals, averages, or counts.

  - Filtering: Removing unnecessary columns or rows that aren’t needed for analysis.

- Load: You write the final, transformed, and clean data into a target system, usually a data warehouse or a data mart. This is where business analysts can access it to build reports and dashboards.
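The three steps can be sketched in a few lines of Python. This is a minimal illustration, not a production pipeline: two in-memory SQLite databases stand in for the source system and the warehouse, and the `sales` table, the `sales_by_state` output table, and the `STATE_ALIASES` mapping are all hypothetical. The point to notice is that cleaning, filtering, and aggregating happen in the pipeline’s own code, before anything is written to the target.

```python
import sqlite3
from collections import defaultdict

# Hypothetical lookup used by the cleaning step.
STATE_ALIASES = {"NY": "New York", "N.Y.": "New York"}


def extract(source: sqlite3.Connection) -> list[tuple]:
    """Extract: pull raw sales rows out of the source database."""
    return source.execute("SELECT order_id, state, amount FROM sales").fetchall()


def transform(rows: list[tuple]) -> list[tuple]:
    """Transform: clean, filter, and aggregate outside the warehouse."""
    totals: dict[str, float] = defaultdict(float)
    for _order_id, state, amount in rows:
        if amount is None:  # Filtering: drop rows that can't be analyzed
            continue
        name = state.strip()
        totals[STATE_ALIASES.get(name, name)] += amount  # Cleaning + Aggregating
    return sorted(totals.items())


def load(target: sqlite3.Connection, rows: list[tuple]) -> None:
    """Load: write only the finished, clean table into the warehouse."""
    target.execute(
        "CREATE TABLE IF NOT EXISTS sales_by_state (state TEXT PRIMARY KEY, total REAL)"
    )
    target.executemany("INSERT OR REPLACE INTO sales_by_state VALUES (?, ?)", rows)
    target.commit()


if __name__ == "__main__":
    source = sqlite3.connect(":memory:")
    source.execute("CREATE TABLE sales (order_id INTEGER, state TEXT, amount REAL)")
    source.executemany(
        "INSERT INTO sales VALUES (?, ?, ?)",
        [(1, "NY", 20.0), (2, "N.Y.", 5.0), (3, "New York ", 10.0), (4, "CA", None)],
    )
    warehouse = sqlite3.connect(":memory:")
    load(warehouse, transform(extract(source)))
    print(warehouse.execute("SELECT * FROM sales_by_state").fetchall())
    # [('New York', 35.0)]
```

Only the aggregated table reaches the warehouse; the raw rows (including the one with a missing amount) are never stored there.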

The Big Idea of ETL: The data is perfectly shaped and ready for consumption the moment it lands in the warehouse. It’s like serving a fully plated meal.

What is ELT? (The Modern Buffet)

Now, let’s talk about the newer approach that’s gaining massive traction. ELT stands for Extract, Load, Transform.

Notice the switch? The “Transform” step happens after the data is loaded.

The ELT Process:

- Extract: Same as before. You pull data from its source systems.

- Load: You immediately dump the raw, unprocessed data into a powerful, scalable cloud data warehouse like Snowflake, Amazon Redshift, or Google BigQuery. You load it exactly as it is, with all its messiness.

- Transform: The transformation happens inside the data warehouse itself, using its immense computing power. Analysts and data engineers write SQL queries to clean and shape the data on-demand, right where it sits.
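Here is the same toy sales data handled the ELT way, as a sketch under the same assumptions (SQLite playing the role of a cloud warehouse; the `raw_sales` table and the `stg_sales` and `sales_by_state` views are hypothetical names). The raw rows are loaded untouched, and the cleaning and aggregation live in SQL views inside the warehouse, similar in spirit to how dbt models are layered.

```python
import sqlite3

# SQLite stands in for a cloud warehouse in this sketch.
warehouse = sqlite3.connect(":memory:")

# Extract + Load: land the raw rows exactly as they arrive, messiness included.
warehouse.execute("CREATE TABLE raw_sales (order_id INTEGER, state TEXT, amount REAL)")
warehouse.executemany(
    "INSERT INTO raw_sales VALUES (?, ?, ?)",
    [(1, "NY", 20.0), (2, "N.Y.", 5.0), (3, "New York ", 10.0), (4, "CA", None)],
)

# Transform, step 1: a cleaned "staging" view over the raw table.
warehouse.execute("""
    CREATE VIEW stg_sales AS
    SELECT order_id,
           CASE WHEN TRIM(state) IN ('NY', 'N.Y.') THEN 'New York'
                ELSE TRIM(state) END AS state,
           amount
    FROM raw_sales
    WHERE amount IS NOT NULL
""")

# Transform, step 2: an aggregate built on top of the staging view.
warehouse.execute("""
    CREATE VIEW sales_by_state AS
    SELECT state, SUM(amount) AS total
    FROM stg_sales
    GROUP BY state
""")

print(warehouse.execute("SELECT * FROM sales_by_state").fetchall())
# [('New York', 35.0)]
print(warehouse.execute("SELECT COUNT(*) FROM raw_sales").fetchone())
# (4,)  <- the raw rows are still there to re-transform later
```

Because the views are just SQL over `raw_sales`, changing a business rule means editing a view, not re-running an extraction.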

The Big Idea of ELT: You get the raw ingredients into the pantry first. You figure out the recipes later. This is the “buffet” approach—you bring all the food out, and people can take and combine what they want.

ETL vs. ELT: A Side-by-Side Comparison

So, which one is better? The answer, as always, is “it depends.” Let’s look at the key differences.

| Feature | ETL (Traditional) | ELT (Modern) |
|---|---|---|
| Transformation Location | Happens on a separate processing server. | Happens inside the target data warehouse. |
| Data Stored | Only cleaned, structured data. | All data: raw, cleaned, structured, and unstructured. |
| Flexibility | Rigid. Schema is defined upfront. Changes are hard. | Highly flexible. You can re-transform data anytime. |
| Speed to Insight | Slower for initial loads (transformation is a bottleneck). | Faster for initial data availability. |
| Ideal For | Structured data, compliance-heavy environments (e.g., finance). | Big data, unstructured data, agile exploration, cloud-native setups. |
| Cost | Can be high for processing power and maintenance. | Uses the warehouse’s compute; you typically pay for the compute your queries consume. |

The Supermarket Analogy

- ETL is like a pre-packaged meal delivery service. They send you a box with perfectly chopped, pre-measured ingredients and a recipe. It’s convenient and consistent, but you can’t easily make a different dish.

- ELT is like shopping at a massive wholesale store like Costco. You buy everything in bulk—whole vegetables, giant packs of meat. It’s up to you to decide later if you want to make a stew, a salad, or a roast. It’s more flexible and great for feeding a large, diverse group.

Why the Shift to ELT is Accelerating in 2025

The move towards ELT isn’t just a trend; it’s a response to how data is used today. Here are the key drivers:

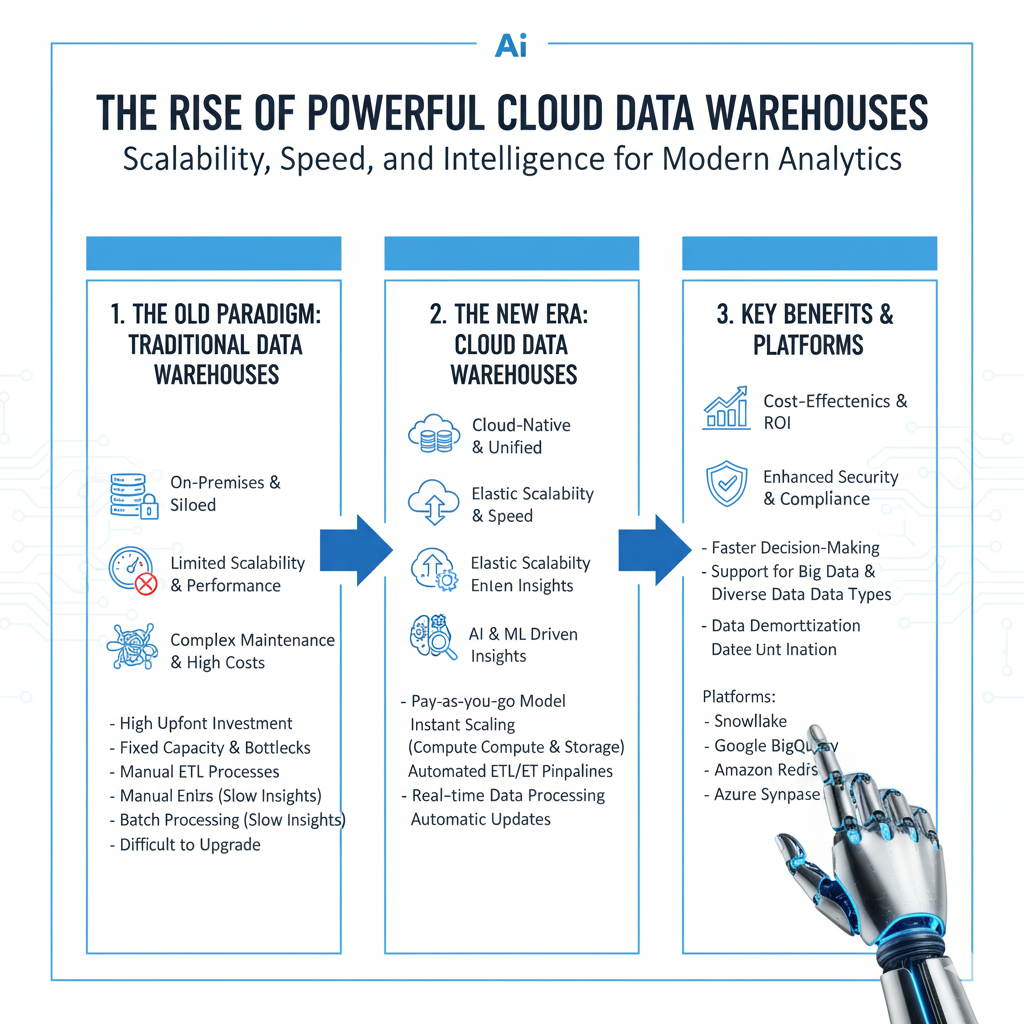

1. The Rise of Powerful Cloud Data Warehouses

The single biggest reason for ELT’s popularity is technology. Modern cloud data warehouses like Snowflake, BigQuery, and Redshift scale compute on demand and can scan terabytes of data in seconds to minutes. A decade ago, transforming data inside the warehouse would have been painfully slow and expensive. Now, it’s not only possible but efficient. The transformation engine is no longer a separate middleman server; it’s built right into the destination.

2. The Need for Speed and Agility

In the ETL model, if a business user needs a new metric, a data engineer often has to redesign the entire transformation pipeline. This can take days or weeks. With ELT, the raw data is already there. An analyst can often write a SQL query to create that new metric in an afternoon. This agility is crucial for businesses that need to make fast decisions.

3. The Explosion of Data Variety

ETL was designed for structured data that fits neatly into tables. But today, by widely cited industry estimates, 80% or more of data is unstructured or semi-structured: social media posts, sensor data, video files, and JSON logs from web apps. Traditional ETL struggles with this “messy” data because it needs a fixed schema before anything is loaded. ELT embraces it. You can load a JSON file as-is into your data lake or warehouse and use the warehouse’s power to parse and understand it later.

4. The “Data Lakehouse” Architecture

A new pattern is emerging: the Data Lakehouse. It combines the low-cost, flexible storage of a data lake (which holds raw data) with the management and performance features of a data warehouse. ELT is the natural process for a Lakehouse. You Extract data, Load it into the data lake, and then Transform it for use in the warehouse layer.

Building Faster, Smarter, and More Reliable Workflows

Whether you choose ETL or ELT, the goal is the same: to build a workflow you can trust. Here’s how to do it in 2025.

For SPEED: Embrace Automation and Streamlining

- Use Managed Services: Don’t build and maintain your own ETL servers. Use cloud-native tools like AWS Glue, Azure Data Factory, or Fivetran. They are fully managed (AWS Glue and Azure Data Factory are serverless), so they scale with your workload and bill based on usage rather than on servers you run yourself.

- Adopt Change Data Capture (CDC): Instead of dumping entire tables every night (which is slow), use CDC tools. They identify and move only the data that has changed since the last run. This makes your pipelines much faster and more efficient.

- Process Data in Micro-Batches: For near-real-time needs, don’t wait 24 hours. Run smaller batches every 15 minutes or every hour to get fresher data.
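Log-based CDC tools read the source database’s transaction log, which is beyond a short example. A simpler cousin that captures the same idea, moving only what changed, is a timestamp “high-watermark” query. The sketch below assumes a hypothetical `orders` table whose `updated_at` column is set on every write and stored as ISO-8601 text, so string comparison matches time order. Scheduling it every 15 minutes would give you micro-batches.

```python
import sqlite3


def extract_changes(conn: sqlite3.Connection, last_watermark: str) -> tuple[list[tuple], str]:
    """Return rows changed after last_watermark, plus the new watermark.

    Timestamp-based incremental extraction, not log-based CDC: it assumes
    every write updates `updated_at`, and it cannot see hard deletes.
    """
    rows = conn.execute(
        "SELECT order_id, amount, updated_at FROM orders "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_watermark,),
    ).fetchall()
    # Advance the watermark to the newest change seen; keep it if nothing changed.
    new_watermark = rows[-1][2] if rows else last_watermark
    return rows, new_watermark


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (order_id INTEGER, amount REAL, updated_at TEXT)")
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?, ?)",
        [(1, 10.0, "2025-01-01T00:00:00"), (2, 15.0, "2025-01-01T00:10:00")],
    )
    batch, wm = extract_changes(conn, "1970-01-01T00:00:00")  # first run: everything
    print(len(batch), wm)  # 2 2025-01-01T00:10:00

    conn.execute(
        "UPDATE orders SET amount = 12.0, updated_at = '2025-01-01T00:20:00' "
        "WHERE order_id = 1"
    )
    batch, wm = extract_changes(conn, wm)  # next micro-batch: only the changed row
    print(batch)  # [(1, 12.0, '2025-01-01T00:20:00')]
```

This approach depends on `updated_at` being set reliably and cannot see hard deletes; log-based CDC avoids both limitations.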

For SMARTER Workflows: Infuse Intelligence

- Data Quality as Code: Don’t just hope your data is clean. Build checks directly into your pipeline. For example, a rule could be: “The `customer_email` field must contain an ‘@’ symbol. If it doesn’t, send this record to a quarantine table for investigation.” Tools like Great Expectations and dbt (data build tool) are fantastic for this.

- Leverage dbt (data build tool): This tool has revolutionized the T in ELT. It allows you to manage data transformations as code, using SQL. You can write tests, document your models, and create a dependency graph, making the entire transformation process transparent, version-controlled, and reliable.

- Use Metadata-Driven Pipelines: Instead of hardcoding connections for every data source, create a smart pipeline that reads a metadata table. This table tells the pipeline “here are the sources to pull from, here are the rules for each one.” Adding a new data source becomes as simple as adding a new row to a table.
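The data-quality and metadata-driven ideas combine naturally. The sketch below is plain Python, not Great Expectations or dbt test syntax: a hypothetical `SOURCES` list plays the role of the metadata table, each source names the rules it must pass, and records that fail go to a quarantine list instead of the warehouse. All source, field, and rule names are illustrative.

```python
from typing import Callable

# Hypothetical rule registry: rule name -> check applied to one record.
RULES: dict[str, Callable[[dict], bool]] = {
    "email_has_at": lambda r: "@" in (r.get("customer_email") or ""),
    "amount_non_negative": lambda r: (r.get("amount") or 0) >= 0,
}

# Hypothetical metadata table: one row per source. Adding a source = adding a row.
SOURCES = [
    {"name": "crm_customers", "rules": ["email_has_at"]},
    {"name": "store_orders", "rules": ["amount_non_negative"]},
]


def apply_rules(meta: dict, records: list[dict]) -> tuple[list[dict], list[dict]]:
    """Split records into (clean, quarantined) using the source's configured rules."""
    clean, quarantined = [], []
    for record in records:
        failed = [rule for rule in meta["rules"] if not RULES[rule](record)]
        if failed:
            # Keep the bad record, tagged with why it failed, for investigation.
            quarantined.append({**record, "_failed_rules": failed})
        else:
            clean.append(record)
    return clean, quarantined


if __name__ == "__main__":
    fetched = {  # stand-in for what each source's extractor would return
        "crm_customers": [{"customer_email": "ana@example.com"},
                          {"customer_email": "bob.example.com"}],
        "store_orders": [{"amount": 30.0}],
    }
    for meta in SOURCES:  # the loop never changes; only the metadata does
        clean, quarantined = apply_rules(meta, fetched[meta["name"]])
        print(meta["name"], len(clean), "loaded,", len(quarantined), "quarantined")
    # crm_customers 1 loaded, 1 quarantined
    # store_orders 1 loaded, 0 quarantined
```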

For RELIABILITY: Build for the Real World

Things will go wrong. A reliable pipeline expects this.

- Implement Robust Error Handling: Your pipeline shouldn’t just crash and burn. It should:

  - Retry: If an API is temporarily down, it should wait and try again.

  - Alert: If a failure persists, it should send a message to a Slack channel or create a ticket in your project management tool.

  - Graceful Degradation: If one source fails, the rest of the pipeline should keep running.

- Comprehensive Logging and Monitoring: Every step of your pipeline should write a log. You should be able to see:

  - How many records were processed?

  - How long did each step take?

  - Did any data quality checks fail?

  Tools like Datadog or Grafana can turn these logs into beautiful dashboards.

- Design for Idempotency: This is a fancy word for a simple idea: running your pipeline multiple times should have the same result as running it once. If your job fails halfway and you restart it, it shouldn’t create duplicate records. This is crucial for reliability.
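Three of these reliability habits fit in one small sketch: retries with exponential backoff, graceful degradation when a source keeps failing, and an idempotent load that replaces a whole (source, day) partition inside one transaction, so a rerun overwrites rather than duplicates. The `daily_sales` table, the source callables, and the backoff settings are assumptions for illustration, and the log lines stand in for the kind of record counts a monitoring tool could chart.

```python
import logging
import sqlite3
import time
from typing import Callable

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("pipeline")


def with_retries(fn: Callable[[], list], attempts: int = 3, base_delay: float = 1.0) -> list:
    """Call fn(); on failure wait base_delay * 2**n seconds and try again."""
    for attempt in range(attempts):
        try:
            return fn()
        except Exception as exc:  # real code should catch only transient errors
            if attempt == attempts - 1:
                raise  # persistent failure: let the caller decide what to do
            delay = base_delay * 2 ** attempt
            log.warning("attempt %d failed (%s); retrying in %.2fs", attempt + 1, exc, delay)
            time.sleep(delay)
    raise ValueError("attempts must be at least 1")


def load_partition(conn: sqlite3.Connection, source: str, day: str, rows: list) -> None:
    """Idempotent load: delete and rewrite one (source, day) partition atomically."""
    with conn:  # commits on success, rolls back if anything inside fails
        conn.execute("DELETE FROM daily_sales WHERE source = ? AND day = ?", (source, day))
        conn.executemany(
            "INSERT INTO daily_sales VALUES (?, ?, ?, ?)",
            [(source, day, order_id, amount) for order_id, amount in rows],
        )
    log.info("%s/%s: loaded %d rows", source, day, len(rows))


def run(sources: dict, conn: sqlite3.Connection, day: str, base_delay: float = 1.0) -> list[str]:
    """Process every source; a failing source is logged and skipped, not fatal."""
    failed = []
    for name, fetch in sources.items():
        try:
            rows = with_retries(fetch, base_delay=base_delay)
        except Exception:
            # A real pipeline would alert here (Slack message, ticket).
            log.error("%s failed after retries", name)
            failed.append(name)
            continue
        load_partition(conn, name, day, rows)
    return failed
```

Deleting and re-inserting the partition in a single transaction is what makes a restart safe: a job that dies halfway leaves the old partition intact, and a rerun replaces it instead of appending duplicates.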

A Real-World Example: ETL vs. ELT in Action

Scenario: An e-commerce company wants to analyze customer behavior by combining sales data with website clickstream data.

The ETL Approach:

- Extract: Pull data from the SQL database (sales) and the website application logs (clicks).

- Transform: On a separate server, run a complex script that:

  - Parses the messy JSON clickstream data.

  - Joins the click data with the sales data using a `user_id`.

  - Filters out bot traffic.

  - Aggregates everything into a clean “customer_journey” table.

- Load: Load the final “customer_journey” table into the data warehouse.

Problem: The marketing team now wants to analyze the click data in a new way that you didn’t anticipate. You have to go back and change the entire transformation script.

The ELT Approach:

- Extract: Pull data from the same sources.

- Load: Dump the raw sales data and the raw JSON clickstream files directly into the cloud data warehouse.

- Transform: Inside the warehouse, use SQL to create the “customer_journey” view. The raw data stays in the warehouse alongside it.

Advantage: The marketing team can directly query the raw clickstream data to answer their new question. They don’t need to wait for the data engineering team. The raw data is available for any future, unplanned analysis.
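The ELT side of this scenario can be sketched in SQL, again with SQLite standing in for the warehouse. Everything here is illustrative: the table and column names are hypothetical, clicks are stored as already-parsed rows to keep the sketch portable (a real warehouse could parse the raw JSON itself), and “bot traffic” is approximated by a crude user-agent match. The last query shows the advantage: a new marketing question runs directly against the raw clicks, with no pipeline change.

```python
import sqlite3

wh = sqlite3.connect(":memory:")  # stand-in for the cloud warehouse

# Load: raw tables land as-is.
wh.execute("CREATE TABLE raw_sales (user_id INTEGER, order_id INTEGER, amount REAL)")
wh.execute("CREATE TABLE raw_clicks (user_id INTEGER, page TEXT, user_agent TEXT)")
wh.executemany("INSERT INTO raw_sales VALUES (?, ?, ?)", [(1, 100, 40.0), (2, 101, 15.0)])
wh.executemany("INSERT INTO raw_clicks VALUES (?, ?, ?)", [
    (1, "/home", "Mozilla/5.0"), (1, "/shoes", "Mozilla/5.0"),
    (2, "/home", "Mozilla/5.0"), (3, "/home", "Googlebot/2.1"),
])

# Transform: the modeled view joins on user_id and filters out bot traffic.
wh.execute("""
    CREATE VIEW customer_journey AS
    SELECT c.user_id, COUNT(*) AS page_views, s.total_spent
    FROM raw_clicks AS c
    LEFT JOIN (SELECT user_id, SUM(amount) AS total_spent
               FROM raw_sales GROUP BY user_id) AS s
           ON s.user_id = c.user_id
    WHERE c.user_agent NOT LIKE '%bot%'
    GROUP BY c.user_id, s.total_spent
""")
print(wh.execute("SELECT * FROM customer_journey ORDER BY user_id").fetchall())
# [(1, 2, 40.0), (2, 1, 15.0)]

# A new, unplanned question goes straight to the raw clicks.
top_pages = wh.execute("""
    SELECT page, COUNT(*) AS views
    FROM raw_clicks
    WHERE user_agent NOT LIKE '%bot%'
    GROUP BY page
    ORDER BY views DESC, page
""").fetchall()
print(top_pages)
# [('/home', 2), ('/shoes', 1)]
```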

Frequently Asked Questions (FAQs)

Q1: Is ETL dead? Should I completely switch to ELT?

Not at all! ETL is far from dead. It’s still the right choice for many scenarios, especially when dealing with highly sensitive data that needs to be masked or anonymized before loading, or in very structured, regulated industries where the final output must be strictly controlled. Think of ELT as an additional, powerful tool in your toolbox, not a total replacement.
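Masking before loading is a transformation that genuinely has to happen in the pipeline, since the point is that raw values never land in the warehouse. One common technique is keyed hashing (HMAC), sketched here with Python’s standard library. The `SENSITIVE_FIELDS` list and the key are hypothetical; in practice the key would come from a secrets manager.

```python
import hashlib
import hmac

# Hypothetical secret; in practice load it from a secrets manager, never from code.
PSEUDONYM_KEY = b"replace-with-a-managed-secret"

SENSITIVE_FIELDS = ("email", "phone")  # hypothetical list of fields to mask


def mask_record(record: dict) -> dict:
    """Replace sensitive values with a keyed hash before the record is loaded."""
    masked = dict(record)  # leave the caller's record untouched
    for field in SENSITIVE_FIELDS:
        value = masked.get(field)
        if value is not None:
            # Normalize first so "Ana@Example.com " and "ana@example.com" match.
            normalized = value.strip().lower().encode()
            masked[field] = hmac.new(PSEUDONYM_KEY, normalized, hashlib.sha256).hexdigest()
    return masked
```

Because the same input always produces the same token, masked columns still work as join keys and for counting distinct customers. This is pseudonymization rather than anonymization: anyone holding the key can recompute tokens for guessed inputs, so the key needs the same protection as the data.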

Q2: What is the role of a data engineer in an ELT world?

It’s evolving, not disappearing. Instead of spending all their time building and maintaining complex transformation code, data engineers in an ELT world focus on:

- Data Governance: Ensuring data quality, security, and compliance.

- Infrastructure: Managing the cloud data platform and tools.

- Orchestration: Building and monitoring the reliable flow of data.

- Enabling Others: Creating the frameworks and tools that allow data analysts and scientists to do their own transformations safely and efficiently.

Q3: We have a legacy on-premise data warehouse. Can we do ELT?

It’s very difficult. The core of ELT is the power and scalability of the target system. Traditional on-premise data warehouses often lack the computing power to transform massive amounts of raw data efficiently. A successful move to ELT usually goes hand-in-hand with a migration to a modern cloud data platform.

Q4: What is “Reverse ETL”?

This is another modern concept. Once you have all your clean, transformed data in your warehouse (ELT), you need to get it back out to business applications like Salesforce, HubSpot, or your marketing automation tool. Reverse ETL is this process. It syncs insights from the warehouse back into the operational tools that teams use every day, closing the data loop.
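At its core, a Reverse ETL sync reads rows from a warehouse model and pushes the ones that changed to an operational tool. In this sketch, `upsert_contact` is a hypothetical stand-in for whatever client call your CRM provides, and `last_synced` holds what was sent last time so unchanged records are skipped, which keeps API calls down. Dedicated Reverse ETL products typically add batching, rate-limit handling, and field mapping on top of this idea.

```python
from typing import Callable


def reverse_etl_sync(
    warehouse_rows: list[dict],
    last_synced: dict[str, dict],
    upsert_contact: Callable[[dict], None],
) -> int:
    """Push new or changed rows to an operational tool; return how many were sent.

    `upsert_contact` is a hypothetical CRM client call. `last_synced` maps a
    record key (here, email) to the payload sent on the previous run.
    """
    sent = 0
    for row in warehouse_rows:
        key = row["email"]
        payload = {"email": key, "lifetime_value": row["lifetime_value"]}
        if last_synced.get(key) != payload:  # new or changed since last sync
            upsert_contact(payload)
            last_synced[key] = payload
            sent += 1
    return sent
```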

Q5: We’re hearing a lot about “data contracts.” How do they fit into ETL and ELT workflows?

This is a brilliant question that gets to the heart of modern data team collaboration. Think of a data contract as a formal handshake agreement between the team that produces data (e.g., the software engineering team that builds the app) and the team that consumes it (e.g., the data analytics team).

The “What” and “Why”:

A data contract is a documented agreement that specifies the structure, semantics, and quality guarantees of a data source. It answers questions like:

- What are the exact field names and data types (e.g., `user_id` is an integer, not a string)?

- What does each field actually mean? (e.g., `last_login` is in the UTC timezone).

- What are the service level agreements (SLAs)? (e.g., This data stream will have 99.9% uptime).

- What are the rules for schema changes? (e.g., You can’t delete a column without a 30-day warning).

How it Fits with ETL/ELT:

- In an ETL World: Data contracts are a lifesaver for the “Transform” stage. When the data engineering team knows exactly what to expect from the source, they can build their transformation logic with confidence. A breaking change in the source (like a column rename) won’t silently break the pipeline and corrupt the data warehouse. Instead, it violates the contract, triggering an alert before the faulty data is ever transformed.

- In an ELT World: Contracts are arguably even more critical. Because you are loading raw data directly into the warehouse, you have less control upfront. A schema change in the source system could cause your downstream SQL transformations (and all the reports that depend on them) to fail spectacularly. A data contract acts as an enforced quality gate, ensuring that the data being loaded meets the basic expectations of the consumers who will be transforming it.
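A contract is most useful when it is machine-checkable. The sketch below encodes a hypothetical contract for an orders feed as Python dataclasses and checks incoming records against it before loading, raising an error the pipeline can turn into an alert. Real contract tooling usually works from a shared schema file (for example JSON Schema, Avro, or Protobuf) rather than hand-written classes; the field names here simply mirror the examples in this section.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FieldSpec:
    name: str
    expected_type: type
    required: bool = True


# Hypothetical contract for an orders feed, agreed by producer and consumer.
ORDERS_CONTRACT = (
    FieldSpec("user_id", int),
    FieldSpec("product_name", str),
    FieldSpec("last_login", str, required=False),  # ISO-8601 in UTC, by agreement
)


class ContractViolation(Exception):
    """Raised so the pipeline can alert instead of loading bad data."""


def check_contract(records: list[dict], contract: tuple[FieldSpec, ...]) -> None:
    """Fail fast if any record breaks the agreed field names or types."""
    for i, record in enumerate(records):
        for spec in contract:
            value = record.get(spec.name)
            if value is None:
                if spec.required:
                    raise ContractViolation(f"record {i}: missing required field {spec.name!r}")
                continue
            if not isinstance(value, spec.expected_type):
                raise ContractViolation(
                    f"record {i}: {spec.name!r} should be {spec.expected_type.__name__}, "
                    f"got {type(value).__name__}"
                )
```

With a check like this at the gate, a rename such as `product_name` to `item_title` fails loudly as a missing required field instead of flowing silently into downstream models.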

Real-Life Example:

The “Product Search” team plans to change the field `product_name` to `item_title` in their application database. Because of a data contract, they can’t just deploy this change. The contract forces them to notify the data team. Together, they agree on a transition period where both fields are provided, giving the data team time to update all their dbt models and dashboards without any service interruption.

Q6: What is the difference between “orchestration” and “transformation,” and why does it matter?

This is a common point of confusion, but understanding the distinction is key to building professional, reliable data pipelines. Think of it like building a car:

- Transformation is the Engine Work. This is the actual processing of the data itself. It’s the business logic: the code that cleans a string, joins two tables, or calculates an average. It’s what you do to the data. Using our earlier analogies, transformation is the “cooking” or the “prep work.”

  - Tools: dbt, custom SQL scripts, Python (pandas) code.

- Orchestration is the Assembly Line. This doesn’t touch the data itself. Instead, it controls the workflow. It answers the questions: What task runs first? If task A fails, what should happen? Can tasks B and C run at the same time? It’s the when, how, and in what order things happen.

  - Tools: Apache Airflow, Dagster, Prefect, AWS Step Functions.

Why the Separation Matters:

- Specialization: Data analysts might be experts at transformation (writing complex SQL in dbt) but don’t need to worry about the engineering complexity of orchestration (setting up servers and managing task dependencies). Data engineers can own the robust orchestration framework that everyone uses.

- Reliability: A good orchestrator like Airflow can retry a failed transformation task, send alert emails, and ensure that a task doesn’t run until its dependencies (e.g., “wait for the data to be loaded first”) are met. The transformation tool (dbt) focuses on doing its job correctly, while the orchestrator focuses on ensuring the job gets done reliably.

- Visibility: An orchestrator provides a single pane of glass to see the status of your entire data pipeline—what’s running, what succeeded, and what failed. The transformation tool provides logs about what it did during its specific task.

A Simple Pipeline Breakdown:

An orchestrated workflow might look like this:

- Task 1 (Orchestrated): `extract_data.py` runs. (Orchestrator: “Start this task at 2 AM.”)

- Task 2 (Orchestrated): `load_raw_to_s3.py` runs only if Task 1 succeeds. (Orchestrator: “Now run this, but wait for Task 1 to finish.”)

- Task 3 (Transformation): `dbt run` is executed by the orchestrator. This is where the actual data transformation happens: dbt runs your SQL models inside the warehouse.

- Task 4 (Orchestrated): `send_success_slack_message.py` runs only if Task 3 succeeds. (Orchestrator: “Everything worked, notify the team.”)

In this setup, the orchestrator is the conductor, and the transformation tools are the musicians. Both are essential to making beautiful music (reliable data).
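To make the division of labor concrete, here is a toy orchestrator in plain Python. It only does the ordering part of the job: run tasks in dependency order and skip anything downstream of a failure. Real orchestrators such as Airflow, Dagster, or Prefect add scheduling, retries, parallelism, and a UI. The task names mirror the breakdown above, and each task body is a hypothetical placeholder; in a real setup the transformation task might shell out to the `dbt run` command.

```python
from graphlib import TopologicalSorter
from typing import Callable


def run_pipeline(
    tasks: dict[str, Callable[[], None]],
    depends_on: dict[str, set[str]],
) -> dict[str, str]:
    """Run tasks in dependency order; skip anything downstream of a failure."""
    status: dict[str, str] = {}
    for name in TopologicalSorter(depends_on).static_order():
        if any(status.get(dep) != "success" for dep in depends_on.get(name, set())):
            status[name] = "skipped"  # an upstream task failed or was skipped
            continue
        try:
            tasks[name]()  # the orchestrator only calls the task; the task does the work
            status[name] = "success"
        except Exception:
            status[name] = "failed"
    return status


if __name__ == "__main__":
    def failing_dbt_run() -> None:
        raise RuntimeError("a dbt model failed")  # placeholder for `dbt run`

    tasks = {
        "extract_data": lambda: print("extracting"),
        "load_raw_to_s3": lambda: print("loading raw files"),
        "dbt_run": failing_dbt_run,
        "send_success_slack_message": lambda: print("notifying team"),
    }
    depends_on = {
        "extract_data": set(),
        "load_raw_to_s3": {"extract_data"},
        "dbt_run": {"load_raw_to_s3"},
        "send_success_slack_message": {"dbt_run"},
    }
    print(run_pipeline(tasks, depends_on))
```

Because the transformation failed, the success message is skipped rather than sent, which is exactly the “only if Task 3 succeeds” rule from the breakdown.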

Conclusion: The Future is Flexible

The journey from ETL to ELT is a story of empowerment. It’s about moving from a rigid, centralized process to a flexible, collaborative one. It’s about trusting the raw data and leveraging modern technology to ask questions we hadn’t even thought of yesterday.

The goal in 2025 isn’t to pick one over the other dogmatically. The goal is to understand the strengths of each approach and build a data architecture that allows for both. You might use ETL for your critical financial data and ELT for your exploratory product analytics.

The most successful organizations will be the ones that can build data workflows that are not only fast but also intelligent and resilient. They will be the ones that can get raw data to the people who need it quickly and provide them with the tools to unlock its value.

So, take a look at your own data workflows. Are they stuck in the “kitchen prep” past? Or are they ready for the “buffet-style” future? By embracing the principles of modern data movement, you can ensure your data infrastructure is a catalyst for innovation, not a bottleneck.