Master the concepts of Deep Learning with our definitive guide. Explore neural networks, CNNs, RNNs, Transformers, and GANs. Learn the model building workflow, real-world applications, and future challenges of this revolutionary AI technology.


If you have ever conversed with a virtual assistant, been amazed by a computer’s ability to describe a photograph, or witnessed a car navigate city streets autonomously, you have experienced the power of Deep Learning. This transformative subfield of machine learning has catapulted artificial intelligence from a theoretical discipline into a practical force that is reshaping industries and redefining what is possible with technology. At its core, Deep Learning is inspired by the structure and function of the human brain, enabling machines to learn from data in a hierarchical and incredibly sophisticated manner.

This comprehensive guide will take you on a journey through the fascinating world of Deep Learning. We will break down its foundational concepts, explore the crucial role of neural network architectures, and examine its groundbreaking applications. We will also navigate the practical workflow of building a deep learning model, confront the unique challenges posed by this technology, and peer into its promising future. Understanding Deep Learning is no longer a niche skill but essential knowledge for anyone looking to grasp the trajectory of modern technology.

What is Deep Learning? The Brain-Inspired Model

Deep Learning is a subset of machine learning that utilizes artificial neural networks with multiple layers, known as “deep” networks. These layers enable the model to learn increasingly complex and abstract representations of data. Imagine a child learning to identify a cat. They don’t start with a definition; they start by seeing many examples. Their brain, a biological neural network, learns to recognize edges, then shapes, then patterns like fur and whiskers, and finally the complex concept of “cat.” A deep neural network operates on a similar principle.

While traditional machine learning models often rely on human experts to manually identify and engineer relevant features from the raw data, a key advantage of Deep Learning is its ability to perform automatic feature extraction. The model learns useful features directly from the data itself, layer by layer, making it exceptionally powerful for unstructured data like images, text, and sound.

Formally Defined:

The goal of a Deep Learning model is to approximate a complex function f* that maps input data X to output Y. It does this by stacking multiple layers of processing units (neurons), where each layer transforms the input and passes it to the next. The “depth” of the model, achieved through these many layers, allows it to learn a hierarchy of features, from simple to complex, driving its remarkable performance.
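Written as a formula, the stacking described above is a composition of functions. The notation is a common convention, not something defined elsewhere in this guide. Here $\theta$ collects all weights $W^{(\ell)}$ and biases $b^{(\ell)}$, $\sigma$ is an element-wise activation function, and $L$ is the number of layers:

$$
\hat{y} = f(x;\theta) = f^{(L)}\Big(f^{(L-1)}\big(\cdots f^{(1)}(x)\big)\Big),
\qquad
f^{(\ell)}(h) = \sigma\big(W^{(\ell)} h + b^{(\ell)}\big), \quad \ell = 1, \dots, L
$$

Training searches for parameters $\theta$ that make $f(x;\theta)$ a good approximation of $f^*(x)$ on the available data. In practice, the last layer usually replaces $\sigma$ with a task-specific function: softmax for classification, or the identity for regression.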

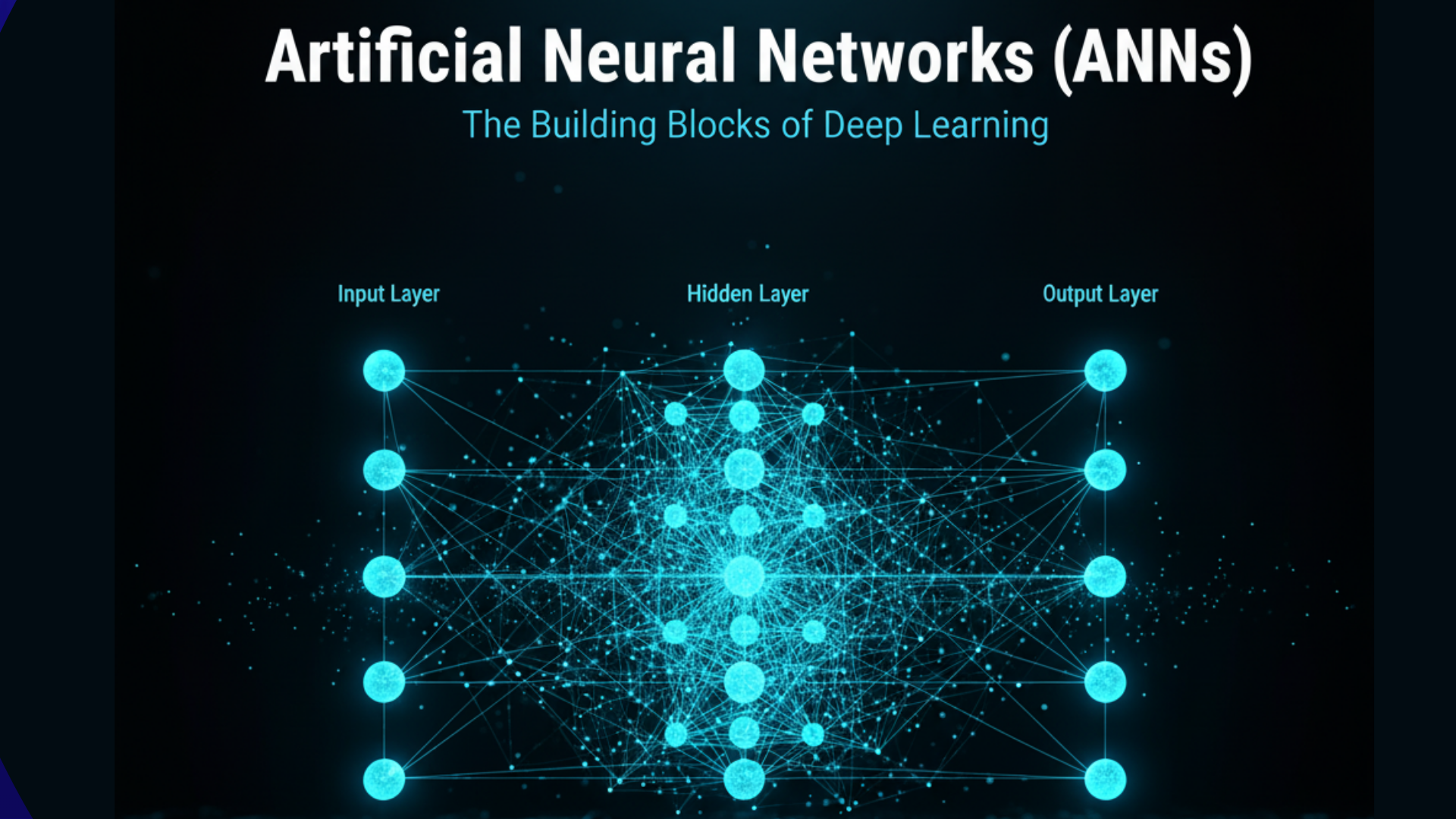

The Core Architecture: Artificial Neural Networks (ANNs)

The fundamental building block of all Deep Learning is the Artificial Neural Network. An ANN is a computational model loosely modeled after the dense network of neurons in the human brain.

- Artificial Neurons: The basic unit of an ANN. A neuron receives one or more inputs and multiplies each input by its own “weight”, which represents the strength of that connection. It then sums the results together with a bias term and passes the total through a non-linear “activation function” to produce an output. The historical perceptron is the special case that uses a step function as its activation.

- Layers: Neurons are organized in layers.

  - Input Layer: This is the first layer, which receives the raw input data.

  - Hidden Layers: These are the intermediate layers between input and output. A model is considered “deep” if it has multiple (sometimes hundreds of) hidden layers. Each layer learns to identify different levels of features.

  - Output Layer: The final layer that produces the prediction, such as a classification label or a continuous value.

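- Weights and Biases: These are the parameters the model learns during training. A weight determines how strongly one neuron’s output influences the next neuron. A bias shifts a neuron’s weighted sum, which lets the neuron activate even when all its inputs are zero.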

- Activation Functions: These functions (like ReLU, Sigmoid, or Tanh) introduce non-linearity into the network. Without them, the entire network would be a simple linear model, incapable of learning complex patterns.
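The following NumPy sketch makes the pieces above concrete. It shows a single neuron (weighted sum plus bias, then an activation) and a tiny three-layer forward pass. It also checks the claim that, without activations, stacked layers collapse into one linear layer. The layer sizes and random weights are arbitrary assumptions chosen for illustration, not values from this guide.

```python
import numpy as np

def relu(z):
    # ReLU activation: max(0, z), applied element-wise
    return np.maximum(0.0, z)

def identity(z):
    # "No activation": passes values through unchanged
    return z

def neuron(x, w, b, activation=relu):
    # One artificial neuron: weighted sum of inputs plus bias, then a non-linearity
    return activation(np.dot(w, x) + b)

def dense(h, W, b, activation=relu):
    # A fully connected layer is many neurons at once: activation(W @ h + b)
    return activation(W @ h + b)

rng = np.random.default_rng(0)
x = np.array([0.5, -1.2, 3.0])                   # input layer: 3 raw features
W1, b1 = rng.normal(size=(4, 3)), np.zeros(4)    # hidden layer: 4 neurons
W2, b2 = rng.normal(size=(1, 4)), np.zeros(1)    # output layer: 1 neuron

hidden = dense(x, W1, b1)                        # hidden representation (ReLU)
output = dense(hidden, W2, b2, identity)         # identity output, e.g. for regression

# Without activations, two stacked layers are equivalent to ONE linear layer:
# W2 @ (W1 @ x + b1) + b2 == (W2 @ W1) @ x + (W2 @ b1 + b2)
two_linear = dense(dense(x, W1, b1, identity), W2, b2, identity)
one_linear = (W2 @ W1) @ x + (W2 @ b1 + b2)
print(np.allclose(two_linear, one_linear))       # True
```

Inserting a non-linearity such as `relu` between the layers breaks this equivalence. That is what lets depth add expressive power.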

The Deep Learning Workflow: From Data to Deployment

Building an effective Deep Learning system involves a meticulous, iterative process.

- Data Acquisition and Preparation: The fuel for any Deep Learning model is data, and lots of it. This stage involves collecting a massive, high-quality dataset. Preparation is critical and includes:

  - Data Labeling: For supervised tasks, this is a monumental effort, often requiring human annotators.

  - Data Augmentation: Artificially expanding the dataset by creating modified versions of existing data (e.g., rotating images, altering audio pitch) to improve the model’s robustness and prevent overfitting.

  - Normalization/Standardization: Rescaling input features to a common range (normalization) or to zero mean and unit variance (standardization), which stabilizes and speeds up training.

- Model Architecture Selection: Choosing the right type of neural network is paramount. The choice depends largely on the problem domain, because different architectures are designed for different data types.

- Model Training – The Learning Process: This is the computationally intensive heart of Deep Learning.

  - Forward Propagation: Input data is passed through the network, layer by layer, to generate a prediction.

  - Loss Function Calculation: The model’s prediction is compared to the actual target using a loss function (e.g., Cross-Entropy for classification, MSE for regression). The loss quantifies how wrong the prediction was.

  - Backpropagation and Gradient Descent: This is where the learning happens. Backpropagation propagates the error backward through the network and uses the chain rule to compute the gradient (derivative) of the loss with respect to every weight. The gradient points in the direction in which the loss increases fastest. Gradient descent therefore moves each weight a small step in the opposite direction to reduce the error.

  - Optimization: An optimizer (such as SGD or Adam) uses these gradients to update the model’s weights, typically once per mini-batch of examples. Repeating this over many full passes through the training data (epochs) iteratively improves the model’s performance.

- Model Evaluation and Hyperparameter Tuning: Hyperparameters (e.g., learning rate, number of layers, number of neurons) are not learned during training. They are tuned manually or through automated search by comparing performance on a held-out validation set. Once tuning is finished, the final model is evaluated on a separate held-out test set, which gives an unbiased estimate of how it will perform on unseen data.

- Deployment and Inference: Once trained and validated, the model is deployed into a production environment where it can make predictions on new, real-world data. This phase, known as inference, must be optimized for speed and efficiency.
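The training steps above can be sketched end to end in plain NumPy. The sketch standardizes the data, splits off a validation set, and then loops through the forward pass, cross-entropy loss, backpropagation and weight updates. It makes several assumptions for illustration: a synthetic two-class dataset, a 2-8-1 network, a learning rate of 0.2 and 500 epochs. It uses plain full-batch gradient descent instead of Adam or mini-batches, and it shows no augmentation and no separate test set.

```python
import numpy as np

rng = np.random.default_rng(0)

# --- Data acquisition (synthetic stand-in) and preparation ---
Z = rng.normal(size=(400, 2))
y = (Z[:, 0] + Z[:, 1] > 0).astype(float).reshape(-1, 1)     # binary labels
X_raw = Z * np.array([10.0, 0.5]) + np.array([50.0, -3.0])     # features on very different scales

idx = rng.permutation(len(X_raw))
tr, va = idx[:300], idx[300:]                                  # train / validation split
mean, std = X_raw[tr].mean(axis=0), X_raw[tr].std(axis=0)      # statistics from training data only
X = (X_raw - mean) / std                                       # standardization
X_tr, y_tr, X_va, y_va = X[tr], y[tr], X[va], y[va]

# --- Architecture: 2 inputs -> 8 ReLU hidden units -> 1 sigmoid output ---
W1, b1 = rng.normal(scale=0.5, size=(2, 8)), np.zeros(8)
W2, b2 = rng.normal(scale=0.5, size=(8, 1)), np.zeros(1)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(X):
    z1 = X @ W1 + b1
    h = np.maximum(0.0, z1)                                    # hidden layer (ReLU)
    p = sigmoid(h @ W2 + b2)                                   # predicted P(y = 1)
    return z1, h, p

def bce(p, y, eps=1e-12):
    p = np.clip(p, eps, 1 - eps)                               # avoid log(0)
    return float(-np.mean(y * np.log(p) + (1 - y) * np.log(1 - p)))

# --- Training: forward pass, loss, backpropagation, gradient-descent update ---
lr, epochs, losses = 0.2, 500, []
for _ in range(epochs):                                        # full batch: one update per epoch
    z1, h, p = forward(X_tr)
    losses.append(bce(p, y_tr))
    d_logits = (p - y_tr) / len(X_tr)                          # dLoss/dlogits for sigmoid + BCE
    dW2, db2 = h.T @ d_logits, d_logits.sum(axis=0)
    d_z1 = (d_logits @ W2.T) * (z1 > 0)                        # chain rule through ReLU
    dW1, db1 = X_tr.T @ d_z1, d_z1.sum(axis=0)
    W1 -= lr * dW1                                             # step against the gradient
    b1 -= lr * db1
    W2 -= lr * dW2
    b2 -= lr * db2

# --- Evaluation on the held-out validation split ---
_, _, p_va = forward(X_va)
val_acc = float(np.mean((p_va > 0.5) == y_va))
print(f"train loss {losses[0]:.3f} -> {losses[-1]:.3f}, validation accuracy {val_acc:.2f}")
```

In a real project, hyperparameters such as `lr`, `epochs` and the hidden size would be compared on the validation split. The test set would be touched only once, at the end.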

A Tour of Essential Deep Learning Architectures

The versatility of Deep Learning stems from its specialized architectures, each engineered for specific data types and tasks.

1. Convolutional Neural Networks (CNNs)

CNNs have long been the dominant architecture for processing grid-like data, such as images.

- Key Features: They use mathematical operations called convolutions that apply filters across the image to detect features like edges, corners, and textures. Pooling layers then reduce the spatial size, making the model more efficient and robust to small variations.

- Hierarchical Feature Learning: Early layers learn simple features, while deeper layers combine them to recognize complex objects like faces, animals, or street signs.

- Applications:

  - Image Recognition and Classification (e.g., Google Photos)

  - Object Detection (e.g., self-driving cars)

  - Medical Image Analysis (e.g., detecting tumors in MRI scans)

  - Facial Recognition Systems
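The next sketch shows the two core CNN operations on a toy example: sliding a filter across an image, then max pooling. It assumes a 6x6 image with a single vertical edge and a hand-written 3x3 edge filter. In a trained CNN, the filter values would be learned rather than chosen by hand. As in most deep learning libraries, the "convolution" here is technically a cross-correlation, because the kernel is not flipped.

```python
import numpy as np

def conv2d(image, kernel):
    # "Valid" 2-D convolution as used in deep learning (cross-correlation, no kernel flip):
    # slide the filter over the image and take one dot product per position.
    kh, kw = kernel.shape
    out_h, out_w = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def max_pool(feature_map, size=2):
    # Non-overlapping max pooling: keep the strongest response in each size x size window
    h, w = feature_map.shape[0] // size, feature_map.shape[1] // size
    trimmed = feature_map[:h * size, :w * size]
    return trimmed.reshape(h, size, w, size).max(axis=(1, 3))

# A 6x6 toy image: dark left half (0), bright right half (1), so one vertical edge
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Hand-written vertical-edge filter; in a CNN these values would be learned
kernel = np.array([[-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0]])

features = np.maximum(0.0, conv2d(image, kernel))   # convolution + ReLU -> 4x4 feature map
pooled = max_pool(features)                          # 4x4 -> 2x2
print(features)   # every row is [0. 3. 3. 0.]: responds only where the window straddles the edge
print(pooled)     # [[3. 3.] [3. 3.]]
```

The same small filter is reused at every position (weight sharing), which is a large part of why CNNs are efficient on images.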

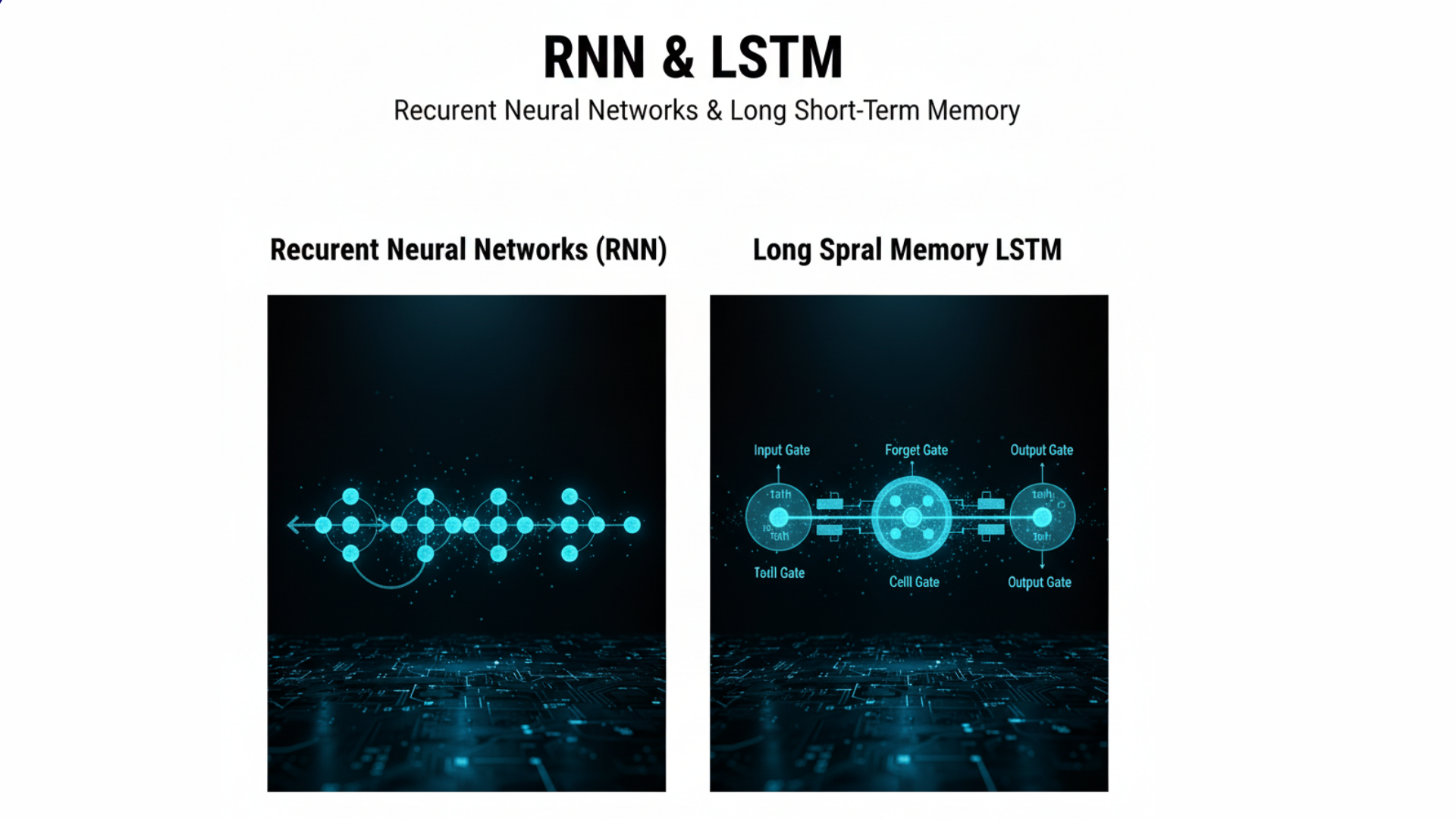

2. Recurrent Neural Networks (RNNs) and LSTMs

RNNs are designed for sequential data where the order matters, such as time series, speech, and text.

- Key Features: Unlike feedforward networks, RNNs have “memory” through internal loops. They process inputs one element at a time and maintain a hidden state that contains information about previous elements in the sequence.

- The Challenge of Vanishing Gradients: Basic RNNs struggle to learn long-range dependencies in sequences. The error signal is multiplied by the recurrent weights at every time step as it flows backward, so it tends to shrink exponentially over long sequences.

- Long Short-Term Memory (LSTM) Networks: A special, more complex kind of RNN that uses a gating mechanism to selectively remember or forget information over long periods, greatly mitigating the vanishing gradient problem.

- Applications:

  - Machine Translation (e.g., Google Translate)

  - Speech Recognition

  - Text Generation

  - Time Series Forecasting (e.g., stock market prediction)
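The sketch below is a basic (Elman-style) RNN in NumPy. It shows how the hidden state carries information forward and why gradients vanish. It multiplies the per-step Jacobians to measure how sensitive the final state is to the initial one. The sizes are illustrative assumptions, as is the recurrent matrix built with a spectral norm of exactly 0.5. Under that choice each step can shrink the signal by at least half, and with larger recurrent weights gradients can explode instead. LSTM gating is not shown.

```python
import numpy as np

rng = np.random.default_rng(1)
input_size, hidden_size, steps = 3, 5, 30

W_xh = rng.normal(scale=0.5, size=(hidden_size, input_size))
Q, _ = np.linalg.qr(rng.normal(size=(hidden_size, hidden_size)))   # random orthogonal matrix
W_hh = 0.5 * Q                   # recurrent weights with spectral norm 0.5 (illustrative choice)
b = np.zeros(hidden_size)

xs = rng.normal(size=(steps, input_size))   # a sequence of 30 input vectors
h = np.zeros(hidden_size)                   # initial hidden state ("memory")
jacobian = np.eye(hidden_size)              # accumulates d h_t / d h_0
grad_norms = []
for x_t in xs:                              # process one element at a time, in order
    h = np.tanh(W_xh @ x_t + W_hh @ h + b)  # new state depends on the input AND the previous state
    # Chain rule: d h_t / d h_{t-1} = diag(1 - h_t^2) @ W_hh
    jacobian = np.diag(1.0 - h**2) @ W_hh @ jacobian
    grad_norms.append(np.linalg.norm(jacobian, 2))   # largest singular value

print(h)                                    # final hidden state summarizing the whole sequence
print(grad_norms[0], grad_norms[-1])        # sensitivity to h_0 after 1 step vs. after 30 steps
```

Because `tanh` has a derivative of at most 1, each step here multiplies the sensitivity by at most 0.5. After 30 steps, the influence of the earliest state is below 0.5^30 (about 1e-9), which is the vanishing-gradient effect in miniature.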

3. Transformers

Since its introduction in 2017, the transformer architecture has revolutionized the field of natural language processing (NLP), largely replacing RNNs and LSTMs for many tasks.

- Key Features: Transformers rely on a mechanism called “self-attention”. When processing a particular word, it lets the model weigh the importance of every other word in the sentence, no matter how far apart they are. Attention over a whole sequence is computed at once rather than step by step, so training can be parallelized across the sequence. This makes transformers much more efficient to train on modern hardware than RNNs.

- Applications:

  - Large Language Models (LLMs) such as GPT-4, and earlier pretrained language models such as BERT

  - State-of-the-art machine translation

  - Text Summarization

  - Question-Answering Systems
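The self-attention step described above can be sketched as single-head scaled dot-product attention in NumPy. The sketch assumes random token embeddings and random projection matrices. It also omits what real transformers add on top: multiple heads, masking, and positional encodings (which supply word-order information that attention alone ignores).

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)      # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(X, W_q, W_k, W_v):
    # X: (seq_len, d_model), one row per token embedding
    Q, K, V = X @ W_q, X @ W_k, X @ W_v          # queries, keys, values
    d_k = K.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)              # (seq_len, seq_len): every token vs. every token
    weights = softmax(scores, axis=-1)           # each row sums to 1: how much to attend to each token
    return weights @ V, weights                  # weighted mix of values, all positions at once

rng = np.random.default_rng(0)
seq_len, d_model, d_k = 4, 8, 8
X = rng.normal(size=(seq_len, d_model))          # 4 token embeddings (random stand-ins)
W_q, W_k, W_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))

out, weights = self_attention(X, W_q, W_k, W_v)
print(out.shape)                 # (4, 8)
print(weights.sum(axis=1))       # [1. 1. 1. 1.]
```

All positions are handled by a few matrix multiplications instead of a step-by-step loop, which is why the computation parallelizes well.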

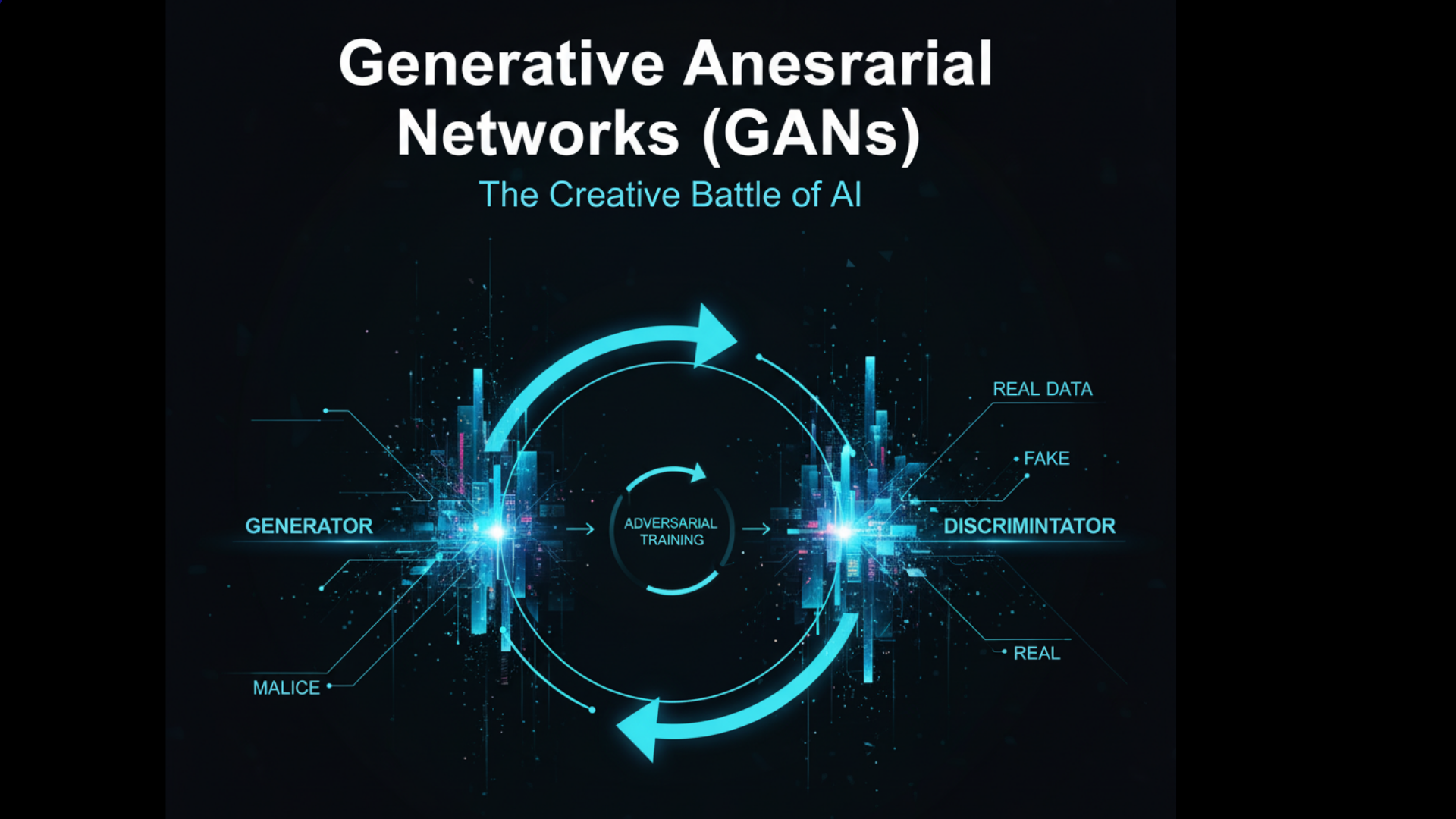

4. Generative Adversarial Networks (GANs)

GANs are a fascinating class of models used for generative tasks: they aim to create new data that is indistinguishable from real data.

- Key Features: A GAN consists of two dueling neural networks:

  - The Generator creates fake data.

  - The Discriminator tries to distinguish between real and fake data.

- The Adversarial Process: These two networks are trained together in a competitive game. The generator gets better at creating realistic data, and the discriminator gets better at spotting fakes. Ideally, this pushes both to improve until the generator produces highly realistic outputs, although in practice GAN training is notoriously unstable.

- Applications:

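  - Creating photorealistic images and art (e.g., StyleGAN’s synthetic faces; newer text-to-image systems such as DALL-E 2 and Stable Diffusion are built on diffusion models rather than GANs)

  - Deepfake generation

  - Image-to-Image Translation (e.g., turning sketches into photos)

  - Data Augmentation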
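The adversarial game comes down to two opposing loss functions. The sketch below computes them from the discriminator's output probabilities. It assumes the generator and discriminator networks exist elsewhere and are not shown. It uses the standard binary cross-entropy discriminator loss and the commonly used "non-saturating" generator loss from the original GAN paper.

```python
import numpy as np

def discriminator_loss(d_real, d_fake, eps=1e-12):
    # D wants D(x) -> 1 on real samples and D(G(z)) -> 0 on generated ones (binary cross-entropy)
    d_real, d_fake = np.clip(d_real, eps, 1 - eps), np.clip(d_fake, eps, 1 - eps)
    return float(-np.mean(np.log(d_real)) - np.mean(np.log(1 - d_fake)))

def generator_loss(d_fake, eps=1e-12):
    # "Non-saturating" generator loss: G wants D to label its samples as real, D(G(z)) -> 1
    d_fake = np.clip(d_fake, eps, 1 - eps)
    return float(-np.mean(np.log(d_fake)))

# A discriminator that easily spots fakes: low D loss, high G loss
print(discriminator_loss(np.array([0.9, 0.95]), np.array([0.1, 0.05])))
print(generator_loss(np.array([0.1, 0.05])))

# At the idealized equilibrium, D outputs 0.5 for everything
print(round(discriminator_loss(np.array([0.5]), np.array([0.5])), 4))   # 1.3863 (= 2 ln 2)
print(round(generator_loss(np.array([0.5])), 4))                        # 0.6931 (= ln 2)
```

In training, the two losses are minimized in alternation, each with respect to its own network's parameters. Each network's improvement raises the other's loss, which drives the competition.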

Evaluating Deep Learning Models

The right way to evaluate a Deep Learning model depends on its task, and many of the metrics are the same as in traditional machine learning.

- For Classification: Accuracy, Precision, Recall, F1-Score, and the Confusion Matrix.

- For Regression: Mean Absolute Error (MAE), Mean Squared Error (MSE).

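- For Object Detection: Mean Average Precision (mAP). For each object class, average precision summarizes the precision-recall curve, and mAP averages this over all classes. A detection counts as correct only if its bounding box overlaps the ground truth sufficiently, as measured by Intersection over Union (IoU).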

- For Generative Models (GANs): Inception Score (IS) and Fréchet Inception Distance (FID), which attempt to quantify the quality and diversity of generated images.
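The classification and regression metrics above are simple enough to compute by hand. The pure-Python sketch below is for a binary classifier and a small regression problem. The labels and predictions are made-up illustrative values. In practice, a library such as scikit-learn provides tested implementations.

```python
def classification_metrics(y_true, y_pred, positive=1):
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)   # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)   # false positives
    fn = sum(t == positive and p != positive for t, p in pairs)   # false negatives
    tn = sum(t != positive and p != positive for t, p in pairs)   # true negatives
    precision = tp / (tp + fp) if tp + fp else 0.0   # of predicted positives, how many are right
    recall = tp / (tp + fn) if tp + fn else 0.0      # of actual positives, how many were found
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "confusion": [[tn, fp], [fn, tp]],           # rows: actual (neg, pos); columns: predicted
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }

def regression_metrics(y_true, y_pred):
    errors = [p - t for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errors) / len(errors)  # Mean Absolute Error
    mse = sum(e * e for e in errors) / len(errors)   # Mean Squared Error (penalizes large errors more)
    return {"mae": mae, "mse": mse}

m = classification_metrics([1, 0, 1, 1, 0, 0, 1, 0], [1, 0, 0, 1, 0, 1, 1, 0])
print(m)   # confusion [[3, 1], [1, 3]]; accuracy, precision, recall and F1 all 0.75

r = regression_metrics([2.0, 4.0, 6.0, 8.0], [3.0, 4.0, 5.0, 10.0])
print(r)   # {'mae': 1.0, 'mse': 1.5}
```

Note how the single error of 2.0 contributes 4.0 to the MSE sum but only 2.0 to the MAE sum. This is why MSE is more sensitive to large mistakes.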

Challenges and Limitations of Deep Learning

Despite the phenomenal success of deep learning, this technology is not a silver bullet and comes with significant challenges.

- Data Hunger: These models typically require enormous amounts of labeled training data, which can be expensive, time-consuming, and sometimes impossible to acquire.

- Computational Cost: Training state-of-the-art models requires powerful hardware, like GPUs and TPUs, and can take days or weeks, consuming substantial energy.

- The Black Box Problem: The internal workings of deep neural networks are notoriously difficult to interpret. Understanding why a model made a specific decision is a major area of research (Explainable AI) and is critical for high-stakes applications like healthcare and criminal justice.

- Lack of Common Sense and Reasoning: While excelling at pattern recognition, these systems often lack a fundamental understanding of the world and can make absurd errors that a human never would.

- Bias and Fairness: Models can learn and amplify societal biases present in the training data, leading to unfair or discriminatory outcomes.

The Future of Deep Learning

While deep learning has already revolutionized numerous fields, the most transformative developments likely lie ahead. The field is rapidly evolving to overcome its current limitations and venture into new territories of capability. Several key frontiers represent the most active and promising areas of research that will define the next decade of artificial intelligence.

1. The Pursuit of Efficiency: Doing More with Less

The enormous computational and data requirements of current models are a major barrier to widespread deployment. Research in efficiency focuses on several innovative approaches:

- Model Compression: Techniques like pruning (removing redundant weights, neurons, or channels), quantization (reducing numerical precision), and knowledge distillation (training smaller “student” models to mimic larger “teacher” models) are producing models that are dramatically smaller and faster, often with minimal loss of accuracy.

- Neuromorphic Computing: Moving beyond traditional von Neumann architecture, this involves building specialized hardware that physically mimics the brain’s neural structure. Chips like Intel’s Loihi use spiking neural networks (SNNs) to process information in a more event-driven, asynchronous manner, potentially offering orders of magnitude better energy efficiency for specific tasks.

- Data-Efficient Learning: To overcome the data bottleneck, researchers are pioneering few-shot and zero-shot learning techniques. These allow models to recognize new categories from just a handful of examples—or even from a textual description alone—mimicking the human ability to learn quickly from limited experience.
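Two of the compression techniques above can be sketched in a few lines of NumPy. The first is magnitude pruning, which zeroes the smallest weights. The second is symmetric per-tensor int8 quantization, which stores each weight as an 8-bit integer plus one shared float scale. The random 256x256 "weight matrix" and the 50% pruning ratio are illustrative assumptions. Production toolkits use more refined schemes, such as per-channel scales and retraining after pruning.

```python
import numpy as np

rng = np.random.default_rng(0)
W = rng.normal(scale=0.1, size=(256, 256)).astype(np.float32)   # stand-in weight matrix

# Magnitude pruning: zero out the 50% of weights with the smallest absolute value
threshold = np.quantile(np.abs(W), 0.5)
W_pruned = np.where(np.abs(W) >= threshold, W, 0.0).astype(np.float32)

# Symmetric int8 quantization: w ≈ scale * q, with q an integer in [-127, 127]
scale = np.abs(W).max() / 127.0
q = np.clip(np.round(W / scale), -127, 127).astype(np.int8)
W_dequant = q.astype(np.float32) * scale          # what the model effectively computes with

sparsity = float(np.mean(W_pruned == 0))
max_err = float(np.abs(W - W_dequant).max())
print(f"sparsity after pruning: {sparsity:.2f}")                 # 0.50
print(f"bytes: float32={W.nbytes}, int8={q.nbytes}")             # 262144 vs 65536: 4x smaller
print(f"max quantization error: {max_err:.5f} (at most scale/2 = {scale / 2:.5f})")
```

Rounding to the nearest integer means each quantized weight is off by at most half a quantization step (`scale / 2`). The cost is a small, bounded error in exchange for 4x less storage.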

2. Explainable AI (XAI): Opening the Black Box

As deep learning models make decisions in critical areas like healthcare, finance, and criminal justice, their “black box” nature becomes a significant barrier to trust and adoption. XAI is a critical field focused on making these models transparent and interpretable.

- Interpretability Techniques: Methods like SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations) help quantify the contribution of each input feature to a final prediction, answering the question, “Why did the model make this specific decision?”

- Causal Reasoning: The next step beyond pattern recognition is for models to understand cause-and-effect relationships. Instead of just learning that symptoms A and B are correlated with disease C, a causal model would strive to understand the underlying biological mechanisms, leading to more robust and reliable predictions that hold up in changing environments.
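The quantity SHAP is built on can be computed exactly for a tiny model: the Shapley value of each input feature. The sketch below does this by brute force over all feature subsets. It is not the `shap` library, which uses faster estimators. It assumes one common convention, in which a feature "absent" from a subset is replaced by a baseline value. The three-feature scoring function is hypothetical.

```python
from itertools import combinations
from math import factorial

def shapley_values(model, x, baseline):
    # Exact Shapley values by enumerating every subset of the other features.
    # Cost grows exponentially with the number of features, so this only suits tiny examples.
    n = len(x)

    def value(subset):
        # Features in `subset` take their real value; all others take the baseline value
        return model([x[i] if i in subset else baseline[i] for i in range(n)])

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            for S in combinations(others, size):
                weight = factorial(size) * factorial(n - size - 1) / factorial(n)
                total += weight * (value(set(S) | {i}) - value(set(S)))   # marginal contribution
        phi.append(total)
    return phi

def risk_score(z):
    # Hypothetical model with an interaction between features 0 and 2
    return 2.0 * z[0] + 1.0 * z[1] + 3.0 * z[0] * z[2]

x, baseline = [1.0, 2.0, 1.0], [0.0, 0.0, 0.0]
phi = shapley_values(risk_score, x, baseline)
print([round(v, 6) for v in phi])                                  # [3.5, 2.0, 1.5]
print(round(sum(phi), 6), risk_score(x) - risk_score(baseline))    # 7.0 7.0
```

The attributions add up exactly to the change in prediction relative to the baseline. The interaction term's contribution of 3.0 is split evenly between features 0 and 2.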

3. AI Alignment: Ensuring Safe and Beneficial Intelligence

As AI systems become more powerful and autonomous, the field of AI alignment has emerged to tackle one of the most profound challenges: ensuring that these systems’ goals and behaviors are aligned with human values and intentions.

- Value Learning: How can we teach an AI complex, nuanced human values like fairness, compassion, and well-being? This involves developing techniques that let models learn and internalize these principles from human feedback. One prominent approach is Reinforcement Learning from Human Feedback (RLHF), in which a model is fine-tuned against a reward signal learned from human preference judgments.

- Robustness and Reliability: This involves building models that are resistant to manipulation (e.g., adversarial attacks) and can reliably admit uncertainty or say “I don’t know” when faced with novel or out-of-distribution inputs, preventing overconfident errors in critical situations.
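Saying "I don't know" is often introduced through a very simple baseline, selective prediction. The model answers only when its top softmax probability clears a threshold. The sketch below assumes hypothetical class labels, logits and an arbitrary threshold of 0.8. It covers only the abstention idea, not adversarial robustness. Raw softmax confidence is also a weak uncertainty signal, because models can be confidently wrong on out-of-distribution inputs.

```python
import numpy as np

def softmax(logits):
    z = logits - np.max(logits)          # numerical stability
    e = np.exp(z)
    return e / e.sum()

def predict_or_abstain(logits, labels, threshold=0.8):
    # Answer only when the top class probability clears the threshold;
    # otherwise abstain. The default threshold of 0.8 is an arbitrary illustrative choice.
    probs = softmax(np.asarray(logits, dtype=float))
    best = int(np.argmax(probs))
    if probs[best] < threshold:
        return "I don't know", float(probs[best])
    return labels[best], float(probs[best])

labels = ["cat", "dog", "bird"]
print(predict_or_abstain([4.0, 1.0, 0.5], labels))   # clear winner -> ('cat', ~0.93)
print(predict_or_abstain([1.2, 1.0, 0.9], labels))   # near-tie -> ("I don't know", ~0.39)
```

Raising the threshold trades coverage (how often the model answers) for reliability on the answers it does give.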

4. Multimodal Learning: Towards Holistic Understanding

The real world is not composed of isolated images, text, or sounds. The next generation of AI will be multimodal, capable of processing and connecting information from various sensory inputs simultaneously.

- Cross-Modal Reasoning: Future models will not just process text and images together; they will perform sophisticated reasoning across them. For example, a model could watch a video, read a script, and listen to the soundtrack to answer complex questions about the plot’s subtext or a character’s motivations.

- Embodied AI: This involves placing AI agents in simulated or real-world environments where they must learn by interacting and sensing their surroundings, combining vision, touch, and proprioception (sense of self-movement) to learn tasks in the way humans and animals do. This is a crucial step towards general-purpose robots.

5. Generative AI and the Synthesis of Everything

The explosion of generative models like GPT-4 and DALL-E is just the beginning. The future points towards:

- Multimodal Generation: Models that can seamlessly generate complex, coherent content across multiple modalities from a single prompt—for instance, producing a video complete with a script, visuals, and sound effects from a one-sentence description.

- AI for Science and Discovery: Generative models will be used to hypothesize new scientific theories, design novel molecules for drug discovery, and engineer new materials with bespoke properties, dramatically accelerating the pace of scientific innovation.

Conclusion: The Indispensable Engine of Modern AI

Deep learning has fundamentally transformed the landscape of artificial intelligence, providing the tools to solve problems once thought to be the exclusive domain of human cognition. From enabling real-time language translation and powering life-saving medical diagnostics to generating creative art and driving autonomous vehicles, its impact is both profound and widespread.

The journey, however, is far from over. The current challenges of data hunger, massive computational costs, and the “black box” problem are not endpoints but catalysts for the next wave of innovation. The relentless pace of research in efficiency, explainability, safety, and multimodal understanding promises to overcome these hurdles, paving the way for AI systems that are more accessible, trustworthy, and capable.

Understanding the principles, potential, and pitfalls of deep learning is no longer a specialized pursuit but an essential literacy for navigating and shaping the future of our increasingly intelligent world. It is the indispensable engine of modern AI, and its continued evolution will undoubtedly be one of the defining stories of the 21st century, reshaping every industry and aspect of human life in the process.