Master data workflows from ingestion to visualization with best practices for reliability, scalability, and governance. Learn to build efficient data pipelines that deliver trustworthy insights.

Introduction: The Strategic Importance of Data Workflows in Modern Organizations

Data workflows represent the circulatory system of modern data-driven organizations, orchestrating the complex journey of data from its raw source to actionable business insights. In today’s competitive landscape, where data volumes are growing exponentially and decision-making timelines are shrinking, well-designed data workflows have evolved from technical necessities to strategic differentiators. The global data integration market, projected to reach $19.6 billion by 2027, reflects the critical importance of efficient data workflows in enabling organizations to harness their data assets effectively.

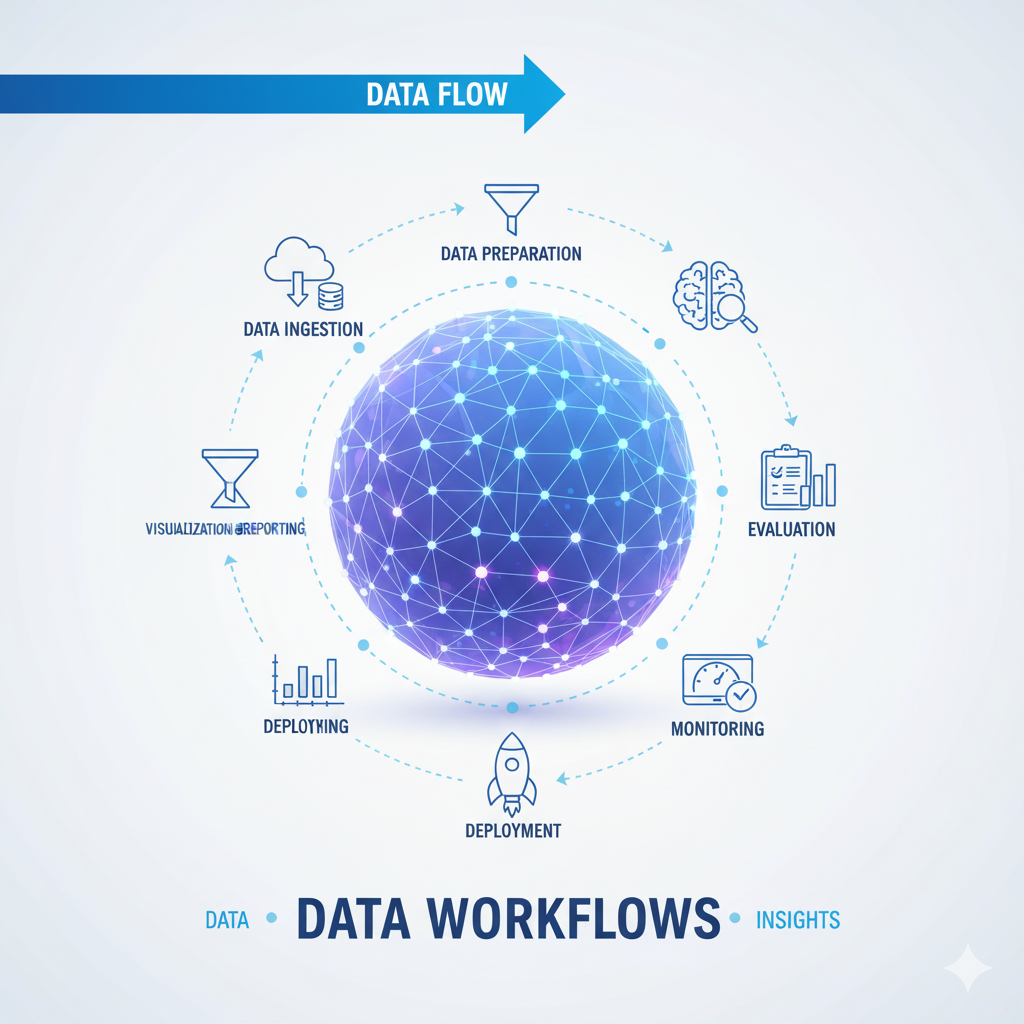

The evolution of data workflows has been dramatic, moving from simple ETL (Extract, Transform, Load) processes to sophisticated, automated pipelines that handle diverse data types, real-time streams, and complex transformations. Modern data workflows encompass not just the technical movement of data but also the governance, quality assurance, and collaboration processes that ensure data remains trustworthy and valuable throughout its lifecycle. This comprehensive approach recognizes that the quality of insights depends not just on analytical sophistication but on the foundational data workflows that deliver clean, reliable data to decision-makers.

This guide explores the end-to-end best practices for designing, implementing, and maintaining effective data workflows that span from data ingestion through transformation to final visualization. We’ll examine each stage of the data journey, providing practical strategies, architectural patterns, and implementation considerations that organizations can apply to build data workflows that are robust, scalable, and aligned with business objectives. Whether you’re building new data workflows from scratch or optimizing existing processes, these best practices will help you create data infrastructure that supports rather than constrains your analytical ambitions.

Foundational Principles of Effective Data Workflows

The Four Pillars of Modern Data Workflow Design

Reliability and Resilience form the non-negotiable foundation of production-grade data workflows. In practice, this means designing workflows that can withstand component failures, data quality issues, and unexpected schema changes without manual intervention. Reliable data workflows implement comprehensive error handling with appropriate retry logic, establish clear failure notification mechanisms, and maintain data consistency even when processing is interrupted. Resilience is achieved through idempotent operations that can be safely retried, checkpointing to track progress through long-running processes, and circuit breakers that prevent cascading failures across connected systems.

Scalability and Performance ensure that data workflows can handle growing data volumes and processing demands without degradation. Scalable design involves stateless processing components that can be horizontally scaled, efficient data partitioning strategies that enable parallel processing, and elastic resource allocation that matches computational capacity to workload demands. Performance optimization focuses not just on raw processing speed but on minimizing end-to-end latency, particularly for real-time use cases. Techniques like data compression, columnar storage formats, intelligent caching, and query optimization all contribute to performant data workflows that deliver data when and where it’s needed.

Maintainability and Evolvability address the long-term sustainability of data workflows as business requirements change and technologies evolve. Maintainable workflows feature modular design with clear separation of concerns, comprehensive documentation, and standardized coding practices that enable multiple team members to collaborate effectively. Evolvability requires designing data workflows with change in mind—implementing schema evolution techniques to handle structural changes in data, using feature flags for controlled rollout of new functionality, and maintaining backward compatibility to support gradual migration of downstream systems.

Governance and Security ensure that data workflows comply with organizational policies and regulatory requirements while protecting sensitive information. Effective governance involves data lineage tracking to understand data provenance, access controls that enforce least-privilege principles, and audit trails that document data access and transformation. Security must be integrated throughout the workflow, from encryption of data in transit and at rest to secure credential management and network security controls. In regulated industries, data workflows must also support data sovereignty requirements and privacy-enhancing technologies like differential privacy.

Architectural Patterns for Modern Data Workflows

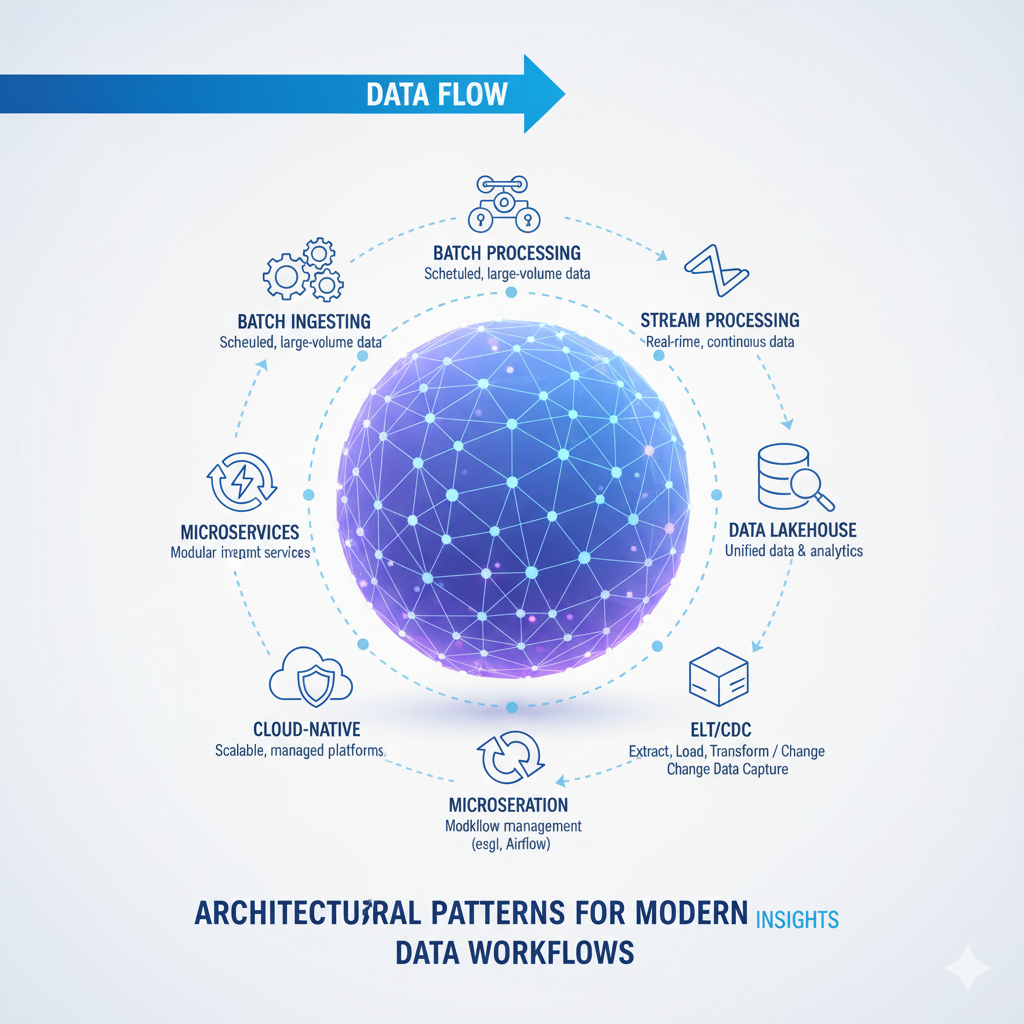

Lambda and Kappa Architectures represent two foundational approaches to designing data workflows that handle both batch and real-time processing requirements. The Lambda architecture maintains separate batch and speed layers that serve different latency requirements, with a serving layer that presents a unified view. While this approach ensures comprehensive data processing, it introduces complexity through duplicated logic. The Kappa architecture simplifies this by using a single stream processing layer for all data, treating batch processing as a special case of stream processing. In modern implementations, the trend is toward Kappa-inspired approaches using stream processing frameworks that can handle both real-time and historical data efficiently.

Medallion Architecture has emerged as a popular pattern for organizing data in data lakes, providing a clear progression from raw to refined data. The bronze layer contains raw, unprocessed data in its original format; the silver layer contains cleaned, validated, and enriched data; and the gold layer contains business-level aggregates and features ready for consumption. This layered approach enables data workflows to maintain data provenance while progressively improving data quality, and supports both exploratory analysis and production use cases from the same foundational data.

Data Mesh and Federated Architecture represent a paradigm shift in how organizations structure their data workflows and data ownership. Rather than centralized data teams building and maintaining all workflows, the data mesh approach distributes responsibility to domain-oriented teams who own their data products end-to-end. This changes the nature of data workflows from monolithic systems to interconnected networks of specialized workflows that expose data as products. Federated governance ensures consistency and interoperability while allowing domain teams autonomy in implementation.

Data Ingestion: Best Practices for Data Acquisition

Strategic Source Evaluation and Connection

Comprehensive Source Assessment begins before any technical implementation, involving careful evaluation of data sources based on multiple criteria. Data quality assessment examines the completeness, accuracy, and consistency of source data through profiling and sampling. Technical evaluation considers the accessibility of data through APIs, database connections, or file transfers, as well as the stability and performance of source systems. Business context understanding ensures that ingested data aligns with analytical requirements and that the timing and frequency of ingestion matches business needs for data freshness.

Connection Strategy Implementation varies based on source characteristics and destination requirements. Batch ingestion works well for large volumes of data where near-real-time availability isn’t critical, using tools like Azure Data Factory, AWS Glue, or custom Spark jobs. Change Data Capture (CDC) techniques capture and propagate database changes as they occur, enabling near-real-time availability without full table scans. Streaming ingestion handles continuous data flows from sources like IoT devices, clickstreams, or log files, using platforms like Kafka, Kinesis, or Event Hubs. The choice among these approaches depends on data volume, velocity, and the business tolerance for latency.

Robust Error Handling and Monitoring during ingestion prevents data loss and enables quick issue resolution. Validation at ingestion checks for basic data quality issues like missing required fields, format violations, or values outside expected ranges. Dead letter queues capture records that cannot be processed successfully, enabling analysis and reprocessing without blocking the entire workflow. Comprehensive monitoring tracks ingestion volumes, latency, error rates, and data freshness, with alerting configured for anomalies that might indicate source system issues or workflow failures.

Implementation Patterns for Common Scenarios

Database Replication Workflows require careful consideration of transactional consistency and performance impact. Logical replication using database-native capabilities like PostgreSQL logical replication or Oracle GoldenGate provides consistency with minimal performance impact on source systems. Query-based ingestion using incremental queries with watermark columns balances simplicity with performance, though it may miss deleted records or suffer from performance issues on large tables. Snapshot-based approaches capture complete table states at intervals, ensuring comprehensiveness at the cost of increased storage and processing.

API-Based Ingestion Workflows must handle rate limiting, authentication, and schema evolution gracefully. Exponential backoff and retry strategies manage temporary API failures and rate limiting without overwhelming source systems. Incremental synchronization using timestamps or change tokens minimizes data transfer by only retrieving changed records. Schema evolution handling accommodates API changes through version-aware clients and flexible data models that can handle new fields without breaking existing processes.

File-Based Ingestion Workflows common in legacy integrations require robust handling of file formats, encoding issues, and delivery mechanisms. Format validation checks for consistent structure and content before processing, with clear error messages when validation fails. Character encoding detection handles the common issue of mismatched encodings between source systems and processing environments. Reliable delivery confirmation ensures files are not processed multiple times or lost entirely, using mechanisms like file movement to processing directories or checksum verification.

Data Transformation: Building Clean, Trusted Datasets

Transformation Strategy and Architecture

Staged Transformation Approach organizes data processing into logical stages that progressively enhance data quality and value. Bronze stage transformations focus on basic cleanup—format standardization, character encoding normalization, and structural validation—while preserving the raw data for audit purposes. Silver stage transformations perform more significant enrichment—deduplication, type conversion, business rule validation, and basic aggregation—creating analysis-ready datasets. Gold stage transformations implement business-specific logic—complex calculations, dimensional modeling, feature engineering—producing datasets optimized for specific analytical use cases.

Modular Transformation Design enables reuse, testing, and maintainability of transformation logic. Single-responsibility transformations focus on specific business rules or technical operations, making them easier to understand, test, and debug. Parameterized transformations accept configuration values for environment-specific behavior, enabling the same logic to work across development, testing, and production environments. Transformation libraries encapsulate common operations like address standardization, date parsing, or unit conversion, promoting consistency across different data workflows.

Testing and Validation Framework ensures transformation logic correctness and data quality maintenance. Unit testing verifies individual transformation functions with both typical and edge case inputs. Integration testing validates that transformations work correctly with actual data sources and sinks. Data quality testing checks that output data meets defined quality thresholds for completeness, accuracy, and consistency. Regression testing ensures that changes to transformation logic don’t break existing functionality or data quality.

Common Transformation Patterns and Techniques

Data Cleaning and Standardization addresses the inevitable inconsistencies in source data. Missing value handling uses strategies appropriate to the data context—removal when missingness is random, imputation when values can be reasonably estimated, or flagging when missingness itself is informative. Standardization routines normalize formats for common data types like dates, phone numbers, and addresses, enabling consistent analysis and matching. Outlier detection and handling identifies statistically unusual values that may represent errors, with strategies ranging from capping to removal based on business context.

Enrichment and Feature Engineering enhances raw data with additional context and derived attributes. Reference data joining adds descriptive information from master data sources, like product categories or customer segments. Geographic enrichment adds location context through geocoding or spatial joins with boundary datasets. Temporal feature creation generates time-based attributes like day-of-week, seasonality indicators, or time-since-event calculations. Statistical feature engineering creates aggregated features like rolling averages, rates of change, or statistical moments that capture important patterns in the data.

Aggregation and Summarization creates datasets optimized for specific analytical use cases. Pre-aggregation computes common summary statistics during transformation, trading storage for query performance. Multi-level aggregation creates summaries at different granularities (daily, weekly, monthly) to support different analytical needs. Windowed aggregations compute running totals, moving averages, or other time-aware summaries that require context from multiple records. Approximate aggregations use probabilistic data structures like data workflows HyperLogLog for distinct counting or T-Digest for percentile estimation when exact values aren’t required but performance is critical.

Data Quality Management: Ensuring Trustworthy Data

Comprehensive Quality Framework

Multi-dimensional Quality Assessment evaluates data across several complementary dimensions. Completeness measures the presence of expected values, distinguishing between structural missingness (empty fields that should contain values) and content missingness (present but meaningless values like “NULL” or “N/A”). Accuracy assesses how well data reflects real-world entities or events, often requiring cross-validation with trusted external sources or business knowledge. Consistency checks for logical contradictions within datasets or across related datasets, like orders without customers or inventory counts that don’t balance.

Timeliness and Freshness Monitoring ensures data is current enough for its intended use. Data currency measures the age of data relative to the real-world entities it represents, important for rapidly changing domains like inventory or pricing. Refresh frequency tracks how often data is updated, with alerts when expected updates don’t occur. Processing latency monitors the time from data creation to availability for analysis, particularly critical for real-time use cases. Seasonal pattern awareness accounts for expected variations in data volume or update patterns, preventing false alerts during known quiet periods.

Business Rule Validation implements domain-specific quality checks that go beyond technical metrics. Attribute dependency rules validate relationships between fields, like ensuring discount amounts don’t exceed product prices. State transition rules verify that entity state changes follow valid sequences, like order status progressing from “placed” to “shipped” rather than backwards. Cardinality constraints check that relationship cardinalities match expectations, like each customer having exactly one primary address. Derived measure validation verifies that calculated fields match independently computed values when possible.

Automated Quality Monitoring and Improvement

Continuous Quality Monitoring integrates quality checks throughout data workflows rather than treating quality as a separate process. Inline validation during ingestion and transformation catches quality issues early, preventing corruption of downstream datasets. Statistical process control techniques monitor quality metrics over time, detecting degradation trends before they become critical. Automated quality scoring combines multiple quality dimensions into overall scores that help prioritize improvement efforts and communicate data trustworthiness to consumers.

Anomaly Detection and Alerting identifies unusual patterns that may indicate quality issues. Statistical anomaly detection uses methods like Z-score analysis, moving average deviation, or seasonal decomposition to identify unusual values or patterns. Machine learning approaches train models on historical data to detect anomalies based on multiple correlated metrics. Business rule violation detection flags records that violate defined business constraints. Alert fatigue prevention uses intelligent alerting that considers anomaly severity, persistence, and business impact to avoid overwhelming data stewards with minor issues.

Root Cause Analysis and Improvement transforms quality monitoring from detection to prevention. Data lineage analysis traces quality issues back to their sources, identifying whether issues originate in source systems, transformation logic, or elsewhere. Pattern analysis groups similar quality issues to identify systemic problems rather than one-off errors. Feedback loops communicate quality issues to data producers, enabling source system improvements that prevent recurrence. Preventive controls implement checks earlier in data workflows based on learned patterns of quality issues.

Orchestration and Automation: Managing Complex Workflows

Workflow Orchestration Platforms

Orchestrator Selection Criteria consider multiple factors beyond basic scheduling capabilities. Execution environment support evaluates whether the orchestrator can run workflows on various platforms—Kubernetes, serverless functions, virtual machines—based on organizational standards and workload characteristics. Integration ecosystem examines pre-built connectors for common data sources, transformation tools, and visualization platforms that accelerate development. Observability capabilities assess the built-in monitoring, alerting, and debugging features that simplify operational management. Cost structure considers both licensing costs and the computational resources required for orchestration itself.

DAG-Based Workflow Design structures data workflows as directed acyclic graphs that clearly express dependencies and enable parallel execution. Logical task grouping organizes related operations into cohesive units while maintaining clear interfaces between groups. Dependency management explicitly defines relationships between tasks, enabling the orchestrator to optimize execution order and handle failures gracefully. Parameterization makes workflows reusable across different environments, time periods, or business units by externalizing configuration values.

Error Handling and Recovery strategies ensure data workflows can withstand expected failures without manual intervention. Retry policies implement exponential backoff for transient failures like network timeouts or temporary resource constraints. Circuit breakers prevent cascading failures by temporarily disabling components that experience repeated failures. Compensation logic undoes partially completed work when workflows fail, maintaining data consistency. Graceful degradation allows non-critical path failures without blocking entire workflows when possible.

Implementation Best Practices

Infrastructure as Code applies software engineering practices to workflow infrastructure management. Declarative configuration defines workflow structure and dependencies in version-controlled files rather than manual setup. Environment consistency ensures that development, testing, and production environments are identical, preventing environment-specific failures. Automated deployment uses CI/CD pipelines to test and promote workflow changes safely. Infrastructure testing validates that workflow definitions are syntactically correct and adhere to organizational standards before deployment.

Monitoring and Observability provides visibility into workflow execution and health. Execution history maintains detailed records of workflow runs, including timing, resource usage, and data volumes processed. Real-time status shows currently running workflows with progress indicators and potential bottlenecks. Data lineage tracking records how data flows through workflows, enabling impact analysis and debugging. Business metrics integration connects technical workflow metrics to business outcomes, like tracking how data freshness impacts decision quality.

Cost Optimization and Resource Management controls the computational expense of data workflows. Right-sizing matches computational resources to workload requirements, avoiding over-provisioning for simple tasks or under-provisioning for complex ones. Scheduling optimization runs resource-intensive workflows during off-peak hours when computational costs are lower. Data pruning automatically archives or deletes intermediate data that’s no longer needed, reducing storage costs. Performance monitoring identifies inefficient transformations or resource contention that drives up costs unnecessarily.

Data Storage and Management: Organizing for Analysis

Storage Strategy and Architecture

Multi-temperature Data Management organizes storage based on data access patterns and retention requirements. Hot storage keeps frequently accessed data in high-performance storage like SSDs or in-memory databases, optimizing for query speed rather than cost. Warm storage houses regularly accessed data in balanced storage that offers good performance at moderate cost, like premium cloud storage tiers. Cold storage archives rarely accessed data in low-cost storage like object storage archives, accepting slower access in exchange for significant cost savings. Automated tiering policies move data between storage tiers based on access patterns without manual intervention.

Data Modeling for Analytical Workloads structures data to support efficient querying and analysis. Dimensional modeling uses star or snowflake schemas to organize data into fact tables (containing measurements) and dimension tables (containing descriptive attributes), optimizing for business intelligence tools. Data vault modeling provides a flexible, auditable approach for integrating data from multiple sources, particularly valuable in data warehouse environments. One Big Table (OBT) approaches denormalize data into wide tables that simplify querying at the cost of storage efficiency and update complexity.

Partitioning and Clustering organizes data physically to improve query performance. Time-based partitioning separates data by natural time boundaries like day, month, or year, enabling queries to skip irrelevant time periods. Key-based partitioning distributes data based on entity identifiers or other frequently filtered attributes. Clustering co-locates related records physically on storage, reducing I/O for range queries or joins. Multi-level partitioning combines different strategies, like partitioning by date then clustering by category, to optimize for multiple access patterns.

Management and Optimization

Data Lifecycle Management automates data retention, archiving, and deletion based on business policies. Retention policies define how long different types of data should be kept active based on business needs, regulatory requirements, and storage costs. Archival processes move data from production systems to long-term storage while maintaining accessibility for compliance or historical analysis. Secure deletion permanently removes data that’s no longer needed, reducing storage costs and compliance scope. Legal hold handling suspends normal lifecycle rules for data involved in litigation or investigations.

Performance Optimization ensures that storage systems meet query performance requirements. Indexing strategies create appropriate indexes for common query patterns while minimizing the performance impact on data loading. Materialized views precompute expensive aggregations or joins, trading storage for query performance. Query optimization analyzes execution plans to identify and address performance bottlenecks. Resource management allocates appropriate computational resources to different workloads based on their priority and performance requirements.

Data Governance and Security protects data while enabling appropriate access. Access controls implement role-based permissions that grant users the minimum access needed for their responsibilities. Data masking obscures sensitive information in non-production environments while maintaining data utility for development and testing. Encryption protects data at rest and in transit against unauthorized access. Audit logging records data access and modifications for security monitoring and compliance reporting.

Visualization and Consumption: Delivering Business Value

Dashboard and Report Design

User-Centered Design Approach focuses on delivering insights that are relevant, accessible, and actionable for specific audiences. Persona development identifies key user types and their specific information needs, decision contexts, and technical capabilities. Task analysis understands what decisions users need to make and what information supports those decisions. Iterative design involves users throughout the development process, incorporating feedback to refine visualizations based on actual usage rather than assumptions.

Effective Visualization Selection matches visual encodings to data characteristics and analytical tasks. Comparison visualizations like bar charts and line graphs help users compare values across categories or time. Composition visualizations like stacked bars and pie charts show part-to-whole relationships. Distribution visualizations like histograms and box plots reveal the shape and spread of data. Relationship visualizations like scatter plots and heat maps show correlations and patterns between variables. Geographic visualizations like choropleth maps and point maps reveal spatial patterns.

Dashboard Layout and Organization structures information to guide users through analytical narratives. Progressive disclosure presents high-level summary information first, with drill-down capabilities for users who need more detail. Visual hierarchy uses size, position, and emphasis to direct attention to the most important information. Consistent design applies standardized color schemes, typography, and layout patterns across related visualizations to reduce cognitive load. Context provision includes appropriate titles, labels, annotations, and time context to help users interpret visualizations correctly.

Delivery and Interaction Patterns

Self-Service Analytics empowers business users to explore data and answer their own questions. Intuitive interfaces provide point-and-click access to data without requiring technical skills. Guided analytics offers pre-built analytical paths that help users navigate complex data relationships. Natural language querying allows users to ask questions in plain language rather than learning query syntax. Data discovery tools help users find relevant datasets and understand their structure and meaning.

Embedded Analytics integrates insights directly into business applications and workflows. Contextual presentation shows relevant metrics and visualizations based on the user’s current task and context. Actionable insights connect visualizations to business processes, enabling users to take action directly from analytical interfaces. Personalization tailors content and visualizations to individual users’ roles, preferences, and historical interactions. Proactive alerting notifies users when important changes or patterns are detected, rather than requiring manual monitoring.

Collaboration and Sharing enables teams to work together around data and insights. Commenting and annotation allows users to discuss specific data points and share interpretations. Storytelling features help users combine visualizations with narrative text to communicate insights effectively. Export and sharing enables users to distribute insights through various channels—email, presentations, documents—while maintaining data security. Version control tracks changes to reports and dashboards, enabling collaboration without confusion.

Operational Excellence: Monitoring and Maintenance

Comprehensive Monitoring Framework

Multi-level Monitoring tracks data workflows at different levels of granularity. Infrastructure monitoring watches the underlying computational resources—CPU, memory, storage, network—that support data processing. Platform monitoring tracks the health and performance of data platforms like databases, processing engines, and orchestration tools. Workflow monitoring observes the execution of specific data workflows, including timing, data volumes, and success rates. Business monitoring connects technical metrics to business outcomes, like tracking how data quality impacts decision accuracy.

Proactive Alerting and Notification identifies issues before they impact business operations. Threshold-based alerting triggers notifications when metrics exceed defined limits, like processing time increasing beyond service level agreements. Anomaly detection uses statistical methods or machine learning to identify unusual patterns that might indicate emerging issues. Dependency-aware alerting considers the impact of failures on downstream systems and users, prioritizing alerts based on business impact. Alert aggregation and correlation groups related alerts to avoid notification fatigue and help identify root causes.

Performance and Capacity Planning ensures data workflows can handle current and future workloads. Trend analysis identifies patterns in data volumes, processing times, and resource usage to forecast future requirements. Load testing simulates expected future workloads to identify potential bottlenecks before they impact production. Capacity planning uses performance data and growth forecasts to ensure adequate resources are available when needed. Cost forecasting projects future costs based on usage patterns and planned initiatives, enabling budget planning.

Continuous Improvement Processes

Incident Management and Post-Mortems transform failures into learning opportunities. Structured incident response follows defined procedures for detecting, diagnosing, and resolving issues to minimize impact. Blameless post-mortems focus on understanding systemic factors that contributed to incidents rather than assigning individual blame. Action item tracking ensures that lessons learned from incidents result in concrete improvements to prevent recurrence. Knowledge base maintenance documents incidents and resolutions to accelerate future problem-solving.

Regular Health Assessments proactively identify potential issues before they cause failures. Architecture reviews evaluate whether data workflows still align with best practices and organizational standards. Performance reviews analyze whether workflows are meeting performance targets and identify optimization opportunities. Security assessments verify that data protection measures remain effective as technologies and threats evolve. Cost reviews identify opportunities to optimize resource usage and reduce expenses without compromising capability.

Evolution and Modernization keeps data workflows aligned with changing business needs and technology landscapes. Technology radar tracks emerging technologies and evaluates their potential relevance to the organization’s data capabilities. Proof of concept development tests new approaches with limited risk before committing to large-scale implementation. Gradual migration strategies enable technology updates without disrupting existing business processes. Skills development ensures teams have the expertise needed to implement and operate modern data technologies effectively.

Conclusion: Building Sustainable Data Workflows

Effective data workflows represent a strategic capability that enables organizations to transform raw data into business value efficiently and reliably. The journey from data ingestion to visualization involves numerous interconnected processes that must work together seamlessly to deliver trustworthy insights when and where they’re needed. By applying the best practices outlined in this guide—focusing on reliability, scalability, maintainability, and governance—organizations can build data workflows that not only meet current needs but also adapt to future challenges and opportunities.

The most successful organizations recognize that data workflows are not just technical implementations but business capabilities that require ongoing attention and investment. They establish centers of excellence that develop and disseminate best practices, implement comprehensive monitoring that provides visibility into workflow health and performance, and foster a culture of continuous improvement that regularly assesses and enhances data capabilities. Most importantly, they maintain strong alignment between data workflows and business objectives, ensuring that data infrastructure supports rather than drives business strategy.

As data volumes continue to grow and analytical demands become more sophisticated, the importance of well-designed data workflows will only increase. Organizations that master the art and science of data workflow design will be better positioned to leverage their data assets for competitive advantage, making faster, more informed decisions based on complete, accurate, and timely information. By treating data workflows as critical business infrastructure worthy of careful design and ongoing optimization, organizations can build the foundation for sustained data-driven success.