Discover what Computer Vision is, how it works, and its revolutionary applications in retail, autonomous vehicles, and more.

Introduction: A World Seen Through AI Eyes

Imagine a self-driving car navigating a complex urban roundabout, identifying pedestrians, reading traffic signs, and anticipating the movements of other vehicles. Picture a smartphone camera that seamlessly blurs the background of your portrait, recognizing the difference between you and the scenery behind you. Envision a doctor analyzing medical scans with the assistance of an AI that can spot early-stage tumors invisible to the human eye.

What is the common thread weaving through these transformative technologies? The answer is Computer Vision.

In this ultimate guide, we will embark on a deep dive into the fascinating world of computer vision. We will demystify what it is, explore the core technologies that make it tick, and investigate its groundbreaking applications across industries like retail and autonomous vehicles. We will also address the human side of technology by delving into computer vision syndrome, a modern health concern, and look at the promising career path of a computer vision engineer. Whether you’re a curious beginner, a seasoned tech professional, or a business leader seeking innovative computer vision solutions, this article is your comprehensive resource.

Chapter 1: What is Computer Vision? The Fundamentals

1.1 Defining Computer Vision

At its core, computer vision is a field of artificial intelligence (AI) that trains computers to interpret and understand the visual world. By digitally processing and analyzing images and videos, machines can accurately identify and classify objects, and then react to what they “see.”

The ultimate goal of computer vision is to replicate, and in some aspects surpass, the capabilities of the human visual system. It’s about enabling machines to perform tasks that typically require human visual cognition. This goes far beyond simple “seeing”; it involves comprehending a scene, its context, and the relationships between objects within it.

1.2 The Difference Between Computer Vision, Image Processing, and Machine Vision

These terms are often used interchangeably, but they have distinct meanings:

- Image Processing: This is a precursor to computer vision. It involves manipulating an image to improve it or extract information without necessarily understanding its content. Examples include adjusting contrast, sharpening, or reducing noise. It’s a set of techniques, not intelligence.

- Machine Vision: This typically refers to the application of computer vision in an industrial or production setting. It’s often used for automated inspection, robot guidance, and process control on a factory floor. It’s generally less about deep understanding and more about reliable, high-speed measurement and detection.

- Computer Vision: This encompasses a higher level of understanding. It uses image processing techniques but adds layers of interpretation and contextual analysis, often powered by complex machine learning models.
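To make the distinction concrete, here is a minimal sketch of a pure image processing step: a 3x3 mean filter for noise reduction. The function name `mean_filter` and the tiny sample image are illustrative assumptions, not part of any particular library. Notice that the code changes pixel values without any notion of what the image depicts.

```python
from typing import List

Image = List[List[float]]  # grayscale image as rows of pixel intensities


def mean_filter(img: Image) -> Image:
    """Replace each interior pixel with the average of its 3x3 neighborhood.

    Border pixels are left unchanged to keep the sketch short.
    """
    height, width = len(img), len(img[0])
    out = [row[:] for row in img]  # copy so the input is not modified
    for r in range(1, height - 1):
        for c in range(1, width - 1):
            window = [img[r + dr][c + dc] for dr in (-1, 0, 1) for dc in (-1, 0, 1)]
            out[r][c] = sum(window) / 9
    return out


# A flat image with one noisy pixel in the middle.
noisy = [[10, 10, 10],
         [10, 100, 10],
         [10, 10, 10]]
smoothed = mean_filter(noisy)  # the center becomes (8 * 10 + 100) / 9 = 20.0
```

The filter suppresses the outlier, but it has no idea whether that pixel was noise or a meaningful detail. Deciding that is where computer vision begins.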

1.3 A Brief History: From Pixels to Intelligence

The journey of computer vision began in the 1960s. Early attempts were rudimentary, focusing on simple pattern recognition. The 1990s saw the rise of statistical methods, but the field’s true renaissance began in the early 2010s with the advent of deep learning and convolutional neural networks (CNNs). The availability of massive datasets (like ImageNet) and powerful GPUs provided the fuel for this revolution, allowing models to achieve human-level, and even superhuman, accuracy in specific visual tasks.

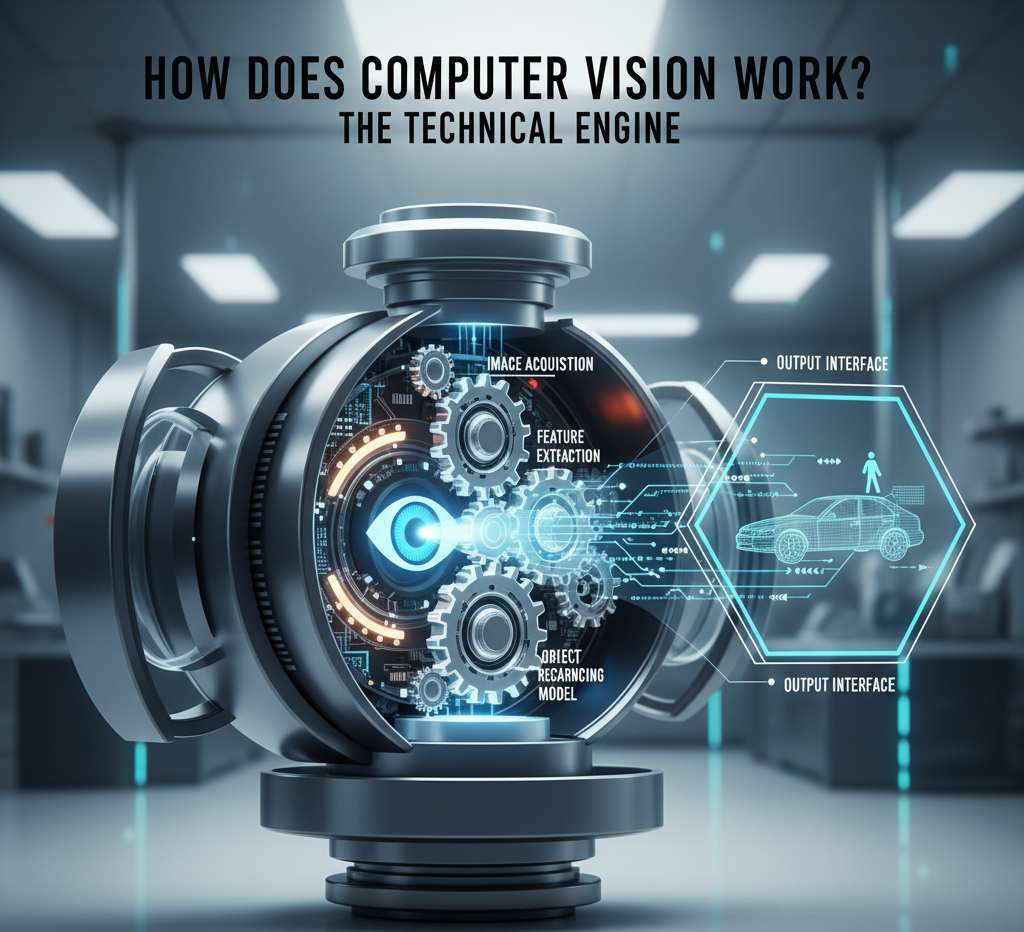

Chapter 2: How Does Computer Vision Work? The Technical Engine

2.1 The Pipeline of a Computer Vision System

A typical computer vision system follows a multi-stage pipeline:

- Image Acquisition: Capturing the visual data via cameras, sensors, or video feeds.

- Pre-processing: Using image processing techniques to prepare the data. This includes noise reduction, normalization, and scaling.

- Feature Extraction: This is the heart of traditional computer vision. The algorithm identifies distinctive patterns or features in the image, such as edges, corners, and textures.

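- Model Inference & Decision Making: A trained machine learning model analyzes the features to classify objects, detect their presence, or segment the image. Deep learning models such as CNNs typically learn their own features directly from the pixels, merging this stage with the previous one.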

- Post-processing and Action: Refining the results and triggering an action—like a robot arm moving to pick an object, or a car applying the brakes.
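The stages above can be sketched as a chain of small functions. This is a toy illustration under stated assumptions: the "camera" returns a hard-coded frame, the "model" is a simple threshold rather than a trained network, and every function name here is hypothetical.

```python
from typing import List

Image = List[List[float]]  # grayscale image as rows of pixel intensities


def acquire() -> Image:
    """Stand-in for a camera: a dark 4x6 frame with a bright block on the right."""
    return [[10, 10, 10, 200, 200, 200] for _ in range(4)]


def preprocess(img: Image) -> Image:
    """Normalize intensities to the range [0, 1]."""
    lo = min(min(row) for row in img)
    hi = max(max(row) for row in img)
    span = (hi - lo) or 1.0  # avoid dividing by zero on a flat image
    return [[(p - lo) / span for p in row] for row in img]


def extract_features(img: Image) -> List[float]:
    """Hand-crafted feature: the largest horizontal intensity jump in each row."""
    return [max(abs(row[i + 1] - row[i]) for i in range(len(row) - 1)) for row in img]


def infer(features: List[float], threshold: float = 0.5) -> bool:
    """Toy 'model': report an obstacle edge if the average jump exceeds a threshold."""
    return sum(features) / len(features) > threshold


def act(edge_found: bool) -> str:
    """Post-processing: map the decision to an action."""
    return "brake" if edge_found else "continue"


def run_pipeline() -> str:
    return act(infer(extract_features(preprocess(acquire()))))
```

In a real system each function would be far more elaborate, but the shape of the data flow, from raw pixels to an action, stays the same.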

2.2 The Role of Machine Learning and Deep Learning

While traditional algorithms exist, modern computer vision is dominated by machine learning, particularly deep learning.

- Convolutional Neural Networks (CNNs): These are the workhorses of modern computer vision AI. CNNs are inspired by the human visual cortex. They use layers of filters that scan an image to detect hierarchical patterns—from simple edges in early layers to complex objects like faces or cars in deeper layers. This allows the model to learn spatial hierarchies of features automatically and with immense accuracy.
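The "filters that scan an image" can be illustrated with a single hand-written convolution. This is only a sketch: a real CNN stacks many such filters and learns their weights during training, whereas the vertical-edge kernel below is fixed by hand. The names `convolve2d` and `VERTICAL_EDGE` are illustrative.

```python
from typing import List

Matrix = List[List[float]]


def convolve2d(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide `kernel` over `image` (no padding, stride 1) and sum the products.

    Like most deep learning libraries, this does not flip the kernel,
    so strictly speaking it computes a cross-correlation.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(kh)
                for j in range(kw)
            )
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# A vertical-edge filter: responds where intensity rises from left to right.
VERTICAL_EDGE = [[-1, 0, 1],
                 [-1, 0, 1],
                 [-1, 0, 1]]

# 4x5 image: dark on the left, bright on the right.
image = [[0, 0, 0, 9, 9] for _ in range(4)]
response = convolve2d(image, VERTICAL_EDGE)
# response == [[0, 27, 27], [0, 27, 27]]
```

The output is zero over the flat dark region and large wherever the window straddles the dark-to-bright boundary. Early CNN layers tend to learn filters of this kind, and deeper layers combine their responses into more complex patterns.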

2.3 Key Techniques and Tasks

Computer vision solutions are built by combining several core techniques:

- Image Classification: Assigning a label to an entire image (e.g., “cat,” “dog”).

- Object Detection: Identifying and locating multiple objects within an image, often by drawing bounding boxes around them.

- Image Segmentation: Partitioning an image into segments, pixel by pixel, to identify the boundaries of objects. This is crucial for detailed scene understanding.

- Facial Recognition: A specific form of object detection and classification focused on human faces.

- Edge Detection: Identifying the boundaries of objects within an image, a fundamental low-level task.

- Pattern Recognition: Identifying recurring patterns or regularities in data.
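For object detection, a common way to score how well a predicted bounding box matches a labeled one is intersection over union (IoU). The article does not prescribe a metric, so treat the following as one widely used option, sketched with an assumed `(x_min, y_min, x_max, y_max)` box format.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes, in the range [0, 1]."""
    # Corners of the overlapping rectangle, if there is one.
    ix_min, iy_min = max(a[0], b[0]), max(a[1], b[1])
    ix_max, iy_max = min(a[2], b[2]), min(a[3], b[3])
    # Clamp at zero so disjoint boxes yield no intersection area.
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


predicted = (0, 0, 2, 2)
labeled = (1, 1, 3, 3)
score = iou(predicted, labeled)  # overlap area 1, union area 7
```

An IoU of 1.0 means the boxes coincide exactly, and 0.0 means they do not overlap at all. Detection benchmarks usually count a prediction as correct only above some chosen IoU threshold.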

Chapter 3: Computer Vision Applications: Transforming Industries

This is where the theory meets reality. Computer vision applications are vast and growing rapidly.

3.1 Computer Vision in Healthcare

- Medical Imaging: Analyzing X-rays, MRIs, and CT scans to detect diseases like cancer, fractures, and neurological disorders with speed and accuracy that can augment radiologists.

- Surgical Assistance: Providing real-time guidance and augmented reality overlays during surgeries.

- Patient Monitoring: Analyzing video feeds to monitor patient movement and alert staff to falls or emergencies.

3.2 Computer Vision in Autonomous Vehicles

In autonomous vehicles, the critical technology of machine perception acts as the car’s artificial eyes and brain, enabling it to understand and navigate its environment in real-time. This is achieved through a sophisticated fusion of data from multiple sensors, including cameras, LiDAR, and radar. Advanced algorithms process this visual and spatial data to perform a series of complex tasks: identifying and classifying objects such as pedestrians, other vehicles, and traffic signs; precisely tracking their movement and speed; and interpreting the scene to understand road geometry, lane markings, and traffic signals.

This continuous, millisecond-by-millisecond analysis is fundamental to the vehicle’s decision-making process, allowing it to perform safe maneuvers, adhere to traffic rules, and ultimately drive without human intervention. The reliability and accuracy of this perceptual system are paramount, as they form the foundational layer for all subsequent planning and control actions, making it one of the most challenging and vital components of self-driving technology.

Perhaps one of the most publicized applications, computer vision in autonomous vehicles is a critical technology stack. It enables self-driving cars to:

- Perceive their environment in 360 degrees using cameras, LiDAR, and radar.

- Perform object detection for pedestrians, other vehicles, traffic signs, and signals.

- Navigate complex roads, make lane changes, and avoid collisions.

- Read and interpret road markings and signage.

The synergy of computer vision AI with other sensors is what makes full autonomy a tangible goal.

3.3 Computer Vision in Retail

The retail sector is being revolutionized by computer vision. Applications include:

- Cashier-less Stores: Amazon Go is the prime example, where customers simply walk out, and their items are automatically detected and charged.

- Inventory Management: Automatically tracking shelf stock, identifying out-of-stock items, and monitoring for misplaced products.

- Customer Analytics: Analyzing in-store traffic patterns, understanding customer demographics (anonymously), and optimizing store layouts.

- Virtual Try-Ons: Allowing customers to “try” clothes, glasses, or makeup using augmented reality.

3.4 Other Noteworthy Applications

- Agriculture: Monitoring crop health, predicting yields, and guiding automated harvesting.

- Manufacturing: Automated visual inspection for quality control on production lines.

- Security and Surveillance: Automated threat detection, crowd monitoring, and access control via facial recognition.

- Augmented Reality (AR): Overlaying digital information onto the real world, as seen in games like Pokémon GO and navigation apps.

The Data Foundation: Fueling the Computer Vision Revolution

At the heart of every successful computer vision AI system lies a massive, well-annotated dataset. Before a model can learn to identify a cat, a tumor, or a pedestrian, it must be trained on thousands, sometimes millions, of labeled images. This process of data annotation, in which humans meticulously draw bounding boxes around objects, segment pixels, or assign tags, is the unglamorous but critical backbone of the entire field. The quality and diversity of this training data directly determine the accuracy and fairness of the resulting solutions. Without this foundational work, even the most sophisticated algorithms would be blind, underscoring that data is not just fuel; it is the very landscape the AI learns to see.
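As a rough illustration of what annotated data can look like, the sketch below models one bounding-box label per record and runs two basic quality checks: how many examples each class has, and whether any box is malformed. The `Annotation` structure, file names, and coordinates are hypothetical; real datasets use their own formats.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Annotation:
    image_id: str
    label: str
    box: Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels


def label_counts(annotations: List[Annotation]) -> Counter:
    """Count examples per class to spot under-represented labels."""
    return Counter(a.label for a in annotations)


def invalid_boxes(annotations: List[Annotation]) -> List[Annotation]:
    """Return annotations whose box has zero or negative width or height."""
    return [
        a for a in annotations
        if a.box[0] >= a.box[2] or a.box[1] >= a.box[3]
    ]


annotations = [
    Annotation("img_001.jpg", "cat", (12, 30, 140, 200)),
    Annotation("img_002.jpg", "cat", (5, 8, 90, 120)),
    # x_max is smaller than x_min here: a labeling mistake.
    Annotation("img_003.jpg", "pedestrian", (40, 10, 35, 180)),
]

counts = label_counts(annotations)
errors = invalid_boxes(annotations)
```

Checks like these are cheap to run and catch problems, such as class imbalance or broken labels, before they quietly degrade a trained model.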

From Pixels to Understanding: The Semantic Gap

A core challenge that has driven computer vision research for decades is the “semantic gap.” This refers to the significant disparity between the low-level pixel data that a computer receives (essentially just a grid of red, green, and blue values) and the high-level, meaningful understanding that humans effortlessly derive from an image (e.g., “a family enjoying a picnic in the park”). Bridging this gap requires more than just pattern recognition; it involves contextual awareness, common-sense reasoning, and knowledge about how the world works.

Chapter 4: The Human Factor: Computer Vision Syndrome

As we interact more with digital screens, a significant health concern has emerged: Computer Vision Syndrome, also referred to as digital eye strain.

4.1 What is Computer Vision Syndrome?

Computer vision syndrome is a group of eye and vision-related problems that result from prolonged use of digital screens, including computers, tablets, e-readers, and cell phones. The high visual demands of sustained screen viewing—such as intense focus, glare, and often poor ergonomics—make many individuals susceptible to this common condition.

Symptoms typically include eyestrain, headaches, blurred vision, dry eyes, and pain in the neck and shoulders. This occurs because the visual system is consistently overworked when viewing pixel-based characters that lack sharp edges, on screens with reduced contrast and glare, leading to significant discomfort for a large portion of the modern workforce and general population.

4.2 Symptoms and Causes

- Common Symptoms: Eyestrain, headaches, blurred vision, dry eyes, and neck and shoulder pain.

- Primary Causes:

  - Glare and reflections on the screen.

  - Poor lighting.

  - Improper viewing distances and posture.

  - Uncorrected vision problems.

  - The constant refocusing and eye movement required when reading a screen.

4.3 Prevention and Management

Managing computer vision syndrome involves both environmental adjustments and personal habits:

- The 20-20-20 Rule: Every 20 minutes, look at something 20 feet away for at least 20 seconds.

- Optimize Your Workspace: Ensure proper lighting, reduce glare, and position your screen so your gaze is slightly downward.

- Blink More Often: Consciously blinking helps remoisten your eyes.

- Get Regular Eye Exams: Ensure your prescription is up-to-date for screen work.

Chapter 5: The Architects of Sight: The Computer Vision Engineer

Growing demand for computer vision solutions has created a sought-after role: the computer vision engineer.

5.1 Who is a Computer Vision Engineer?

A computer vision engineer is a specialized AI professional who focuses on designing, developing, and deploying systems that enable machines to interpret and understand visual data. This role involves creating algorithms and models that can process, analyze, and extract meaningful information from pixels, much like human perception but at a computational scale.

These engineers are crucial in bridging the gap between theoretical artificial intelligence research and practical, real-world applications that rely on visual understanding. Their work is foundational to a wide range of technologies, from autonomous vehicles and medical image analysis to industrial quality control and augmented reality systems.

5.2 Key Skills and Responsibilities

- Technical Skills:

  - Programming: Proficiency in Python and C++.

  - Libraries & Frameworks: Deep expertise in OpenCV, TensorFlow, PyTorch, and Keras.

  - Mathematics: Strong foundation in linear algebra, calculus, probability, and statistics.

  - Deep Learning: In-depth knowledge of CNNs, R-CNN, YOLO, and other architectures.

- Responsibilities:

  - Data collection and annotation.

  - Model training, tuning, and evaluation.

  - Developing and optimizing inference pipelines.

  - Deploying models to production environments (cloud, edge devices).

5.3 Career Path and Outlook

The career outlook for professionals specializing in machine perception and visual AI is exceptionally bright. This domain, which focuses on enabling machines to interpret and understand visual data, is experiencing unprecedented demand. With applications proliferating across every sector—from tech giants and automotive corporations to healthcare startups and agricultural firms—skilled practitioners in this field command high salaries and significant professional interest.

These experts have the unique opportunity to tackle some of the most cutting-edge and impactful challenges in technology today, working on everything from autonomous systems and medical diagnostics to advanced robotics and industrial automation. As industries continue to recognize the transformative potential of integrating visual understanding into their products and processes, the need for talent in this area is poised for continued growth, making it one of the most dynamic and promising career paths in the current technological landscape.

Chapter 6: The Future of Computer Vision and Ethical Considerations

6.1 Emerging Trends

The future of computer vision is even more integrated and intelligent:

- 3D Computer Vision: Moving beyond 2D images to understand the world in three dimensions.

- Generative AI in Vision: Models like DALL-E and Stable Diffusion are creating new images, opening possibilities in design and art.

- Edge Computing: Running computer vision models directly on devices (like smartphones and cameras) for lower latency and greater privacy.

- Multimodal AI: Combining visual data with text, audio, and other sensor data for a richer understanding of context.

6.2 Ethical Challenges and Responsible AI

With great power comes great responsibility. The proliferation of computer vision AI raises critical ethical questions:

- Privacy: Pervasive surveillance and facial recognition threaten individual privacy.

- Bias and Fairness: If training data is biased, models will perpetuate and amplify those biases, leading to discriminatory outcomes.

- Accountability: When a computer vision system in an autonomous vehicle fails, who is responsible?

Developing robust, fair, and transparent computer vision solutions is as important as developing powerful ones. The industry must prioritize ethical frameworks and audits.
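One simple form such an audit can take is comparing a model's accuracy across groups in the evaluation data. The sketch below is a minimal illustration with made-up records and hypothetical group names; real fairness audits use richer metrics and carefully collected data.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Each record: (group, true_label, predicted_label)
Record = Tuple[str, str, str]


def accuracy_by_group(records: List[Record]) -> Dict[str, float]:
    """Compute the fraction of correct predictions separately for each group."""
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for group, truth, predicted in records:
        total[group] += 1
        correct[group] += int(truth == predicted)
    return {group: correct[group] / total[group] for group in total}


# Illustrative, fabricated-for-demo evaluation results.
records = [
    ("group_a", "face", "face"),
    ("group_a", "face", "face"),
    ("group_a", "no_face", "no_face"),
    ("group_a", "face", "face"),
    ("group_b", "face", "no_face"),
    ("group_b", "face", "face"),
    ("group_b", "no_face", "no_face"),
    ("group_b", "face", "no_face"),
]

per_group = accuracy_by_group(records)
gap = max(per_group.values()) - min(per_group.values())
```

A large gap between groups is a signal to revisit the training data and the model before deployment, not proof of the cause on its own.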

Conclusion:

Machine perception, the ability of machines to interpret and understand visual data from the world, has moved from the realms of science fiction to an indispensable technology that is reshaping our world. This field, which enables machines to derive meaning from pixels and imagery, is fundamentally altering a vast spectrum of human activity. From the intricate sensor fusion and object recognition systems in autonomous vehicles that allow them to navigate complex environments, to the intelligent inventory management and customer behavior analysis in retail, and even the personal health monitoring that can track physiological changes, its impact is profound and multifaceted.

The field is driven by brilliant machine learning engineers and AI researchers who are constantly pushing the boundaries of what’s possible. They develop sophisticated algorithms and neural networks capable of not just “seeing” but of interpreting scenes, identifying patterns, and making informed decisions based on visual input. As we look to the future, the potential for advanced perceptual AI to solve complex problems and improve human life is limitless, provided we navigate its challenges, such as ethical data use and algorithmic bias, with wisdom and responsibility.

The machines are learning to perceive and interpret the visual world. In doing so, they are giving us a new lens, a powerful tool for data analysis and automation, through which to understand and improve our own world. This technology is not only about replicating human sight, but also about creating a new form of analytical capability that can process visual information at a scale, speed, and consistency far beyond human capacity.