Introduction: The Evolution of Feature Extraction in the AI Era

Feature extraction has undergone a revolutionary transformation, evolving from manual engineering to AI-driven automation that fundamentally changes how organizations derive value from their data. In 2025, feature extraction represents the critical bridge between raw data and actionable insights, with AI-powered techniques enabling the discovery of complex patterns and relationships that human engineers might never identify. The global feature engineering market, valued at $1.2 billion in 2022, is projected to reach $4.8 billion by 2027, reflecting the growing recognition that sophisticated feature extraction is not merely a preprocessing step but a core competitive advantage in the age of artificial intelligence.

The traditional approach to feature extraction relied heavily on domain expertise, manual experimentation, and heuristic methods that were time-consuming, subjective, and often limited by human cognitive constraints. Today, AI-powered feature extraction leverages machine learning algorithms to automatically discover, generate, and select features that maximize predictive power while minimizing redundancy and noise. This paradigm shift has dramatically accelerated model development cycles, improved model performance, and enabled organizations to extract value from increasingly complex and high-dimensional datasets. From computer vision and natural language processing to time-series analysis and multimodal data integration, modern feature extraction techniques are pushing the boundaries of what’s possible with machine learning.

This comprehensive guide explores the cutting-edge landscape of AI-powered feature extraction in 2025, examining the techniques, tools, and best practices that are transforming how organizations prepare data for machine learning. We’ll delve into automated feature engineering frameworks, deep learning approaches for unstructured data, feature selection algorithms, and emerging trends that are shaping the future of feature extraction. Whether you’re working with structured tabular data, unstructured text and images, or complex multimodal datasets, understanding these advanced feature extraction techniques is essential for building models that deliver accurate, robust, and interpretable predictions.

The Foundation of Modern Feature Extraction

From Manual Engineering to AI-Driven Automation

The journey of feature extraction has progressed through three distinct eras, each marked by increasing levels of automation and sophistication. The first era, spanning from the early days of machine learning through approximately 2015, was characterized by manual feature extraction where data scientists and domain experts would painstakingly craft features based on intuition, domain knowledge, and iterative experimentation. This approach, while valuable for developing interpretable features, was inherently limited by human bias, time constraints, and the difficulty of discovering complex, non-linear relationships in high-dimensional data.

The second era, emerging around 2015-2020, introduced automated feature extraction tools that could generate large numbers of candidate features from raw data. Libraries like Featuretools and tsfresh automated the creation of standard transformations, aggregations, and interactions, significantly accelerating the feature engineering process. However, these tools still largely operated within the constraints of predefined transformation templates and struggled to discover truly novel features or to handle complex unstructured data types effectively.

The current era, fully realized by 2025, represents the age of AI-powered feature extraction where machine learning algorithms don’t just apply predefined transformations but actually learn how to extract meaningful representations directly from raw data. Deep learning architectures, reinforcement learning for feature selection, and generative approaches for feature creation have transformed feature extraction from a manual art to an automated science. This shift has enabled organizations to tackle previously intractable problems and extract value from data types that were too complex for traditional methods.

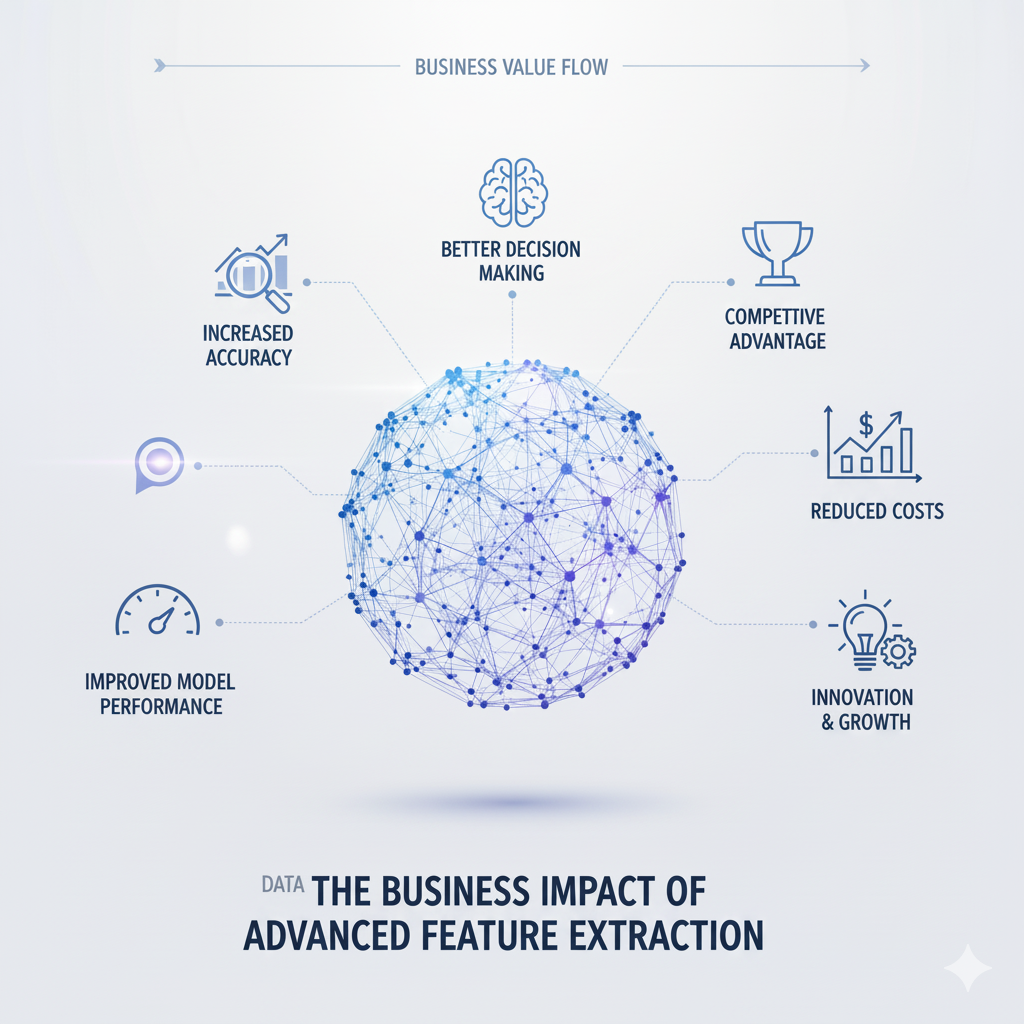

The Business Impact of Advanced Feature Extraction

The evolution of feature extraction has produced tangible business impacts across industries and applications. In healthcare, AI-powered feature extraction from medical images has improved disease detection accuracy by 15-30% compared to manual feature engineering, while reducing radiologist workload by automating routine measurements and anomaly detection. In financial services, automated feature extraction from transaction data has enhanced fraud detection systems, with one major bank reporting a 40% reduction in false positives while maintaining detection rates.

Manufacturing companies leveraging AI-driven feature extraction from sensor data have achieved predictive maintenance accuracy improvements of 25-35%, resulting in reduced downtime and maintenance costs. In retail, feature extraction from customer behavior data has powered recommendation systems that deliver 20% higher conversion rates through more nuanced understanding of customer preferences and intent. These improvements stem from the ability of AI-powered feature extraction to discover subtle patterns and interactions that elude manual engineering approaches.

The economic impact extends beyond improved model performance to development efficiency and resource optimization. Organizations implementing automated feature extraction report 60-80% reductions in feature engineering time, allowing data scientists to focus on higher-value tasks like model interpretation and business integration. The consistency and reproducibility of automated feature extraction also reduce model variance and improve deployment reliability, critical considerations for production machine learning systems. As feature extraction continues to evolve, its role as a key enabler of business value from AI investments becomes increasingly clear.

Deep Learning Approaches to Feature Extraction

Convolutional Neural Networks for Spatial Feature Extraction

Convolutional Neural Networks (CNNs) have revolutionized feature extraction from spatial data, particularly images, by automatically learning hierarchical representations that capture features at multiple scales of abstraction. The fundamental innovation of CNNs lies in their use of convolutional filters that slide across the input, detecting local patterns wherever they occur. Because the same filter weights are shared across positions, convolution is translation-equivariant: a shifted input produces a correspondingly shifted feature map. Pooling operations add spatial abstraction and a degree of local translation invariance. Together these properties let CNNs learn features that are both discriminative and robust to small shifts in position; robustness to larger changes in scale and orientation usually comes from data augmentation and multi-scale architectures rather than from convolution itself.

In 2025, CNN architectures for feature extraction have evolved beyond basic convolution-pooling stacks to incorporate sophisticated mechanisms that enhance feature quality and efficiency. Attention mechanisms allow networks to dynamically focus on the most relevant regions of an image when extracting features, improving both performance and interpretability. Dilated convolutions expand receptive fields without increasing parameter counts, capturing broader context while maintaining computational efficiency. Depthwise separable convolutions factorize a standard convolution into a per-channel (depthwise) spatial filter followed by a 1×1 (pointwise) convolution that mixes channels, sharply reducing parameters and computation while largely preserving representational power.
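The parameter savings from depthwise separable convolutions can be checked with simple arithmetic. This sketch counts weights only (biases omitted); the layer sizes (3×3 kernel, 128 input and 256 output channels) are arbitrary illustrative choices, and the helper names are hypothetical.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a k x k convolution mapping c_in to c_out channels (no bias)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k: int, c_in: int, c_out: int) -> int:
    """Depthwise k x k filter per input channel, then a 1 x 1 pointwise convolution."""
    depthwise = k * k * c_in  # one spatial filter per input channel
    pointwise = c_in * c_out  # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


std = standard_conv_params(3, 128, 256)
sep = depthwise_separable_params(3, 128, 256)
print(std, sep, round(std / sep, 1))  # 294912 33920 8.7
```

The ratio approaches k² as the number of output channels grows, which is where the roughly 8 to 9 times saving for 3×3 kernels comes from.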

The application of CNN-based feature extraction extends far beyond traditional computer vision tasks. In medical imaging, CNNs extract features from X-rays, MRIs, and CT scans that enable early disease detection and precise anatomical measurements. In autonomous vehicles, they process LiDAR and camera data to identify obstacles, read signs, and understand driving environments. In manufacturing, they analyze product images for quality control and defect detection. The key advantage across these applications is the ability to learn domain-specific features directly from raw data, eliminating the need for manual feature engineering and often discovering patterns that human experts might miss.

Recurrent and Transformer Architectures for Sequential Feature Extraction

Recurrent Neural Networks (RNNs) and their more advanced variants, particularly Long Short-Term Memory (LSTM) and Gated Recurrent Units (GRU), have transformed feature extraction from sequential data by modeling temporal dependencies and context. Unlike traditional time-series features that rely on fixed windows and statistical aggregations, RNNs can learn features that capture complex patterns across variable-length sequences, making them ideal for applications like natural language processing, speech recognition, and time-series forecasting.

The fundamental strength of RNN-based feature extraction lies in the network’s ability to maintain an internal state that serves as a compressed representation of sequence history. This lets RNNs capture dependencies and contextual information spanning many time steps, which is crucial for understanding sequential patterns; the gating in LSTMs and GRUs exists precisely because plain RNNs struggle to retain information over long ranges. In natural language processing, RNNs extract features that capture semantic meaning, syntactic structure, and discourse patterns from text. In financial applications, they identify complex market patterns and regime changes from price and volume data. In IoT applications, they process sensor streams to detect anomalies and predict equipment failures.
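A minimal sketch of the idea, assuming a plain (Elman-style) recurrent cell written with NumPy: the final hidden state acts as a fixed-length feature vector for a sequence of any length. The weights are random for illustration; in practice they are learned, and an LSTM or GRU cell would replace the simple `tanh` update.

```python
import numpy as np


def rnn_features(sequence: np.ndarray, W_x: np.ndarray, W_h: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Run an Elman-style RNN over a (T, d_in) sequence and return the final hidden state."""
    h = np.zeros(W_h.shape[0])
    for x_t in sequence:
        # The new state mixes the current input with the compressed history held in h.
        h = np.tanh(W_x @ x_t + W_h @ h + b)
    return h


rng = np.random.default_rng(0)
d_in, d_hidden = 4, 8
W_x = rng.normal(scale=0.5, size=(d_hidden, d_in))
W_h = rng.normal(scale=0.5, size=(d_hidden, d_hidden))
b = np.zeros(d_hidden)

# Sequences of different lengths map to feature vectors of the same size.
short_seq = rng.normal(size=(5, d_in))
long_seq = rng.normal(size=(40, d_in))
f_short = rnn_features(short_seq, W_x, W_h, b)
f_long = rnn_features(long_seq, W_x, W_h, b)
print(f_short.shape, f_long.shape)  # (8,) (8,)
```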

Transformer architectures have emerged as a powerful alternative to RNNs for sequential feature extraction, particularly for tasks involving long-range dependencies. The self-attention mechanism at the heart of transformers allows them to directly model relationships between all positions in a sequence, regardless of distance. This has proven especially valuable for natural language processing, where transformers like BERT and GPT extract contextualized word representations that capture nuanced semantic relationships. Beyond text, transformers are increasingly used for time-series feature extraction, where their ability to capture complex temporal patterns has outperformed traditional methods on a number of benchmarks, though not uniformly.
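Single-head scaled dot-product self-attention, the core operation described above, can be sketched in a few lines of NumPy. The projection matrices are random stand-ins for learned weights; real transformers add multiple heads, positional information, residual connections, and layer normalization.

```python
import numpy as np


def softmax(z: np.ndarray, axis: int = -1) -> np.ndarray:
    z = z - z.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(X: np.ndarray, W_q: np.ndarray, W_k: np.ndarray, W_v: np.ndarray):
    """Single-head scaled dot-product self-attention over a (T, d_model) sequence."""
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    d_k = Q.shape[-1]
    # scores[i, j]: how strongly position i attends to position j, for every pair,
    # so distant positions interact directly rather than through a recurrent state.
    scores = Q @ K.T / np.sqrt(d_k)
    weights = softmax(scores, axis=-1)
    return weights @ V, weights


rng = np.random.default_rng(0)
T, d_model, d_k = 6, 16, 8
X = rng.normal(size=(T, d_model))
W_q, W_k, W_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))
features, attn = self_attention(X, W_q, W_k, W_v)
print(features.shape, attn.shape)  # (6, 8) (6, 6)
```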

Autoencoders and Representation Learning

Autoencoders provide a powerful framework for unsupervised feature extraction by learning compressed representations of input data through an encoder-decoder architecture. The encoder network transforms high-dimensional input into a lower-dimensional latent representation, while the decoder attempts to reconstruct the original input from this compressed representation. The features learned by the encoder often capture the most salient aspects of the data, making them valuable for downstream tasks like classification, clustering, and anomaly detection.
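The encoder-decoder idea can be sketched with a linear autoencoder trained by plain gradient descent in NumPy, on synthetic data lying near a 2-D subspace of a 10-D space. This is a deliberately small toy built on assumed sizes and learning rate: with linear layers and squared error, the learned code spans the same subspace as PCA, whereas practical autoencoders use nonlinear layers and a deep learning framework.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data: 10-D points near a 2-D subspace, plus a little noise.
n, d, k = 500, 10, 2
latent = rng.normal(size=(n, k))
mixing = rng.normal(size=(k, d))
X = latent @ mixing + 0.05 * rng.normal(size=(n, d))
X = (X - X.mean(axis=0)) / X.std()  # center and rescale to keep gradient steps stable

W_enc = 0.1 * rng.normal(size=(d, k))  # encoder: d -> k
W_dec = 0.1 * rng.normal(size=(k, d))  # decoder: k -> d


def reconstruction_loss(X, W_enc, W_dec):
    return np.sum((X @ W_enc @ W_dec - X) ** 2) / len(X)


initial_loss = reconstruction_loss(X, W_enc, W_dec)
lr = 0.01
for _ in range(2000):
    H = X @ W_enc            # latent codes: the extracted features
    err = H @ W_dec - X      # reconstruction error
    G = 2 * err / len(X)     # gradient of the loss w.r.t. the reconstruction
    grad_dec = H.T @ G
    grad_enc = X.T @ (G @ W_dec.T)
    W_dec -= lr * grad_dec
    W_enc -= lr * grad_enc

final_loss = reconstruction_loss(X, W_enc, W_dec)
features = X @ W_enc  # (500, 2) compressed representation for downstream tasks
print(f"loss {initial_loss:.3f} -> {final_loss:.4f}")
```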

In 2025, autoencoder variants have evolved to address specific feature extraction challenges. Variational Autoencoders (VAEs) learn probabilistic latent representations that enable generative capabilities and more robust feature extraction. Denoising Autoencoders are trained to reconstruct clean data from corrupted inputs, learning features that are robust to noise and missing values. Contractive Autoencoders add a regularization term that penalizes sensitivity to small input variations, encouraging the learning of features that capture fundamental data patterns rather than noise.

Representation learning extends beyond autoencoders to encompass a broader class of techniques that learn useful data representations without explicit supervision. Self-supervised learning approaches create supervisory signals from the data itself, such as predicting missing parts of an input or determining the temporal ordering of frames. Contrastive learning methods learn representations by maximizing agreement between differently augmented views of the same data while minimizing agreement with other examples. These approaches have proven particularly powerful for feature extraction from unlabeled data, enabling organizations to leverage their vast stores of unstructured information without expensive manual labeling.
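The contrastive objective can be written as an InfoNCE-style loss: each example’s two views form a positive pair, and the other examples in the batch act as negatives. This sketch assumes the embeddings are already computed (noisy copies of random vectors stand in for two augmentations passed through an encoder), and the temperature of 0.1 is an arbitrary choice.

```python
import numpy as np


def l2_normalize(z: np.ndarray) -> np.ndarray:
    return z / np.linalg.norm(z, axis=1, keepdims=True)


def info_nce_loss(z_a: np.ndarray, z_b: np.ndarray, temperature: float = 0.1) -> float:
    """Row i of z_a should match row i of z_b (its positive) and not the
    other rows of z_b (the in-batch negatives)."""
    z_a, z_b = l2_normalize(z_a), l2_normalize(z_b)
    logits = z_a @ z_b.T / temperature            # (N, N) scaled cosine similarities
    logits -= logits.max(axis=1, keepdims=True)   # numerical stability
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    # Cross-entropy with the diagonal (matching views) as the target class.
    return float(-np.mean(np.diag(log_probs)))


rng = np.random.default_rng(0)
base = rng.normal(size=(8, 32))                    # a batch of 8 examples
view_1 = base + 0.1 * rng.normal(size=base.shape)  # stand-ins for two augmentations
view_2 = base + 0.1 * rng.normal(size=base.shape)
mismatched = np.roll(view_2, 1, axis=0)            # every row paired with the wrong partner

print(info_nce_loss(view_1, view_2), info_nce_loss(view_1, mismatched))
```

SimCLR’s NT-Xent loss is a symmetric variant of this idea that also treats the other augmented views in the batch as negatives.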

Automated Feature Engineering Frameworks

Tree-Based Automated Feature Engineering

Tree-based algorithms have emerged as powerful tools for automated feature extraction, particularly from structured tabular data. Methods like LightGBM, XGBoost, and CatBoost not only serve as predictive models but also provide rich information about feature importance and interactions that can guide feature engineering. The key insight is that tree ensembles naturally perform implicit feature extraction by creating splits that capture interactions and non-linear relationships in the data.

Advanced tree-based feature extraction techniques leverage the internal structure of trained models to generate new features. Leaf encoding transforms each data point into a vector indicating which leaf node it falls into in each tree of an ensemble, effectively creating features that capture complex decision paths. Tree embedding methods learn dense representations by considering the entire ensemble structure, creating features that preserve the hierarchical relationships learned by the trees. These approaches often yield features that are highly predictive while maintaining some level of interpretability through their connection to the original tree structures.
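Leaf encoding can be sketched with scikit-learn, whose gradient boosting estimators expose an `apply()` method returning the leaf index each sample reaches in every tree. The dataset is synthetic and the hyperparameters are arbitrary; fitting the trees and the downstream model on separate splits is one simple way to limit the memorization risk this section warns about.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import OneHotEncoder

X, y = make_classification(n_samples=2000, n_features=10, random_state=0)
X_tree, X_rest, y_tree, y_rest = train_test_split(X, y, test_size=0.5, random_state=0)

# 1. Fit a small boosted ensemble on one split.
gbm = GradientBoostingClassifier(n_estimators=50, max_depth=3, random_state=0)
gbm.fit(X_tree, y_tree)

# 2. apply() returns leaf indices with shape (n_samples, n_estimators, 1)
#    for binary classification, so drop the last axis.
leaves = gbm.apply(X_rest)[:, :, 0]

# 3. One-hot encode the leaf reached in each tree: one active column per tree.
encoder = OneHotEncoder(handle_unknown="ignore")
leaf_features = encoder.fit_transform(leaves)  # sparse matrix

# 4. A downstream linear model on the leaf encoding.
clf = LogisticRegression(max_iter=1000).fit(leaf_features, y_rest)
print(leaf_features.shape)
```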

The practical implementation of tree-based feature extraction involves several considerations. The quality of extracted features depends heavily on the performance and diversity of the underlying tree models, necessitating careful hyperparameter tuning and ensemble construction. The computational cost of generating tree-based features can be significant for large datasets or deep trees, though optimizations like histogram-based methods and GPU acceleration help mitigate this. Perhaps most importantly, tree-based features must be validated on holdout data to ensure they generalize well and don’t simply memorize training set patterns.

Genetic Programming for Feature Synthesis

Genetic programming approaches to feature extraction treat feature creation as an evolutionary process, where populations of candidate features undergo selection, crossover, and mutation to discover increasingly effective transformations. Starting from a set of primitive operations (arithmetic functions, trigonometric operations, logical comparisons, etc.), genetic programming systematically explores the space of possible feature transformations, evaluating candidates based on their ability to improve model performance or other quality metrics.

Modern genetic programming frameworks for feature extraction incorporate several advancements that improve efficiency and effectiveness. Multi-objective optimization balances feature predictive power with complexity, preventing the creation of overly complex features that may overfit. Domain-specific primitives incorporate operations relevant to particular data types or problem domains, guiding the search toward more meaningful transformations. Transfer learning leverages features discovered for similar problems, accelerating the search process and improving feature quality.
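A compact genetic programming loop in pure Python illustrates the mechanics: candidate features are expression trees over the input variables, selected by fitness and varied by subtree crossover and mutation. Several simplifications are assumptions of this sketch: fitness is the absolute Pearson correlation with the target minus a size penalty (a crude scalar stand-in for true multi-objective optimization), selection is truncation with elitism, and the target relationship is synthetic.

```python
import math
import random

# Primitive set: binary operators; terminals are input variables ("x", i).
OPS = {
    "add": lambda a, b: a + b,
    "sub": lambda a, b: a - b,
    "mul": lambda a, b: a * b,
    "div": lambda a, b: a / b if abs(b) > 1e-9 else 1.0,  # protected division
}


def random_tree(n_vars, depth):
    """Grow a random expression tree with at most `depth` operator levels."""
    if depth == 0 or random.random() < 0.3:
        return ("x", random.randrange(n_vars))
    op = random.choice(list(OPS))
    return (op, random_tree(n_vars, depth - 1), random_tree(n_vars, depth - 1))


def evaluate(tree, row):
    if tree[0] == "x":
        return row[tree[1]]
    return OPS[tree[0]](evaluate(tree[1], row), evaluate(tree[2], row))


def size(tree):
    return 1 if tree[0] == "x" else 1 + size(tree[1]) + size(tree[2])


def nodes(tree, path=()):
    """Yield (path, subtree) pairs so variation operators can target any node."""
    yield path, tree
    if tree[0] != "x":
        yield from nodes(tree[1], path + (1,))
        yield from nodes(tree[2], path + (2,))


def replace_at(tree, path, new):
    if not path:
        return new
    parts = list(tree)
    parts[path[0]] = replace_at(tree[path[0]], path[1:], new)
    return tuple(parts)


def abs_corr(xs, ys):
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) * (x - mx) for x in xs)  # multiply, not **, to avoid OverflowError
    vy = sum((y - my) * (y - my) for y in ys)
    return abs(cov) / math.sqrt(vx * vy) if vx > 0 and vy > 0 else 0.0


def fitness(tree, X, y, penalty=0.01):
    # Scalarized trade-off: predictive signal minus a complexity penalty.
    return abs_corr([evaluate(tree, r) for r in X], y) - penalty * size(tree)


def evolve(X, y, pop_size=60, generations=30, seed=0):
    random.seed(seed)
    n_vars = len(X[0])
    pop = [random_tree(n_vars, 3) for _ in range(pop_size)]
    for _ in range(generations):
        ranked = sorted(pop, key=lambda t: fitness(t, X, y), reverse=True)
        parents = ranked[: pop_size // 4]  # truncation selection
        children = list(parents)           # elitism: the best trees survive unchanged
        while len(children) < pop_size:
            a, b = random.sample(parents, 2)
            path, _ = random.choice(list(nodes(a)))
            _, donor = random.choice(list(nodes(b)))
            child = replace_at(a, path, donor)  # subtree crossover
            if random.random() < 0.3:           # subtree mutation
                path, _ = random.choice(list(nodes(child)))
                child = replace_at(child, path, random_tree(n_vars, 2))
            children.append(child)
        pop = children
    return max(pop, key=lambda t: fitness(t, X, y))


random.seed(1)
X = [[random.uniform(1, 3) for _ in range(3)] for _ in range(200)]
y = [r[0] * r[1] / r[2] for r in X]  # hidden relationship the search should approach
best = evolve(X, y)
print(best, round(abs_corr([evaluate(best, r) for r in X], y), 3))
```

Because parents are carried over unchanged, the best fitness can never decrease from one generation to the next; the size penalty keeps the trees from bloating.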

The application of genetic programming to feature extraction has demonstrated particular strength in domains with complex physical relationships or known but intricate mathematical relationships between variables. In materials science, it has discovered features that capture non-linear property relationships from experimental data. In finance, it has identified technical indicators that outperform traditional measures. In engineering, it has derived features that approximate complex physical phenomena from sensor data. The key advantage is the ability to discover feature transformations that would be unlikely to occur to human engineers, often revealing unexpected but meaningful relationships in the data.

Neural Architecture Search for Feature Learning

Neural Architecture Search (NAS) has emerged as a powerful paradigm for automating the design of neural network architectures, including those used for feature extraction. By framing architecture design as an optimization problem, NAS algorithms can discover network structures that are specifically tailored to particular datasets and tasks, often outperforming human-designed architectures.

In the context of feature extraction, NAS focuses on finding architectures that learn maximally informative and generalizable representations from raw data. This involves optimizing not just for final task performance but also for representation quality metrics like disentanglement, robustness, and transferability. Modern NAS approaches for feature extraction include differentiable architecture search that treats architecture selection as a continuous optimization problem, evolutionary methods that maintain populations of architectures, and reinforcement learning-based approaches that learn search policies.

The practical implementation of NAS for feature extraction requires careful consideration of search space design, performance estimation, and computational constraints. The search space defines what architectures are possible, balancing expressiveness with tractability. Performance estimation strategies range from full training (accurate but expensive) to proxy metrics and weight sharing (faster but potentially less reliable). Computational efficiency is particularly important for feature extraction, where the goal is often to find good architectures quickly rather than exhaustively searching for the absolute optimum.
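The three design decisions above show up even in a toy random search, a common NAS baseline, built on scikit-learn’s `MLPClassifier`. The search space (depth, width, activation), the eight-trial budget, and the use of a truncated training run as a low-fidelity performance estimate are all illustrative assumptions. This is not differentiable, evolutionary, or reinforcement learning-based search.

```python
import random
import warnings

from sklearn.datasets import load_digits
from sklearn.exceptions import ConvergenceWarning
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier

# Search space: every architecture this toy search can ever propose.
SEARCH_SPACE = {
    "n_layers": [1, 2, 3],
    "width": [16, 32, 64, 128],
    "activation": ["relu", "tanh"],
}


def sample_architecture(rng):
    return {name: rng.choice(options) for name, options in SEARCH_SPACE.items()}


def proxy_score(arch, X_tr, y_tr, X_val, y_val, budget=30):
    """Low-fidelity performance estimate: train briefly, score on held-out data."""
    model = MLPClassifier(
        hidden_layer_sizes=(arch["width"],) * arch["n_layers"],
        activation=arch["activation"],
        max_iter=budget,  # truncated training keeps each trial cheap
        random_state=0,
    )
    with warnings.catch_warnings():
        warnings.simplefilter("ignore", ConvergenceWarning)  # expected with a small budget
        model.fit(X_tr, y_tr)
    return model.score(X_val, y_val)


X, y = load_digits(return_X_y=True)
X = X / 16.0  # digits pixel values range from 0 to 16
X_tr, X_val, y_tr, y_val = train_test_split(X, y, test_size=0.3, random_state=0)

rng = random.Random(0)
trials = []
for _ in range(8):
    arch = sample_architecture(rng)
    trials.append((proxy_score(arch, X_tr, y_tr, X_val, y_val), arch))

best_score, best_arch = max(trials, key=lambda t: t[0])
print(best_arch, round(best_score, 3))
```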

Feature Extraction for Unstructured Data

Natural Language Processing Feature Extraction

Natural Language Processing (NLP) has undergone a revolution in feature extraction with the advent of contextualized word embeddings and transformer-based language models. Traditional NLP feature extraction relied on bag-of-words representations, TF-IDF weighting, and static word embeddings like Word2Vec and GloVe. While these methods captured some semantic information, they struggled with polysemy (words with multiple meanings) and failed to capture deeper linguistic structure and context.
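Bag-of-words and TF-IDF features can be produced with scikit-learn’s `CountVectorizer` and `TfidfVectorizer`. The three toy sentences are made up to show the polysemy limitation: "bank" maps to a single column whether it means a financial institution or a riverside.

```python
from sklearn.feature_extraction.text import CountVectorizer, TfidfVectorizer

docs = [
    "the bank approved the loan",
    "we sat on the river bank",
    "the loan was repaid early",
]

counts = CountVectorizer().fit_transform(docs)  # raw term counts per document

tfidf = TfidfVectorizer().fit(docs)
weights = tfidf.transform(docs)                 # counts reweighted by inverse document frequency
vocab = tfidf.vocabulary_

# "the" occurs in every document, so it gets the lowest IDF weight.
print(tfidf.idf_[vocab["the"]], tfidf.idf_[vocab["river"]])
# "bank" has one column for both senses: static features cannot tell them apart.
print(weights.shape, vocab["bank"])
```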

The breakthrough in NLP feature extraction came with transformer-based models like BERT, GPT, and their successors, which generate context-aware representations that capture nuanced semantic relationships. These models are pre-trained on massive text corpora using self-supervised objectives (masked language modeling and next sentence prediction in the original BERT, next-token prediction in GPT-style models), learning rich linguistic representations that can be fine-tuned for specific tasks. The resulting features capture not just word meanings but also syntactic structure, discourse relationships, and even some forms of reasoning.

In 2025, NLP feature extraction has evolved beyond single-language models to encompass multilingual representations that capture cross-lingual semantic relationships, domain-specific models pre-trained on scientific, legal, or medical text, and efficient architectures that provide near-state-of-the-art performance with reduced computational requirements. Techniques like knowledge distillation transfer capabilities from large models to smaller, more efficient ones, while prompt-based learning enables effective feature extraction with minimal task-specific training data. These advancements have made sophisticated NLP feature extraction accessible to organizations without massive computational resources.

Computer Vision Feature Extraction

Computer vision feature extraction has progressed from handcrafted features like SIFT and HOG to deep learning approaches that automatically learn hierarchical visual representations. Modern CNN architectures pre-trained on large-scale image datasets like ImageNet learn features that capture visual concepts at multiple levels of abstraction, from simple edges and textures in early layers to complex objects and scenes in deeper layers.

The evolution of computer vision feature extraction in 2025 is characterized by several key trends. Self-supervised learning methods like contrastive learning enable effective feature extraction from unlabeled image data, reducing dependence on expensive manual annotation. Vision transformers apply transformer architectures to images, dividing them into patches and processing them as sequences, often matching or exceeding traditional CNNs, particularly when pre-trained on large datasets. Multimodal models like CLIP learn joint representations of images and text, enabling zero-shot classification and cross-modal retrieval.

Practical applications of modern computer vision feature extraction span numerous domains. In healthcare, features extracted from medical images enable disease diagnosis, treatment planning, and surgical guidance. In autonomous systems, they support object detection, scene understanding, and navigation. In e-commerce, they power visual search, product recommendation, and quality assessment. In agriculture, they monitor crop health, predict yields, and detect pests. The common thread is the ability to learn domain-relevant visual features directly from pixel data, often discovering patterns that are too subtle or complex for human perception.

Audio and Time-Series Feature Extraction

Audio feature extraction has evolved from traditional signal processing techniques like MFCCs and spectral features to deep learning approaches that learn representations directly from raw audio waveforms or spectrograms. Convolutional neural networks applied to spectrograms learn features that capture spectral patterns and temporal evolution, while recurrent networks process audio sequences to model temporal dependencies. More recently, transformer architectures have been adapted for audio, capturing long-range dependencies in speech and music.
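Spectrogram-based models start from a time-frequency representation. A minimal log-magnitude spectrogram can be computed with NumPy’s FFT; the frame length, hop size, sample rate, and test tone below are illustrative assumptions, and MFCCs would add a mel filterbank and a discrete cosine transform on top of this.

```python
import numpy as np


def log_spectrogram(signal, frame_len=256, hop=128, eps=1e-10):
    """Log-magnitude short-time Fourier transform: shape (n_frames, frame_len // 2 + 1)."""
    window = np.hanning(frame_len)
    n_frames = 1 + (len(signal) - frame_len) // hop
    frames = np.stack([signal[i * hop : i * hop + frame_len] * window for i in range(n_frames)])
    magnitude = np.abs(np.fft.rfft(frames, axis=1))
    return np.log(magnitude + eps)  # eps avoids log(0) in silent bins


sr = 8000                            # sample rate in Hz (assumed)
t = np.arange(sr) / sr               # one second of audio
tone = np.sin(2 * np.pi * 1000 * t)  # pure 1 kHz tone
spec = log_spectrogram(tone)
peak_bin = spec.mean(axis=0).argmax()
print(spec.shape, peak_bin * sr / 256)  # frequency of the strongest bin in Hz
```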

The applications of advanced audio feature extraction are diverse and impactful. In speech recognition, deep learning features enable robust performance across accents, noise conditions, and speaking styles. In music information retrieval, they support genre classification, mood detection, and recommendation. In healthcare, they analyze respiratory sounds, heartbeats, and other biological audio signals for diagnostic purposes. In security and surveillance, they detect specific sounds like glass breaking or alarms.

Time-series feature extraction has similarly advanced from statistical descriptors and fixed-size windows to learned representations that capture complex temporal patterns. Dilated convolutional networks capture multi-scale temporal dependencies without excessive parameter growth. Attention mechanisms identify important time steps and relationships. Shapelet-based methods learn discriminative subsequences that characterize different classes or states. These approaches have proven particularly valuable for applications like predictive maintenance, financial forecasting, and physiological monitoring, where capturing complex temporal dynamics is essential for accurate predictions.
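The multi-scale claim for dilated convolutions can be checked with a minimal NumPy sketch of a causal 1-D dilated convolution. The all-ones kernels and the dilation schedule (1, 2, 4, 8) are illustrative assumptions rather than learned weights; feeding an impulse through the stack shows how far each output can see.

```python
import numpy as np


def dilated_causal_conv(x, kernel, dilation):
    """1-D causal convolution: out[t] depends only on x[t], x[t - d], x[t - 2d], ..."""
    k = len(kernel)
    pad = dilation * (k - 1)
    x_padded = np.concatenate([np.zeros(pad), x])  # left-pad so no future values leak in
    out = np.zeros(len(x))
    for t in range(len(x)):
        taps = x_padded[t + pad - dilation * np.arange(k)]  # current and past samples
        out[t] = np.dot(kernel, taps)
    return out


# Four kernel-size-2 layers with dilations 1, 2, 4, 8 give a receptive field of
# 1 + (2 - 1) * (1 + 2 + 4 + 8) = 16 steps using only 8 weights.
x = np.zeros(32)
x[10] = 1.0  # unit impulse at t = 10
h = x
for d in (1, 2, 4, 8):
    h = dilated_causal_conv(h, np.array([1.0, 1.0]), d)
affected = np.nonzero(h)[0]
print(affected.min(), affected.max())  # 10 25
```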

Feature Selection and Dimensionality Reduction

Automated Feature Selection Techniques

Feature selection represents a critical component of the feature extraction pipeline, identifying the most relevant features while eliminating redundancy and noise. In 2025, feature selection has evolved from simple filter methods based on correlation or mutual information to sophisticated approaches that consider feature interactions, model-specific importance, and multi-objective optimization.

Model-based feature selection leverages the intrinsic feature importance measures provided by algorithms like tree ensembles and linear models with regularization. Permutation importance quantifies feature relevance by measuring performance degradation when feature values are randomly shuffled. SHAP values provide theoretically grounded feature importance scores that account for complex interactions. These methods offer the advantage of being tailored to specific modeling approaches, though they can be computationally expensive for large feature sets.
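scikit-learn’s `sklearn.inspection.permutation_importance` implements the shuffling procedure described above. In this sketch the data is synthetic, with three informative features placed first and five noise features after them, and the random forest is an arbitrary choice of model.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.inspection import permutation_importance
from sklearn.model_selection import train_test_split

# shuffle=False keeps the 3 informative columns first, followed by 5 noise columns.
X, y = make_classification(n_samples=1000, n_features=8, n_informative=3,
                           n_redundant=0, shuffle=False, random_state=0)
X_tr, X_te, y_tr, y_te = train_test_split(X, y, random_state=0)
model = RandomForestClassifier(n_estimators=200, random_state=0).fit(X_tr, y_tr)

# Shuffle one column at a time on held-out data and record the drop in accuracy.
result = permutation_importance(model, X_te, y_te, n_repeats=10, random_state=0)
print(result.importances_mean.round(3))
```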

Wrapper methods evaluate feature subsets by actually training models on them and measuring performance. While computationally intensive, modern implementations use efficient search strategies like recursive feature elimination, genetic algorithms, and beam search to explore the feature space intelligently. Multi-objective optimization balances predictive performance with feature set size, complexity, and acquisition cost, providing practical feature subsets that consider real-world constraints beyond pure accuracy.
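Recursive feature elimination is available as `sklearn.feature_selection.RFE`, which repeatedly refits an estimator and discards the features with the smallest coefficients or importances. The synthetic regression problem (10 features, 4 informative) and the linear model are assumptions made for illustration.

```python
from sklearn.datasets import make_regression
from sklearn.feature_selection import RFE
from sklearn.linear_model import LinearRegression

# coef=True also returns the ground-truth coefficients (zero for uninformative features).
X, y, true_coef = make_regression(n_samples=300, n_features=10, n_informative=4,
                                  noise=1.0, coef=True, random_state=0)

# Wrapper method: fit, drop the feature with the smallest |coefficient|, refit, repeat.
selector = RFE(LinearRegression(), n_features_to_select=4, step=1).fit(X, y)
print("kept:   ", selector.support_)
print("true:   ", true_coef != 0)
print("ranking:", selector.ranking_)  # 1 = kept; larger numbers were eliminated earlier
```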

Embedded methods integrate feature selection directly into the model training process. LASSO and elastic net regression automatically perform feature selection through L1 regularization. Tree-based algorithms naturally perform feature selection through their splitting criteria. Neural networks with sparsity-inducing regularization learn to ignore irrelevant features. These approaches offer a good balance of performance and efficiency, though they may be specific to particular model classes.
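A minimal example of embedded selection with scikit-learn’s `Lasso`: on synthetic data where only two of eight features drive the target, the L1 penalty sets the remaining coefficients to exactly zero. The penalty strength `alpha=0.1` is an illustrative choice; in practice it is usually tuned, for example with `LassoCV`.

```python
import numpy as np
from sklearn.linear_model import Lasso
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)
X = rng.normal(size=(500, 8))
# Only features 0 and 3 drive the target; the rest are pure noise.
y = 3.0 * X[:, 0] - 2.0 * X[:, 3] + 0.1 * rng.normal(size=500)

X_std = StandardScaler().fit_transform(X)  # L1 penalties are sensitive to feature scale
lasso = Lasso(alpha=0.1).fit(X_std, y)
selected = np.flatnonzero(lasso.coef_)     # indices of features with nonzero weight
print(selected, lasso.coef_.round(2))
```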

Advanced Dimensionality Reduction

Dimensionality reduction techniques transform high-dimensional features into lower-dimensional representations while preserving important structure and relationships. Traditional methods like PCA and t-SNE have been supplemented by more sophisticated approaches that better handle non-linear relationships and preserve different aspects of the data geometry.
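For reference, the linear baseline is short: PCA can be computed from the singular value decomposition of the centered data matrix. This NumPy sketch uses synthetic data generated from two latent factors, so two components should explain nearly all of the variance.

```python
import numpy as np


def pca(X, n_components):
    """Project X onto its top principal components via the SVD of the centered data."""
    X_centered = X - X.mean(axis=0)
    U, S, Vt = np.linalg.svd(X_centered, full_matrices=False)
    components = Vt[:n_components]      # principal directions, one per row
    explained = S**2 / np.sum(S**2)     # fraction of variance per component
    return X_centered @ components.T, components, explained[:n_components]


rng = np.random.default_rng(0)
latent = rng.normal(size=(300, 2))
X = latent @ rng.normal(size=(2, 6)) + 0.01 * rng.normal(size=(300, 6))
Z, components, explained = pca(X, 2)
print(Z.shape, explained.sum().round(4))
```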

UMAP (Uniform Manifold Approximation and Projection) has emerged as a popular alternative to t-SNE, both for visualization and for general-purpose dimensionality reduction, typically running significantly faster and often preserving more of the data’s global structure. Autoencoders learn non-linear dimensionality reductions through neural networks, often capturing more complex patterns than linear methods. Variational autoencoders add probabilistic foundations, enabling generation of new data points and more robust representations.

Manifold learning techniques like Isomap, Locally Linear Embedding, and Laplacian Eigenmaps explicitly model the assumption that high-dimensional data lies on or near a lower-dimensional manifold. These methods can uncover intrinsic data structure that linear methods miss, though they often scale poorly to very large datasets. Recent advances have addressed these scalability issues through approximation techniques and efficient implementations.

Supervised dimensionality reduction methods like Linear Discriminant Analysis and its kernel extensions incorporate label information to find projections that maximize class separation. More recent approaches like Neighborhood Components Analysis learn distance metrics that improve k-nearest neighbor classification. These methods are particularly valuable when the reduced dimensions will be used for specific predictive tasks rather than general exploratory analysis.
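Both supervised methods named above are available in scikit-learn as `LinearDiscriminantAnalysis` and `NeighborhoodComponentsAnalysis`. This sketch uses the Iris dataset for illustration and compares k-nearest-neighbor accuracy on each 2-D projection; the split and the choice of `n_neighbors=5` are arbitrary.

```python
from sklearn.datasets import load_iris
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier, NeighborhoodComponentsAnalysis
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_iris(return_X_y=True)
X_tr, X_te, y_tr, y_te = train_test_split(X, y, stratify=y, random_state=0)

reducers = {
    # LDA: projections that maximize between-class relative to within-class scatter.
    "lda": LinearDiscriminantAnalysis(n_components=2),
    # NCA: a linear map learned to improve stochastic nearest-neighbor accuracy.
    "nca": NeighborhoodComponentsAnalysis(n_components=2, random_state=0),
}
scores = {}
for name, reducer in reducers.items():
    # Unlike PCA, both reducers use the labels y_tr when fitting.
    pipe = make_pipeline(StandardScaler(), reducer, KNeighborsClassifier(n_neighbors=5))
    pipe.fit(X_tr, y_tr)
    scores[name] = pipe.score(X_te, y_te)
print(scores)
```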

Feature Importance and Interpretability

As feature extraction becomes increasingly automated, understanding and interpreting the resulting features has grown in importance. Feature importance methods help identify which features contribute most to model predictions, while feature interpretation techniques explain what these features represent and how they influence outcomes.

Global feature importance methods provide an overall ranking of feature relevance across the entire dataset. Permutation importance measures the drop in model performance when each feature is randomly shuffled. SHAP (SHapley Additive exPlanations) values provide a unified measure of feature importance based on cooperative game theory, accounting for complex feature interactions. Partial dependence plots show how the model’s average prediction changes as a specific feature varies, averaging over the observed values of the other features rather than holding them at a single fixed value.
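The averaging step in partial dependence is easy to misread, so a hand-rolled version may help: for each grid value, overwrite one feature in every row and average the model’s predictions. scikit-learn also provides `sklearn.inspection.partial_dependence`; this manual loop only exposes the mechanics. The Friedman #1 benchmark (where feature 3 enters the target linearly) and the gradient boosting model are illustrative choices.

```python
import numpy as np
from sklearn.datasets import make_friedman1
from sklearn.ensemble import GradientBoostingRegressor


def partial_dependence_1d(model, X, feature, grid):
    """Average prediction when `feature` is set to each grid value in every row.

    The other features keep their observed values, so each point on the curve
    is an average over the data, not a slice with everything else held fixed."""
    averages = []
    for value in grid:
        X_mod = X.copy()
        X_mod[:, feature] = value
        averages.append(model.predict(X_mod).mean())
    return np.array(averages)


# Friedman #1: the target contains the linear term 10 * X[:, 3].
X, y = make_friedman1(n_samples=500, random_state=0)
model = GradientBoostingRegressor(random_state=0).fit(X, y)
grid = np.linspace(0.0, 1.0, 5)
pd_values = partial_dependence_1d(model, X, feature=3, grid=grid)
print(pd_values.round(2))
```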

Local interpretation methods explain individual predictions rather than overall model behavior. LIME (Local Interpretable Model-agnostic Explanations) approximates complex models with interpretable local models around specific instances. Anchors provide high-precision if-then rules that “anchor” a prediction: conditions on a few features under which the model’s prediction stays the same with high probability, whatever the values of the remaining features. Counterfactual explanations show how inputs would need to change to alter model predictions, providing actionable insights for decision-making.

The challenge of feature interpretation becomes particularly acute with deep learning features, which may not have direct semantic meaning. Feature visualization techniques for neural networks generate examples that maximally activate specific neurons or layers, providing insight into what patterns they detect. Concept activation vectors test whether specific human-understandable concepts are encoded in neural network representations. Disentanglement metrics quantify how well different aspects of variation are separated in learned features, with better disentangled features typically being more interpretable.

Emerging Trends and Future Directions

Self-Supervised and Unsupervised Feature Learning

Self-supervised learning has emerged as a powerful paradigm for feature extraction from unlabeled data, addressing the fundamental limitation of supervised approaches that require extensive manual annotation. By creating supervisory signals from the data itself, self-supervised methods can learn rich representations from massive unlabeled datasets, then fine-tune these representations for specific tasks with minimal labeled examples.

The most successful self-supervised approaches for feature extraction are based on contrastive learning, which learns representations by maximizing agreement between differently augmented views of the same data while minimizing agreement with other examples. Methods like SimCLR and MoCo, together with non-contrastive relatives such as BYOL that dispense with negative examples, have achieved remarkable performance on image representation learning, often matching or exceeding supervised pre-training. For sequential data, approaches like BERT’s masked language modeling and CPC’s future prediction have proven equally powerful.

Unsupervised feature learning extends beyond self-supervision to include methods that discover structure without any explicit supervisory signal. Clustering-based methods like DeepCluster and SwAV alternate between clustering representations and learning to predict cluster assignments. Generative approaches like GANs and VAEs learn features as byproducts of modeling the data distribution. Mutual information maximization methods learn features that capture shared information between different views or parts of the data.

The practical implications of these advances are profound. Organizations can now leverage their vast stores of unlabeled data for feature extraction, dramatically reducing dependence on expensive annotation efforts. Domain-specific models can be pre-trained on specialized unlabeled data, learning features tailored to particular applications. Continual learning from streaming unlabeled data enables features to adapt to changing data distributions, maintaining relevance over time.

Multimodal and Cross-Modal Feature Extraction

Multimodal feature extraction addresses the growing prevalence of data that combines multiple modalities—text, images, audio, video, structured data—requiring representations that capture both within-modality patterns and cross-modal relationships. The challenge lies in learning features that leverage complementary information across modalities while handling their different statistical properties and structures.

Modern approaches to multimodal feature extraction include cross-modal transformers that process multiple modalities through shared or interacting attention mechanisms, multimodal autoencoders that learn joint representations by reconstructing all modalities, and contrastive methods that learn alignments between different modalities. Models like CLIP and DALL·E demonstrate the power of cross-modal feature extraction, learning aligned representations of images and text that enable remarkable capabilities like zero-shot classification and text-guided image generation.

The applications of multimodal feature extraction span numerous domains. In healthcare, it integrates medical images, clinical notes, and lab results for more comprehensive patient understanding. In autonomous systems, it fuses camera, LiDAR, and radar data for robust perception. In e-commerce, it combines product images, descriptions, and reviews for better recommendation. In education, it processes lecture videos, slides, and transcripts for enhanced learning analytics.

Cross-modal retrieval represents a particularly valuable application, enabling queries across modalities: finding images that match text descriptions, audio that matches visual scenes, or text that describes video content. Zero-shot learning leverages cross-modal alignment to recognize concepts for which no labeled training examples were provided, for instance by comparing an image embedding with the text embeddings of candidate class names. Multimodal reasoning combines information from different modalities to answer questions or make decisions that require integrative understanding.
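Once two modalities share an embedding space, both retrieval and zero-shot classification reduce to cosine-similarity ranking. The sketch below assumes the embeddings were already produced by an aligned encoder pair (such as CLIP's image and text towers, not shown). Class prompts like "a photo of a {label}" are a common convention, not a requirement, and the function names are hypothetical.

```python
import numpy as np

def cosine_scores(queries, candidates):
    q = queries / np.linalg.norm(queries, axis=1, keepdims=True)
    c = candidates / np.linalg.norm(candidates, axis=1, keepdims=True)
    return q @ c.T  # (n_queries, n_candidates)

def retrieve(query_emb, gallery_emb, top_k=3):
    """Indices of the top_k gallery items (e.g. image embeddings) most
    similar to each query (e.g. a text embedding), best first."""
    scores = cosine_scores(query_emb, gallery_emb)
    return np.argsort(-scores, axis=1)[:, :top_k]

def zero_shot_classify(image_emb, class_prompt_emb):
    """Assign each image to the class whose text-prompt embedding is most
    similar; no classifier is trained on these classes."""
    return cosine_scores(image_emb, class_prompt_emb).argmax(axis=1)
```

At scale, the brute-force `q @ c.T` would typically be replaced by an approximate nearest-neighbor index.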

Federated and Privacy-Preserving Feature Extraction

Federated learning has emerged as a crucial approach for feature extraction from distributed data sources while maintaining privacy and compliance with data regulations. Instead of centralizing data for feature learning, federated approaches train feature extraction models across distributed devices or servers, sharing only model updates rather than raw data. This enables organizations to leverage data from multiple sources (different departments, geographic locations, or even different companies) while raw data stays within each source's boundary, which helps satisfy privacy constraints and data sovereignty requirements.
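The core loop of Federated Averaging (FedAvg) is short: each client starts from the global weights, trains on its own data, and returns new weights, which the server averages in proportion to each client's dataset size. In the sketch below, a linear least-squares model stands in for a feature extractor so that the example stays small. The helper names are hypothetical, and real deployments add client sampling, communication, and failure handling.

```python
import numpy as np

def local_update(weights, X, y, lr=0.1, epochs=5):
    """Client side: a few epochs of full-batch gradient descent on a
    linear least-squares model, using only this client's data."""
    w = weights.copy()
    for _ in range(epochs):
        grad = 2 * X.T @ (X @ w - y) / len(y)
        w -= lr * grad
    return w

def fedavg_round(global_w, clients):
    """Server side: collect each client's locally trained weights and average
    them, weighted by client dataset size. The raw (X, y) arrays are only
    touched inside local_update; only weight vectors are aggregated."""
    updates = [local_update(global_w, X, y) for X, y in clients]
    sizes = np.array([len(y) for _, y in clients], dtype=float)
    return np.average(np.stack(updates), axis=0, weights=sizes)
```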

The technical challenges of federated feature extraction include handling statistical heterogeneity across data sources (non-IID data), keeping the communication of model updates efficient, and defending against attacks such as poisoned updates or attempts to infer private data from shared updates. Advanced federated learning approaches address these challenges through personalized models that adapt to local data distributions, compression techniques that reduce communication costs, and secure aggregation protocols that let the server learn only the sum of client updates, never any individual client's update.
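The key idea behind pairwise-masking secure aggregation can be shown in a few lines. Each pair of clients shares a random mask that one adds and the other subtracts, so every masked update the server receives looks like noise, yet the masks cancel exactly in the sum. This is a deliberately simplified sketch. It assumes a single shared random generator in place of the pairwise key agreement that real protocols use, it uses floating point instead of modular integer arithmetic, and it ignores client dropouts. The function names are hypothetical.

```python
import numpy as np

def masked_updates(updates, seed=0):
    """Client side (simulated): for each pair i < j, client i adds a shared
    random mask m_ij and client j subtracts it."""
    n, dim = len(updates), updates[0].shape[0]
    rng = np.random.default_rng(seed)  # stand-in for pairwise shared secrets
    masked = [u.astype(float).copy() for u in updates]
    for i in range(n):
        for j in range(i + 1, n):
            m_ij = rng.normal(scale=100.0, size=dim)
            masked[i] += m_ij
            masked[j] -= m_ij
    return masked

def server_aggregate(masked):
    """The server sees only masked vectors; the pairwise masks cancel in
    the sum, recovering the exact total of the true updates."""
    return np.sum(masked, axis=0)
```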

Privacy-preserving feature extraction extends beyond federated learning to include techniques that provide formal privacy guarantees. Differential privacy adds carefully calibrated noise to feature representations or learning processes. This bounds how much any single record can influence the output, so an individual's presence in the data, or their specific values, is difficult to infer or reconstruct. Homomorphic encryption allows computation on encrypted data, enabling feature extraction without ever decrypting sensitive information. Secure multi-party computation enables collaborative feature extraction from multiple sources while keeping each party's data private.
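A minimal instance of differential privacy applied to features is releasing an aggregate, here the sum of per-record feature vectors, with the Gaussian mechanism. Clipping each record's vector to L2 norm at most `max_norm` bounds the sensitivity: adding or removing one record changes the sum by at most `max_norm`. Noise is then calibrated with the classical bound σ = √(2 ln(1.25/δ)) · Δ / ε, which holds for 0 < ε < 1. This is a sketch for a single release. Repeated queries consume privacy budget and need composition accounting, which is not shown, and the function names are hypothetical.

```python
import numpy as np

def clip_rows(X, max_norm):
    """Scale each record's feature vector down so its L2 norm is <= max_norm."""
    norms = np.linalg.norm(X, axis=1, keepdims=True)
    factors = np.minimum(1.0, max_norm / np.maximum(norms, 1e-12))
    return X * factors

def dp_feature_sum(X, max_norm, epsilon, delta, rng=None):
    """(epsilon, delta)-DP release of the sum of clipped feature vectors via
    the Gaussian mechanism (add/remove-one-record neighboring datasets)."""
    if not 0 < epsilon < 1:
        raise ValueError("this noise calibration assumes 0 < epsilon < 1")
    if rng is None:
        rng = np.random.default_rng()
    sensitivity = max_norm  # max L2 change in the sum from one record
    sigma = np.sqrt(2 * np.log(1.25 / delta)) * sensitivity / epsilon
    clipped_sum = clip_rows(X, max_norm).sum(axis=0)
    return clipped_sum + rng.normal(scale=sigma, size=clipped_sum.shape)
```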

The practical applications of these privacy-preserving approaches are particularly important in regulated industries like healthcare, finance, and government. Hospitals can collaborate on medical image feature extraction without sharing patient data. Financial institutions can develop better fraud detection features without exposing transaction details. Government agencies can analyze cross-jurisdictional data while maintaining citizen privacy. As data privacy regulations continue to evolve and strengthen, these approaches will become increasingly essential for responsible feature extraction.

Implementation Best Practices and Considerations

Scalability and Computational Efficiency

As feature extraction techniques grow more sophisticated, managing their computational demands becomes increasingly important for practical deployment. The computational cost of feature extraction can easily dominate overall model training time, particularly for deep learning approaches applied to large datasets. Effective management of these costs requires attention to algorithmic efficiency, implementation optimizations, and resource management.

Algorithmic efficiency begins with selecting appropriate feature extraction methods for the problem scale and constraints. For large-scale problems, approximate methods that provide good-enough features with reduced computation may be preferable to exact but expensive approaches. Progressive refinement strategies start with simple features and gradually introduce more complex ones as needed, avoiding unnecessary computation for easy cases. Transfer learning leverages features pre-trained on similar problems or domains, dramatically reducing the computation required for feature learning.
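Random Fourier features are one well-known example of such an approximate method. Instead of forming an n × n RBF kernel matrix, each input is mapped to D random cosine features whose inner products approximate the kernel. This yields an explicit feature matrix that costs O(nD) to compute, on which any linear model can then be trained. The sketch assumes the RBF kernel k(x, y) = exp(−γ‖x − y‖²). The exact `rbf_kernel` helper is included only to check the approximation.

```python
import numpy as np

def random_fourier_features(X, n_components, gamma, seed=0):
    """Map X (n, d) to (n, n_components) features whose inner products
    approximate the RBF kernel exp(-gamma * ||x - y||^2)."""
    rng = np.random.default_rng(seed)
    d = X.shape[1]
    # Frequencies are drawn from the RBF kernel's spectral density,
    # a Gaussian with variance 2 * gamma per dimension.
    W = rng.normal(scale=np.sqrt(2 * gamma), size=(d, n_components))
    b = rng.uniform(0, 2 * np.pi, size=n_components)
    return np.sqrt(2.0 / n_components) * np.cos(X @ W + b)

def rbf_kernel(X, Y, gamma):
    """Exact kernel matrix, used here only to measure approximation error."""
    sq = ((X[:, None, :] - Y[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-gamma * sq)
```

Increasing `n_components` trades computation for accuracy: the approximation error shrinks roughly in proportion to 1/√D.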

Implementation optimizations leverage modern hardware and software techniques to accelerate feature extraction. GPU acceleration provides massive parallelism for deep learning feature extraction, with specialized libraries like CuPy and RAPIDS optimizing common operations. Distributed computing frameworks like Spark and Dask parallelize feature extraction across clusters, handling datasets too large for single machines. Just-in-time compilers such as Numba, and tensor compilers such as Apache TVM, can significantly speed up custom feature extraction code.
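Distributed aggregations work because many feature statistics can be computed per partition and then merged without revisiting raw data. The sketch below shows that map-then-combine pattern for mean and variance in plain NumPy, using the pairwise merge formula attributed to Chan, Golub, and LeVeque. It illustrates the principle only. It does not use Spark's or Dask's APIs, and the function names are hypothetical.

```python
from functools import reduce

import numpy as np

def partial_stats(chunk):
    """Per-partition summary: (count, mean, sum of squared deviations)."""
    chunk = np.asarray(chunk, dtype=float)
    mean = chunk.mean()
    return len(chunk), mean, ((chunk - mean) ** 2).sum()

def merge_stats(a, b):
    """Combine two partition summaries into one, using only the summaries."""
    n_a, mean_a, m2_a = a
    n_b, mean_b, m2_b = b
    n = n_a + n_b
    delta = mean_b - mean_a
    mean = mean_a + delta * n_b / n
    m2 = m2_a + m2_b + delta ** 2 * n_a * n_b / n
    return n, mean, m2

def combine_partitions(partitions):
    """Map partial_stats over (non-empty) partitions, which could run in
    parallel on separate workers, then reduce pairwise. Returns
    (count, mean, population variance)."""
    n, mean, m2 = reduce(merge_stats, map(partial_stats, partitions))
    return n, mean, m2 / n
```

Because `merge_stats` is associative, the reduction can be arranged as a tree across workers rather than a single sequential pass.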

Resource management strategies ensure efficient utilization of available computation. Feature caching stores computed features to avoid recomputation across experiments or during hyperparameter tuning. Incremental feature extraction updates features efficiently as new data arrives, rather than recomputing from scratch. Computational budgeting allocates resources to different feature extraction components based on their expected contribution, focusing computation where it provides the most value.
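Feature caching hinges on a cache key that changes whenever the inputs or the extraction settings change, and only then. A content hash of the feature name, its parameters, and the raw input bytes is one straightforward choice. The class below is a hypothetical in-memory sketch. A production cache would persist results, for example as Parquet files or in a feature store, and would also version the extraction code itself.

```python
import hashlib
import json

import numpy as np

class FeatureCache:
    """In-memory cache keyed by a hash of (feature name, parameters, input
    array), so re-running an experiment with identical inputs and settings
    reuses the stored result instead of recomputing it."""

    def __init__(self):
        self._store = {}
        self.misses = 0  # number of times a feature was actually computed

    @staticmethod
    def _key(name, params, data):
        h = hashlib.sha256()
        h.update(name.encode())
        h.update(json.dumps(params, sort_keys=True).encode())
        h.update(np.ascontiguousarray(data).tobytes())
        # Include shape and dtype so identical bytes with different layouts differ.
        h.update(str((data.shape, data.dtype.str)).encode())
        return h.hexdigest()

    def get_or_compute(self, name, params, data, fn):
        key = self._key(name, params, data)
        if key not in self._store:
            self.misses += 1
            self._store[key] = fn(data, **params)
        return self._store[key]
```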

Feature Storage and Management

As organizations develop increasingly sophisticated feature extraction pipelines, effectively storing, versioning, and managing the resulting features becomes critical for reproducibility, efficiency, and collaboration. Feature stores have emerged as specialized systems for this purpose, providing centralized repositories for features along with the metadata needed to understand their provenance, meaning, and appropriate usage.

Modern feature stores support both offline features computed in batch for training and historical analysis, and online features served with low latency for real-time prediction. They maintain point-in-time correctness, ensuring that features used for training reflect the data available at the time of each training example, preventing data leakage from the future. They provide feature discovery capabilities that help data scientists find and understand available features, reducing duplication and promoting reuse.
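Point-in-time correctness amounts to an "as-of" join. For each training example, take the most recent feature value whose timestamp is at or before the example's label time, and never a later one. The standard-library sketch below assumes each entity's feature history is a list of `(timestamp, value)` pairs sorted by time. Whether a value stamped exactly at the label time counts as available is a per-system policy; here it does. With pandas DataFrames, `pandas.merge_asof` provides the same backward-looking join.

```python
from bisect import bisect_right

def point_in_time_join(label_events, feature_history):
    """label_events: iterable of (entity, label_time) training examples.
    feature_history: dict mapping entity -> [(timestamp, value), ...] sorted
    by timestamp. Returns one feature value per event, or None when no value
    existed yet at that event's label_time."""
    rows = []
    for entity, label_time in label_events:
        history = feature_history.get(entity, [])
        times = [t for t, _ in history]
        i = bisect_right(times, label_time)  # number of entries with t <= label_time
        rows.append(history[i - 1][1] if i > 0 else None)
    return rows
```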

Feature versioning tracks how features change over time, enabling reproducibility and controlled rollout of new feature versions. Feature monitoring tracks feature quality, distribution, and relationships over time, detecting drift, anomalies, and degradation that might impact model performance. Feature access control manages permissions based on data sensitivity and user roles, ensuring compliance with privacy and security policies.
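One common drift metric for feature monitoring is the population stability index (PSI). It bins a feature using quantiles of the training-time baseline and then compares the bin proportions seen in production. The sketch below is a minimal NumPy version. The bin count and the small `eps` smoothing are assumptions, and alerting thresholds on PSI are conventions that should be tuned per feature.

```python
import numpy as np

def population_stability_index(expected, actual, n_bins=10, eps=1e-6):
    """PSI between a baseline sample ('expected') and a live sample ('actual')."""
    expected = np.asarray(expected, dtype=float)
    actual = np.asarray(actual, dtype=float)
    # Interior cut points at the baseline's quantiles; the outer bins are
    # open-ended, so live values outside the baseline range are still counted.
    cuts = np.quantile(expected, np.linspace(0, 1, n_bins + 1)[1:-1])
    e_frac = np.bincount(np.searchsorted(cuts, expected, side="right"), minlength=n_bins) / len(expected)
    a_frac = np.bincount(np.searchsorted(cuts, actual, side="right"), minlength=n_bins) / len(actual)
    e_frac, a_frac = e_frac + eps, a_frac + eps  # avoid log(0) for empty bins
    return float(np.sum((a_frac - e_frac) * np.log(a_frac / e_frac)))
```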

The implementation of effective feature management requires careful consideration of storage formats, indexing strategies, and serving architectures. Columnar storage formats like Parquet and ORC provide efficient compression and retrieval for analytical workloads. Vector databases offer optimized storage and similarity search for embedding features. Real-time serving systems ensure low-latency access to features for production inference. The choice among these options depends on the specific characteristics of the features and their usage patterns.

Integration with MLOps Pipelines

Feature extraction does not exist in isolation but rather as a critical component of end-to-end machine learning operations (MLOps). Effective integration of feature extraction with MLOps practices ensures that features are reliably produced, properly validated, and efficiently served throughout the machine learning lifecycle.

Automated feature pipelines transform raw data into features through reproducible, scheduled, or triggered workflows. These pipelines incorporate data validation to ensure input data quality, feature validation to check that extracted features meet expected distributions and constraints, and monitoring to track pipeline health and feature quality over time. Orchestration tools like Airflow, Prefect, and Kubeflow Pipelines manage the execution and dependencies of these feature extraction workflows.
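A feature-validation step can be as simple as checking each computed column against a declared spec before the pipeline publishes it. Dedicated tools such as Great Expectations or TensorFlow Data Validation provide richer versions of this. The standard-library sketch below does not reflect their APIs, and its spec keys (`min`, `max`, `max_null_rate`, `expected_mean_range`) are hypothetical.

```python
import math

def _is_missing(v):
    return v is None or (isinstance(v, float) and math.isnan(v))

def validate_feature_batch(values, spec):
    """Check one feature column against its spec. Returns a list of
    human-readable violations; an empty list means the batch passes."""
    problems = []
    present = [v for v in values if not _is_missing(v)]
    null_rate = 1 - len(present) / len(values) if values else 0.0
    if null_rate > spec["max_null_rate"]:
        problems.append(f"null rate {null_rate:.2%} exceeds {spec['max_null_rate']:.2%}")
    out_of_range = [v for v in present if not spec["min"] <= v <= spec["max"]]
    if out_of_range:
        problems.append(f"{len(out_of_range)} value(s) outside [{spec['min']}, {spec['max']}]")
    if present:
        mean = sum(present) / len(present)
        lo, hi = spec["expected_mean_range"]
        if not lo <= mean <= hi:
            problems.append(f"mean {mean:.3f} outside expected range [{lo}, {hi}]")
    return problems
```

An orchestrated pipeline would typically fail the task, or quarantine the batch, when this list is non-empty.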

Feature testing verifies that feature extraction code produces correct, consistent results across different environments and data distributions. Unit tests validate individual feature transformations. Integration tests verify that features work correctly with models and other system components. Property-based tests check that features maintain important mathematical properties across diverse inputs. Comprehensive testing prevents feature-related failures in production and facilitates confident iteration on feature extraction approaches.
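A property-based test checks invariants over many generated inputs rather than a few hand-picked cases. The hand-rolled sketch below tests a min-max scaling feature: outputs must lie in [0, 1], preserve input order, and be unchanged when the input is shifted. Libraries such as Hypothesis automate input generation and shrink failing cases to minimal examples. This version uses only the standard library, and its function names are hypothetical.

```python
import math
import random

def min_max_scale(xs):
    """Feature transformation under test: rescale values to [0, 1]."""
    lo, hi = min(xs), max(xs)
    if hi == lo:
        return [0.0 for _ in xs]  # constant input: define the output as all zeros
    return [(x - lo) / (hi - lo) for x in xs]

def check_min_max_properties(trials=500, seed=0):
    """Raise AssertionError if any generated input violates a property."""
    rng = random.Random(seed)
    for _ in range(trials):
        xs = [rng.uniform(-1e3, 1e3) for _ in range(rng.randint(1, 50))]
        ys = min_max_scale(xs)
        # Property 1: every output lies in [0, 1].
        assert all(0.0 <= y <= 1.0 for y in ys)
        # Property 2: order is preserved (monotone non-decreasing).
        order = sorted(range(len(xs)), key=xs.__getitem__)
        assert all(ys[i] <= ys[j] for i, j in zip(order, order[1:]))
        # Property 3: shifting the input leaves the output unchanged.
        c = rng.uniform(-100, 100)
        shifted = min_max_scale([x + c for x in xs])
        assert all(math.isclose(a, b, abs_tol=1e-6) for a, b in zip(ys, shifted))
```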

Continuous integration and deployment for features extends software engineering practices to feature extraction code. Version control tracks changes to feature definitions and extraction logic. Automated testing runs feature tests on each change, preventing regressions. Staged deployment rolls out new feature versions gradually, monitoring for issues before full promotion. Feature flags enable controlled experimentation with new features, measuring their impact before committing to permanent adoption.
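Staged rollouts and feature flags usually rely on deterministic bucketing. Hashing the entity ID together with the flag name assigns each entity to a stable bucket, so raising the rollout percentage only adds entities to the new feature version and never moves anyone back. The sketch below is a hypothetical illustration: the flag name, the version labels, and the 10,000-bucket granularity are assumptions, not any particular feature-flag product's behavior.

```python
import hashlib

BUCKETS = 10_000

def rollout_bucket(entity_id, flag_name):
    """Deterministically map an entity to a bucket in [0, BUCKETS) for a flag.
    Salting with the flag name decorrelates assignments across experiments."""
    digest = hashlib.sha256(f"{flag_name}:{entity_id}".encode()).digest()
    return int.from_bytes(digest[:8], "big") % BUCKETS

def feature_version_for(entity_id, flag_name, rollout_fraction):
    """Serve the new feature version ('v2') to roughly rollout_fraction of
    entities; everyone else keeps the current version ('v1')."""
    return "v2" if rollout_bucket(entity_id, flag_name) < rollout_fraction * BUCKETS else "v1"
```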

Conclusion: The Future of Feature Extraction

The field of feature extraction stands at an exciting inflection point, with AI-powered techniques transforming it from a manual art to an automated science. The trends outlined in this guide—increasing automation, deeper integration with machine learning, expanding capability across data types, and growing emphasis on interpretability and efficiency—point toward a future where feature extraction becomes increasingly powerful, accessible, and integral to successful AI applications.

Looking ahead, several developments seem likely to shape the next evolution of feature extraction. Causal feature learning will move beyond correlation to discover features that capture underlying causal relationships, enabling more robust predictions and actionable insights. Meta-learning approaches will adapt feature extraction strategies to new domains and tasks with minimal examples, dramatically reducing the time and expertise required for effective feature engineering. Neuro-symbolic integration will combine the pattern recognition power of neural networks with the reasoning capabilities of symbolic AI, producing features that are both data-driven and semantically grounded.

The most significant shift may be toward continuous feature learning systems that constantly adapt and improve their feature representations from streaming data, maintaining relevance as distributions change and new patterns emerge. Combined with automated monitoring and management, this will enable feature extraction systems that not only start strong but get better over time, learning from their own deployment and the evolving data environment.

For organizations seeking to leverage these advancements, the path forward involves building feature extraction capabilities that balance sophistication with practicality. This means investing in the tools and infrastructure needed for automated feature engineering while maintaining the domain understanding and critical thinking required to guide and validate these systems. It means embracing AI-powered feature extraction not as a replacement for human expertise but as an augmentation that allows data scientists to focus on higher-level challenges.

The organizations that master modern feature extraction will gain significant competitive advantages through faster model development, improved predictive performance, and the ability to extract value from increasingly complex and diverse data sources. As AI continues to transform industries and functions, sophisticated feature extraction will remain the essential bridge between raw data and actionable intelligence, turning information into insight and data into decisions.