Master real-world data cleaning with our complete guide. Learn practical techniques for handling missing data, outliers, duplicates, and structural errors to build reliable machine learning models that deliver accurate results

Introduction: The Critical Role of Data Cleaning in Machine Learning Success

Data cleaning represents the unsung hero of successful machine learning projects—the meticulous, often tedious process that separates high-performing models from failed experiments. In the real world, data cleaning consumes approximately 60-80% of a data scientist’s time, not because it’s inefficient, but because it’s fundamentally necessary. The principle of “garbage in, garbage out” has never been more relevant than in the age of machine learning, where even sophisticated algorithms cannot overcome fundamentally flawed input data. Data cleaning is the process of detecting, diagnosing, and rectifying errors in datasets to ensure they accurately represent the real-world phenomena they’re meant to capture.

The consequences of neglecting proper data cleaning are both severe and well-documented. A famous study by IBM estimated that poor data quality costs the U.S. economy approximately $3.1 trillion annually, while in machine learning specifically, research from CrowdFlower found that data scientists spend 60% of their time cleaning and organizing data. More critically, models built on unclean data can produce dangerously misleading results—from financial models that underestimate risk to healthcare algorithms that misdiagnose conditions to recommendation systems that reinforce harmful biases. These aren’t theoretical concerns; they’re real-world failures that stem from inadequate attention to data cleaning fundamentals.

This comprehensive guide explores practical, real-world data cleaning techniques that prevent model failures before they occur. We’ll move beyond theoretical concepts to address the messy reality of working with actual datasets—complete with missing values, inconsistent formatting, duplicate records, and structural errors that plague real-world data collection. Whether you’re working with customer transaction data, sensor readings, medical records, or any other real-world dataset, mastering these data cleaning techniques will transform your modeling outcomes from unpredictable to reliable.

Understanding Data Quality Dimensions: The Foundation of Effective Cleaning

The Six Core Dimensions of Data Quality

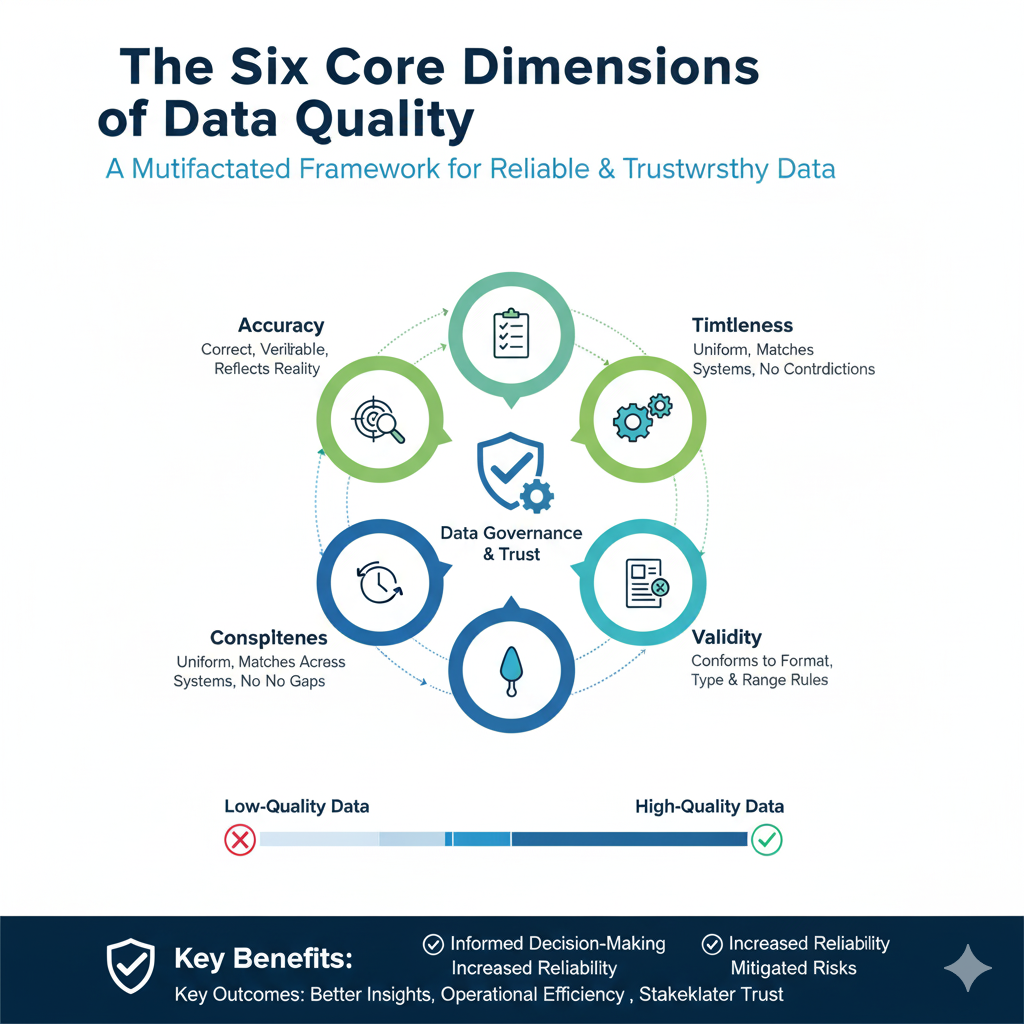

Effective data cleaning begins with understanding what constitutes “clean” data across multiple dimensions. Each dimension represents a different aspect of data quality that must be addressed systematically.

Completeness refers to the presence of expected values across all records and fields. In real-world datasets, missing data rarely occurs randomly—it often follows patterns that can introduce bias if not handled properly. For example, higher-income customers might be less likely to disclose salary information, or certain sensors might fail under specific environmental conditions. Assessing completeness involves not just counting missing values but understanding their distribution and potential causes. Effective data cleaning for completeness requires distinguishing between data that is missing completely at random, missing at random (dependent on observed variables), or missing not at random (dependent on unobserved variables), as each scenario demands different handling strategies.

Accuracy measures how closely data values reflect the real-world entities or events they represent. Accuracy issues often stem from measurement errors, data entry mistakes, or system failures. For instance, a temperature sensor might drift out of calibration over time, or a human data entry clerk might transpose digits in a phone number. Real-world data cleaning for accuracy requires domain knowledge to identify implausible values—knowing that human body temperature doesn’t reach 200°F or that customer ages typically fall between 0 and 120 years. Accuracy validation often involves cross-referencing with trusted external sources or implementing validation rules that flag values outside expected ranges.

Consistency ensures that data doesn’t contain contradictions within the same dataset or across related datasets. Real-world data often suffers from consistency issues due to system integrations, schema changes over time, or different collection standards across departments. Examples include customer records where the same person appears with different spellings (“Jon” vs “John”), product codes that follow different formatting conventions, or date formats that mix MM/DD/YYYY and DD/MM/YYYY. Data cleaning for consistency requires standardizing formats, resolving conflicting information, and establishing single sources of truth for critical entities.

Timeliness reflects whether data is current enough for its intended use. In real-time applications like fraud detection or stock trading, data that’s even minutes old might be useless, while historical analysis might tolerate much older data. Timeliness issues often arise from batch processing delays, time zone confusions, or system synchronization problems. Effective data cleaning involves assessing whether data freshness matches analytical requirements and implementing processes to flag stale data that could lead to outdated insights.

Validity concerns whether data conforms to defined business rules and syntactic rules. This includes checking that values fall within acceptable ranges, follow required formats, and comply with defined constraints. Real-world examples include email addresses without “@” symbols, percentages that exceed 100%, or categorical variables containing undefined categories. Data cleaning for validity involves implementing comprehensive validation rules that catch violations before they corrupt analysis.

Uniqueness ensures that each real-world entity appears only once in the dataset. Duplicate records are incredibly common in real-world data due to system errors, data integration issues, or human error. These duplicates can severely skew analysis—imagine counting the same customer five times in a sales analysis. Data cleaning for uniqueness requires sophisticated matching algorithms that can identify duplicates even when records aren’t identical, accounting for typos, formatting differences, and missing information.

Assessing Your Data’s Current State

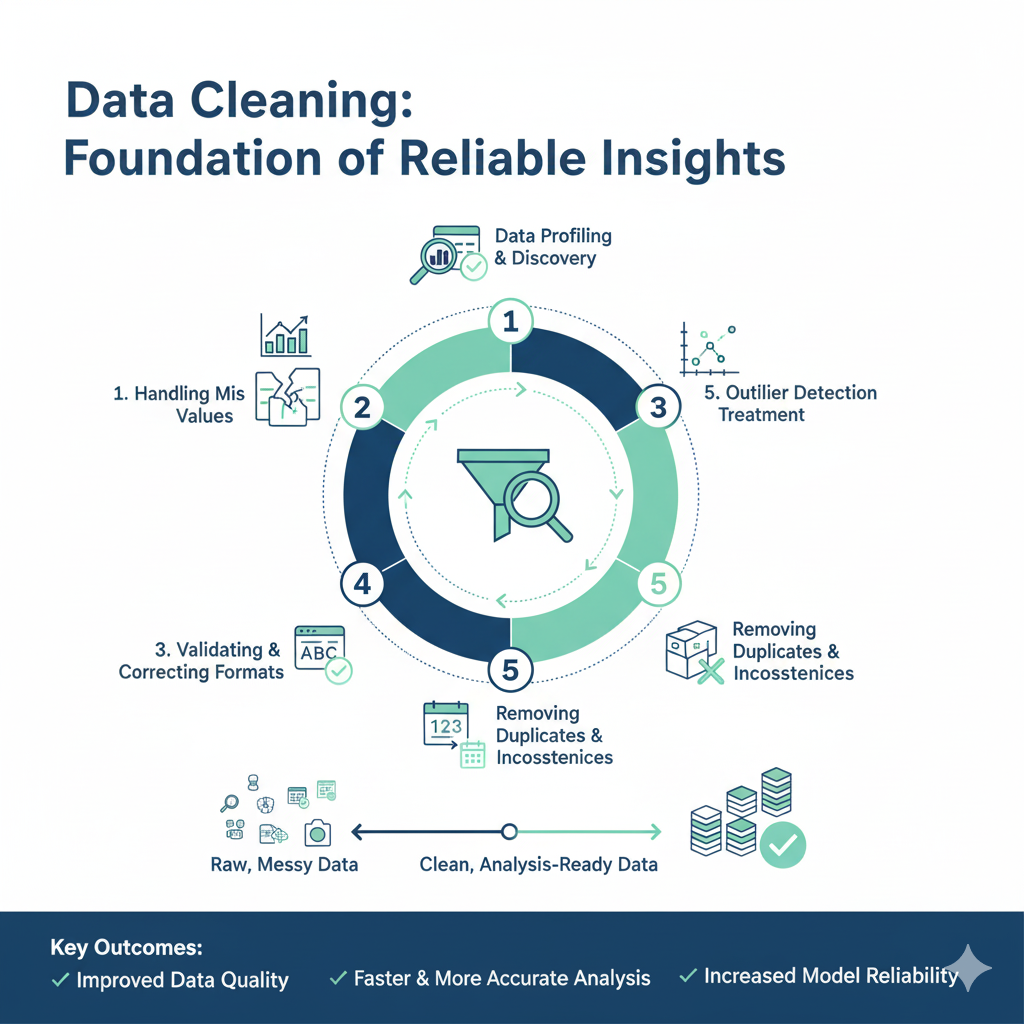

Before beginning any data cleaning process, thorough assessment provides the foundation for targeted, effective cleaning. This assessment should quantify issues across all quality dimensions and prioritize them based on their potential impact on your specific modeling objectives.

Start by profiling your data comprehensively. Calculate basic statistics for each variable—mean, median, standard deviation for numerical fields; value counts and frequencies for categorical fields. Visualize distributions through histograms, box plots, and frequency charts to identify outliers and unusual patterns. Document the percentage of missing values for each field and analyze whether missingness correlates with other variables. Create correlation matrices to understand relationships between variables and identify potential redundancy or multicollinearity issues.

Next, conduct systematic quality scoring. Develop a scoring system that weights different quality issues based on their importance to your modeling goals. For a customer churn prediction model, accuracy in subscription dates might be critical, while minor spelling variations in customer names might be less important. Document every quality issue discovered, noting its type, severity, and potential impact. This documentation becomes invaluable for tracking cleaning progress and justifying the time investment to stakeholders.

Finally, establish data quality benchmarks and monitoring. Set target quality levels for each dimension based on your model’s requirements. Implement automated checks that can run regularly to detect new quality issues as data updates—because in real-world systems, data quality isn’t a one-time achievement but an ongoing challenge. This proactive approach to data cleaning ensures that your models continue to perform well as new data flows in, rather than degrading slowly as quality issues accumulate unnoticed.

Practical Data Cleaning Techniques: Step-by-Step Implementation

Handling Missing Data: Beyond Simple Imputation

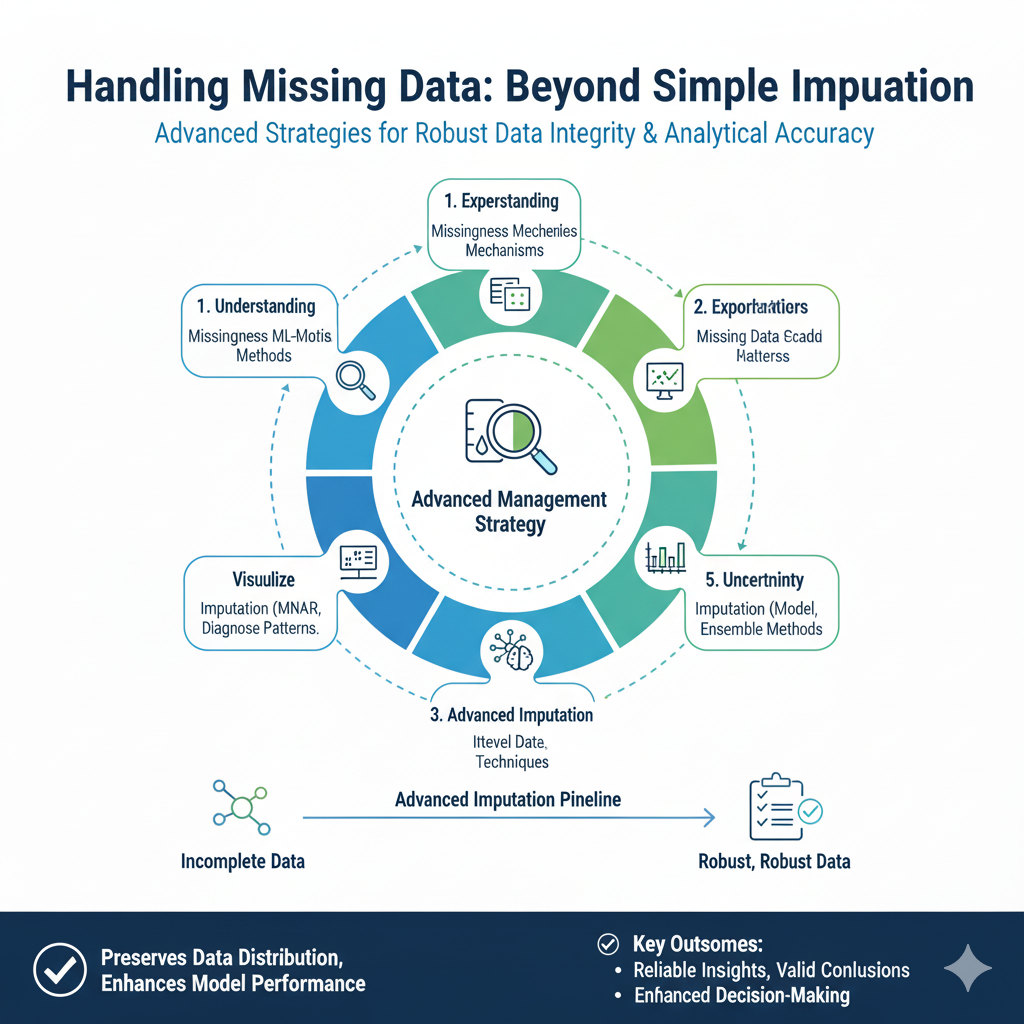

Missing data represents one of the most common challenges in real-world datasets, and effective data cleaning requires sophisticated approaches beyond simply dropping records or filling with means.

Diagnosing Missing Data Patterns begins with understanding why data is missing. Use visualization techniques like missingno matrices in Python to identify patterns in missingness. Are certain variables missing together? Does missingness correlate with other variables? For example, in customer data, wealthier clients might be less likely to disclose income, creating systematic bias if you simply drop missing values. Conduct statistical tests like Little’s MCAR test to determine if data is missing completely at random, which influences your handling strategy.

Strategic Imputation Techniques should match the missing data mechanism and your modeling goals. For data missing completely at random, mean/median imputation might suffice, though it underestimates variance. For data missing at random, consider regression imputation where you predict missing values based on other variables. Multiple imputation creates several complete datasets and combines results, preserving statistical properties better than single imputation. For categorical data, consider creating a “missing” category rather than imputing modes, as the missingness itself might be informative.

Advanced Approaches include using machine learning models for imputation. K-nearest neighbors imputation finds similar records and uses their values, while more sophisticated methods like MICE (Multiple Imputation by Chained Equations) model each variable with missing values conditional on other variables. For time-series data, interpolation methods that account for temporal patterns often work better than cross-sectional approaches. The key is documenting your imputation strategy and testing how sensitive your model is to different approaches—sometimes the best data cleaning decision is to exclude variables with excessive missingness rather than impute poorly.

Validation of Imputation is crucial. After imputation, check that distributions haven’t been unduly distorted and that relationships between variables remain plausible. Compare descriptive statistics before and after imputation, and conduct sensitivity analysis to see how different imputation methods affect your final model. This validation step ensures your data cleaning decisions don’t introduce new problems while solving existing ones.

Correcting Structural Errors: Formatting and Standardization

Structural errors—inconsistent formatting, wrong data types, and schema violations—can silently undermine analysis while being particularly frustrating to detect and fix.

Data Type Validation and Correction ensures each field contains appropriate values. Common issues include numerical values stored as strings (often with hidden characters or units), dates in inconsistent formats, and categorical variables with subtle variations. Systematic data cleaning involves converting data types explicitly rather than relying on inference, handling exceptions gracefully when conversion fails, and documenting all transformations. For example, when converting strings to numbers, extract numerical patterns while preserving information about units in separate columns when relevant.

Standardization of Formats creates consistency across your dataset. For textual data, this includes standardizing case (upper/lower/proper), removing extra whitespace, and normalizing special characters. For categorical variables, consolidate similar categories—”USA”, “U.S.A.”, “United States” should likely become a single value. Create mapping dictionaries that document these consolidations for reproducibility. For dates and times, convert to standardized formats and ensure consistent timezone handling—a critical data cleaning step for any analysis involving temporal patterns.

Addressing Schema Violations fixes structural problems in how data is organized. This might involve splitting composite fields (like “firstName lastName” into separate columns), merging related fields, or restructuring nested data like JSON objects into tabular format. In real-world data integration scenarios, you’ll often need to map similar fields from different sources to common schemas—a process that requires both technical skills and domain knowledge to ensure semantic consistency, not just syntactic matching.

Validation of Structural Cleaning should include automated checks for format compliance, data type consistency, and schema adherence. Create validation rules that can run automatically on new data, flagging records that violate standards before they corrupt analysis. This proactive data cleaning approach prevents structural errors from accumulating and makes data quality maintenance more sustainable over time.

Outlier Detection and Treatment: Separating Signal from Noise

Outliers—extreme values that deviate significantly from other observations—can either represent valuable signals or problematic noise, and effective data cleaning requires distinguishing between these cases.

Comprehensive Outlier Detection employs multiple methods to identify unusual values. Statistical methods include Z-scores for normally distributed data, IQR (Interquartile Range) methods for skewed distributions, and modified Z-scores that are more robust to outliers themselves. Visualization techniques like box plots, scatter plots, and histograms help identify outliers visually, while domain-specific knowledge helps identify values that are statistically plausible but contextually impossible—like a 200-year-old patient or a negative product quantity.

Multivariate Outlier Detection identifies unusual combinations of values rather than extreme values in single variables. Mahalanobis distance measures how far a point is from the center of a distribution, accounting for correlations between variables. Clustering-based approaches flag points that don’t belong well to any cluster, while isolation forests specifically designed for outlier detection can identify anomalies in high-dimensional data. These multivariate techniques are essential for data cleaning in complex datasets where outliers manifest as unusual patterns rather than extreme values.

Contextual Treatment of Outliers varies based on their nature and impact. True anomalies representing data errors should typically be corrected or removed. Genuine extreme values that represent rare but real events might be kept but handled with robust statistical methods. In some cases, winsorizing or trimming extreme values reduces their influence without discarding them completely. For modeling purposes, sometimes creating indicator variables for outliers preserves information while reducing their leverage on model parameters.

Documentation and Analysis of outlier treatment is crucial. Record which values were flagged as outliers, why they were considered outliers, and how they were handled. Analyze whether outliers cluster in meaningful ways—if all outliers come from a single data source or time period, this might indicate systematic issues rather than random errors. This thorough approach to data cleaning ensures outlier treatment is principled and reproducible rather than arbitrary.

Deduplication and Entity Resolution: Ensuring Uniqueness

Duplicate records plague real-world datasets and can severely distort analysis by overrepresenting certain entities or events.

Fuzzy Matching Techniques identify duplicates that aren’t exact matches. Simple string distance metrics like Levenshtein distance help detect typos and minor variations. Phonetic algorithms like Soundex match names that sound similar but are spelled differently. More advanced approaches like TF-IDF combined with cosine similarity can identify similar text even with substantial rewording. For data cleaning in real-world scenarios, fuzzy matching is essential because duplicates rarely match exactly.

Multi-field Matching Strategies combine evidence across multiple fields to identify duplicates. Rather than relying on a single identifier, consider combinations of name, address, birthdate, or other attributes. probabilistic matching assigns weights to different fields based on their discriminative power—phone numbers might be weighted higher than names for identifying individuals. This approach to data cleaning acknowledges that any single field might contain errors, but consistency across multiple fields increases confidence in match decisions.

Decision Rules and Thresholds determine when similar records should be considered duplicates. Establish match scores that combine evidence from multiple fields and set thresholds for automatic merging, manual review, or keeping separate. For critical applications, implement manual review processes for borderline cases. Document these rules thoroughly as part of your data cleaning process to ensure consistency and reproducibility.

Merge Strategies determine how to combine information from duplicate records. When duplicates contain conflicting information, you need rules for resolution—should you keep the most recent data? The most complete record? Create composite records that combine information from all sources? This aspect of data cleaning requires careful consideration of data provenance and reliability, sometimes preferring certain sources over others based on known quality issues.

Advanced Data Cleaning Scenarios: Real-World Complexities

Temporal Data Cleaning: Handling Time-Series Challenges

Time-series data presents unique data cleaning challenges due to its sequential nature and temporal dependencies.

Timestamp Alignment and Standardization ensures consistent time references across your dataset. This includes handling timezone conversions, daylight saving time transitions, and inconsistent timestamp granularity (mixing daily, hourly, and real-time data). For analysis, you often need to align data to regular intervals through aggregation or interpolation, a data cleaning process that requires careful consideration of what time intervals make sense for your analytical goals.

Missing Time-Series Imputation requires methods that preserve temporal patterns. Simple forward-fill or backward-fill might work for some applications, but interpolation methods that account for seasonality and trend often perform better. For more sophisticated data cleaning, consider time-series specific methods like STL decomposition (Seasonal-Trend decomposition using Loess) followed by imputation of the components, or machine learning approaches that use both past values and correlated series to predict missing points.

Anomaly Detection in Time-Series identifies unusual temporal patterns rather than just extreme values. Methods include tracking deviations from seasonal patterns, detecting change points where statistical properties shift, and identifying anomalous sequences rather than just individual points. This temporal data cleaning is particularly important for applications like fraud detection, predictive maintenance, and monitoring systems where the pattern of values over time matters more than individual measurements.

Text Data Cleaning: Preparing Unstructured Data

Text data requires specialized data cleaning approaches to transform unstructured content into analyzable features.

Noise Removal and Normalization eliminates irrelevant variation while preserving meaningful content. This includes removing HTML tags, punctuation, and special characters; standardizing whitespace; correcting common misspellings; and handling case sensitivity appropriately for your application. For some text analysis, you might expand contractions (“don’t” to “do not”) or lemmatize words to their base forms, though these decisions depend on your specific analytical goals.

Handling Encoding Issues addresses the frustrating but common problems of character encoding mismatches. Symptoms include replacement characters (�), mojibake (garbled text from encoding confusion), and invisible characters that affect string matching. Effective data cleaning for text involves detecting encoding problems early, converting to standard encodings like UTF-8, and handling special characters consistently.

Domain-Specific Text Cleaning tailors approaches to your particular context. Medical text might require preserving specific acronyms and terminology that would normally be removed, while social media text might need special handling of emojis, hashtags, and informal language. This contextual data cleaning recognizes that what constitutes “noise” depends entirely on what signals you’re trying to extract from the text.

Geospatial Data Cleaning: Ensuring Location Accuracy

Geospatial data introduces unique data cleaning challenges related to coordinate systems, precision, and topological consistency.

Coordinate System Standardization ensures all spatial data uses consistent reference systems. Different data sources might use different coordinate reference systems (CRS), projections, or datums, leading to misalignment when combined. Systematic data cleaning involves converting all spatial data to a common CRS appropriate for your analysis area and purpose.

Address Validation and Geocoding improves location data quality. For address data, use validation services to identify implausible addresses and geocoding services to convert addresses to precise coordinates. This data cleaning step is crucial for applications like delivery route optimization, service area analysis, or any analysis where location precision matters.

Topological Error Correction fixes issues like overlapping polygons, gaps between adjacent areas, or self-intersecting shapes. These errors can cause serious problems in spatial analysis but often require specialized GIS tools and expertise to detect and fix—a specialized form of data cleaning that’s essential for reliable spatial modeling.

Implementing Scalable Data Cleaning Pipines

Automated Quality Monitoring

Sustainable data cleaning requires automation that continuously monitors data quality rather than relying on one-off cleaning efforts.

Automated Validation Rules implement business logic that flags data quality issues as they occur. These might include range checks (“age must be between 0 and 120”), format validation (“email must contain @”), relationship checks (“end date must be after start date”), and uniqueness constraints. Implementing these rules at data entry or ingestion points prevents many quality issues from persisting in your datasets.

Data Profiling Automation regularly analyzes datasets to detect emerging quality issues. Automated profiling calculates key quality metrics—completeness rates, value distributions, pattern frequencies—and compares them to historical baselines or predefined thresholds. When metrics deviate significantly, the system flags potential quality issues for investigation. This proactive data cleaning approach catches problems early before they affect downstream analysis.

Anomaly Detection Systems use machine learning to identify unusual patterns that might indicate data quality issues. Unlike rule-based systems that catch expected problems, anomaly detection can identify novel issues—sudden changes in data distributions, unusual correlation patterns, or unexpected missingness relationships. These systems complement traditional data cleaning approaches by detecting issues you haven’t specifically anticipated.

Documentation and Reproducibility

Effective data cleaning requires thorough documentation to ensure reproducibility and facilitate collaboration.

Cleaning Logs record every transformation applied to the data, including the rationale for decisions and any assumptions made. These logs should be detailed enough that someone else could exactly reproduce your cleaning process. For automated cleaning pipelines, this means versioning cleaning code and configuration; for manual cleaning, maintaining detailed change records.

Data Lineage Tracking documents how data flows through cleaning processes and how different versions relate to each other. This is particularly important when cleaning involves multiple steps, conditional logic, or human judgment calls. Good lineage tracking helps debug issues by tracing problematic values back to their sources and understanding what transformations they’ve undergone.

Quality Metric Documentation records data quality before and after cleaning, providing evidence of cleaning effectiveness and helping prioritize future cleaning efforts. These metrics should cover all relevant quality dimensions and be tracked over time to identify trends and recurring issues.

Conclusion: Building a Culture of Data Quality

Data cleaning is not a one-time project but an ongoing practice that requires both technical solutions and organizational commitment. The most effective data cleaning happens when quality is considered at every stage of the data lifecycle—from collection and storage through analysis and decision-making.

The technical approaches covered in this guide provide a foundation for systematic data cleaning, but their effectiveness depends on integrating them into broader data governance frameworks. This includes establishing clear data quality standards, defining roles and responsibilities for quality management, and creating processes for continuous quality improvement.

Perhaps most importantly, successful data cleaning requires shifting from reactive cleaning—fixing problems after they’re discovered—to proactive quality assurance that prevents issues from entering datasets in the first place. This might involve improving data collection instruments, implementing validation at point of entry, educating data creators about quality importance, and building systems that make it easy to collect clean data.

The investment in rigorous data cleaning pays compounding returns through more accurate models, more reliable insights, and more confident decision-making. In an increasingly data-driven world, the organizations that master data cleaning will build sustainable competitive advantages, while those that neglect it will find their analytical efforts undermined by the very data meant to support them. By treating data cleaning not as a preliminary chore but as a core analytical competency, data professionals can ensure their models are built on foundations strong enough to support meaningful, reliable conclusions.