Master SQL for Data Science with 10 powerful techniques for 2025. Learn advanced window functions, query optimization, complex joins, and modern SQL patterns to transform your data analysis capabilities and accelerate your data science career.

Introduction: The Unchanging Foundation in an Evolving Data Landscape

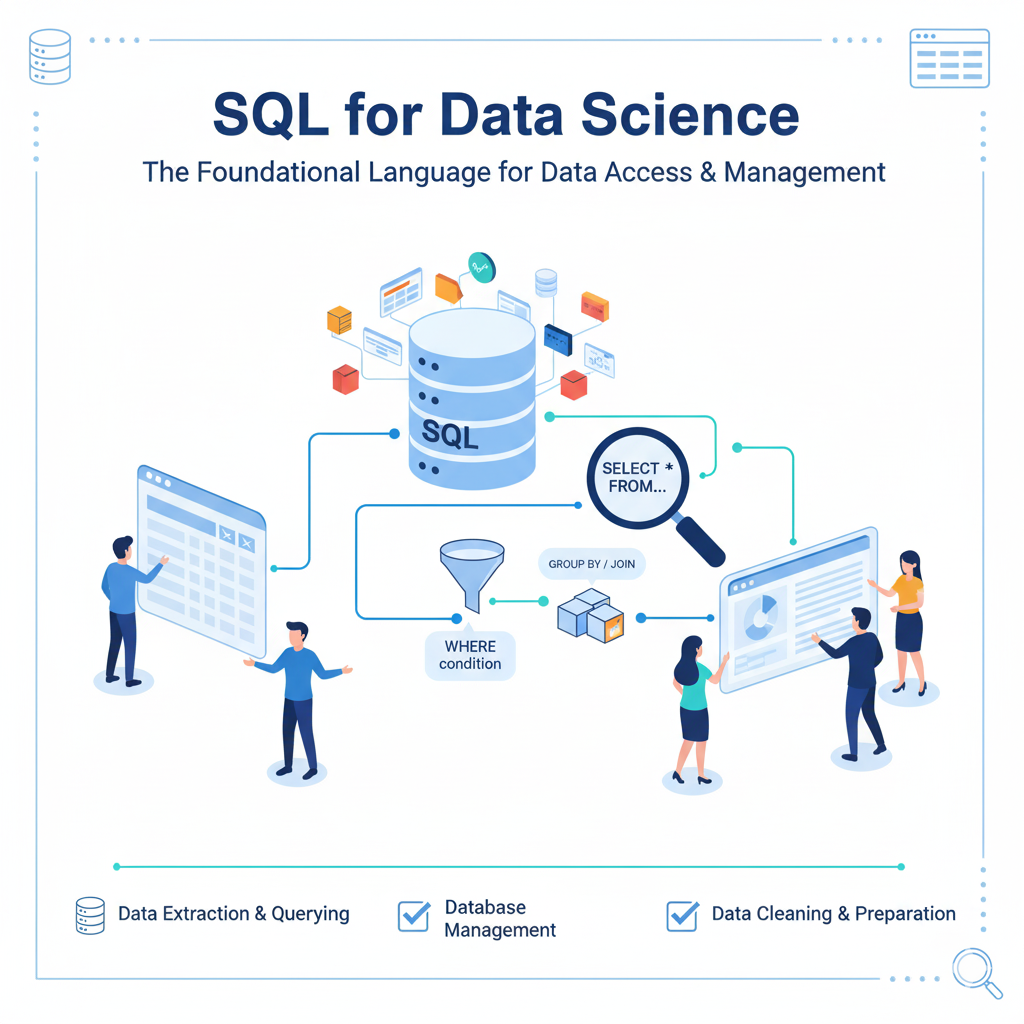

In the rapidly changing world of data science, SQL remains the bedrock on which most data analysis is built. In 2025, mastering it matters as much as ever, despite the emergence of new technologies and methodologies. The ability to efficiently extract, transform, and analyze data with structured query language continues to separate competent data scientists from exceptional ones. This guide explores ten strategies for mastering SQL for Data Science so that you can navigate complex data environments with confidence and precision.

The evolution of SQL for Data Science has been remarkable, with modern implementations offering sophisticated analytical capabilities that rival dedicated programming languages. From window functions that enable complex time-series analysis to advanced optimization techniques that handle massive datasets, the scope of SQL for Data Science has expanded dramatically. Understanding these advanced features is no longer optional for data scientists seeking to deliver maximum value in their organizations.

This article will take you through a progressive journey from fundamental concepts to advanced techniques, providing practical examples and real-world applications that demonstrate why SQL for Data Science remains an indispensable skill. Whether you’re querying traditional relational databases or modern cloud data warehouses, these strategies will transform your approach to data extraction and analysis.

1. Mastering Advanced Window Functions for Complex Analytics

Going Beyond Basic Aggregations

Window functions represent one of the most powerful features in modern SQL for Data Science, enabling sophisticated analytical operations without the need for complex self-joins or procedural code. Understanding how to leverage these functions effectively can dramatically reduce query complexity while improving performance. The key to mastering window functions in SQL for Data Science lies in recognizing patterns where you need to perform calculations across related rows while maintaining individual row details.

Consider a scenario where you need to calculate running totals, moving averages, or rank items within categories. Traditional aggregate functions would collapse these results, but window functions preserve the original granularity. For example, calculating a 7-day moving average of sales while still seeing daily figures becomes straightforward with proper window function implementation. This capability is particularly valuable in SQL for Data Science applications involving time-series analysis, customer behavior tracking, and performance monitoring.

Practical Implementation Patterns

The real power in SQL for Data Science emerges when you combine multiple window functions and understand the nuances of the OVER() clause. Frame clauses such as ROWS BETWEEN 6 PRECEDING AND CURRENT ROW give precise control over which rows participate in a calculation. That frame covers the current row plus the six before it, which is a seven-row window; writing 7 PRECEDING would cover eight rows. Meanwhile, partitioning lets you perform these calculations within logical groups, such as computing department-specific rankings in a single query.
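As a minimal sketch of framing and partitioning together, the example below runs a query through Python's built-in sqlite3 module so it can be executed as-is. The daily_sales table, its columns, and the data are hypothetical, a three-row frame stands in for a longer moving window to keep the data small, and window functions assume SQLite 3.25 or later.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical table and data, used only for illustration.
conn.execute("CREATE TABLE daily_sales (sale_date TEXT, region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO daily_sales VALUES (?, ?, ?)",
    [
        ("2025-01-01", "east", 10.0),
        ("2025-01-02", "east", 20.0),
        ("2025-01-03", "east", 30.0),
        ("2025-01-04", "east", 40.0),
        ("2025-01-01", "west", 5.0),
        ("2025-01-02", "west", 15.0),
    ],
)

rows = conn.execute(
    """
    SELECT region,
           sale_date,
           amount,
           -- 3-row moving average: current row plus the 2 preceding rows
           AVG(amount) OVER (
               PARTITION BY region ORDER BY sale_date
               ROWS BETWEEN 2 PRECEDING AND CURRENT ROW
           ) AS moving_avg_3,
           -- running total within each region
           SUM(amount) OVER (
               PARTITION BY region ORDER BY sale_date
           ) AS running_total,
           -- rank of each day by amount within its region
           RANK() OVER (
               PARTITION BY region ORDER BY amount DESC
           ) AS amount_rank
    FROM daily_sales
    ORDER BY region, sale_date
    """
).fetchall()

for row in rows:
    print(row)
```

Every input row is preserved in the output, which is the practical difference from a GROUP BY aggregate.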

Advanced applications include using window functions for gap analysis, where you identify sequences and breaks in data, or for sessionization, where you group user activities into meaningful sessions based on time thresholds. These patterns demonstrate how SQL for Data Science has evolved beyond simple data retrieval to encompass complex analytical workflows that previously required specialized statistical software.
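Sessionization can be sketched with LAG and a running sum. The example below assumes a hypothetical events table and an arbitrary 30-minute inactivity threshold. It uses SQLite date functions through Python's sqlite3 module, so the timestamp arithmetic would need adapting for other engines.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical event log; the 30-minute threshold below is an assumption.
conn.execute("CREATE TABLE events (user_id INTEGER, event_time TEXT)")
conn.executemany(
    "INSERT INTO events VALUES (?, ?)",
    [
        (1, "2025-01-01 09:00:00"),
        (1, "2025-01-01 09:10:00"),
        (1, "2025-01-01 10:30:00"),
        (1, "2025-01-01 10:35:00"),
        (2, "2025-01-01 09:05:00"),
    ],
)

sessions = conn.execute(
    """
    WITH with_prev AS (
        -- Convert timestamps to epoch seconds and fetch the previous event per user
        SELECT user_id,
               event_time,
               CAST(strftime('%s', event_time) AS INTEGER) AS ts,
               LAG(CAST(strftime('%s', event_time) AS INTEGER))
                   OVER (PARTITION BY user_id ORDER BY event_time) AS prev_ts
        FROM events
    ),
    flagged AS (
        -- A session starts at a user's first event or after more than 1800 s of inactivity
        SELECT user_id,
               event_time,
               CASE WHEN prev_ts IS NULL OR ts - prev_ts > 1800 THEN 1 ELSE 0 END
                   AS is_new_session
        FROM with_prev
    )
    -- A running sum of the start flags numbers the sessions per user
    SELECT user_id,
           event_time,
           SUM(is_new_session) OVER (PARTITION BY user_id ORDER BY event_time)
               AS session_number
    FROM flagged
    ORDER BY user_id, event_time
    """
).fetchall()

for row in sessions:
    print(row)
```

The same flag-then-cumulative-sum pattern applies to gap analysis in general: mark where a break occurs, then sum the marks to label each unbroken run.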

2. Optimizing Query Performance for Large-Scale Data

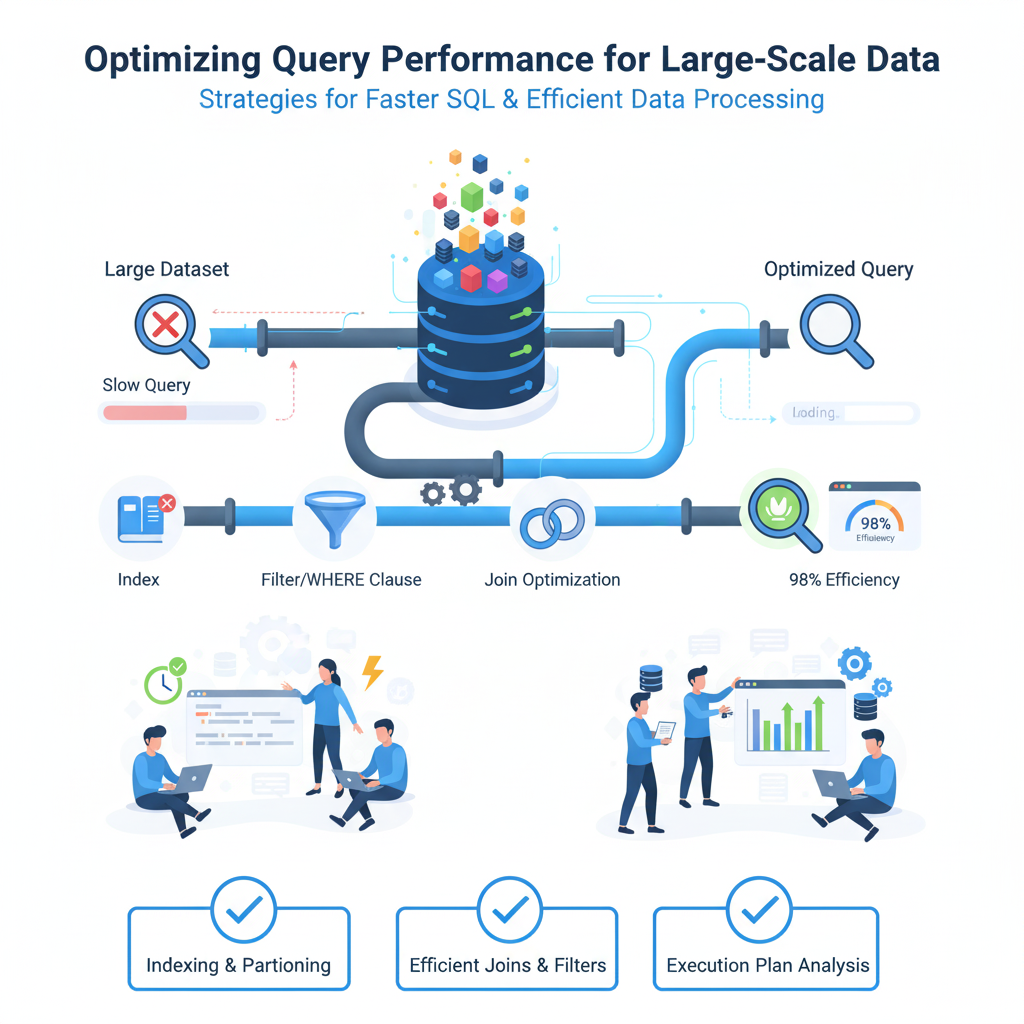

Understanding Execution Plans

In the era of big data, query performance has become a critical aspect of SQL for Data Science. The ability to write efficient queries separates professionals who can work with production datasets from those limited to toy examples. Mastering query optimization begins with understanding execution plans—the roadmap the database uses to execute your queries. Learning to read these plans in SQL for Data Science workflows enables you to identify bottlenecks, recognize missing indexes, and understand how joins are being processed.

Modern database systems provide increasingly sophisticated tools for analyzing query performance. The EXPLAIN ANALYZE command in PostgreSQL, for example, executes the query and reports the plan with both the planner’s estimates and the actual row counts and timings, revealing where the optimizer’s predictions diverged from reality. Because it really runs the statement, wrap data-modifying queries in a transaction that you roll back. Similar functionality exists across major database platforms, so the skill transfers between SQL for Data Science environments. It becomes crucial when working with large datasets, where inefficient queries can consume excessive resources or time out entirely.

Strategic Indexing and Join Optimization

Effective indexing strategy is fundamental to performant SQL for Data Science. Knowing which columns to index and which type of index to use requires insight into both your data and your query patterns. Composite indexes that cover multiple columns can dramatically accelerate queries that filter or sort on those columns, provided the query constrains the leading columns of the index. Partial indexes that include only a relevant subset of rows can reduce index size and maintenance overhead.
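The effect of a composite index on a plan can be seen in a small sketch. This one uses SQLite's EXPLAIN QUERY PLAN through Python's sqlite3 module as a stand-in for EXPLAIN ANALYZE, which SQLite does not have. The orders table, the index name, and the data are hypothetical, and the exact plan wording varies by SQLite version.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical orders table with synthetic rows.
conn.execute(
    "CREATE TABLE orders ("
    "order_id INTEGER PRIMARY KEY, customer_id INTEGER, order_date TEXT, amount REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, order_date, amount) VALUES (?, ?, ?)",
    [(i % 50, f"2025-01-{(i % 28) + 1:02d}", float(i)) for i in range(1000)],
)

query = (
    "SELECT order_date, amount FROM orders "
    "WHERE customer_id = 7 ORDER BY order_date"
)


def plan(sql):
    """Return the human-readable detail column of SQLite's query plan."""
    return [row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql)]


# Without an index, SQLite has to scan the whole table and sort the matches.
plan_before = plan(query)

# Composite index: equality column first, then the sort column.
conn.execute(
    "CREATE INDEX idx_orders_customer_date ON orders (customer_id, order_date)"
)
plan_after = plan(query)

print("before:", plan_before)
print("after: ", plan_after)
```

Putting the equality column first lets the index satisfy both the filter and the ORDER BY, which is why the sort step should disappear from the second plan.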

Join operations represent another critical optimization area in SQL for Data Science. Understanding the differences between hash joins, merge joins, and nested loops enables you to structure queries and indexes for optimal performance. The order of joins, the selectivity of join conditions, and the availability of appropriate indexes all significantly impact execution time. Advanced practitioners of SQL for Data Science learn to recognize when denormalization might improve performance or when temporary tables might break complex operations into more manageable steps.

3. Advanced JOIN Techniques for Complex Data Relationships

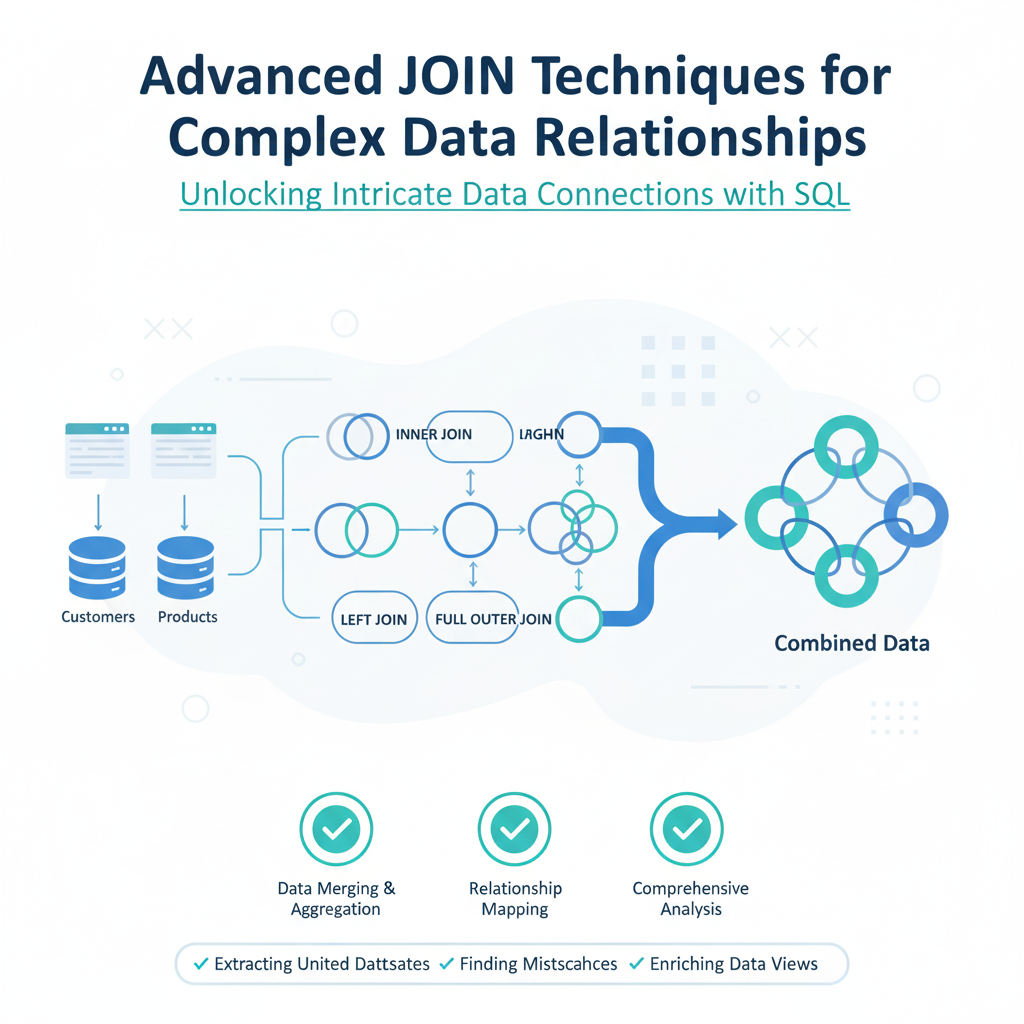

Beyond Basic Inner and Left Joins

While most data scientists master basic JOIN operations early in their SQL for Data Science journey, advanced JOIN patterns unlock significantly more powerful analytical capabilities. Self-joins, for instance, enable comparing rows within the same table—essential for analyzing sequential events, hierarchical relationships, or time-based comparisons. Understanding how to properly alias tables and establish meaningful join conditions in these scenarios separates novice users from SQL for Data Science experts.

LATERAL joins represent another sophisticated tool in the SQL for Data Science toolkit, allowing subqueries in the FROM clause to reference columns from preceding tables. This capability enables complex row-by-row processing that would otherwise require multiple queries or procedural logic. For example, you can use LATERAL joins to fetch a variable number of related records per row or to apply different filtering logic based on each row’s characteristics.

Handling Complex Hierarchies and Graphs

Real-world data often contains hierarchical relationships that challenge traditional JOIN approaches. Recursive Common Table Expressions (CTEs) provide an elegant solution for working with organizational charts, product categories, or any tree-like structure in SQL for Data Science. Mastering recursive queries enables you to flatten hierarchies, calculate aggregates across tree branches, or identify all descendants of a particular node.
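A recursive CTE that finds all descendants of a node might look like the sketch below, run through Python's sqlite3 module. The employees table and its rows are hypothetical. The anchor member selects the starting node and the recursive member repeatedly joins children onto the rows found so far.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical org chart: manager_id points at the parent row.
conn.execute(
    "CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, manager_id INTEGER)"
)
conn.executemany(
    "INSERT INTO employees VALUES (?, ?, ?)",
    [
        (1, "Ada", None),
        (2, "Ben", 1),
        (3, "Cy", 1),
        (4, "Dee", 2),
        (5, "Eli", 4),
    ],
)

descendants = conn.execute(
    """
    WITH RECURSIVE reports(id, name, depth) AS (
        -- Anchor member: the node whose subtree we want
        SELECT id, name, 0 FROM employees WHERE id = ?
        UNION ALL
        -- Recursive member: direct reports of anyone already found
        SELECT e.id, e.name, r.depth + 1
        FROM employees AS e
        JOIN reports AS r ON e.manager_id = r.id
    )
    SELECT name, depth FROM reports
    WHERE depth > 0
    ORDER BY depth, name
    """,
    (2,),
).fetchall()

print(descendants)  # [('Dee', 1), ('Eli', 2)]
```

Recursion stops on its own once a pass finds no new children. Data containing cycles would need an explicit guard such as a depth limit.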

Graph analysis represents another frontier for advanced SQL for Data Science applications. While specialized graph databases exist for highly connected data, many graph-like problems can be solved using recursive CTEs and clever JOIN strategies. Finding shortest paths, identifying clusters, or analyzing network effects become possible within SQL, eliminating the need to export data to specialized tools for many common graph problems.

4. Mastering Common Table Expressions for Readable Complex Queries

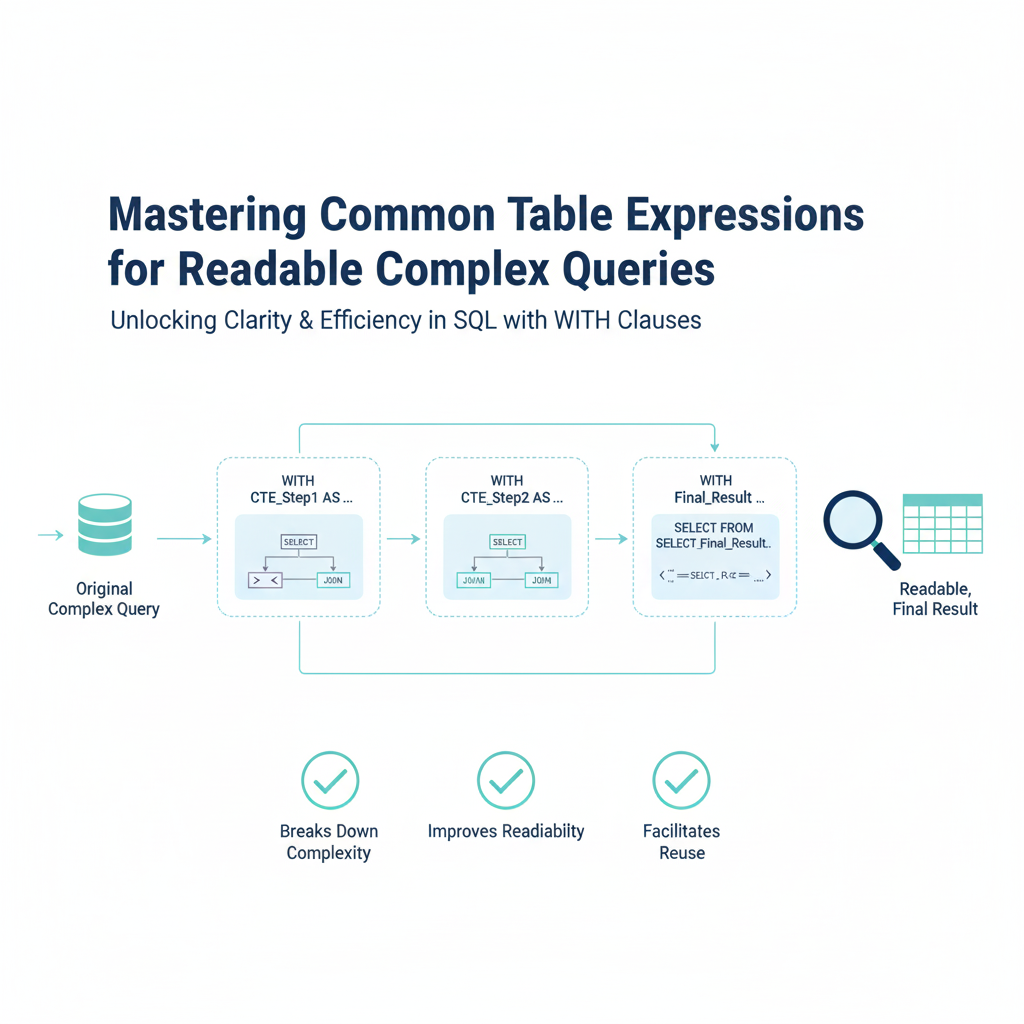

Structuring Complex Analytical Logic

Common Table Expressions have revolutionized how professionals approach SQL for Data Science by providing a way to break complex queries into logical, reusable components. The true power of CTEs extends far beyond simple organizational benefits—they enable a modular approach to query construction that mirrors best practices in software development. In SQL for Data Science applications, this modularity translates to more maintainable, debuggable, and collaborative code.

The strategic use of CTEs in SQL for Data Science allows you to build analytical pipelines where each step transforms data incrementally. This approach makes complex logic more transparent and easier to validate. For instance, you might have one CTE that filters raw data, another that aggregates it, and a final that performs calculations on the aggregated results. This stepwise transformation mirrors the mindset of functional programming and makes SQL for Data Science workflows more robust and testable.
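The filter, aggregate, then calculate pipeline described above could be sketched as follows, using Python's sqlite3 module. The orders table, the status values, and the CTE names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical orders data.
conn.execute("CREATE TABLE orders (customer TEXT, status TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [
        ("acme", "completed", 100.0),
        ("acme", "completed", 50.0),
        ("acme", "cancelled", 999.0),
        ("globex", "completed", 50.0),
    ],
)

shares = conn.execute(
    """
    WITH completed AS (
        -- Step 1: filter the raw data
        SELECT customer, amount FROM orders WHERE status = 'completed'
    ),
    per_customer AS (
        -- Step 2: aggregate
        SELECT customer, SUM(amount) AS total FROM completed GROUP BY customer
    ),
    with_share AS (
        -- Step 3: calculate on the aggregated results
        SELECT customer,
               total,
               total / (SELECT SUM(total) FROM per_customer) AS share
        FROM per_customer
    )
    SELECT customer, total, share FROM with_share ORDER BY total DESC
    """
).fetchall()

print(shares)  # [('acme', 150.0, 0.75), ('globex', 50.0, 0.25)]
```

Each step can be validated in isolation by temporarily changing the final SELECT to read from an earlier CTE.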

Advanced CTE Patterns and Performance Considerations

Recursive CTEs represent a particularly powerful pattern within SQL for Data Science, enabling solutions to problems that would otherwise require procedural programming. From generating sequences of dates to traversing hierarchical data, recursive CTEs expand the analytical possibilities of SQL. Understanding how to structure the anchor and recursive members, and when to terminate recursion, unlocks capabilities that many data scientists don’t realize are possible in SQL.

Despite their benefits, CTEs have performance characteristics worth understanding. Database optimizers handle them differently: some materialize the CTE result once, while others inline it like a view. PostgreSQL, for example, always materialized CTEs before version 12 and now inlines non-recursive, side-effect-free CTEs that are referenced only once unless you specify MATERIALIZED. Knowing when CTEs improve performance through result reuse and when they might hinder optimization is crucial for writing efficient SQL for Data Science code, particularly with large datasets where the difference can be substantial.

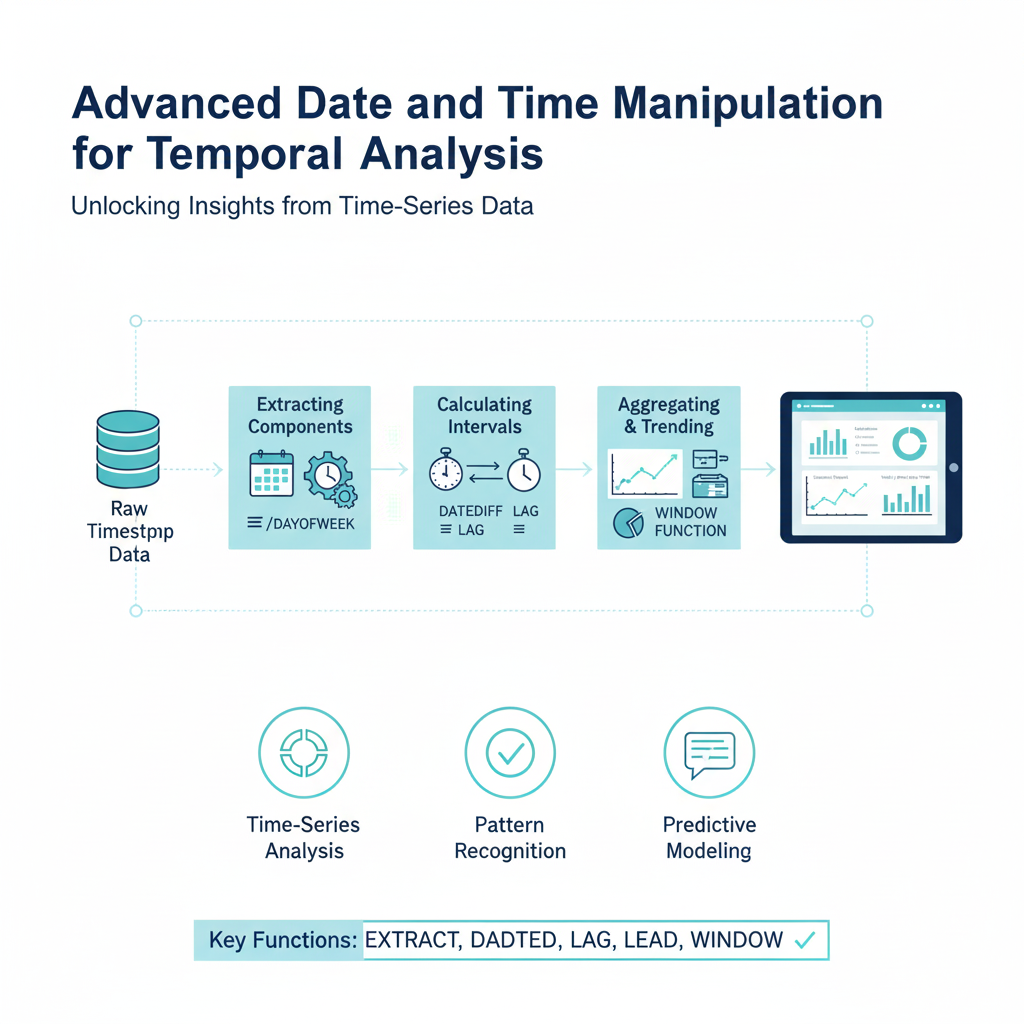

5. Advanced Date and Time Manipulation for Temporal Analysis

Sophisticated Time-Based Calculations

Date and time manipulation forms the foundation of many SQL for Data Science applications, from analyzing seasonal trends to calculating customer retention. While basic date functions are familiar to most practitioners, fluency with advanced temporal operations sets exceptional data scientists apart. Understanding how to work with time zones, extract specific date parts, and perform complex date arithmetic enables more sophisticated temporal analysis directly within SQL.

Date bucketing and binning are crucial techniques in SQL for Data Science for creating meaningful time-based aggregations. Whether you’re grouping timestamps into custom fiscal periods, aligning events to business hours, or creating week-over-week comparisons, the ability to flexibly bucket time data is essential. Advanced date functions allow for these operations without needing to export data to other tools, keeping the entire analytical workflow within the database.

Time-Series Analysis and Pattern Recognition

Modern SQL supports a substantial amount of time-series analysis directly in queries. Moving averages, period-over-period growth rates, and cumulative totals can all be expressed with window functions over time-ordered data. Combined with date bucketing, these building blocks support analytical workflows like anomaly detection, seasonality analysis, and trend identification.
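Bucketing and period-over-period growth combine naturally, as in the sketch below. It uses a hypothetical sales table and SQLite's strftime for the monthly bucket, run through Python's sqlite3 module. Other engines offer equivalents such as DATE_TRUNC.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical sales events with timestamps.
conn.execute("CREATE TABLE sales (sold_at TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [
        ("2025-01-05 10:00:00", 100.0),
        ("2025-01-20 16:30:00", 100.0),
        ("2025-02-03 09:15:00", 300.0),
        ("2025-03-15 12:00:00", 150.0),
        ("2025-03-16 12:00:00", 150.0),
    ],
)

monthly_growth = conn.execute(
    """
    WITH monthly AS (
        -- Bucket timestamps into calendar months
        SELECT strftime('%Y-%m', sold_at) AS month,
               SUM(amount) AS revenue
        FROM sales
        GROUP BY strftime('%Y-%m', sold_at)
    )
    SELECT month,
           revenue,
           -- Month-over-month growth; NULL for the first month
           (revenue - LAG(revenue) OVER (ORDER BY month))
               / LAG(revenue) OVER (ORDER BY month) AS growth
    FROM monthly
    ORDER BY month
    """
).fetchall()

for row in monthly_growth:
    print(row)
```

Custom fiscal periods follow the same shape: only the bucketing expression in the first CTE changes.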

Gap analysis represents another advanced temporal technique in SQL for Data Science. Identifying periods of activity and inactivity, finding sequences in event data, or detecting missing time periods all require sophisticated date manipulation. Solutions often involve self-joins, window functions, and creative use of date arithmetic to identify patterns that wouldn’t be visible through simple aggregation. These techniques are particularly valuable for analyzing user engagement, operational efficiency, and system monitoring data.
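One way to detect missing time periods is to generate the full calendar and keep the days with no matching row. The sketch below uses a hypothetical readings table and a recursive CTE with SQLite's date() function, run through Python's sqlite3 module. Engines with a built-in series generator would not need the recursion.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical daily readings with some days missing.
conn.execute("CREATE TABLE readings (reading_date TEXT, value REAL)")
conn.executemany(
    "INSERT INTO readings VALUES (?, ?)",
    [
        ("2025-03-01", 1.0),
        ("2025-03-02", 2.0),
        ("2025-03-04", 3.0),
        ("2025-03-07", 4.0),
    ],
)

missing_days = conn.execute(
    """
    WITH RECURSIVE calendar(day) AS (
        -- Anchor: first observed date
        SELECT MIN(reading_date) FROM readings
        UNION ALL
        -- Step forward one day until the last observed date
        SELECT date(day, '+1 day')
        FROM calendar
        WHERE day < (SELECT MAX(reading_date) FROM readings)
    )
    SELECT day
    FROM calendar
    WHERE NOT EXISTS (
        SELECT 1 FROM readings AS r WHERE r.reading_date = calendar.day
    )
    ORDER BY day
    """
).fetchall()

print(missing_days)  # [('2025-03-03',), ('2025-03-05',), ('2025-03-06',)]
```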

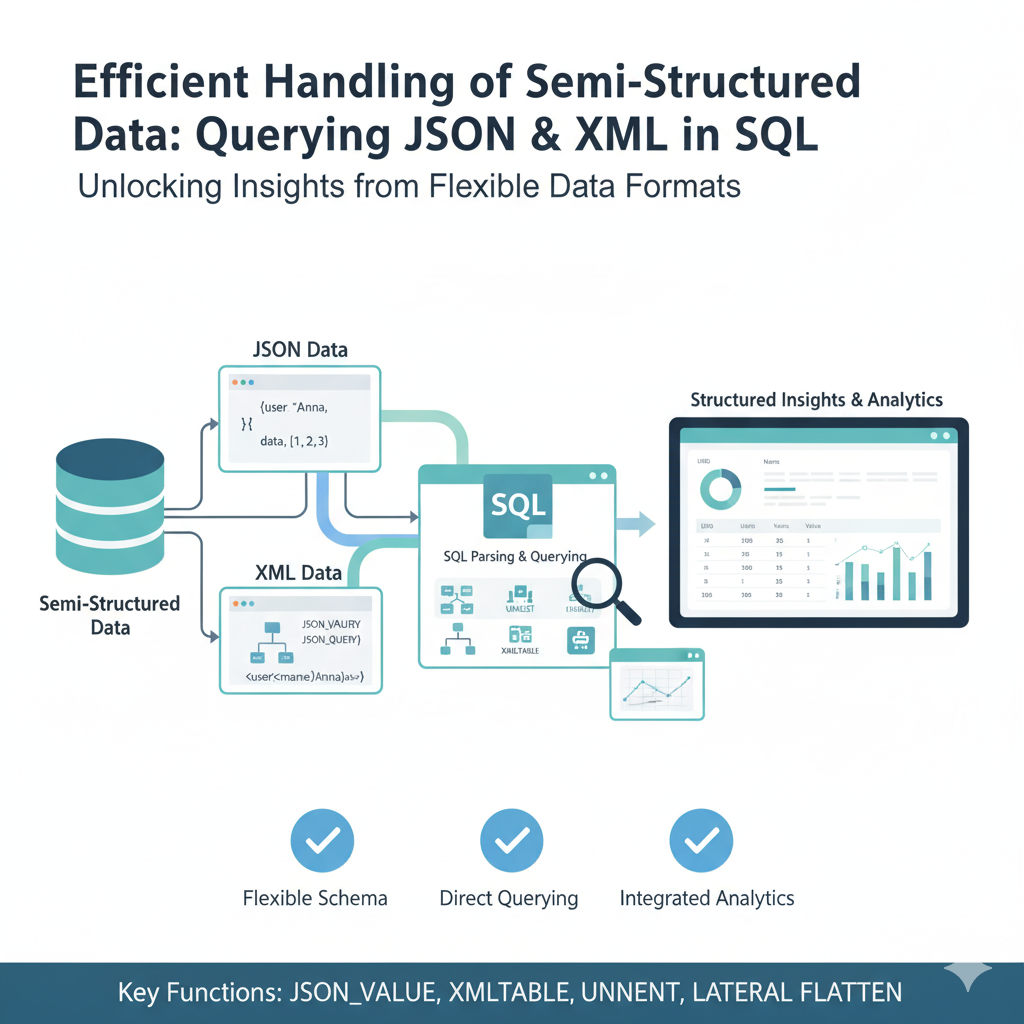

6. Efficient Handling of Semi-Structured Data

JSON and Array Processing Capabilities

The explosion of semi-structured data has transformed how SQL for Data Science professionals approach data extraction and analysis. Modern SQL implementations include robust support for JSON, arrays, and other complex data types, enabling efficient processing of data that doesn’t fit neatly into traditional relational models. Mastering these capabilities allows data scientists to work directly with API responses, application logs, and other semi-structured data sources without preliminary processing in other tools.

JSON manipulation in SQL for Data Science has evolved from simple extraction to comprehensive processing capabilities. Functions for navigating JSON paths, modifying JSON documents, and converting between JSON and relational formats enable complex operations on semi-structured data. This is particularly valuable when working with nested data structures common in modern applications, where important insights might be buried multiple levels deep in JSON documents.

Advanced Array Operations and Unnesting

Array handling represents another sophisticated aspect of modern SQL for Data Science. Functions for array creation, manipulation, and aggregation enable solutions to problems that would otherwise require procedural code. The ability to unnest arrays into relational rows, perform set operations on arrays, and aggregate values into arrays provides flexible options for data transformation and analysis.
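Path extraction and array unnesting can be sketched with SQLite's JSON functions through Python's sqlite3 module. The api_events table and payload shape are hypothetical, and the example assumes an SQLite build with JSON support, which is the default in recent versions. Function names differ across engines.

```python
import json
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical API payloads stored as JSON text.
conn.execute("CREATE TABLE api_events (id INTEGER PRIMARY KEY, payload TEXT)")
events = [
    {"user": {"id": 1, "plan": "pro"}, "tags": ["a", "b"]},
    {"user": {"id": 2, "plan": "free"}, "tags": ["b"]},
]
conn.executemany(
    "INSERT INTO api_events (payload) VALUES (?)",
    [(json.dumps(e),) for e in events],
)

# Navigate nested JSON paths into relational columns.
plans = conn.execute(
    """
    SELECT json_extract(payload, '$.user.id')   AS user_id,
           json_extract(payload, '$.user.plan') AS plan
    FROM api_events
    ORDER BY user_id
    """
).fetchall()

# Unnest the tags array into one row per element, then aggregate.
tag_counts = conn.execute(
    """
    SELECT j.value AS tag, COUNT(*) AS n
    FROM api_events AS e, json_each(e.payload, '$.tags') AS j
    GROUP BY j.value
    ORDER BY tag
    """
).fetchall()

print(plans)       # [(1, 'pro'), (2, 'free')]
print(tag_counts)  # [('a', 1), ('b', 2)]
```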

Advanced pattern matching within arrays and JSON documents extends the capabilities of SQL for Data Science even further. Regular expression support, combined with JSON path queries, enables sophisticated text mining and pattern recognition directly within SQL queries. This eliminates the need to preprocess data using external tools for many common text analysis tasks, streamlining the analytical workflow and reducing data movement.

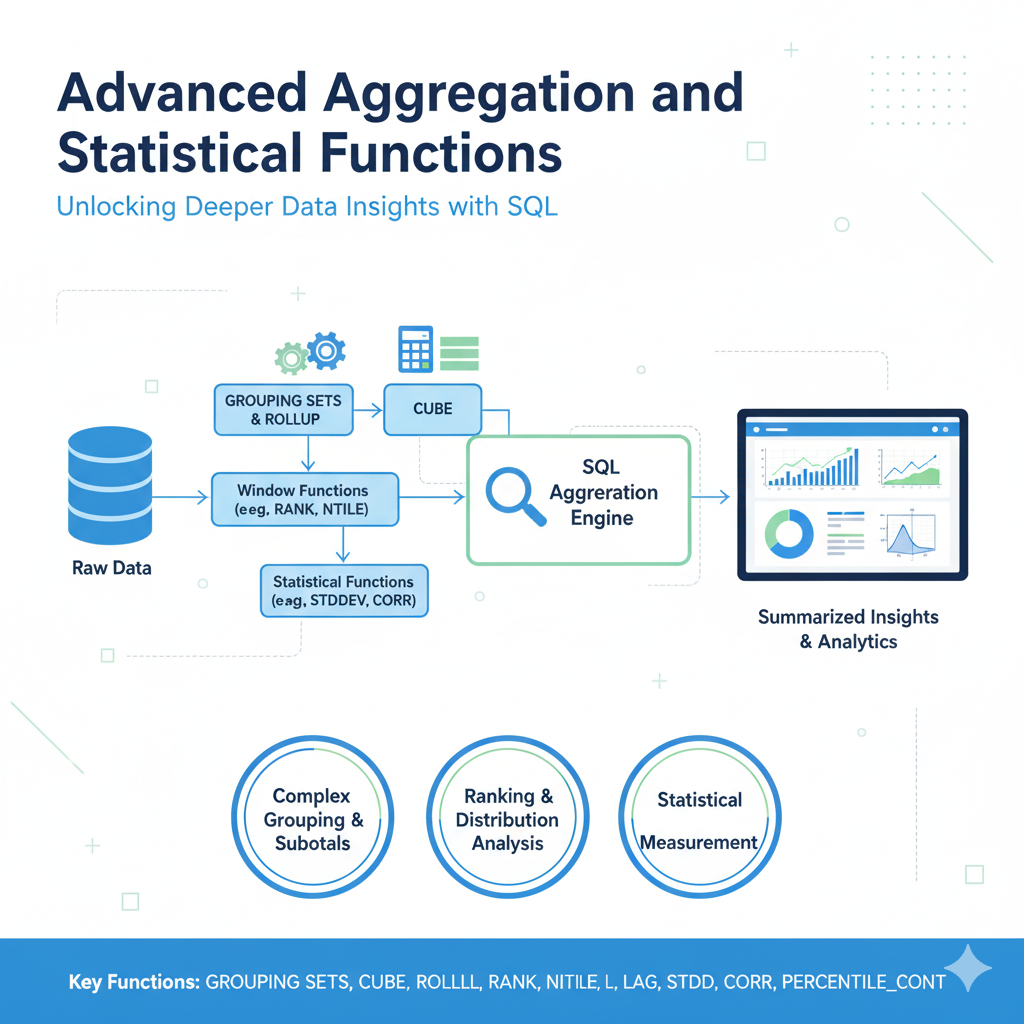

7. Advanced Aggregation and Statistical Functions

Beyond COUNT, SUM, and AVG

While basic aggregation functions are familiar to anyone working with SQL for Data Science, advanced statistical capabilities separate professional data scientists from casual users. Modern SQL implementations include functions for calculating standard deviation, variance, correlation, and regression analysis directly within the database. Understanding these functions enables sophisticated statistical analysis without exporting data to specialized tools.

Percentile and median calculations are particularly valuable additions to the SQL for Data Science toolkit. The median is a robust measure of central tendency that is often more meaningful than the average for skewed distributions, and other percentiles describe the tails, yet many practitioners don’t realize they can compute them directly in SQL. Where supported, PERCENTILE_CONT interpolates between adjacent values, while PERCENTILE_DISC returns a value that actually occurs in the data.
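To illustrate why the median matters for skewed data, the sketch below compares it with the mean on a hypothetical latencies table. SQLite, used here through Python's sqlite3 module, does not ship PERCENTILE_CONT by default, so the median is emulated with ROW_NUMBER. In PostgreSQL the equivalent would be PERCENTILE_CONT(0.5) WITHIN GROUP (ORDER BY ms).

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical skewed measurements: one outlier dominates the mean.
conn.execute("CREATE TABLE latencies (ms REAL)")
conn.executemany(
    "INSERT INTO latencies VALUES (?)",
    [(1.0,), (2.0,), (3.0,), (4.0,), (100.0,)],
)

mean_ms = conn.execute("SELECT AVG(ms) FROM latencies").fetchone()[0]

median_ms = conn.execute(
    """
    WITH ordered AS (
        SELECT ms,
               ROW_NUMBER() OVER (ORDER BY ms) AS rn,
               COUNT(*) OVER () AS n
        FROM latencies
    )
    -- Integer division picks the middle row for odd n and the two
    -- middle rows for even n; AVG then interpolates between them.
    SELECT AVG(ms)
    FROM ordered
    WHERE rn IN ((n + 1) / 2, (n + 2) / 2)
    """
).fetchone()[0]

print(mean_ms, median_ms)  # 22.0 3.0
```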

Advanced Grouping and Conditional Aggregation

GROUPING SETS, CUBE, and ROLLUP operators extend the capabilities of SQL for Data Science by enabling multiple levels of aggregation in a single query. These operations are particularly valuable for creating summary reports and dashboards where data needs to be viewed at different hierarchical levels. Understanding how to use these operators efficiently can replace multiple separate queries with a single, more performant operation.

Conditional aggregation is another powerful pattern in SQL for Data Science. Placing CASE expressions inside aggregate functions applies conditional logic during summarization. This technique is invaluable for creating multiple segmented metrics in a single pass through the data, such as calculating different sums based on customer segments, time periods, or product categories. Because every metric is computed in one scan rather than one query per segment, the performance benefit grows with large datasets.
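A minimal sketch of conditional aggregation, using a hypothetical orders table with made-up segments and an arbitrary threshold of 100 for a large order, run through Python's sqlite3 module:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical orders with a customer segment per row.
conn.execute("CREATE TABLE orders (region TEXT, segment TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [
        ("east", "enterprise", 500.0),
        ("east", "consumer", 40.0),
        ("east", "consumer", 120.0),
        ("west", "enterprise", 90.0),
        ("west", "consumer", 10.0),
    ],
)

summary = conn.execute(
    """
    SELECT region,
           SUM(CASE WHEN segment = 'enterprise' THEN amount ELSE 0.0 END)
               AS enterprise_revenue,
           SUM(CASE WHEN segment = 'consumer' THEN amount ELSE 0.0 END)
               AS consumer_revenue,
           -- COUNT ignores NULL, so only rows matching the CASE are counted
           COUNT(CASE WHEN amount >= 100 THEN 1 END) AS large_orders
    FROM orders
    GROUP BY region
    ORDER BY region
    """
).fetchall()

print(summary)  # [('east', 500.0, 160.0, 2), ('west', 90.0, 10.0, 0)]
```

All three metrics come from a single scan of the table, where separate filtered queries would each read it again.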

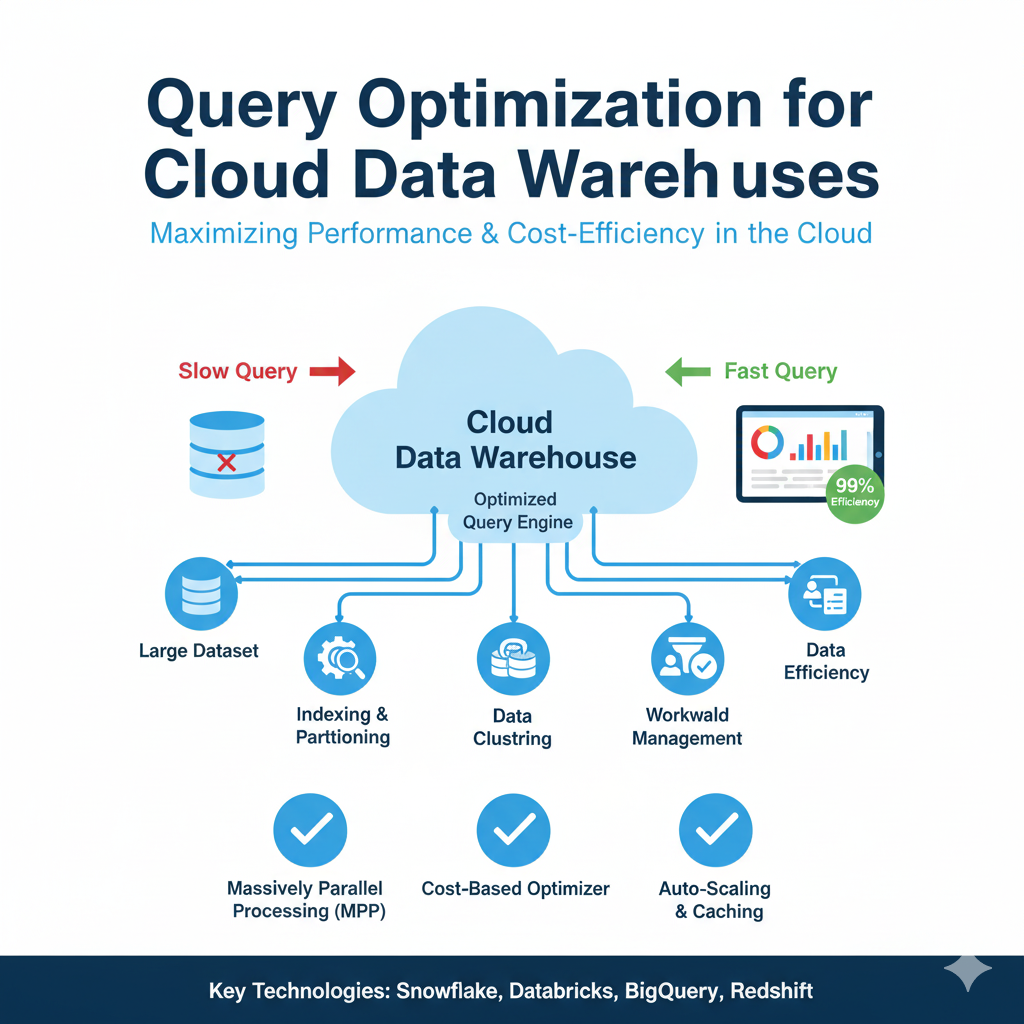

8. Query Optimization for Cloud Data Warehouses

Understanding Massively Parallel Processing Architectures

The migration to cloud data warehouses has transformed optimization strategies for SQL for Data Science. Platforms like Snowflake, BigQuery, and Redshift use massively parallel processing architectures that require different optimization approaches than traditional relational databases. Understanding these differences is crucial for writing efficient queries that leverage the full power of modern data platforms.

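Data layout is a critical concept in cloud SQL for Data Science. In Redshift, distribution keys control how rows are spread across compute nodes and sort keys control their on-disk order. Snowflake and BigQuery expose comparable controls through clustering keys and through partitioning and clustering, respectively. Distributing data well minimizes data movement during query execution, while effective sorting or clustering enables efficient range queries and merge joins by letting the engine skip irrelevant data. In MPP systems these choices often have a greater impact on performance than traditional indexing strategies.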

Leveraging Cloud-Specific Features and Services

Cloud data platforms offer unique features that can dramatically enhance SQL for Data Science workflows. User-defined functions, stored procedures, and external data access capabilities extend SQL beyond traditional boundaries. Understanding how to leverage these platform-specific features enables more sophisticated analytical workflows while maintaining performance and cost efficiency.

Cost management has become an essential consideration in cloud-based SQL for Data Science. Unlike traditional databases where hardware costs are fixed, cloud platforms charge based on usage. Understanding how query structure impacts cost, when to use cached results, and how to monitor resource consumption are crucial skills for the modern data scientist. This financial awareness represents a new dimension of query optimization in cloud environments.

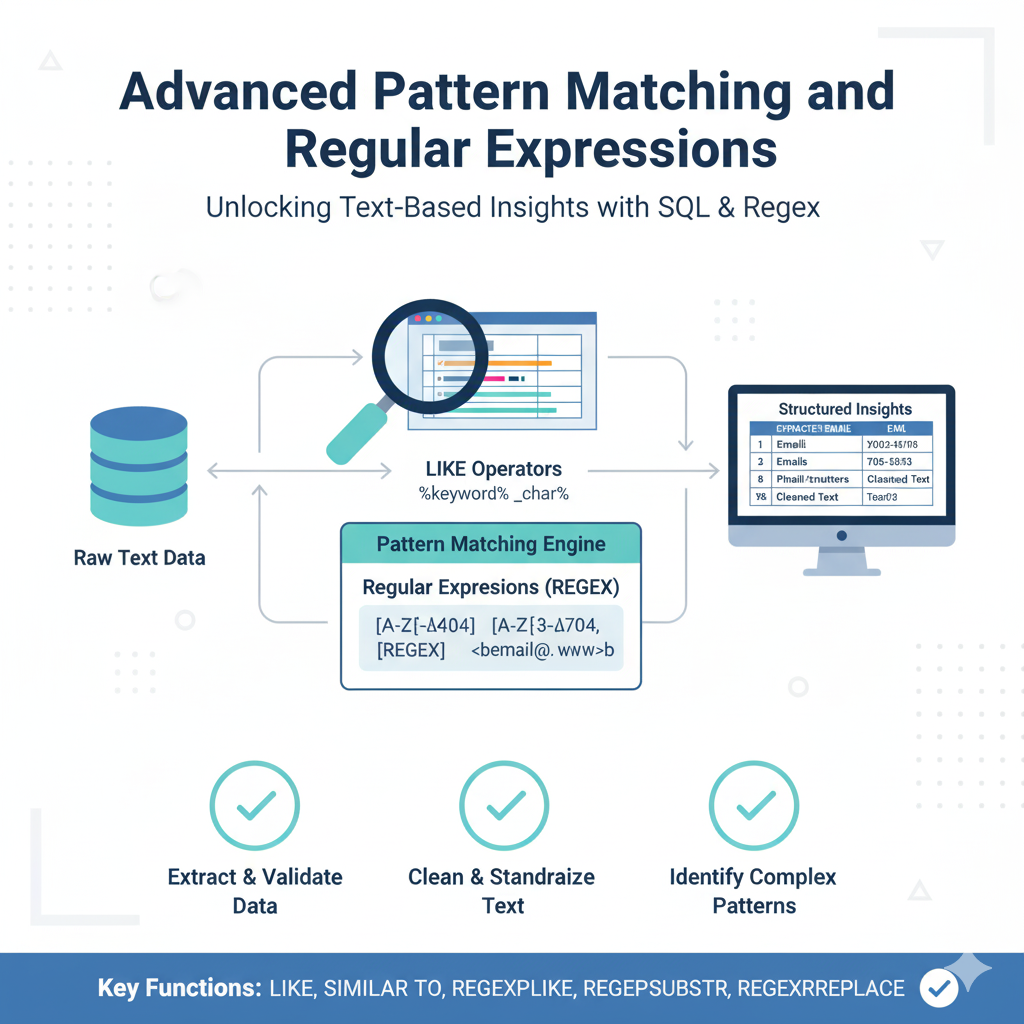

9. Advanced Pattern Matching and Regular Expressions

Sophisticated Text Analysis Capabilities

Pattern matching represents a powerful yet underutilized aspect of SQL for Data Science. Regular expression support in modern SQL implementations enables sophisticated text extraction, validation, and analysis directly within queries. This capability is particularly valuable for working with unstructured or semi-structured text data where patterns contain meaningful information.

Advanced regex functions in SQL for Data Science go beyond simple matching to include extraction, replacement, and splitting operations. The ability to extract specific patterns from text fields, replace patterns based on complex logic, or split strings using regex delimiters enables sophisticated text processing without external tools. This is invaluable for tasks like parsing log files, processing user input, or analyzing document content.

Real-World Applications and Performance Considerations

Practical applications of advanced pattern matching in SQL for Data Science include email validation, phone number formatting, data quality checks, and feature extraction from text fields. These operations can often be performed during data ingestion or transformation, improving data quality and creating analytical features without additional processing steps.
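A data quality check with a regular expression might be sketched as follows. SQLite has no built-in REGEXP implementation, so this example registers one from Python's re module as a user-defined function. The contacts table and the pattern are hypothetical, and the pattern is a deliberately loose sanity check rather than a full email validator.

```python
import re
import sqlite3


def regexp(pattern, value):
    """Back SQLite's REGEXP operator: `value REGEXP pattern` calls regexp(pattern, value)."""
    if value is None:
        return None  # propagate NULL like built-in operators do
    return 1 if re.search(pattern, value) else 0


conn = sqlite3.connect(":memory:")
conn.create_function("REGEXP", 2, regexp)

# Hypothetical contact data with some malformed entries.
conn.execute("CREATE TABLE contacts (id INTEGER PRIMARY KEY, email TEXT)")
conn.executemany(
    "INSERT INTO contacts VALUES (?, ?)",
    [
        (1, "a@example.com"),
        (2, "bad-email"),
        (3, "b@test.org"),
        (4, None),
        (5, "two@@example.com"),
    ],
)

# Loose shape check: something@something.something, no spaces or extra '@'.
EMAIL_PATTERN = r"^[^@\s]+@[^@\s]+\.[^@\s]+$"

valid = conn.execute(
    "SELECT email FROM contacts WHERE email REGEXP ? ORDER BY email",
    (EMAIL_PATTERN,),
).fetchall()

invalid_ids = conn.execute(
    "SELECT id FROM contacts "
    "WHERE email IS NULL OR NOT (email REGEXP ?) ORDER BY id",
    (EMAIL_PATTERN,),
).fetchall()

print(valid)        # [('a@example.com',), ('b@test.org',)]
print(invalid_ids)  # [(2,), (4,), (5,)]
```

Engines such as PostgreSQL provide regex matching natively, so the function registration step is specific to this SQLite setup.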

While powerful, regex operations in SQL for Data Science require careful consideration of performance implications. Complex patterns applied to large text fields can be computationally expensive. Understanding how to optimize regex patterns, when to use simpler string functions, and how to structure queries to minimize regex operations is crucial for maintaining performance while leveraging these advanced capabilities.

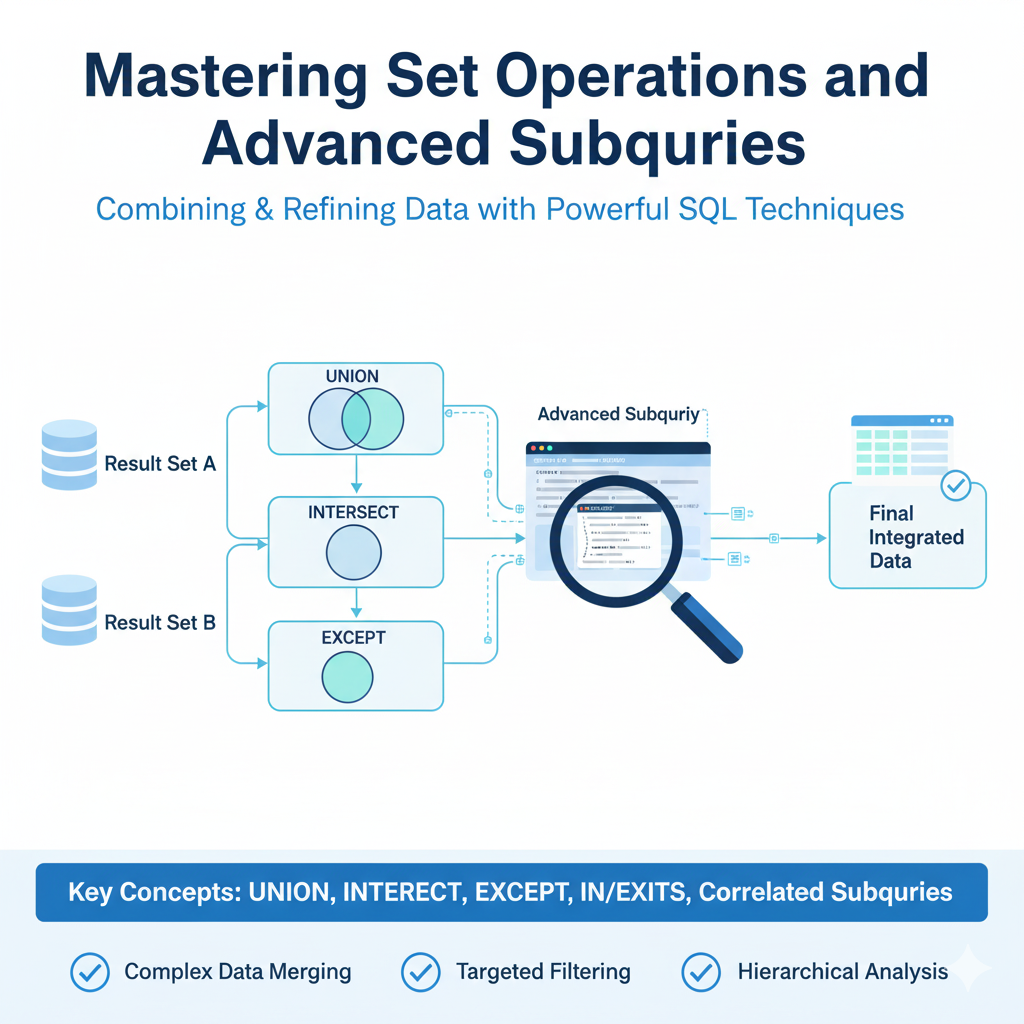

10. Mastering Set Operations and Advanced Subqueries

Sophisticated Data Comparison and Combination

Set operations represent the mathematical foundation of SQL and enable sophisticated data comparison and combination in SQL for Data Science. While UNION, INTERSECT, and EXCEPT operations are conceptually simple, their advanced application solves complex analytical problems. Understanding the performance characteristics and appropriate use cases for each operation separates novice users from SQL for Data Science experts.
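As a small illustration, the sketch below compares two hypothetical monthly activity tables with INTERSECT, EXCEPT, and UNION, run through Python's sqlite3 module. The table and column names are assumptions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical lists of active customers per month.
conn.execute("CREATE TABLE jan_active (customer TEXT)")
conn.execute("CREATE TABLE feb_active (customer TEXT)")
conn.executemany("INSERT INTO jan_active VALUES (?)", [("a",), ("b",), ("c",)])
conn.executemany("INSERT INTO feb_active VALUES (?)", [("b",), ("c",), ("d",)])

# Active in both months
retained = conn.execute(
    "SELECT customer FROM jan_active "
    "INTERSECT SELECT customer FROM feb_active ORDER BY customer"
).fetchall()

# Active in January but not February
churned = conn.execute(
    "SELECT customer FROM jan_active "
    "EXCEPT SELECT customer FROM feb_active ORDER BY customer"
).fetchall()

# Active in either month; UNION removes duplicates, UNION ALL would keep them
ever_active = conn.execute(
    "SELECT customer FROM jan_active "
    "UNION SELECT customer FROM feb_active ORDER BY customer"
).fetchall()

print(retained)     # [('b',), ('c',)]
print(churned)      # [('a',)]
print(ever_active)  # [('a',), ('b',), ('c',), ('d',)]
```

UNION ALL skips the deduplication step, so it is usually the cheaper choice when duplicates are impossible or acceptable.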

Correlated subqueries extend the capabilities of SQL for Data Science by enabling row-by-row processing with context from outer queries. While often criticized for performance implications, correlated subqueries provide elegant solutions to specific problems like existence checks, conditional logic based on related data, and complex filtering conditions. Understanding when correlated subqueries are appropriate and how to optimize them is a valuable skill.

Advanced EXISTS Patterns and Anti-Joins

The EXISTS operator is a particularly useful tool in SQL for Data Science for checking whether related rows exist without retrieving them. Unlike a JOIN, it never multiplies the outer rows when several matches exist, so no DISTINCT is needed, and it can stop at the first match; many optimizers execute it as a semi-join. It often performs as well as or better than an equivalent JOIN when you only need to verify that related records exist. Mastering EXISTS patterns enables more efficient queries for common scenarios like finding customers with purchases, users with specific activities, or products with reviews.

Anti-joins using NOT EXISTS are equally valuable for finding missing relationships or identifying outliers. These patterns are essential for data quality checks, finding inactive users, or identifying products without sales. Whether NOT EXISTS outperforms alternatives such as LEFT JOIN ... WHERE ... IS NULL depends on the optimizer, so compare execution plans on your own data. The difference can be substantial with large datasets, and proper indexing on the join columns matters either way.
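The semi-join and anti-join patterns can be sketched side by side. The example below uses hypothetical customers and orders tables through Python's sqlite3 module, and includes a plain JOIN to show the row duplication that EXISTS avoids.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical customers and their orders.
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER)")
conn.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Ana"), (2, "Bo"), (3, "Cat")])
conn.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 1), (2, 1), (3, 3)])

# Semi-join: each customer appears at most once, however many orders match.
with_orders = conn.execute(
    """
    SELECT c.name FROM customers AS c
    WHERE EXISTS (SELECT 1 FROM orders AS o WHERE o.customer_id = c.id)
    ORDER BY c.name
    """
).fetchall()

# Anti-join: customers with no orders at all.
without_orders = conn.execute(
    """
    SELECT c.name FROM customers AS c
    WHERE NOT EXISTS (SELECT 1 FROM orders AS o WHERE o.customer_id = c.id)
    ORDER BY c.name
    """
).fetchall()

# For contrast, a plain JOIN repeats Ana once per order.
joined = conn.execute(
    """
    SELECT c.name FROM customers AS c
    JOIN orders AS o ON o.customer_id = c.id
    ORDER BY c.name
    """
).fetchall()

print(with_orders)     # [('Ana',), ('Cat',)]
print(without_orders)  # [('Bo',)]
print(joined)          # [('Ana',), ('Ana',), ('Cat',)]
```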

Conclusion: Integrating Advanced SQL into Your Data Science Practice

Mastering these ten powerful approaches to SQL for Data Science transforms how you interact with data and unlocks new analytical possibilities. The progression from basic querying to advanced analytical SQL represents a journey that every serious data scientist must undertake. As data volumes grow and analytical requirements become more sophisticated, these advanced skills become increasingly valuable.

The future of SQL for Data Science continues to evolve, with new functions and capabilities being added regularly across database platforms. Staying current with these developments ensures that your skills remain relevant and that you can leverage the full power of modern data platforms. The investment in mastering advanced SQL pays dividends throughout your data science career, enabling you to work more efficiently, solve more complex problems, and deliver greater value to your organization.

Ultimately, excellence in SQL for Data Science isn’t just about knowing syntax or functions—it’s about developing a mindset for thinking in sets, understanding performance implications, and crafting elegant solutions to complex data problems. This holistic approach to SQL mastery will serve you well regardless of how the data landscape continues to evolve in the years ahead.