Discover 5 essential Intermediate Projects to advance your data science skills. From time series forecasting to transformer models, build professional competency through challenging projects that bridge fundamental concepts and advanced applications.

Introduction: Bridging the Gap Between Fundamentals and Advanced Data Science

As data enthusiasts progress beyond foundational concepts, the journey into more sophisticated territory begins with carefully selected Intermediate Projects. These projects bridge basic data manipulation and advanced machine learning applications, challenging practitioners to integrate multiple skills while solving increasingly complex problems. In 2024, the data science landscape continues to evolve, making well-designed projects more valuable than ever for developing the nuanced understanding that real-world data challenges require.

What distinguishes Intermediate Projects from their beginner counterparts is their emphasis on integrated skill application, real-world complexity, and production-oriented thinking. While beginner projects often focus on mastering individual techniques, Intermediate Projects require combining multiple methodologies, handling messier datasets, and considering deployment implications. They push practitioners beyond comfortable boundaries and introduce the kinds of challenges professionals face daily in data roles across industries.

This comprehensive guide explores five essential Intermediate Projects that systematically build upon fundamental skills while introducing advanced concepts in machine learning, data engineering, and analytical thinking. Each project addresses genuine business problems while developing specific technical competencies that mark the transition from data enthusiast to data professional.

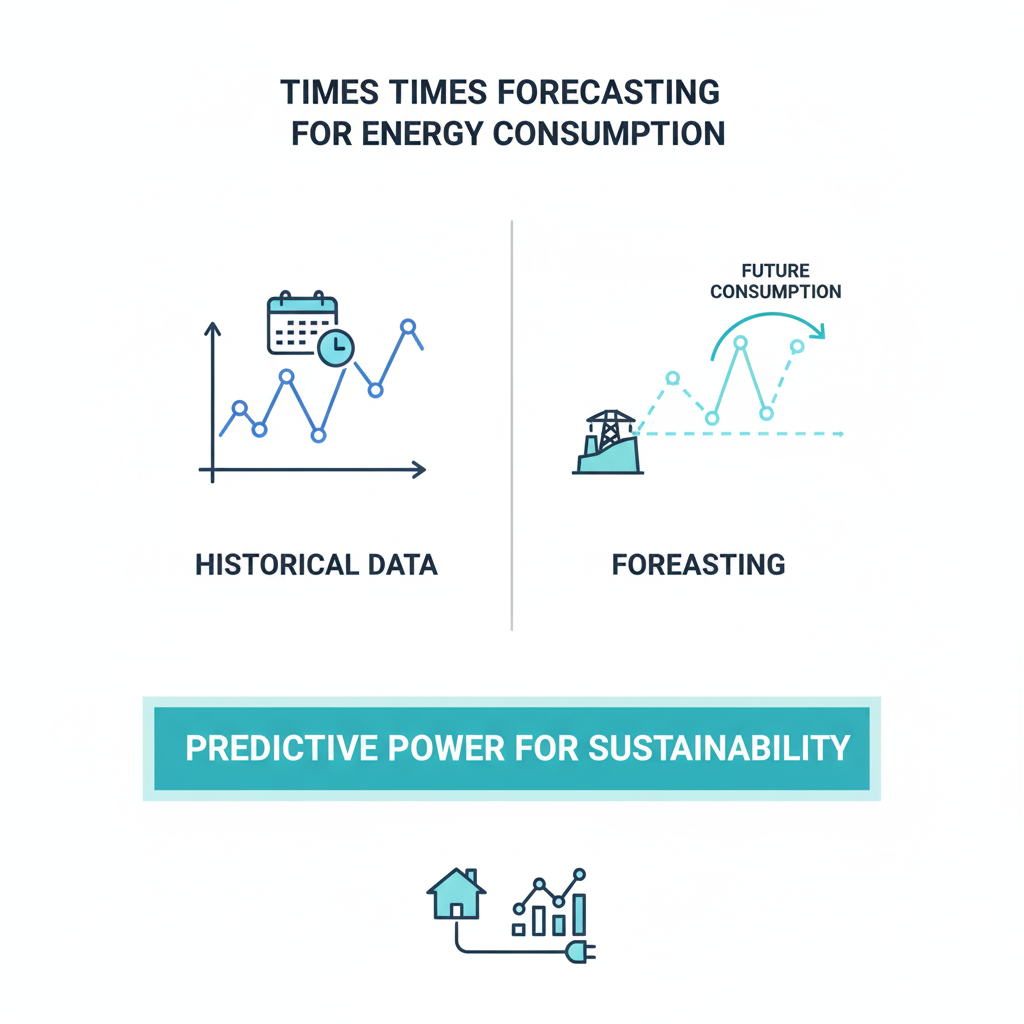

Project 1: Time Series Forecasting for Energy Consumption

The Complexity of Temporal Data Analysis

Time series forecasting represents a significant step up in complexity from basic predictive modeling, making it an ideal starting point for Intermediate Projects. This project focuses on predicting energy consumption patterns using historical data, introducing concepts like seasonality, trend decomposition, and exogenous variables. Unlike basic predictive modeling, time series analysis requires specialized techniques for handling temporal dependencies and structural breaks in data.

What makes this project particularly valuable is its direct relevance to industries beyond energy: retail, finance, transportation, and resource planning all rely on accurate time series predictions. Working through it, practitioners develop intuition for temporal patterns and learn to distinguish between different types of time series behavior, skills that transfer across domains.

Implementation Methodology

Data Acquisition and Preprocessing:

Source energy consumption data from platforms like Kaggle or public utility databases. Look for datasets with at least three years of hourly or daily readings to capture seasonal patterns. Begin with comprehensive exploratory data analysis, visualizing trends, identifying missing values, and detecting outliers. For Intermediate Projects, pay special attention to data quality issues specific to time series, such as irregular sampling and systematic measurement errors.

Feature Engineering for Temporal Patterns:

Create features that capture temporal characteristics: day of week, month, holiday indicators, rolling statistics, and lagged variables. Compute lags and rolling statistics from past values only, so that no feature leaks information from the period being forecast. Engineer features that represent weather conditions, economic indicators, or special events that might influence energy consumption. This feature engineering complexity distinguishes Intermediate Projects from simpler predictive modeling tasks.
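As a rough illustration of the calendar, lag, and rolling features described above, here is a minimal sketch in plain Python. The function name `build_temporal_features`, the lag choices, and the synthetic hourly readings are assumptions made for this example, not part of any particular dataset or library.

```python
from datetime import datetime, timedelta
from statistics import mean


def build_temporal_features(timestamps, values, lags=(1, 24), window=3):
    """Return one feature dict per row that has enough history available."""
    start = max(max(lags), window)  # skip rows without a full history
    rows = []
    for i in range(start, len(values)):
        ts = timestamps[i]
        row = {
            "hour": ts.hour,
            "day_of_week": ts.weekday(),  # Monday = 0
            "month": ts.month,
            "is_weekend": ts.weekday() >= 5,
            # Rolling mean over values strictly before time i (no leakage).
            "rolling_mean": mean(values[i - window:i]),
            "target": values[i],
        }
        for lag in lags:
            row[f"lag_{lag}"] = values[i - lag]
        rows.append(row)
    return rows


# Synthetic hourly readings, for illustration only.
start_time = datetime(2024, 1, 1)
timestamps = [start_time + timedelta(hours=h) for h in range(48)]
values = [100.0 + (h % 24) for h in range(48)]
features = build_temporal_features(timestamps, values)
```

Each row only looks backwards in time, which is the property that matters most when these features later feed a forecasting model.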

Model Selection and Implementation:

Compare multiple forecasting approaches including SARIMAX, Prophet, and LSTMs. Implement each model class, tuning hyperparameters and validating performance using time-series cross-validation, which always trains on earlier observations and tests on later ones. Focus not just on model accuracy but also on interpretability and computational efficiency, considerations that matter in production environments.
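Time-series cross-validation differs from ordinary k-fold in that the training window must always precede the test window. The sketch below shows one common variant, an expanding training window; the helper name `expanding_window_splits` is hypothetical and the sizes are arbitrary.

```python
def expanding_window_splits(n_samples, n_splits, test_size):
    """Yield (train_indices, test_indices); training data always precedes test data."""
    first_test_start = n_samples - n_splits * test_size
    if first_test_start <= 0:
        raise ValueError("Not enough samples for the requested splits.")
    for k in range(n_splits):
        test_start = first_test_start + k * test_size
        train_idx = list(range(0, test_start))  # everything before the test fold
        test_idx = list(range(test_start, test_start + test_size))
        yield train_idx, test_idx


splits = list(expanding_window_splits(n_samples=10, n_splits=3, test_size=2))
for train_idx, test_idx in splits:
    print("train up to", train_idx[-1], "-> test", test_idx)
```

Because the folds are never shuffled, each validation score reflects how the model would have performed on data it had not yet seen at that point in time.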

Evaluation and Deployment Considerations:

Assess models using appropriate time-series metrics like MAPE, RMSE, and MASE. Analyze residual patterns to identify systematic forecasting errors. Develop a simple deployment strategy using Flask or FastAPI to create a forecasting service. This end-to-end thinking elevates Intermediate Projects beyond academic exercises to professional practice.
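The three metrics named above can be written out in a few lines, which helps clarify what each one measures. This is a minimal sketch with made-up numbers; MASE here scales the forecast error by the in-sample error of a naive forecast with a configurable seasonal period, and MAPE is undefined whenever an actual value is zero.

```python
from math import sqrt


def mape(actual, forecast):
    """Mean absolute percentage error, in percent (undefined if any actual is 0)."""
    return 100.0 * sum(abs((a - f) / a) for a, f in zip(actual, forecast)) / len(actual)


def rmse(actual, forecast):
    """Root mean squared error, in the units of the series."""
    return sqrt(sum((a - f) ** 2 for a, f in zip(actual, forecast)) / len(actual))


def mase(actual, forecast, training, season=1):
    """Mean absolute scaled error relative to a naive seasonal forecast on the training data."""
    mae = sum(abs(a - f) for a, f in zip(actual, forecast)) / len(actual)
    naive_errors = [abs(training[i] - training[i - season]) for i in range(season, len(training))]
    return mae / (sum(naive_errors) / len(naive_errors))


# Illustrative values only.
training = [10.0, 12.0, 14.0, 16.0]
actual = [20.0, 20.0]
forecast = [18.0, 22.0]
scores = {
    "MAPE": mape(actual, forecast),
    "RMSE": rmse(actual, forecast),
    "MASE": mase(actual, forecast, training),
}
```

A MASE below 1 means the model beats the naive forecast on average, which makes it a convenient sanity check alongside the scale-dependent RMSE.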

Skills Developed and Professional Applications

Completing this project builds competency in time series analysis, feature engineering for temporal data, multiple forecasting methodologies, and model deployment strategies. It demonstrates the ability to handle data with complex dependencies, a skill valuable in domains like finance, supply chain, and resource management.

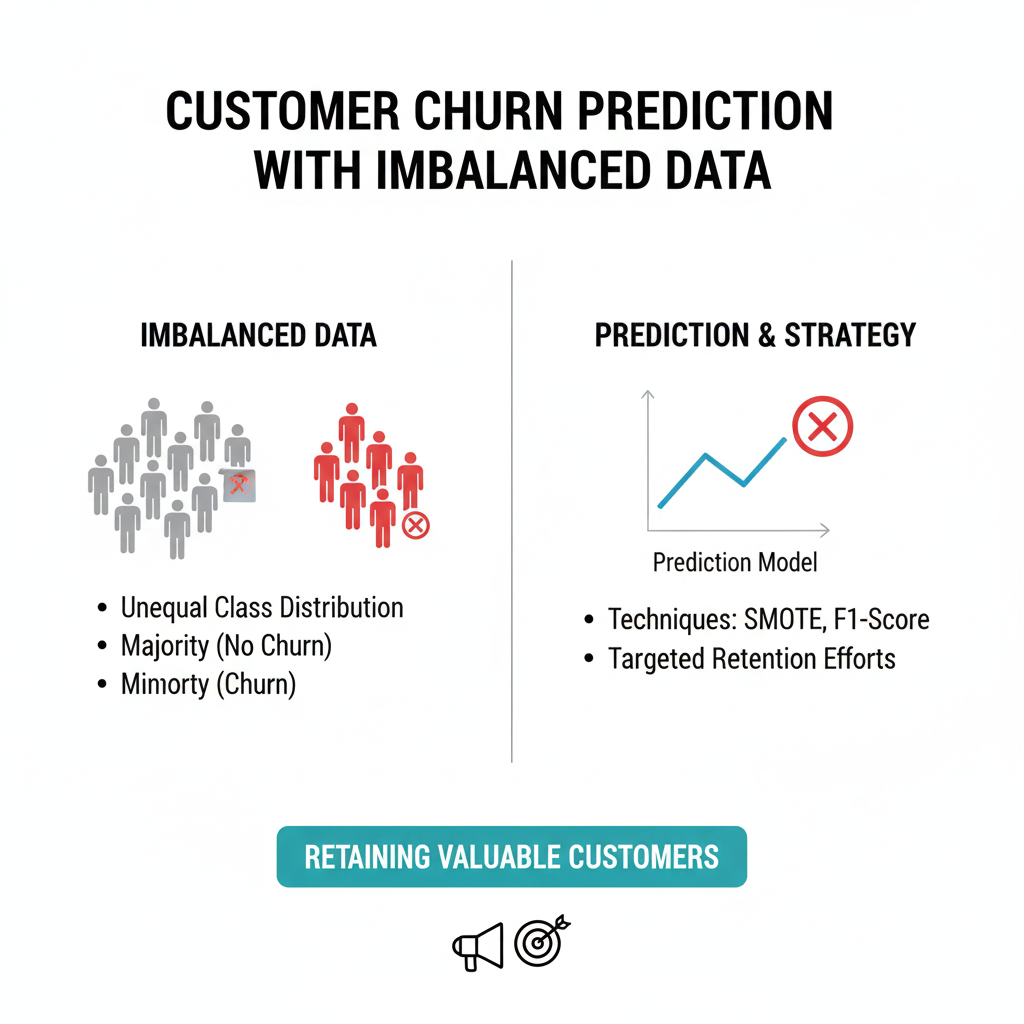

Project 2: Customer Churn Prediction with Imbalanced Data

Handling Real-World Data Skew

Customer churn prediction introduces the critical challenge of imbalanced datasets, a common reality in business applications that many Intermediate Projects overlook. This project focuses on predicting which customers are likely to leave a service, using telecommunications or subscription business data. The imbalance problem—where few customers actually churn—requires specialized techniques beyond standard classification approaches.

This project teaches crucial lessons about model evaluation beyond accuracy, emphasizing precision-recall curves, F1 scores, and business cost calculations. The focus on practical business impact distinguishes valuable Intermediate Projects from purely technical exercises.

Advanced Implementation Strategy

Data Understanding and Business Context:

Work with datasets containing customer demographics, usage patterns, service information, and churn indicators. Before modeling, develop a deep understanding of the business context—what drives churn, what retention costs, and what intervention opportunities exist. This business-thinking component separates professional Intermediate Projects from academic ones.

Handling Class Imbalance:

Implement multiple strategies for addressing class imbalance: SMOTE, ADASYN, class weighting, and ensemble methods. Apply any resampling to the training split only, so that validation and test metrics reflect the true class distribution. Compare these approaches systematically, understanding the trade-offs between different rebalancing techniques, and document how each method affects different evaluation metrics and business considerations.
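Of the strategies listed, class weighting is the simplest to reason about. The sketch below computes inverse-frequency weights using the formula `n_samples / (n_classes * class_count)`, the same heuristic that scikit-learn applies for `class_weight="balanced"`. The 90/10 label split is invented for illustration.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Weight each class inversely to its frequency."""
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


# Hypothetical churn labels: 10% of customers churn (label 1).
labels = [0] * 90 + [1] * 10
weights = balanced_class_weights(labels)
print(weights)
```

With these weights, each misclassified churner contributes nine times as much to the training loss as a misclassified non-churner, without altering the data itself.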

Model Development and Interpretation:

Build multiple classifier types including Logistic Regression, Random Forest, and Gradient Boosting, focusing on probability calibration and threshold optimization. Use SHAP and LIME for model interpretation, identifying the most influential churn drivers. These interpretation skills are increasingly important in regulated industries and represent a key advancement in Intermediate Projects.
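Threshold optimization can be tied directly to business costs rather than to a default cutoff of 0.5. The following sketch picks the threshold that minimizes total cost on a small validation set; the probabilities, the cost figures, and the function names are all assumptions chosen for illustration.

```python
def total_cost(y_true, y_prob, threshold, cost_fp, cost_fn):
    """Sum the cost of false positives and false negatives at a given threshold."""
    cost = 0.0
    for label, prob in zip(y_true, y_prob):
        predicted_churn = prob >= threshold
        if predicted_churn and label == 0:
            cost += cost_fp  # retention offer sent to a customer who would have stayed
        elif not predicted_churn and label == 1:
            cost += cost_fn  # churner missed, revenue lost
    return cost


def best_threshold(y_true, y_prob, cost_fp, cost_fn, candidates):
    """Return the candidate threshold with the lowest total cost."""
    return min(candidates, key=lambda t: total_cost(y_true, y_prob, t, cost_fp, cost_fn))


# Hypothetical validation labels and predicted churn probabilities.
y_true = [0, 0, 0, 1, 0, 1, 1, 0]
y_prob = [0.1, 0.2, 0.35, 0.4, 0.55, 0.6, 0.8, 0.3]
candidates = [k / 10 for k in range(1, 10)]
chosen = best_threshold(y_true, y_prob, cost_fp=10, cost_fn=100, candidates=candidates)
chosen_cost = total_cost(y_true, y_prob, chosen, cost_fp=10, cost_fn=100)
```

When missing a churner is much more expensive than a wasted offer, the selected threshold tends to fall below 0.5, and this only makes sense if the predicted probabilities are reasonably well calibrated.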

Business Integration and Actionable Insights:

Translate model outputs into actionable business recommendations—which customers to target, what interventions might work, and what economic impact to expect. Develop a simple dashboard showing churn risk segments and key drivers. This business-orientation makes these Intermediate Projects valuable portfolio pieces.

Professional Impact and Extensions

This project develops skills in handling imbalanced data, business-focused model evaluation, model interpretation, and stakeholder communication. As an extension, consider incorporating time-to-event analysis using survival models or building a complete retention campaign simulation.

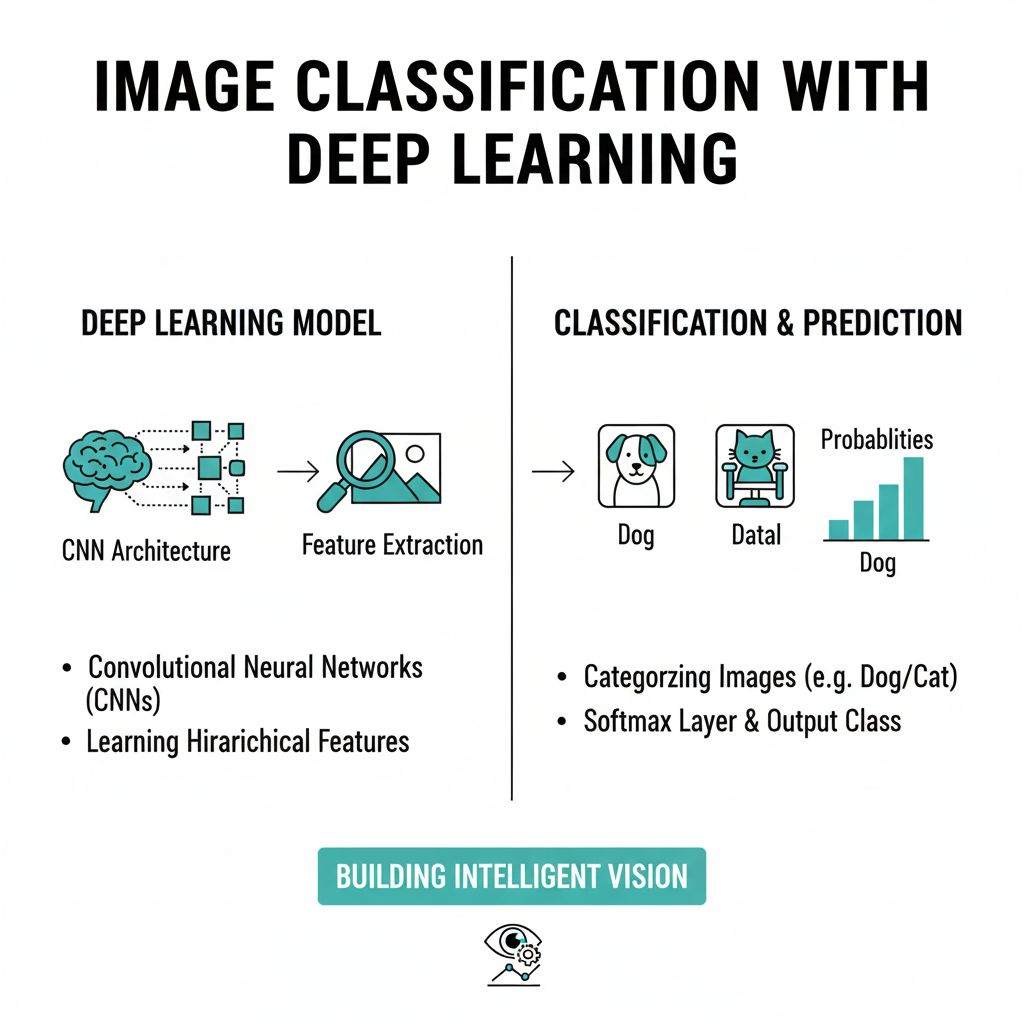

Project 3: Image Classification with Deep Learning

Entering the Computer Vision Domain

Image classification represents a natural progression into deep learning, making it essential among Intermediate Projects for building modern AI skills. This project involves building a convolutional neural network to classify images from domains like medical imaging, product categorization, or quality inspection. Unlike the tabular projects above, deep learning requires understanding neural network architectures, GPU acceleration, and specialized preprocessing.

What makes this project particularly valuable is its introduction to transfer learning, a technique that enables impressive results with limited data. Learning to leverage pre-trained models is a practical skill used across computer vision applications in industry.

Comprehensive Implementation Approach

Data Preparation and Augmentation:

Source image datasets from platforms like Kaggle or create your own using web scraping techniques. Implement comprehensive image preprocessing—resizing, normalization, and data augmentation using rotations, flips, and color adjustments. For Intermediate Projects, pay special attention to creating robust validation strategies that prevent data leakage.
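One frequent source of leakage in image datasets is having several images of the same subject (the same patient, product, or production batch) spread across training and validation sets. A minimal sketch of a group-aware split follows; the `group` field, the file names, and the helper `assign_split` are hypothetical, and hashing is just one simple way to get a stable assignment.

```python
import hashlib


def assign_split(group_id, val_percent=20):
    """Deterministically map a group to 'train' or 'val' so it never straddles both."""
    digest = hashlib.sha256(group_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100  # stable bucket in [0, 99]
    return "val" if bucket < val_percent else "train"


# Hypothetical image records; several images share a group.
images = [
    {"path": "img_001.png", "group": "patient_a"},
    {"path": "img_002.png", "group": "patient_a"},
    {"path": "img_003.png", "group": "patient_b"},
    {"path": "img_004.png", "group": "patient_c"},
    {"path": "img_005.png", "group": "patient_c"},
    {"path": "img_006.png", "group": "patient_d"},
]

splits = {"train": [], "val": []}
for image in images:
    splits[assign_split(image["group"])].append(image)

train_groups = {img["group"] for img in splits["train"]}
val_groups = {img["group"] for img in splits["val"]}
```

Because the assignment depends only on the group identifier, re-running the split or adding new images never moves an existing group to the other side. With very few groups the realized validation fraction can differ noticeably from the target.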

Model Architecture and Transfer Learning:

Build custom CNN architectures from scratch to understand fundamental components like convolutional layers, pooling, and fully connected networks. Then implement transfer learning using pre-trained models like ResNet, VGG, or EfficientNet, fine-tuning them for your specific classification task. This progression from fundamentals to practical optimization characterizes effective Intermediate Projects.

Training Optimization and Regularization:

Implement advanced training techniques including learning rate scheduling, early stopping, and various optimizer configurations. Apply regularization methods like dropout and L2 regularization to limit overfitting, and use batch normalization to stabilize training; it often has a mild regularizing effect as well. These optimization skills distinguish sophisticated Intermediate Projects from basic implementations.
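Early stopping and learning rate scheduling are usually provided by the deep learning framework, but the underlying logic is small enough to sketch directly. The class and function below are framework-independent illustrations with hypothetical names, and the validation losses are made up.

```python
class EarlyStopping:
    """Signal a stop once validation loss has not improved for `patience` epochs."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = float("inf")
        self.epochs_without_improvement = 0

    def should_stop(self, val_loss):
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.epochs_without_improvement = 0
        else:
            self.epochs_without_improvement += 1
        return self.epochs_without_improvement >= self.patience


def step_decay(initial_lr, epoch, drop=0.5, every=10):
    """Multiply the learning rate by `drop` once every `every` epochs."""
    return initial_lr * drop ** (epoch // every)


# Simulated validation losses standing in for a real training loop.
val_losses = [1.0, 0.8, 0.7, 0.71, 0.72, 0.69, 0.70, 0.71, 0.72, 0.73]
stopper = EarlyStopping(patience=3)
stopped_epoch = None
for epoch, loss in enumerate(val_losses):
    if stopper.should_stop(loss):
        stopped_epoch = epoch
        break
```

In a real training loop you would typically also save the model weights each time `best_loss` improves, so that the best checkpoint can be restored after stopping.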

Model Interpretation and Deployment:

Use Grad-CAM and other visualization techniques to understand which image regions drive model predictions. Package your model using TensorFlow Serving or ONNX Runtime for efficient inference. Consider edge deployment options using TensorFlow Lite or similar frameworks.

Technical Mastery and Applications

This project builds competency in deep learning frameworks, transfer learning, image preprocessing, and model interpretation. It demonstrates the ability to work with unstructured data and complex model architectures, skills increasingly demanded across industries from healthcare to manufacturing.

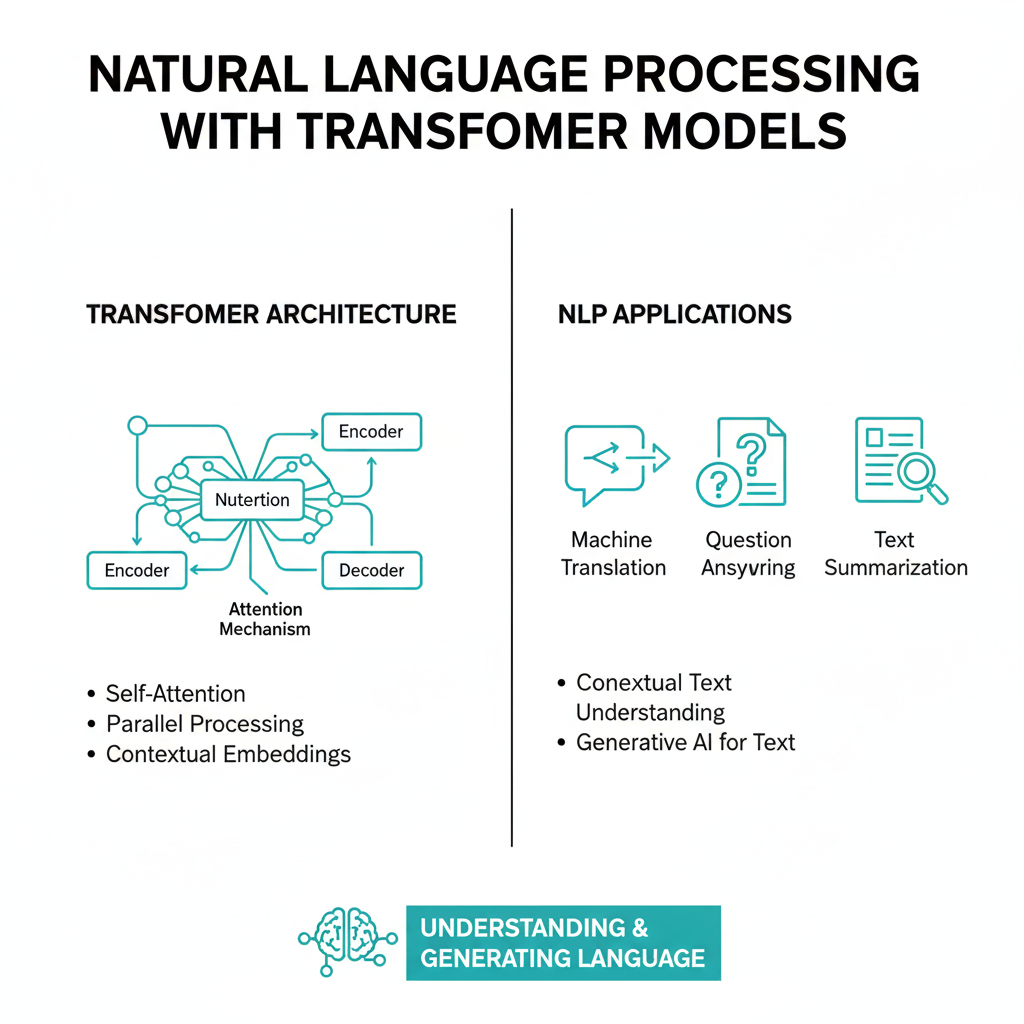

Project 4: Natural Language Processing with Transformer Models

Modern Text Analysis Techniques

Natural language processing with transformer models represents the cutting edge of text analysis, making this project essential for staying current with industry practices. It involves fine-tuning transformer models for tasks like sentiment analysis, text classification, or named entity recognition. Unlike projects built on traditional NLP methods, transformer-based approaches require understanding attention mechanisms and handling large model architectures.

This project introduces the practical challenges of working with large language models, including computational constraints, fine-tuning strategies, and ethical considerations around bias and fairness.

Implementation Framework

Data Collection and Text Preparation:

Gather text datasets from sources like Twitter, news articles, or customer reviews. Implement tokenization and preprocessing specific to transformer models: adding special tokens, truncating or padding to a fixed length, and building attention masks. Pay special attention to dataset size requirements and quality considerations for fine-tuning.
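In practice a library tokenizer handles special tokens, truncation, and attention masks for you, but it is worth seeing what those steps produce. The sketch below operates on already-tokenized integer IDs; the function name `encode` is hypothetical, and the default IDs (101, 102, 0) are placeholders in the style of BERT vocabularies rather than values to rely on.

```python
def encode(token_ids, max_length, cls_id=101, sep_id=102, pad_id=0):
    """Add special tokens, truncate to max_length, pad, and build the attention mask."""
    if max_length < 2:
        raise ValueError("max_length must leave room for the two special tokens.")
    body = token_ids[: max_length - 2]  # truncate, reserving room for [CLS] and [SEP]
    input_ids = [cls_id] + body + [sep_id]
    attention_mask = [1] * len(input_ids)  # 1 = real token
    padding = max_length - len(input_ids)
    input_ids += [pad_id] * padding
    attention_mask += [0] * padding  # 0 = padding, ignored by attention
    return input_ids, attention_mask


short_ids, short_mask = encode([7, 8, 9], max_length=6)
long_ids, long_mask = encode([1, 2, 3, 4, 5, 6, 7, 8], max_length=6)
print(short_ids, short_mask)
print(long_ids, long_mask)
```

The attention mask is what lets sequences of different lengths share a batch: padded positions are present in the tensor but contribute nothing to the attention computation.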

Model Selection and Fine-Tuning:

Select appropriate transformer architectures like BERT, RoBERTa, or DistilBERT based on your task and computational resources. Implement fine-tuning using frameworks like Hugging Face Transformers, carefully managing training parameters and monitoring for overfitting. These practical fine-tuning skills are increasingly valuable in industry roles.

Evaluation and Error Analysis:

Evaluate model performance using task-appropriate metrics while conducting thorough error analysis to understand failure modes. Compare transformer performance with traditional approaches to quantify improvement. For Intermediate Projects, analyze computational efficiency trade-offs between different model sizes and architectures.

Deployment and Monitoring:

Optimize models for production using techniques like quantization and pruning. Implement serving infrastructure and develop monitoring for concept drift and performance degradation. This production-focused thinking elevates Intermediate Projects beyond experimental work.
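To make the idea of quantization concrete, here is a minimal sketch of symmetric 8-bit quantization applied to a small list of weights. Real toolchains quantize whole tensors, often per channel and with calibration data; the function names and weight values here are illustrative assumptions only.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using a single shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]


# Hypothetical weights, for illustration only.
weights = [0.5, -1.27, 0.0, 1.0]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```

Each weight is now stored in one byte instead of four, at the price of a rounding error bounded by half the scale. Whether that error is acceptable has to be checked against task metrics after quantization.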

Advanced Skills and Ethical Considerations

This project develops skills in transformer architectures, transfer learning for NLP, model optimization, and text preprocessing for modern models. It also introduces important ethical considerations around bias mitigation and responsible AI deployment.

Project 5: End-to-End Data Engineering Pipeline

Building Production-Ready Systems

End-to-end data engineering is the infrastructure foundation supporting data science work, making this project crucial for understanding real-world data systems. It involves building a complete data pipeline, from data ingestion through processing to visualization, using tools like Airflow, Docker, and cloud services. Unlike the analytical projects above, this one focuses on system design, reliability, and scalability.

What makes this project particularly valuable is its emphasis on production considerations: error handling, monitoring, and maintainability. Understanding data engineering principles makes data scientists more effective in team environments and better equipped to deploy their models reliably.

Comprehensive System Design

Architecture Planning and Tool Selection:

Design a pipeline architecture for a specific use case like e-commerce analytics or IoT data processing. Select appropriate technologies—message queues, processing engines, and storage solutions—based on data characteristics and use case requirements. This architectural thinking distinguishes advanced Intermediate Projects from simpler implementations.

Data Ingestion and Processing:

Implement robust data ingestion from multiple sources including databases, APIs, and file streams. Build data processing workflows that handle common issues like duplicate records, schema evolution, and late-arriving data. For Intermediate Projects, focus on data quality checks and comprehensive logging.
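Two of the issues mentioned above, duplicate records and late-arriving data, can be illustrated with a small sketch. The record layout (`id`, `event_time`, `value`), the watermark, and the allowed lateness are assumptions for this example; a production pipeline would usually delegate this to its processing engine.

```python
from datetime import datetime, timedelta


def deduplicate_latest(records):
    """Keep only the most recent version of each record, keyed by id."""
    latest = {}
    for rec in records:
        key = rec["id"]
        if key not in latest or rec["event_time"] > latest[key]["event_time"]:
            latest[key] = rec
    return list(latest.values())


def split_late(records, watermark, allowed_lateness):
    """Separate records older than (watermark - allowed_lateness) for special handling."""
    cutoff = watermark - allowed_lateness
    on_time, late = [], []
    for rec in records:
        (late if rec["event_time"] < cutoff else on_time).append(rec)
    return on_time, late


# Hypothetical incoming batch.
records = [
    {"id": "a", "event_time": datetime(2024, 1, 1, 10, 0), "value": 1},
    {"id": "b", "event_time": datetime(2024, 1, 1, 10, 5), "value": 2},
    {"id": "a", "event_time": datetime(2024, 1, 1, 10, 10), "value": 3},  # newer version of "a"
    {"id": "c", "event_time": datetime(2024, 1, 1, 8, 0), "value": 4},  # arrives late
]
unique = deduplicate_latest(records)
on_time, late = split_late(
    unique,
    watermark=datetime(2024, 1, 1, 10, 10),
    allowed_lateness=timedelta(hours=1),
)
```

Routing late records to a separate list, rather than silently dropping them, leaves room to reprocess affected aggregates and to log how often lateness occurs.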

Orchestration and Monitoring:

Use Apache Airflow or similar tools to orchestrate complex workflows with dependencies and error handling. Implement monitoring and alerting to detect pipeline failures and performance degradation. These operational skills are increasingly important as data scientists take more responsibility for production systems.
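The core ideas behind an orchestrator, running tasks in dependency order and retrying on failure, can be sketched with the standard library alone. This is not Airflow code; it uses `graphlib.TopologicalSorter` (Python 3.9+) and hypothetical task names purely to illustrate the concepts.

```python
from graphlib import TopologicalSorter


def run_pipeline(tasks, dependencies, max_retries=2):
    """Run tasks in dependency order, retrying each failed task up to max_retries times."""
    order = list(TopologicalSorter(dependencies).static_order())
    log = []
    for name in order:
        for attempt in range(1, max_retries + 2):
            try:
                tasks[name]()
            except Exception as exc:
                if attempt > max_retries:
                    raise RuntimeError(f"Task {name!r} failed after {attempt} attempts") from exc
                log.append((name, "retry", attempt))
            else:
                log.append((name, "success", attempt))
                break
    return log


state = {"transform_calls": 0}


def extract():
    pass  # placeholder for reading from a source


def transform():
    state["transform_calls"] += 1
    if state["transform_calls"] == 1:
        raise ConnectionError("simulated transient failure")


def load():
    pass  # placeholder for writing to a destination


tasks = {"extract": extract, "transform": transform, "load": load}
# Each key maps to the set of tasks that must finish before it starts.
dependencies = {"transform": {"extract"}, "load": {"transform"}}
log = run_pipeline(tasks, dependencies)
```

The returned log doubles as a minimal audit trail. A real orchestrator adds scheduling, backoff between retries, and alerting on top of the same dependency graph.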

Visualization and Documentation:

Build dashboards using tools like Grafana or Streamlit to visualize pipeline metrics and business insights. Create comprehensive documentation covering architecture, operations procedures, and troubleshooting guides. This documentation practice is essential for professional Intermediate Projects.

Professional Development and Career Impact

This project develops skills in data engineering, system design, workflow orchestration, and infrastructure management. It demonstrates the ability to build reliable data systems, a skill combination highly valued in senior data roles and technical leadership positions.

Maximizing Learning from Intermediate Projects

Developing Professional Work Habits

Success with Intermediate Projects requires adopting professional development practices. Implement comprehensive testing strategies including unit tests, integration tests, and data validation checks. Use CI/CD pipelines to automate testing and deployment processes. These engineering practices distinguish professional-grade Intermediate Projects from experimental work.
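A data validation check can be as simple as a function that returns a list of problems, which is then easy to call from a unit test or a CI job. The sketch below is one minimal way to do this; the field names and the allowed range are hypothetical.

```python
def validate_rows(rows, required_fields, numeric_ranges):
    """Return human-readable errors for missing fields and out-of-range values."""
    errors = []
    for index, row in enumerate(rows):
        for field in required_fields:
            if row.get(field) is None:
                errors.append(f"row {index}: missing {field}")
        for field, (low, high) in numeric_ranges.items():
            value = row.get(field)
            if value is not None and not (low <= value <= high):
                errors.append(f"row {index}: {field}={value} outside [{low}, {high}]")
    return errors


# Hypothetical customer rows, two of which should fail validation.
rows = [
    {"customer_id": 1, "monthly_charge": 29.5},
    {"customer_id": None, "monthly_charge": 40.0},
    {"customer_id": 3, "monthly_charge": -5.0},
]
errors = validate_rows(
    rows,
    required_fields=["customer_id", "monthly_charge"],
    numeric_ranges={"monthly_charge": (0, 1000)},
)
for message in errors:
    print(message)
```

Returning all errors at once, instead of raising on the first one, gives a fuller picture of data quality in a single run and makes the check straightforward to assert against in tests.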

Establish robust monitoring and logging from the beginning. Track model performance, data quality metrics, and system reliability. Where you have competing approaches, consider implementing an A/B testing framework to compare them systematically.

Practice effective documentation and knowledge sharing. Create technical documentation that enables others to understand, use, and extend your work. This communication skill becomes increasingly important as Intermediate Projects grow in complexity and potential impact.

Building Toward Advanced Specialization

Intermediate Projects naturally lead to more advanced work and potential specialization. Use these projects to identify areas of particular interest or strength—computer vision, natural language processing, time series analysis, or data engineering. Consider extending Intermediate Projects into more sophisticated implementations or combining multiple projects into comprehensive portfolio pieces.

Engage with the professional community by contributing to open-source projects, participating in competitions, or writing about your Intermediate Projects. This community engagement provides valuable feedback and helps establish professional connections.

From Intermediate Projects to Professional Impact

The journey through carefully selected intermediate work develops the sophisticated skill combinations that distinguish competent data practitioners from true experts. Beyond technical abilities, these challenging endeavors cultivate the problem-solving mindset, systematic thinking, and business awareness required for impactful data work in professional environments. The transition from completing isolated exercises to delivering comprehensive solutions represents a crucial evolution in a data practitioner’s career trajectory.

As you progress through these substantial undertakings, focus on developing reusable patterns and methodologies that can be applied across different domains and business contexts. The ability to adapt techniques to new situations and rapidly understand unfamiliar data ecosystems represents one of the most valuable outcomes of working through complex challenges. This adaptability becomes increasingly important as organizations face rapidly changing market conditions and evolving data landscapes.

Developing Professional Judgment and Decision-Making

The true value of tackling sophisticated data initiatives lies in developing professional judgment—the ability to make informed decisions about methodology selection, resource allocation, and solution design under uncertainty. Through repeated exposure to complex scenarios, practitioners learn to balance theoretical purity with practical constraints, making strategic compromises that deliver maximum business value while maintaining technical integrity. This nuanced decision-making capability separates junior practitioners from senior contributors who can be trusted with significant organizational responsibilities.

Professional judgment extends beyond technical decisions to encompass ethical considerations, stakeholder management, and risk assessment. Working through multifaceted data challenges teaches practitioners to identify potential pitfalls early, anticipate stakeholder concerns, and navigate the organizational dynamics that inevitably influence data projects. These soft skills, when combined with technical expertise, create the foundation for leadership roles in data-driven organizations.

Building Transferable Methodologies and Approaches

The most successful data professionals develop systematic approaches to problem-solving that transcend specific tools or techniques. Through engagement with diverse challenges, practitioners should focus on identifying patterns in data problems and developing reusable frameworks for addressing them. This might include standardized processes for data quality assessment, systematic approaches to feature engineering, or structured methodologies for model validation and monitoring.

These transferable methodologies become particularly valuable when practitioners encounter entirely new domains or technologies. Rather than starting from scratch each time, experienced professionals can adapt their existing frameworks to new contexts, dramatically accelerating their ability to deliver value. This systematic approach also facilitates knowledge sharing and team collaboration, as methodologies can be documented, taught, and refined across organizations.

Cultivating Business Acumen and Strategic Thinking

Advanced data work requires deep integration of technical skills with business understanding. As practitioners progress beyond foundational concepts, they must develop the ability to translate business problems into data solutions and communicate technical findings in business terms. This requires understanding organizational priorities, industry dynamics, and economic constraints that shape how data initiatives are scoped, prioritized, and evaluated.

Strategic thinking involves considering the long-term implications of data decisions, including technical debt, scalability concerns, and alignment with organizational roadmaps. Practitioners who develop this strategic perspective can advocate for sustainable data practices while demonstrating how data initiatives contribute to broader business objectives. This business-technology partnership mindset is essential for advancing into leadership positions where data strategy influences organizational direction.

Establishing Professional Credibility and Impact

The transition from intermediate to advanced practitioner involves building a reputation for reliability, expertise, and impact. This professional credibility comes from consistently delivering robust solutions, communicating effectively with diverse stakeholders, and demonstrating thought leadership in data practices. Building a portfolio of substantial completed projects provides concrete evidence of capabilities while developing the confidence to tackle increasingly ambitious initiatives.

Professional impact extends beyond individual projects to influence how organizations leverage data more broadly. Experienced practitioners often become champions for data-driven decision making, mentors for junior colleagues, and architects of data capabilities that enable broader organizational success. This expanded influence represents the culmination of technical expertise, business understanding, and professional judgment developed through progressively challenging work.

Navigating Career Advancement and Specialization

The skills and experiences gained through complex data initiatives open multiple pathways for career advancement. Some practitioners may choose to deepen their expertise in specific technical domains like machine learning engineering, data architecture, or analytics leadership. Others may pursue broader roles that integrate data expertise with product management, business strategy, or organizational leadership.

Regardless of the specific path, the common thread is the ability to leverage data capabilities to drive meaningful outcomes. The transition from executing predefined tasks to defining problems, designing solutions, and leading implementation represents a fundamental shift in professional scope and impact. This evolution prepares practitioners for roles where they don’t just work with data—they shape how organizations use data to create value, manage risk, and pursue opportunities.

Continuous Learning and Adaptation

The field of data science continues to evolve rapidly, with new techniques, tools, and applications emerging constantly. The most successful professionals maintain a mindset of continuous learning, using each project as an opportunity to expand their capabilities while staying current with industry developments. This learning orientation, combined with practical experience, creates a virtuous cycle where each new challenge builds the foundation for tackling even more complex problems in the future.

Adaptability becomes increasingly important as technologies change and business needs evolve. Practitioners who have developed strong fundamental skills through diverse projects can more readily learn new tools and techniques, applying core concepts across different technological stacks and business contexts. This adaptability ensures long-term relevance in a field characterized by rapid change and innovation.

Conclusion: Advancing Your Data Science Journey

The five Intermediate Projects detailed in this guide represent significant milestones in the progression from fundamental data skills to professional competency. Each project addresses genuine complexity—whether technical, methodological, or operational—while developing specific skill combinations valued in industry roles.

What distinguishes successful Intermediate Projects is their balance between technical sophistication and practical applicability. The most valuable ones solve meaningful problems while demonstrating advanced methodologies and production-ready thinking. As you undertake these projects, maintain focus on both technical excellence and real-world impact.

Remember that the journey through these projects is as important as the final outcomes. The challenges encountered, the problems solved, and the lessons learned along the way build the experience and intuition that define expert practitioners. Each completed project represents not just another portfolio piece, but another step toward data science mastery.

The projects you complete today form the foundation for the advanced work you’ll undertake tomorrow. Use them to identify your strengths, address your weaknesses, and discover the domains where you can make your most meaningful contributions. The path from intermediate to advanced practitioner begins with these projects but extends throughout your career as you continue tackling increasingly complex data challenges.