Explore Hypothesis Testing in 2025: Learn how data scientists use advanced statistical methods, machine learning integration, and causal inference frameworks to make confident decisions across industries

Introduction: The Evolution of Hypothesis Testing in the Data Science Landscape

In the rapidly advancing field of data science, Hypothesis Testing remains a cornerstone of statistical inference. However, its implementation, interpretation, and applications have evolved significantly. As we step into 2025, Hypothesis Testing is no longer confined to academic research or theoretical models. It has become a practical, technology-driven framework for making intelligent, data-backed decisions in real time.

Today, Hypothesis Testing integrates seamlessly with artificial intelligence, machine learning, and business analytics platforms. Organizations use it not only to validate assumptions but also to guide experimentation, optimize models, and ensure fairness in AI systems. The result is a modernized form of Hypothesis Testing that is faster, more flexible, and more context-aware than ever before.

This detailed article explores the complete transformation of Hypothesis Testing—its modern principles, tools, case studies, challenges, and the exciting innovations shaping its future in 2025 and beyond.

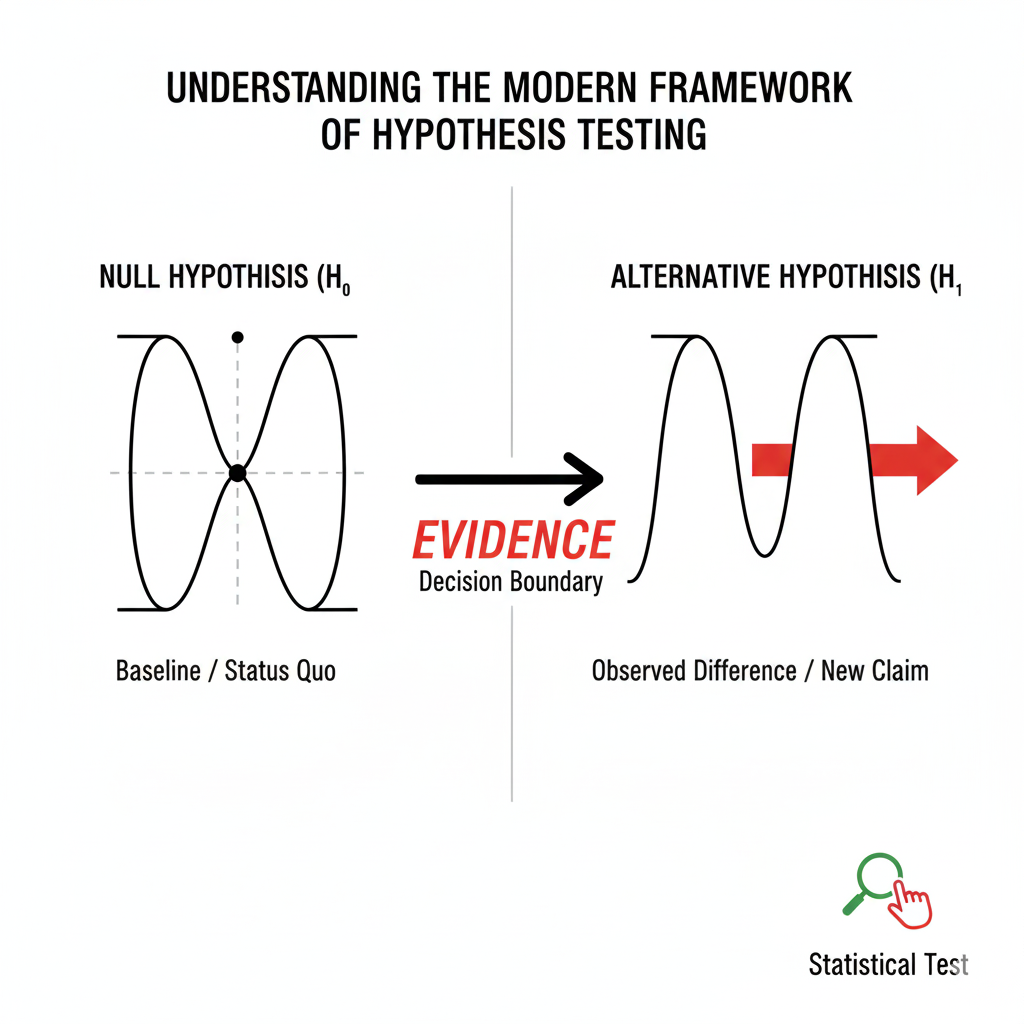

1. Understanding the Modern Framework of Hypothesis Testing

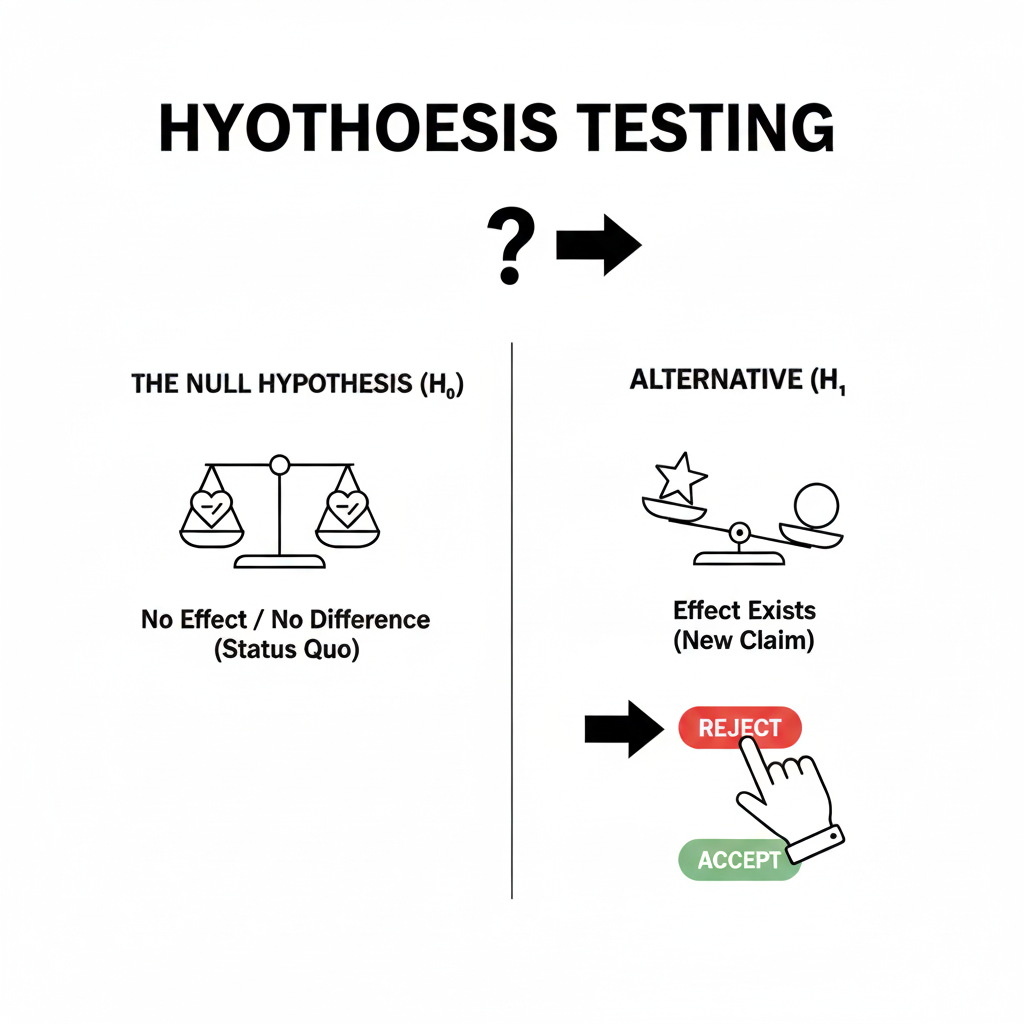

At its core, Hypothesis Testing is still about comparing two claims—the null hypothesis (no effect or difference) and the alternative hypothesis (presence of an effect or difference). Yet, the tools, interpretation, and applications have evolved dramatically.

From Binary Decisions to Contextual Insights

Previously, analysts viewed Hypothesis Testing as a binary decision: reject or fail to reject the null hypothesis based solely on a p-value threshold (often 0.05). In 2025, the interpretation is more nuanced. Data scientists now focus on effect sizes, confidence intervals, and practical significance, not just statistical significance.

This shift acknowledges that a small, statistically significant difference may not have real-world impact, while a non-significant result might still carry valuable insight.

Power Analysis and Sample Design

Modern practitioners integrate power analysis early in the process to determine adequate sample sizes and ensure meaningful results. Thanks to computational tools, analysts can now run simulations to predict likely outcomes and optimize the design before data collection even begins.
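As a rough illustration, the sketch below computes a per-group sample size with the standard normal-approximation formula for comparing two means, then checks that size by simulating many experiments. It assumes a standardized effect size (Cohen's d), equal group sizes, and unit-variance normal data; the function names are hypothetical, and dedicated tools such as statsmodels use the t distribution and will give slightly larger answers.

```python
import math
import random
from statistics import NormalDist


def sample_size_per_group(effect_size: float, alpha: float = 0.05, power: float = 0.8) -> int:
    """Normal-approximation n per group for a two-sided, two-sample test of means."""
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)  # critical value of the test
    z_beta = std_normal.inv_cdf(power)           # quantile matching the desired power
    return math.ceil(2 * ((z_alpha + z_beta) / effect_size) ** 2)


def simulated_power(effect_size: float, n: int, alpha: float = 0.05,
                    n_sims: int = 2000, seed: int = 0) -> float:
    """Monte Carlo power: simulate experiments and count how often H0 is rejected."""
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    se = math.sqrt(2 / n)  # SE of the mean difference when sigma = 1 in both groups
    rejections = 0
    for _ in range(n_sims):
        control = [rng.gauss(0.0, 1.0) for _ in range(n)]
        treated = [rng.gauss(effect_size, 1.0) for _ in range(n)]
        diff = sum(treated) / n - sum(control) / n
        if abs(diff / se) > z_crit:
            rejections += 1
    return rejections / n_sims


n = sample_size_per_group(0.5)
print(n)                        # 63 per group for d = 0.5, alpha = 0.05, power = 0.8
print(simulated_power(0.5, n))  # should land close to 0.8
```

Running the simulation is a cheap sanity check on the formula, and the same loop can be adapted to designs where no closed-form answer exists.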

Reproducibility and Transparency

In the age of open science, reproducibility is key. Analysts now document every step of Hypothesis Testing workflows using reproducible notebooks and automated pipelines, reducing bias and improving trust in findings.

2. The New Toolbox: Advanced Methodologies in Hypothesis Testing

Bayesian Hypothesis Testing

One of the most transformative shifts in 2025 is the widespread adoption of Bayesian Hypothesis Testing. Unlike frequentist approaches, Bayesian methods let analysts incorporate prior knowledge and produce posterior probabilities that directly express how probable a hypothesis is, given the prior and the observed data.

For example, a marketing team might use Bayesian techniques to continuously update conversion rate assumptions as new data streams in.
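A minimal sketch of that idea, assuming each visitor either converts or not and using a conjugate Beta prior, is shown below. Each new batch of data simply adds conversions and non-conversions to the Beta parameters, and a Monte Carlo comparison of posterior draws estimates the probability that variant B converts better than variant A. The daily counts are invented for illustration, and the helper names are hypothetical.

```python
import random


def update_beta(alpha, beta, conversions, visitors):
    """Conjugate update: Beta(alpha, beta) prior plus binomial data gives a Beta posterior."""
    return alpha + conversions, beta + (visitors - conversions)


def prob_b_beats_a(post_a, post_b, draws=20000, seed=0):
    """Monte Carlo estimate of P(rate_B > rate_A) from the two Beta posteriors."""
    rng = random.Random(seed)
    wins = sum(
        rng.betavariate(*post_b) > rng.betavariate(*post_a) for _ in range(draws)
    )
    return wins / draws


# Both variants start from a flat Beta(1, 1) prior; batches arrive over time.
post_a, post_b = (1.0, 1.0), (1.0, 1.0)
daily_batches = [  # hypothetical ((conversions_A, visitors_A), (conversions_B, visitors_B))
    ((40, 1000), (52, 1000)),
    ((38, 1000), (55, 1000)),
]
for (conv_a, n_a), (conv_b, n_b) in daily_batches:
    post_a = update_beta(*post_a, conv_a, n_a)
    post_b = update_beta(*post_b, conv_b, n_b)
    print(f"P(B > A) so far: {prob_b_beats_a(post_a, post_b):.3f}")
```

Because the posterior after one batch becomes the prior for the next, the team can report an updated probability after every batch instead of waiting for a fixed end date.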

Resampling and Bootstrapping

Techniques such as bootstrapping and permutation testing have gained mainstream traction. These methods make fewer assumptions about underlying distributions and are well suited to complex modern datasets, such as skewed metrics or statistics without a known sampling distribution, where analytical solutions may not exist. In practice, these approaches have made Hypothesis Testing more flexible and robust.
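The following sketch, using only Python's standard library, shows both ideas on simulated skewed (exponential) data: a two-sided permutation test for a difference in means, and a percentile bootstrap confidence interval for that difference. The data, resample counts, and function names are illustrative assumptions; in practice, libraries such as SciPy provide tested implementations.

```python
import random


def _mean(values):
    return sum(values) / len(values)


def permutation_test(x, y, n_perm=2000, seed=0):
    """Two-sided permutation test for a difference in means between x and y."""
    rng = random.Random(seed)
    observed = abs(_mean(x) - _mean(y))
    pooled = list(x) + list(y)
    extreme = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)  # under H0 the group labels are exchangeable
        diff = abs(_mean(pooled[:len(x)]) - _mean(pooled[len(x):]))
        if diff >= observed:
            extreme += 1
    return (extreme + 1) / (n_perm + 1)  # add-one correction keeps p > 0


def bootstrap_ci(x, y, n_boot=2000, level=0.95, seed=0):
    """Percentile bootstrap confidence interval for mean(y) - mean(x)."""
    rng = random.Random(seed)
    diffs = sorted(
        _mean(rng.choices(y, k=len(y))) - _mean(rng.choices(x, k=len(x)))
        for _ in range(n_boot)
    )
    lower = diffs[int((1 - level) / 2 * n_boot)]
    upper = diffs[int((1 + level) / 2 * n_boot) - 1]
    return lower, upper


rng = random.Random(42)
control = [rng.expovariate(1.0) for _ in range(200)]      # skewed metric, true mean 1.0
treated = [rng.expovariate(1 / 2.0) for _ in range(200)]  # skewed metric, true mean 2.0
print(permutation_test(control, treated))
print(bootstrap_ci(control, treated))
```

Neither function assumes normality: the permutation test builds its null distribution by reshuffling labels, and the bootstrap builds its interval by resampling each group with replacement.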

Sequential and Adaptive Testing

In fast-paced environments like e-commerce or gaming, waiting for full data collection before testing hypotheses can slow progress. Sequential testing and adaptive design frameworks allow analysts to evaluate results at interim points and reach decisions faster. Because their stopping rules account for those repeated looks, they avoid the inflated false-positive rate that comes from naively rerunning a standard test as data accumulates.
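One classic sequential method is Wald's sequential probability ratio test (SPRT). The sketch below applies it to a stream of binary conversion outcomes, testing a baseline rate `p0` against an improved rate `p1` and stopping as soon as the accumulated log-likelihood ratio crosses a boundary derived from the target error rates `alpha` and `beta`. The rates and error targets are assumptions, and production experimentation platforms may use other sequential designs, such as group-sequential alpha spending.

```python
import math
import random


def sprt_bernoulli(observations, p0, p1, alpha=0.05, beta=0.2):
    """Wald's SPRT for H0: p = p0 versus H1: p = p1 on a stream of 0/1 outcomes.

    Returns (decision, observations_used), where decision is
    "accept H1", "accept H0", or "continue" if no boundary was crossed.
    """
    upper = math.log((1 - beta) / alpha)  # crossing above favors H1
    lower = math.log(beta / (1 - alpha))  # crossing below favors H0
    llr = 0.0
    for i, outcome in enumerate(observations, start=1):
        if outcome:
            llr += math.log(p1 / p0)
        else:
            llr += math.log((1 - p1) / (1 - p0))
        if llr >= upper:
            return "accept H1", i
        if llr <= lower:
            return "accept H0", i
    return "continue", len(observations)


# Hypothetical stream: users converting at a true rate of 15%.
rng = random.Random(7)
stream = [rng.random() < 0.15 for _ in range(5000)]
print(sprt_bernoulli(stream, p0=0.10, p1=0.15))
```

The test usually stops long before the stream is exhausted, which is exactly the speed advantage sequential designs offer.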

Causal Inference Meets Hypothesis Testing

Causal inference represents one of the most profound integrations with Hypothesis Testing. Tools such as propensity score matching and instrumental variables help infer causal relationships from observational data. When their assumptions hold (for example, no unmeasured confounding, or a valid instrument), this combination lets insights from Hypothesis Testing move beyond correlation toward genuine cause-and-effect understanding.
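To make the idea concrete, the sketch below simulates observational data with one measured confounder and compares a naive difference in means with an inverse-probability-weighted (IPW) estimate. IPW is a close relative of propensity score matching rather than matching itself, and here the propensity score is estimated simply as the treated share within each confounder level. The data are simulated with a known true effect of 2.0; the estimate recovers it only because the sketch assumes no unmeasured confounding, which real observational data cannot guarantee.

```python
import random


def simulate(n=20000, seed=0):
    """Simulated observational data with one binary confounder z (true effect = 2.0)."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        z = rng.random() < 0.5                       # confounder, e.g. a high-risk segment
        t = rng.random() < (0.8 if z else 0.2)       # z makes treatment more likely
        y = 2.0 * t + 3.0 * z + rng.gauss(0.0, 1.0)  # z also raises the outcome
        rows.append((z, t, y))
    return rows


def naive_difference(rows):
    treated = [y for _, t, y in rows if t]
    control = [y for _, t, y in rows if not t]
    return sum(treated) / len(treated) - sum(control) / len(control)


def ipw_estimate(rows):
    """Inverse-probability-weighted estimate of the average treatment effect."""
    propensity = {}
    for level in (False, True):
        # propensity score e(z) = P(T = 1 | Z = z), estimated within each stratum
        stratum = [t for z, t, _ in rows if z == level]
        propensity[level] = sum(stratum) / len(stratum)
    n = len(rows)
    treated_mean = sum(y / propensity[z] for z, t, y in rows if t) / n
    control_mean = sum(y / (1 - propensity[z]) for z, t, y in rows if not t) / n
    return treated_mean - control_mean


rows = simulate()
print(f"naive: {naive_difference(rows):.2f}, IPW: {ipw_estimate(rows):.2f}")
```

The naive comparison absorbs the confounder's effect and lands near 3.8, while weighting each unit by the inverse of its probability of receiving the treatment it actually got brings the estimate back near 2.0.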

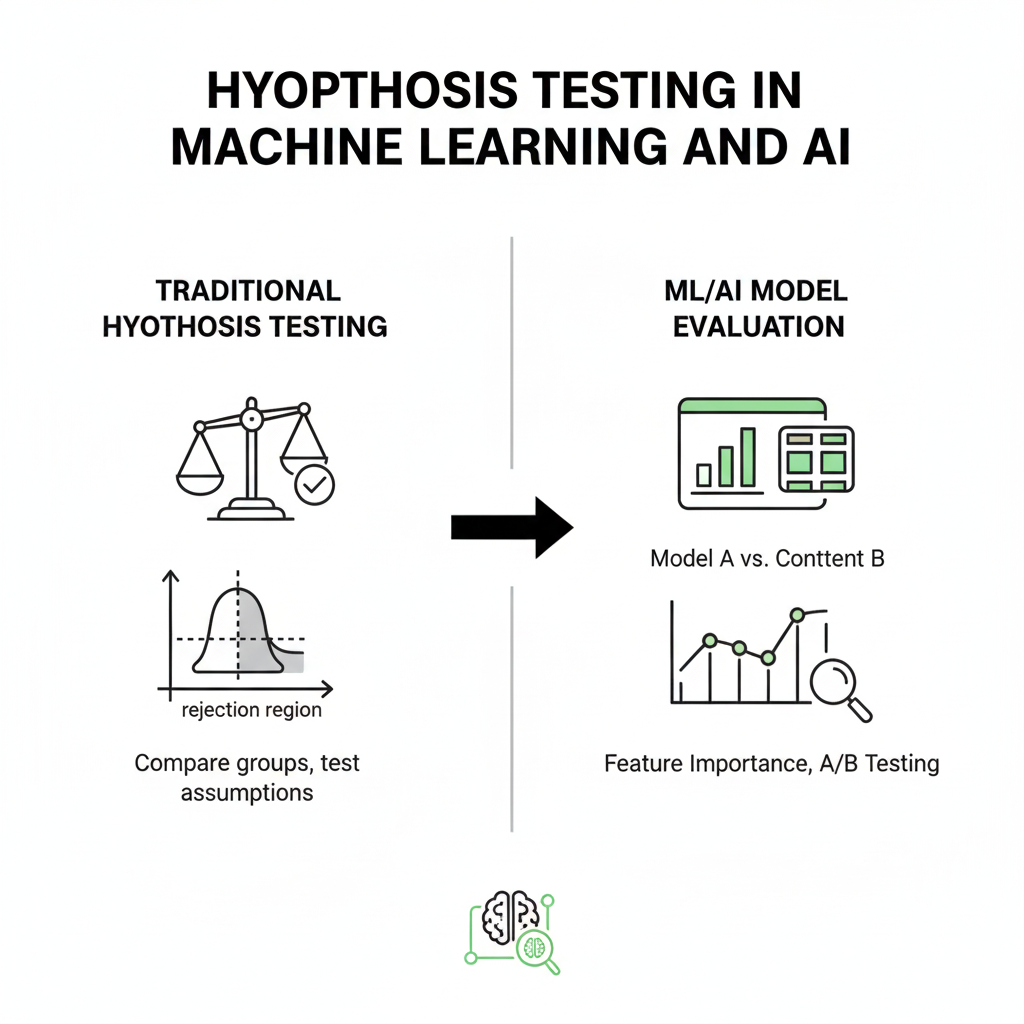

3. Hypothesis Testing in Machine Learning and AI

As machine learning models dominate the analytics landscape, Hypothesis Testing has found new life within these systems.

Feature Significance and Model Explainability

Determining which features truly drive predictions is critical. Modern workflows pair attribution methods such as SHAP, which quantify each variable's influence on predictions, with permutation tests that check whether that influence is larger than chance alone would produce.
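SHAP values describe how much each feature contributes to predictions, but they do not by themselves say whether that contribution could be due to chance; a permutation test can answer that question. The sketch below uses the absolute Pearson correlation between one feature and the target as its statistic, an assumption chosen for brevity. With a trained model, one would typically permute the feature and measure the drop in a validation score instead. The data and function names are hypothetical.

```python
import random


def pearson(x, y):
    """Sample Pearson correlation between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def feature_permutation_pvalue(feature, target, n_perm=1000, seed=0):
    """p-value for H0: the feature carries no linear information about the target."""
    rng = random.Random(seed)
    observed = abs(pearson(feature, target))
    shuffled = list(feature)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)  # break any link between feature and target
        if abs(pearson(shuffled, target)) >= observed:
            hits += 1
    return (hits + 1) / (n_perm + 1)


# Simulated data: the target depends on `signal` but not on `noise`.
rng = random.Random(1)
signal = [rng.gauss(0, 1) for _ in range(300)]
noise = [rng.gauss(0, 1) for _ in range(300)]
target = [0.5 * s + rng.gauss(0, 1) for s in signal]
print(feature_permutation_pvalue(signal, target))  # expected to be very small
print(feature_permutation_pvalue(noise, target))   # usually large
```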

Model Comparison and Validation

Instead of comparing models purely on accuracy, data scientists now use Hypothesis Testing to check whether observed performance differences are statistically meaningful. This guards against picking a model because of a difference that is really just noise in the test set, and it makes model evaluation fairer.
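When two classifiers are scored on the same test set, their errors are paired, so a test designed for paired data is appropriate. McNemar's test is one common choice: it looks only at the cases where exactly one model is correct. The sketch below implements its exact (binomial) form with the standard library; the predictions are hypothetical, and other approaches, such as paired bootstrap comparisons, are also used.

```python
from math import comb


def mcnemar_exact(y_true, pred_a, pred_b):
    """Exact McNemar test for two classifiers evaluated on the same examples.

    Returns (only_a_correct, only_b_correct, two_sided_p_value).
    """
    only_a = only_b = 0
    for truth, a, b in zip(y_true, pred_a, pred_b):
        a_ok, b_ok = a == truth, b == truth
        if a_ok and not b_ok:
            only_a += 1
        elif b_ok and not a_ok:
            only_b += 1
    n = only_a + only_b
    if n == 0:
        return only_a, only_b, 1.0  # the models never disagree
    # Under H0 each discordant case is equally likely to favor either model.
    k = min(only_a, only_b)
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return only_a, only_b, min(1.0, 2 * tail)


# Hypothetical test set of 100 positives: A wins 2 disagreements, B wins 10.
y_true = [1] * 100
pred_a = [1, 1] + [0] * 10 + [1] * 88
pred_b = [0, 0] + [1] * 10 + [1] * 88
print(mcnemar_exact(y_true, pred_a, pred_b))  # (2, 10, 0.03857421875)
```

The 88 cases where both models agree carry no information about which one is better, which is why the test ignores them.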

Bias and Fairness Testing

Ensuring ethical AI means testing whether algorithms treat all groups fairly. Hypothesis Testing frameworks now power fairness checks, examining whether model errors or outputs differ significantly across groups defined by gender, race, or region.
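A simple version of such a check compares a model's error rate between two groups with a two-proportion z-test. The sketch assumes equal error rates as the fairness criterion, which is only one of several competing definitions, and uses invented counts. With more than two groups or many metrics, a multiple-comparison correction would also be needed.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_ztest(errors_1, n_1, errors_2, n_2):
    """Two-sided z-test for H0: both groups have the same error rate."""
    rate_1, rate_2 = errors_1 / n_1, errors_2 / n_2
    pooled = (errors_1 + errors_2) / (n_1 + n_2)  # common error rate under H0
    se = sqrt(pooled * (1 - pooled) * (1 / n_1 + 1 / n_2))
    z = (rate_1 - rate_2) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical audit: 120 errors in 1,000 cases for group A, 80 in 1,000 for group B.
z, p = two_proportion_ztest(120, 1000, 80, 1000)
print(f"z = {z:.2f}, p = {p:.4f}")
```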

Concept Drift and Continuous Monitoring

In production, models can degrade as data patterns shift. Statistical tests such as the Kolmogorov–Smirnov test detect distributional changes early, which can trigger model retraining before performance declines.
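The two-sample Kolmogorov–Smirnov statistic is the largest vertical gap between the empirical distribution functions of a reference window and a recent window. The standard-library sketch below computes it and approximates the p-value with the asymptotic Kolmogorov distribution; in practice, `scipy.stats.ks_2samp` provides a tested implementation. The simulated "training" and "production" samples are assumptions for illustration.

```python
import bisect
import math
import random


def ks_2samp(sample_a, sample_b):
    """Two-sample KS statistic D with an asymptotic two-sided p-value."""
    a, b = sorted(sample_a), sorted(sample_b)
    n, m = len(a), len(b)
    d = 0.0
    for x in a + b:  # the gap between step-function ECDFs peaks at an observed value
        gap = abs(bisect.bisect_right(a, x) / n - bisect.bisect_right(b, x) / m)
        d = max(d, gap)
    en = math.sqrt(n * m / (n + m))
    lam = (en + 0.12 + 0.11 / en) * d  # small-sample correction to the scaling
    if lam < 0.2:
        # the true p-value is essentially 1 here, and the truncated series is unreliable
        return d, 1.0
    p = 2 * sum((-1) ** (k - 1) * math.exp(-2 * (k * lam) ** 2) for k in range(1, 101))
    return d, min(max(p, 0.0), 1.0)


rng = random.Random(3)
training = [rng.gauss(0.0, 1.0) for _ in range(500)]
production = [rng.gauss(0.5, 1.0) for _ in range(500)]  # simulated drift in the mean
print(ks_2samp(training, production))
```

A small p-value flags that the production window no longer looks like the training data; what happens next (alerting, retraining) is a policy decision layered on top of the test.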

4. Real-World Applications Across Industries

Healthcare and Life Sciences

In medicine, Hypothesis Testing drives clinical decision-making. Adaptive clinical trials use pre-planned interim analyses, often with Bayesian updating, allowing researchers to stop trials early when results are clear. Personalized medicine also depends on Hypothesis Testing to evaluate treatment effects for specific genetic profiles, which can accelerate breakthroughs.

Finance and Economics

Banks and investment firms use Hypothesis Testing for risk assessment, fraud detection, and strategy validation. By testing trading signals or model assumptions, analysts distinguish genuine predictive patterns from random noise.

Manufacturing and IoT

In manufacturing, Hypothesis Testing underpins quality control and predictive maintenance. Real-time sensor data enables multivariate tests that detect subtle deviations before equipment fails, which can prevent costly downtime.

Marketing and Digital Platforms

A/B testing remains one of the most common forms of Hypothesis Testing in business. Modern marketing platforms combine it with Bayesian methods, multiple comparison corrections, and adaptive experimentation, helping insights remain valid even when hundreds of tests run simultaneously.

5. Common Pitfalls and How to Avoid Them

Even in 2025, misuse of Hypothesis Testing remains widespread. Here’s how experts avoid key pitfalls:

P-Hacking and Confirmation Bias

Cherry-picking results or repeatedly testing until something appears significant undermines credibility. The modern solution is pre-registration—documenting hypotheses and analysis plans before viewing data.

Multiple Comparisons Problem

Testing many hypotheses increases the chance of false positives. The Benjamini–Hochberg procedure, which controls the false discovery rate, and Bayesian hierarchical modeling help keep these risks in check.
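The Benjamini–Hochberg step-up procedure is short enough to write out. It sorts the p-values, finds the largest rank k whose p-value is at most (k / m) * q, and rejects every hypothesis up to that rank, which controls the expected proportion of false discoveries at level q. The sketch assumes independent (or positively dependent) tests, as the procedure's guarantee requires. The p-values are made up, and `statsmodels.stats.multitest.multipletests(..., method="fdr_bh")` offers a maintained version.

```python
def benjamini_hochberg(p_values, q=0.05):
    """Return a list of booleans: True where the hypothesis is rejected at FDR level q."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # indices by ascending p-value
    cutoff_rank = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * q:
            cutoff_rank = rank  # keep the LARGEST rank that passes (step-up)
    rejected = [False] * m
    for idx in order[:cutoff_rank]:
        rejected[idx] = True
    return rejected


p_values = [0.038, 0.001, 0.3, 0.012, 0.035]
print(benjamini_hochberg(p_values))  # [True, True, False, True, True]
```

Note that 0.035 is rejected even though it exceeds its own threshold of 0.03: because 0.038 passes at rank 4, every smaller p-value is rejected too. That step-up behavior is what makes the procedure less conservative than a Bonferroni correction.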

Ignoring Practical Significance

A statistically significant result might have negligible business impact. Combining Hypothesis Testing with cost-benefit analysis ensures decisions make sense beyond p-values.
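One way to combine the two questions is to report an effect size and confidence interval, then compare the interval with the smallest difference that would matter to the business. The sketch below does this for two samples using a normal approximation. The minimum meaningful difference, the simulated data, and the rule that the whole interval must clear that threshold are assumptions; a real cost-benefit analysis would set the threshold from actual costs and gains.

```python
import random
from math import sqrt
from statistics import NormalDist, fmean, stdev


def effect_summary(control, treated, min_meaningful_diff, level=0.95):
    """Mean difference, normal-approximation CI, Cohen's d, and two verdicts."""
    diff = fmean(treated) - fmean(control)
    sd_c, sd_t = stdev(control), stdev(treated)
    se = sqrt(sd_c ** 2 / len(control) + sd_t ** 2 / len(treated))
    z = NormalDist().inv_cdf(0.5 + level / 2)
    ci = (diff - z * se, diff + z * se)
    # simple pooled SD; reasonable when the two groups have similar sizes
    cohens_d = diff / sqrt((sd_c ** 2 + sd_t ** 2) / 2)
    return {
        "diff": diff,
        "ci": ci,
        "cohens_d": cohens_d,
        "statistically_significant": ci[0] > 0 or ci[1] < 0,
        # assumes larger values are better: the whole CI must clear the threshold
        "practically_meaningful": ci[0] >= min_meaningful_diff,
    }


rng = random.Random(5)
control = [rng.gauss(10.0, 2.0) for _ in range(5000)]
treated = [rng.gauss(10.25, 2.0) for _ in range(5000)]  # small true lift
print(effect_summary(control, treated, min_meaningful_diff=0.5))
```

With large samples, a lift this small is clearly significant yet falls short of the assumed business threshold, which is exactly the gap between statistical and practical significance.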

Assumption Violations

Failing to validate assumptions such as normality or independence can distort results. In 2025, resampling and robust testing frameworks have become standard safeguards.

6. Emerging Trends in Hypothesis Testing (2025 and Beyond)

The coming years will reshape how Hypothesis Testing interacts with technology and human decision-making.

AI-Driven Automation

Artificial intelligence now assists in automating Hypothesis Testing—identifying patterns, suggesting tests, and summarizing results. AutoML platforms incorporate statistical inference directly, generating transparent explanations alongside model metrics.

Augmented Analytics

Analysts can now converse with data through natural language. Modern BI dashboards use Hypothesis Testing in the background to verify whether observed trends are statistically meaningful, turning descriptive dashboards into inferential storytelling tools.

Federated and Privacy-Aware Testing

With rising data privacy concerns, distributed Hypothesis Testing allows organizations to draw conclusions across multiple datasets without centralizing sensitive data.
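One privacy-friendly pattern is for each site to run its test locally and share only the test statistic and sample size. A coordinator can then combine the evidence, for example with Stouffer's weighted Z method shown below. This is one technique among several, not necessarily what any particular federated platform uses. It assumes the sites test the same hypothesis in the same direction on independent data, and the site values are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

# Hypothetical outputs of local tests: each site shares only (z, n), never raw records.
SITE_RESULTS = [
    {"site": "north", "z": 1.9, "n": 4000},
    {"site": "south", "z": 1.4, "n": 3000},
    {"site": "east", "z": 1.2, "n": 2000},
]


def stouffer_combined(results):
    """Weighted Stouffer combination of independent, same-direction z-scores."""
    weights = [sqrt(r["n"]) for r in results]  # weight each site by sqrt(sample size)
    numerator = sum(w * r["z"] for w, r in zip(weights, results))
    combined_z = numerator / sqrt(sum(w * w for w in weights))
    p_value = 2 * (1 - NormalDist().cdf(abs(combined_z)))  # two-sided
    return combined_z, p_value


z, p = stouffer_combined(SITE_RESULTS)
print(f"combined z = {z:.2f}, p = {p:.4f}")
```

None of the three sites is significant on its own at the 5% level, but the combined evidence is. Summary statistics can still leak information, so real deployments may add protections such as secure aggregation or differential privacy.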

Real-Time and Streaming Inference

In industries like logistics or cybersecurity, Hypothesis Testing is being applied to streaming data to detect anomalies instantly, bridging the gap between statistical inference and operational intelligence.
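A classic tool for this kind of streaming detection is the cumulative sum (CUSUM) chart, which accumulates deviations above a target mean and raises an alarm when the running sum exceeds a threshold. The sketch below is a one-sided version. The target mean, slack, and threshold values are assumptions that, in practice, are tuned to balance detection speed against the false-alarm rate.

```python
import random


def cusum_alarm(stream, target_mean, slack, threshold):
    """One-sided upper CUSUM: index of the first alarm, or None if none fires."""
    s = 0.0
    for i, x in enumerate(stream):
        # accumulate only deviations larger than the allowed slack; never drop below 0
        s = max(0.0, s + (x - target_mean - slack))
        if s > threshold:
            return i
    return None


# Hypothetical standardized metric: in control for 200 points, then shifts up by 1.5.
rng = random.Random(11)
stream = [rng.gauss(0.0, 1.0) for _ in range(200)] + [rng.gauss(1.5, 1.0) for _ in range(100)]
print(cusum_alarm(stream, target_mean=0.0, slack=0.5, threshold=5.0))
```

Because the statistic is updated one observation at a time in constant memory, it fits naturally into a streaming pipeline.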

Causal Machine Learning

By merging causal models with Hypothesis Testing, future systems can automatically detect and verify cause-effect relationships, helping organizations act confidently on insights rather than assumptions.

7. Tools and Frameworks for Modern Hypothesis Testing

Modern data professionals rely on a combination of programming languages and specialized tools.

Python

Python libraries such as SciPy, statsmodels, and Pingouin provide classical and modern tests. For Bayesian inference, PyMC and TensorFlow Probability support customizable probabilistic models.

R

In R, packages like infer, brms, and BayesFactor support a wide range of Hypothesis Testing approaches, from simple t-tests to hierarchical Bayesian models.

Visualization Tools

Communicating results is as important as running tests. Tools like Plotly, Seaborn, and ggplot2 help visualize distributions, effect sizes, and confidence intervals—making statistical stories accessible even to non-technical stakeholders.

Enterprise Platforms

Cloud-based solutions like Databricks, Google Vertex AI, and Azure Machine Learning let teams embed statistical tests in large-scale pipelines so that findings can be validated automatically.

8. Case Studies: Data-Driven Decisions in Action

While theoretical knowledge forms the foundation of analytics, the real value lies in how organizations apply it to solve complex challenges. In 2025, data-driven companies across industries are using statistical methods to test assumptions, validate models, and make smarter decisions.

Below are three detailed real-world examples showing how data science principles are transforming product design, fraud detection, and healthcare.

Case Study 1: Improving User Retention at a SaaS Company

Background

A fast-growing software startup noticed a worrying trend — although user sign-ups were increasing, long-term engagement was dropping. After studying user behavior, the team suspected that the onboarding experience was overwhelming and confusing, causing many users to leave early.

To confirm this, the product and data teams designed an experiment comparing two onboarding versions.

Approach

They created:

- Version A (Control): The current design with multiple steps and detailed instructions.

- Version B (Variant): A simplified flow with interactive tutorials and gamified progress indicators.

New users were randomly split between the two versions, and their 30-day retention was tracked — measured by how many users logged in more than twice after the first week.

Instead of a traditional frequentist analysis of the A/B test, the team adopted a Bayesian approach, which allowed them to continuously update probabilities as data came in. This provided clearer insights and made the results easier to communicate to non-technical stakeholders.

Findings

After four weeks, the analysis revealed a 92% probability that the new design improved 30-day retention by at least 5%.

The results were visualized with credible intervals and probability curves, helping management quickly understand the evidence without diving into complex statistical terminology.

Outcome

The leadership team approved a full rollout of the new onboarding flow. Within two months, overall retention improved by 8%, and the company noticed better conversion from free to paid plans.

Lesson Learned

Carefully designed experiments and data-driven evaluation can uncover what truly motivates user engagement. By validating assumptions before acting, the company saved time and made an evidence-backed product improvement.

Case Study 2: Detecting Fraud in E-Commerce

Background

A global e-commerce platform was battling an increase in fraudulent transactions. Their existing system relied on manually crafted rules — such as flagging purchases over certain amounts or unusual shipping locations — but it was becoming outdated as fraud patterns evolved.

The analytics team proposed an experiment to compare the current rules with a new machine learning–based fraud detection system.

Approach

They collected three months of transaction data, labeling each transaction as legitimate or fraudulent. Two models were compared:

- Model A: The legacy rules-based system.

- Model B: A machine learning algorithm using anomaly detection and behavior clustering.

The team measured accuracy, false positives, and false negatives for each model. Statistical tests were used to determine if the new system’s improvement was significant or due to chance.

Findings

The results were compelling. The new model outperformed the old system with:

- 7% higher accuracy,

- 12% fewer false positives, and

- a statistically significant difference at the 99% confidence level.

The team also monitored data drift — checking whether the new system remained reliable as new purchasing trends appeared. The model maintained stable performance across time and regions.

Outcome

After deployment, fraudulent activity dropped by 18% within the first quarter. Chargeback losses decreased, and customers reported fewer issues with blocked legitimate purchases.

Lesson Learned

This case showed that statistical validation is essential before replacing legacy systems. By proving the reliability of the new approach, the company confidently adopted machine learning for fraud detection without risking customer trust.

Case Study 3: Clinical Decision Support in Healthcare

Background

A large hospital network introduced an AI system designed to predict sepsis, a potentially fatal condition if not detected early. Before integrating it into clinical workflows, hospital administrators wanted evidence that the AI tool actually improved diagnosis speed and patient outcomes.

Approach

Ten hospitals participated in the trial:

- Control Group: Physicians followed standard diagnostic protocols.

- Experimental Group: Physicians received AI alerts when patient vitals indicated possible sepsis.

The study ran for six months. Key metrics included time to diagnosis, detection accuracy, and false alarm rate. Multiple statistical tests were applied to compare both groups — including t-tests for continuous variables and chi-square tests for categorical outcomes.

Findings

The experiment revealed:

- A 25% reduction in diagnosis time (p < 0.001),

- 85% sensitivity and 78% specificity in predictions,

- A manageable false alert rate well within clinical limits.

Although the short-term mortality difference was small, faster diagnoses gave clinicians a critical advantage in treatment decisions.

Outcome

The AI-assisted workflow was gradually implemented across all hospital branches. Over the next year, emergency departments reported faster intervention times and better coordination among medical teams.

Lesson Learned

Statistical evaluation ensures that new healthcare technologies genuinely add value. Beyond accuracy, transparency and clinical validation are crucial for patient safety and adoption.

Overall Insights

Across all three examples, several important lessons emerge:

- Decisions must be driven by data, not assumptions. Whether in tech, retail, or healthcare, controlled experimentation reveals what really works.

- Statistical methods adapt across domains. Techniques used for onboarding design can also validate medical tools or financial models.

- Communication is key. Translating statistical findings into clear business language ensures decision-makers trust and act on the results.

- Continuous validation matters. Even after deployment, monitoring for performance drift or behavior change ensures long-term reliability.

These real-world examples illustrate how analytical thinking can drive innovation, reduce uncertainty, and create measurable improvements — turning data into actionable insight that transforms how organizations operate.

9. The Future Outlook: Hypothesis Testing in an Intelligent World

As automation, AI, and quantum computing continue advancing, Hypothesis Testing will remain at the heart of explainable decision-making. It will not just validate findings but co-create insights, bridging human judgment with algorithmic precision.

In the near future, Hypothesis Testing will:

- Operate autonomously in AI-driven research pipelines.

- Support real-time decision-making with streaming data.

- Evolve into explainable inference systems that justify their conclusions.

- Enable cross-domain collaboration through federated inference frameworks.

Conclusion: A Timeless Framework for the Data-Driven Era

Despite massive technological change, hypothesis testing endures as the gold standard for statistical reasoning. Its journey—from the days of manual calculations to today’s AI-powered inference engines—tells a story not just of innovation but of resilience. In an era where machine learning models automate predictions and large language models simulate reasoning, the structured discipline of hypothesis testing remains the compass that keeps scientific inquiry on course.

In the early 20th century, statisticians like Fisher, Neyman, and Pearson laid the foundation for hypothesis-driven experimentation. Their principles shaped how we distinguish signal from noise, causation from correlation, and truth from coincidence. Fast forward to 2025, and the same core logic continues to guide how data scientists validate algorithms, measure business impact, and assess real-world interventions — only now, it’s executed at an unprecedented scale and speed.

Modern analytics platforms can test thousands of hypotheses in parallel, apply Bayesian inference dynamically, and even flag data drift before humans notice. Yet, the essence remains the same: using evidence to evaluate claims objectively. Whether in genomics, fintech, marketing, or space science, the discipline of forming, testing, and refining hypotheses underpins every serious analytical effort.

For today’s data scientists, hypothesis testing is more than a formula or a step in the workflow — it’s a mindset. It encourages curiosity (asking “what if?”), rigor (checking whether patterns are real), and humility (accepting uncertainty as part of learning). In a world overflowing with data, this mindset is what separates thoughtful insights from accidental correlations.

Every major technological shift — from big data to artificial intelligence — has expanded what we can test, not replaced why we test. The core philosophy of hypothesis testing ensures that human reasoning remains central to data-driven discovery. It enforces accountability: every conclusion must trace back to measurable evidence and transparent logic.

In 2025, organizations are realizing that trust in analytics is as important as accuracy. Whether it’s a pharmaceutical company verifying a drug’s effectiveness, a fintech startup detecting anomalies, or a social platform optimizing its recommendation system, stakeholders demand explanations. Hypothesis testing provides that explainability, grounding even the most complex AI models in verifiable statistical principles.

Moreover, as AI systems become autonomous decision-makers, the discipline of statistical validation acts as a safeguard. By continuously testing model outputs against controlled baselines, companies ensure that automation enhances rather than undermines human judgment. This synergy between classic statistics and modern AI marks a new frontier — where hypothesis testing evolves into AI-assisted scientific reasoning, capable of identifying, prioritizing, and even suggesting hypotheses in real time.

But while technology amplifies speed and scale, the human role remains irreplaceable. It’s the data scientist’s intuition, ethical awareness, and domain expertise that determine which questions are worth asking. Hypothesis testing, in this sense, is not just a computational tool — it’s a cognitive discipline that anchors human reasoning in measurable truth.

As we move further into an era defined by intelligent systems and automated decision-making, hypothesis testing ensures that decisions remain transparent, justifiable, and scientifically sound. It reminds us that true progress depends not on replacing human logic but on augmenting it with data-driven rigor.

In 2025 and beyond, mastering hypothesis testing is not just a statistical necessity—it’s a competitive advantage. Those who understand how to question, test, and verify will continue to lead industries, shape policy, and drive innovation responsibly.

Ultimately, the endurance of hypothesis testing reflects a timeless truth: in a world of infinite data, the ability to ask the right question will always matter more than having all the answers.