Confused about Hadoop vs. Spark? This guide explains everything: their architectures, key differences, use cases, and how they work together in modern data engineering.

Introduction: The Dawn of the Big Data Era

We live in a world drowning in data. From the social media posts we scroll through to the financial transactions we execute, from sensor readings in smart factories to the log files of global web services, an unprecedented volume, variety, and velocity of data is being generated every second. This phenomenon, termed “Big Data,” presented a fundamental challenge to traditional data processing systems. They simply couldn’t scale economically or handle the unstructured nature of this new data deluge.

Enter Hadoop and Spark. These two open-source frameworks, rooted in research from Google and UC Berkeley respectively, revolutionized how we store, process, and analyze massive datasets. They democratized Big Data, moving it from expensive, proprietary hardware to clusters of inexpensive, commodity servers. For over a decade, they have been the pillars of the modern data ecosystem.

But a common question persists: Hadoop vs. Spark, which one is better? The answer is not a simple either/or. While they are often mentioned together, they serve different, often complementary, purposes. Understanding their strengths, weaknesses, and core philosophies is crucial for anyone involved in data engineering, data science, or analytics.

This ultimate guide will take you on a deep dive into both frameworks. We will deconstruct their architectures, explore their components, and conduct a detailed feature-by-feature comparison. By the end, you will not only know the difference between Hadoop and Spark but also understand how they are used in tandem to power the data platforms of today’s most successful organizations.

Part 1: Deconstructing Hadoop – The Foundation of Distributed Storage and Processing

1.1 What is Hadoop?

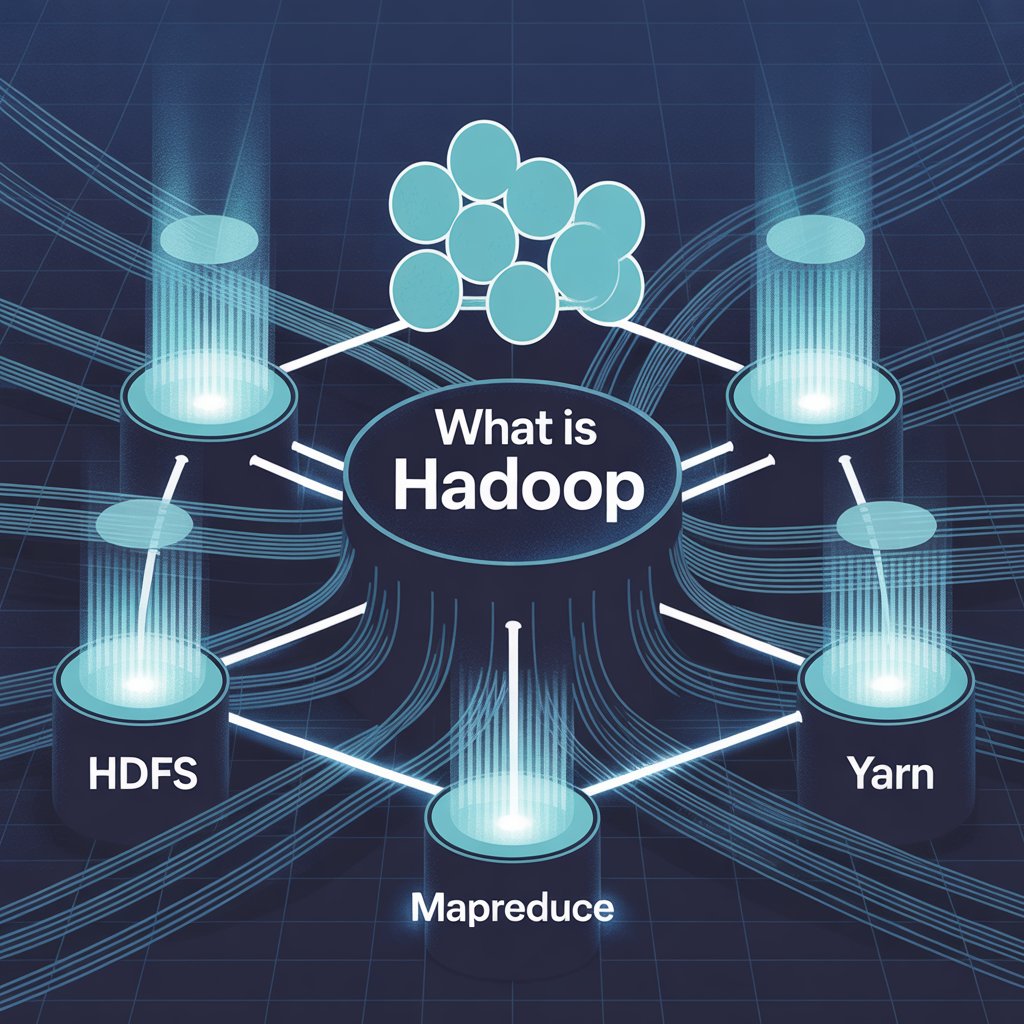

Apache Hadoop is not a single application but rather a comprehensive ecosystem of tools designed for the distributed storage and processing of very large datasets across clusters of computers using simple programming models. Its core strength lies in its ability to scale from a single server to thousands of machines, each offering local computation and storage.

The project’s genesis was Google’s famous 2003 paper on the Google File System (GFS), followed by the 2004 paper on the MapReduce programming model. Doug Cutting and Mike Cafarella created Hadoop, naming it after Cutting’s son’s yellow stuffed elephant. They later donated it to the Apache Software Foundation.

Hadoop is built on two fundamental principles:

- Data Locality: Instead of moving large datasets to a central server for processing, Hadoop moves the computation logic to the nodes where the data resides. This minimizes network congestion and enables highly efficient parallel processing.

- Fault Tolerance: Hardware failures are expected, not exceptional, when you’re managing thousands of servers. Hadoop is designed to handle such failures gracefully. If a node fails, the framework automatically redirects work to another node containing a replica of the data, ensuring the overall computation does not fail.
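As a rough illustration of these two principles, the sketch below chooses where to run a task by preferring a healthy node that already holds a replica of the block. The function `pick_node` and the node names are hypothetical, and this is not Hadoop's actual scheduler, only a minimal model of the idea.

```python
def pick_node(block_replicas, live_nodes):
    """Return (node, is_local) for a task that reads one block.

    block_replicas: set of nodes holding a copy of the block (hypothetical names).
    live_nodes: nodes that are currently healthy, in scheduling order.
    """
    live = list(live_nodes)
    if not live:
        raise RuntimeError("no live nodes available")
    # Data locality: prefer a live node that already stores the block.
    for node in live:
        if node in block_replicas:
            return node, True
    # Otherwise fall back to any live node; the block is read over the network.
    return live[0], False


replicas = {"node-a", "node-b", "node-c"}

# All nodes healthy: the task runs where the data lives.
first_choice = pick_node(replicas, ["node-a", "node-b", "node-c", "node-d"])

# node-a has failed: the work is redirected to another replica holder.
after_failure = pick_node(replicas, ["node-d", "node-b", "node-c"])
```

In this toy model a failure simply removes the node from `live_nodes`, and the task lands on the next node that still has a copy of the data.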

1.2 The Core Components of the Hadoop Ecosystem

Hadoop’s power comes from its modular ecosystem. While the core has evolved, the following components remain foundational.

1.2.1 Hadoop Distributed File System (HDFS)

HDFS is the storage layer of Hadoop. It’s a distributed, scalable, and portable file system written in Java.

- Architecture:

  - NameNode: The “master” server. It manages the file system namespace (the metadata, directory tree, and file-to-block mapping) and regulates access to files by clients. It is the single point of coordination, making it a critical component.

  - DataNode: The “slave” servers. There are typically many DataNodes per cluster. They are responsible for storing the actual data blocks and serving read/write requests from clients.

  - Secondary NameNode: Despite its name, it is not a hot standby for the NameNode. Its primary function is to periodically merge the namespace image with the edit log to prevent the log from becoming too large. It is a checkpointing mechanism.

- How it Works: When a file is ingested into HDFS, it is split into one or more blocks (typically 128 MB or 256 MB). These blocks are replicated across multiple DataNodes (default replication factor is 3) to ensure reliability and availability. The NameNode keeps track of which blocks make up a file and where those blocks are located.

- Key Features:

  - High Throughput: Optimized for reading large files sequentially rather than low-latency access to small files.

  - Scalability: Can scale to thousands of nodes and store petabytes of data.

  - Fault Tolerance: Data replication ensures data is not lost if individual disks or nodes fail.
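To put numbers on the block and replication description above, here is a small sketch that computes how many blocks a file needs and where the replicas could go. The helper names are hypothetical, and the round-robin placement is a simplifying assumption; real HDFS placement is rack-aware and decided by the NameNode.

```python
import math


def plan_blocks(file_size_mb, block_size_mb=128, replication=3):
    """Return (number of blocks, raw storage in MB) for one file."""
    num_blocks = math.ceil(file_size_mb / block_size_mb)
    # The last block only holds the remaining bytes, so raw usage
    # is simply the file size multiplied by the replication factor.
    raw_storage_mb = file_size_mb * replication
    return num_blocks, raw_storage_mb


def place_replicas(num_blocks, datanodes, replication=3):
    """Assign each block to `replication` distinct DataNodes (round-robin)."""
    if replication > len(datanodes):
        raise ValueError("need at least as many DataNodes as replicas")
    placement = {}
    for block_id in range(num_blocks):
        placement[block_id] = [
            datanodes[(block_id + i) % len(datanodes)] for i in range(replication)
        ]
    return placement


blocks, raw_mb = plan_blocks(1000)  # a 1000 MB file with default settings
layout = place_replicas(blocks, ["dn1", "dn2", "dn3", "dn4"])
```

With the defaults, a 1000 MB file becomes 8 blocks and occupies 3000 MB of raw disk across the cluster.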

1.2.2 Hadoop MapReduce

MapReduce is the original data processing engine and programming model of Hadoop. It is a batch-processing paradigm for processing vast amounts of data in parallel.

- The Programming Model: It works in two main phases:

  - Map Phase: The input data is split and fed to multiple “Mapper” tasks. Each Mapper processes a single split and produces a set of intermediate key-value pairs.

  - Reduce Phase: The intermediate key-value pairs are shuffled and sorted by key and then sent to “Reducer” tasks. Each Reducer processes all values associated with a particular key and produces the final output.

- Example: Word Count – The “Hello World” of MapReduce.

  - Input: “Hello World Hello Data”

  - Map: Produces -> (Hello, 1), (World, 1), (Hello, 1), (Data, 1)

  - Shuffle & Sort: Groups by key -> (Data, [1]), (Hello, [1, 1]), (World, [1])

  - Reduce: Sums the values -> (Data, 1), (Hello, 2), (World, 1)

- Architecture:

  - ResourceManager (YARN): The master daemon that manages the global assignment of resources (CPU, memory) to applications.

  - NodeManager: The slave daemon that runs on each node and is responsible for containers, which are the abstracted resources (CPU, RAM) allocated for tasks.

  - ApplicationMaster: Manages the lifecycle of a single application (e.g., one MapReduce job), negotiating resources from the ResourceManager and working with the NodeManagers to execute and monitor the tasks.
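The word count walkthrough above can be mimicked in plain Python to make the three steps concrete. This is a single-process sketch of the programming model, not Hadoop code; the function names are chosen for illustration only.

```python
from collections import defaultdict


def map_phase(line):
    """Mapper: emit (word, 1) for every word in one input line."""
    return [(word, 1) for word in line.split()]


def shuffle_and_sort(pairs):
    """Group intermediate values by key and order the keys."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return sorted(grouped.items())


def reduce_phase(key, values):
    """Reducer: sum all counts for one word."""
    return key, sum(values)


def word_count(lines):
    intermediate = []
    for line in lines:  # on a cluster, each split goes to its own Mapper
        intermediate.extend(map_phase(line))
    return [reduce_phase(key, values) for key, values in shuffle_and_sort(intermediate)]


result = word_count(["Hello World Hello Data"])
print(result)  # [('Data', 1), ('Hello', 2), ('World', 1)]
```

On a real cluster the map and reduce calls run as separate tasks on different nodes, and the shuffle moves data across the network between them.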

1.2.3 YARN (Yet Another Resource Negotiator)

Introduced in Hadoop 2.0, YARN is the cluster resource management layer. It decouples the programming model from resource management, a crucial evolution.

- Before YARN: MapReduce was responsible for both processing and cluster resource management. This was inflexible.

- After YARN: YARN acts as the cluster’s operating system. It manages resources and schedules applications, allowing multiple data processing engines like MapReduce, Spark, Tez, and others to run on the same Hadoop cluster, sharing a common resource pool. This transformed Hadoop from a single-purpose system into a multi-application data platform.
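The idea of several engines sharing one resource pool can be sketched with a toy allocator. `ToyResourceManager` is a hypothetical class written for this article; it only tracks memory and uses a first-fit rule, whereas real YARN schedulers are far more elaborate.

```python
class ToyResourceManager:
    """Hands out memory-only containers from a shared pool of nodes."""

    def __init__(self, node_memory_mb):
        # Free memory per node, e.g. {"node-1": 4096}
        self.free = dict(node_memory_mb)

    def allocate(self, app, memory_mb):
        """Grant one container on the first node with enough free memory."""
        for node, free_mb in self.free.items():
            if free_mb >= memory_mb:
                self.free[node] = free_mb - memory_mb
                return {"app": app, "node": node, "memory_mb": memory_mb}
        return None  # nothing fits right now; the request would stay pending

    def release(self, container):
        """Return a finished container's memory to the pool."""
        self.free[container["node"]] += container["memory_mb"]


rm = ToyResourceManager({"node-1": 4096, "node-2": 4096})
c1 = rm.allocate("mapreduce-job", 3072)  # fits on node-1
c2 = rm.allocate("spark-app", 2048)      # node-1 is too full, goes to node-2
c3 = rm.allocate("spark-app", 4096)      # no node has 4096 MB free
rm.release(c1)                           # the MapReduce container finishes
c4 = rm.allocate("spark-app", 4096)      # now node-1 can host it
```

The point of the sketch is that the MapReduce job and the Spark application draw from the same pool, and resources freed by one become available to the other.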

1.2.4 Other Key Hadoop Ecosystem Projects

- Apache Hive: A data warehouse infrastructure that provides a SQL-like interface (HiveQL) to query data stored in HDFS. It translates SQL queries into MapReduce, Tez, or Spark jobs.

- Apache HBase: A non-relational, distributed, column-oriented database that runs on top of HDFS. It provides real-time read/write random access for very large tables.

- Apache Pig: A high-level platform for creating MapReduce programs using a simple scripting language called Pig Latin.

- Apache Sqoop: A tool designed for efficiently transferring bulk data between Hadoop and structured datastores like relational databases.

- Apache Flume: A service for efficiently collecting, aggregating, and moving large amounts of log data into HDFS.

1.3 Strengths and Weaknesses of Hadoop

Strengths:

- Mature and Robust: A battle-tested platform with over a decade of development and deployment in production environments.

- Cost-Effective Storage: HDFS provides a highly scalable and cheap storage layer for massive datasets.

- Extensive Ecosystem: A rich set of tools (Hive, Pig, HBase, etc.) for various data tasks, all integrated with HDFS.

- Fault Tolerance: Built-in redundancy and recovery mechanisms make it extremely resilient to hardware failures.

Weaknesses:

- High Latency Processing: MapReduce writes intermediate results to disk, which is a slow I/O operation. This makes it unsuitable for real-time or interactive processing.

- Complex Programming Model: Writing native MapReduce code in Java can be complex and verbose compared to higher-level APIs like Spark’s.

- Not for Small Data: The overhead of launching jobs and managing the cluster is not justified for small datasets.

Part 2: Understanding Spark – The Lightning-Fast Unified Analytics Engine

2.1 What is Spark?

Apache Spark is an open-source, distributed computing system designed for fast computation and ease of use. It was developed in 2009 at UC Berkeley’s AMPLab and open-sourced in 2010. Its primary goal was to address the limitations of MapReduce’s disk-based processing, particularly for iterative algorithms and interactive data analysis.

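Spark’s key innovation is its in-memory computing engine. By keeping data in memory as much as possible, it avoids much of the disk I/O that slows MapReduce down. The Spark project has cited speedups of up to 100x over Hadoop MapReduce for in-memory workloads and around 10x for disk-based ones, although the actual gain depends heavily on the workload and the cluster configuration.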

Unlike Hadoop, which is both a storage (HDFS) and processing (MapReduce) framework, Spark is primarily a data processing engine. It does not have its own distributed storage system. It can read data from a variety of sources, including HDFS, Apache Cassandra, Amazon S3, and others, making it incredibly flexible.

2.2 The Core Concept: Resilient Distributed Datasets (RDDs)

At the heart of Spark’s programming model is the Resilient Distributed Dataset (RDD). An RDD is an immutable, distributed collection of objects that can be processed in parallel across a cluster.

- Resilient: If data in memory is lost, it can be recreated from its “lineage”: the sequence of operations used to build it. This is Spark’s primary mechanism for fault tolerance. It avoids the storage cost of keeping multiple replicas of intermediate data, at the price of recomputation when a partition is lost.

- Distributed: Data is partitioned across the nodes in the cluster.

- Dataset: A collection of partitioned elements.

RDDs support two types of operations:

- Transformations: Operations that create a new RDD from an existing one (e.g., map, filter, join). They are lazy, meaning they don’t compute their results right away. They just remember the transformation applied to the base dataset.

- Actions: Operations that return a value to the driver program or write data to an external storage system (e.g., count, collect, saveAsTextFile). Actions trigger the execution of the accumulated transformations.

This lazy evaluation allows Spark to optimize the entire data flow of a program before execution.
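Lazy evaluation is easier to see in a toy model. The class below is a hypothetical, single-machine stand-in for an RDD: transformations only record a step, and nothing runs until an action is called. It does not use Spark and leaves out partitioning and optimization entirely.

```python
class LazyDataset:
    """Toy stand-in for an RDD: records transformations, runs them on an action."""

    def __init__(self, source, lineage=()):
        self._source = source    # base data (a plain list here)
        self._lineage = lineage  # tuple of (kind, function) steps

    # Transformations: return a new dataset, compute nothing.
    def map(self, fn):
        return LazyDataset(self._source, self._lineage + (("map", fn),))

    def filter(self, fn):
        return LazyDataset(self._source, self._lineage + (("filter", fn),))

    def _compute(self):
        data = iter(self._source)
        for kind, fn in self._lineage:
            data = map(fn, data) if kind == "map" else filter(fn, data)
        return data

    # Actions: trigger execution of the recorded steps.
    def collect(self):
        return list(self._compute())

    def count(self):
        return sum(1 for _ in self._compute())


calls = []


def square(x):
    calls.append(x)  # record that the function really ran
    return x * x


numbers = LazyDataset([1, 2, 3, 4, 5])
evens_squared = numbers.map(square).filter(lambda x: x % 2 == 0)
calls_before_action = len(calls)  # still 0: nothing has executed yet
result = evens_squared.collect()  # the action runs the whole chain
```

Because each transformation returns a new object and leaves the original untouched, the sketch also mirrors the immutability of RDDs.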

2.3 The Spark Ecosystem and APIs

Spark provides a unified stack of libraries, making it a versatile tool for a wide range of data tasks.

2.3.1 Spark Core

The foundation of the platform, providing the basic I/O functionality, task scheduling, and the RDD API.

2.3.2 Spark SQL

One of the most popular Spark components. It provides two programming abstractions, DataFrames and Datasets, which are distributed collections of data organized into named columns. DataFrames let you use SQL or familiar DataFrame-style APIs (similar to pandas in Python or dplyr in R) to manipulate data. Under the hood, Spark SQL relies on a query optimizer called Catalyst, which rewrites queries into more efficient execution plans.

2.3.3 Spark Streaming

Enables processing of live data streams. It ingests data in mini-batches and performs RDD transformations on those batches. This provides a single engine for both batch and streaming data (the “lambda architecture” simplified). Note: The newer Structured Streaming API, built on Spark SQL, is the recommended approach for stream processing, offering end-to-end exactly-once fault-tolerance guarantees.
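The mini-batch idea can be sketched without Spark: incoming events are grouped by arrival time into fixed intervals, and each group is handed to ordinary batch logic. The function names and the sample events are assumptions made for this illustration.

```python
from collections import defaultdict


def micro_batches(events, batch_interval):
    """Group (timestamp, value) events into fixed-interval batches, in time order."""
    batches = defaultdict(list)
    for timestamp, value in events:
        batches[int(timestamp // batch_interval)].append(value)
    return [batches[index] for index in sorted(batches)]


def process_batch(values):
    """The same logic a batch job would apply to a static dataset."""
    return sum(values)


# Hypothetical stream: (seconds since start, amount)
events = [(0.2, 5), (0.9, 3), (1.1, 4), (2.5, 1), (2.7, 2)]
totals = [process_batch(batch) for batch in micro_batches(events, batch_interval=1.0)]
```

With a one-second interval the five events fall into three batches, so `process_batch` runs three times on small static lists rather than once per event.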

2.3.4 MLlib (Machine Learning Library)

A scalable machine learning library containing common learning algorithms and utilities, including classification, regression, clustering, collaborative filtering, dimensionality reduction, and underlying optimization primitives.

2.3.5 GraphX

A library for manipulating graphs (e.g., social network graphs) and performing graph-parallel computations.

2.4 How Spark Works: Architecture and Execution

- Driver Program: The process running the main() function of your application. It creates the SparkContext, which is the entry point to Spark functionality. The driver converts the user program into tasks and schedules them on the cluster.

- Cluster Manager: An external service for acquiring resources on the cluster (e.g., Spark Standalone, Apache Mesos, Hadoop YARN, or Kubernetes). Spark can run on various cluster managers, which gives it great deployment flexibility.

- Executor Nodes: Worker processes that run on the nodes in the cluster. They are responsible for executing the tasks assigned by the driver and storing data in memory or disk.

When code is submitted, the SparkContext connects to a cluster manager, which allocates executors. The driver then sends the application code (JAR or Python files) to the executors. Finally, the SparkContext sends tasks to the executors to run.
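A loose analogy for this flow, using only the Python standard library: a "driver" function splits the data into partitions, hands one task per partition to a pool of workers standing in for executors, and combines the partial results. The thread pool and all function names are assumptions for illustration; real executors are separate processes on other machines.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_partitions(data, num_partitions):
    """Driver side: divide the dataset into roughly equal partitions."""
    return [data[i::num_partitions] for i in range(num_partitions)]


def task(partition):
    """Executor side: the work performed on a single partition."""
    return sum(x * x for x in partition)


def run_job(data, num_executors=3):
    partitions = split_into_partitions(data, num_executors)
    # The pool plays the role of the executors allocated by the cluster manager.
    with ThreadPoolExecutor(max_workers=num_executors) as executors:
        partial_results = list(executors.map(task, partitions))
    # The driver combines the per-partition results into the final answer.
    return sum(partial_results)


total = run_job(list(range(10)))  # sum of squares of 0..9
```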


2.5 Strengths and Weaknesses of Spark

Strengths:

- Blazing Speed: In-memory processing makes it orders of magnitude faster than Hadoop MapReduce for iterative and interactive workloads.

- Ease of Use: High-level APIs in Java, Scala, Python (PySpark), and R make it accessible to a wider audience of developers and data scientists.

- Unified Engine: A single platform for batch processing, interactive queries, real-time streaming, machine learning, and graph processing.

- Advanced Analytics: Strong support for machine learning and graph processing libraries out-of-the-box.

Weaknesses:

- Memory Consumption: Can be resource-hungry, as it holds data in RAM. Improper management can lead to out-of-memory errors.

- No Native Storage: Relies on external storage systems like HDFS, S3, etc.

- Cost: While faster, the requirement for large amounts of RAM can make the hardware cost per node higher than for a Hadoop cluster focused purely on storage.

- Less Mature: Spark is very stable, but it is the younger project; Hadoop’s HDFS has a longer track record in petabyte-scale production deployments.

Part 3: Head-to-Head: Hadoop vs. Spark – A Detailed Comparison

Now that we have a solid understanding of both frameworks, let’s put them side-by-side. The table below summarizes the key differences.

| Feature | Apache Hadoop | Apache Spark |
|---|---|---|
| Primary Function | Distributed Storage (HDFS) & Batch Processing (MapReduce) | Distributed Data Processing Engine |
| Data Processing | Batch-only | Batch, Streaming, Interactive, Iterative |
| Speed | Slower, due to disk I/O | Up to 100x faster for in-memory tasks, 10x faster for disk |
| Data Handling | Stores data on disk | Caches data in-memory (RAM) |
| Fault Tolerance | Data replication (HDFS) & task re-execution | RDD Lineage – Recomputes lost data partitions |
| Ease of Use | Lower-level APIs (Java MapReduce). Higher-level tools like Hive help. | High-level APIs (Scala, Java, Python, R), DataFrames, SQL |
| Cost | Cheaper storage (disk-based) | Can be more expensive (requires large RAM) |
| Latency | High latency | Low latency |
| Machine Learning | Via Mahout (less active) | Native MLlib library, very active development |
| Real-time Processing | Not natively supported (needs Storm/Samza) | Native Spark Streaming & Structured Streaming |
| Graph Processing | Via Apache Giraph | Native GraphX library |
| Resource Management | Native YARN | Can use Standalone, YARN, Mesos, or Kubernetes |

3.1 Deep Dive into Key Differentiators

3.1.1 Performance: The In-Memory vs. On-Disk Battle

This is the most significant difference. Let’s visualize the data flow for an iterative operation, common in machine learning algorithms.

Hadoop MapReduce Workflow (Iterative Job):

[Disk] -> [Map -> Disk] -> [Reduce -> Disk] -> [Disk] -> [Map -> Disk] -> [Reduce -> Disk] -> ...

Each iteration is a separate MapReduce job, writing its full output to HDFS before the next job can read it. This repeated disk I/O creates a massive performance bottleneck.

Apache Spark Workflow (Iterative Job):

[Memory] -> [Map] -> [Reduce] -> [Memory] -> [Map] -> [Reduce] -> [Memory] -> ...

Intermediate results are kept in memory between iterations. Only the final result might be written to disk. This eliminates the vast majority of the I/O overhead.
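The difference between the two workflows can be made tangible by counting storage round trips. In the sketch below, `CountingStore` is a hypothetical in-memory stand-in for HDFS that only counts reads and writes; the update rule in `step` is arbitrary, and the per-job local disk writes of real MapReduce are not modeled.

```python
class CountingStore:
    """Pretend distributed storage that counts how often it is touched."""

    def __init__(self, data):
        self._data = list(data)
        self.reads = 0
        self.writes = 0

    def read(self):
        self.reads += 1
        return list(self._data)

    def write(self, data):
        self.writes += 1
        self._data = list(data)


def step(values):
    """One iteration of some iterative algorithm (arbitrary example)."""
    return [v / 2 + 1 for v in values]


def run_mapreduce_style(store, iterations):
    """Each iteration is its own job: read from storage, write back to storage."""
    values = None
    for _ in range(iterations):
        values = step(store.read())
        store.write(values)
    return values


def run_spark_style(store, iterations):
    """Read once, keep intermediate results in memory, write the final result."""
    values = store.read()
    for _ in range(iterations):
        values = step(values)
    store.write(values)
    return values


mr_store = CountingStore([10.0, 20.0, 30.0])
spark_store = CountingStore([10.0, 20.0, 30.0])
mr_result = run_mapreduce_style(mr_store, iterations=10)
spark_result = run_spark_style(spark_store, iterations=10)
```

Both runs produce the same values, but the MapReduce-style loop touches storage 20 times (10 reads, 10 writes) while the in-memory loop touches it twice.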

3.1.2 Fault Tolerance: Replication vs. Lineage

Both systems are fault-tolerant, but they achieve it in fundamentally different ways.

- Hadoop (HDFS): Uses data replication. Each block of data is copied to multiple DataNodes (default 3). If a node fails, the data is still available on other nodes. This is simple and effective but comes with a storage overhead of 200%.

- Spark (RDDs): Uses RDD lineage. Each RDD remembers how it was built from other datasets (its lineage). If a partition of an RDD is lost, Spark can recompute it by replaying the transformations from the original, stable data source. This is storage-efficient but can be computationally expensive if the lineage chain is long.
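Both mechanisms can be sketched in a few lines. The first function reproduces the storage arithmetic behind replication; the `ToyPartition` class is a hypothetical model of lineage-based recovery, in which a lost in-memory result is rebuilt by replaying the recorded steps against the stable source. Neither is actual HDFS or Spark code.

```python
def replication_overhead_pct(replication_factor):
    """Extra storage as a percentage of the original data size."""
    return (replication_factor - 1) * 100


class ToyPartition:
    """Hypothetical stand-in for one RDD partition."""

    def __init__(self, source_rows, lineage):
        self.source_rows = source_rows  # stable data, e.g. a block in HDFS
        self.lineage = lineage          # ordered list of transformation steps
        self.cached = None              # in-memory result, which may be lost

    def get(self):
        if self.cached is None:
            # Replay every transformation from the stable source.
            rows = list(self.source_rows)
            for transform in self.lineage:
                rows = transform(rows)
            self.cached = rows
        return self.cached


partition = ToyPartition(
    source_rows=[1, 2, 3, 4],
    lineage=[
        lambda rows: [r * 2 for r in rows],       # a map step
        lambda rows: [r for r in rows if r > 4],  # a filter step
    ],
)
first = partition.get()
partition.cached = None      # simulate losing the executor that held the data
recovered = partition.get()  # rebuilt from lineage, no replica needed
```

A longer `lineage` list means more work on every recovery, which is the computational cost mentioned above.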

3.1.3 Ease of Use and Development

Writing a “Word Count” program illustrates the difference in developer experience.

Hadoop MapReduce (Java):

Requires two separate classes (Mapper and Reducer), boilerplate code for setting up the job, and handling intermediate key-value pairs.

```java
// ... Extensive boilerplate code for Mapper, Reducer, and main driver ...

public static class TokenizerMapper extends Mapper<Object, Text, Text, IntWritable> {
    public void map(Object key, Text value, Context context) {...}
}

public static class IntSumReducer extends Reducer<Text, IntWritable, Text, IntWritable> {
    public void reduce(Text key, Iterable<IntWritable> values, Context context) {...}
}

// ... More setup code ...
```

Apache Spark (Python/PySpark):

The same logic can be expressed in a few, readable lines of code.

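```python
from pyspark.sql import SparkSession

# Create (or reuse) a Spark session and get the underlying SparkContext.
spark = SparkSession.builder.appName("WordCount").getOrCreate()
sc = spark.sparkContext

# Read the input as an RDD of lines.
text_file = sc.textFile("hdfs://...")

# Split lines into words, pair each word with 1, then sum the counts per word.
counts = text_file.flatMap(lambda line: line.split(" ")) \
                  .map(lambda word: (word, 1)) \
                  .reduceByKey(lambda a, b: a + b)

# Action: triggers the computation and writes the result.
counts.saveAsTextFile("hdfs://...")
```

Spark’s high-level APIs, especially DataFrames, make data manipulation feel similar to working with data in Python or R, significantly boosting developer productivity.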

Part 4: The Modern Data Stack: Hadoop and Spark Together

The “vs.” in “Hadoop vs. Spark” is often misleading. In reality, they are not mutually exclusive but are highly complementary technologies. The most common and powerful architecture in modern data platforms leverages the strengths of both.

4.1 A Synergistic Relationship

The classic and still prevalent pattern is:

HDFS as the reliable, scalable, and cost-effective data lake + Spark as the high-performance processing engine.

- HDFS for Storage: HDFS acts as the central repository, the “data lake,” where all raw and processed data from various sources is dumped and stored for the long term. Its replication provides durability, and its scalability handles petabytes of data at a low cost.

- Spark for Processing: Spark runs on the same cluster, reading data directly from HDFS. It performs all the heavy-lift data processing tasks: ETL (Extract, Transform, Load), data cleansing, feature engineering for machine learning, real-time stream processing, and interactive querying via Spark SQL.

This combination gives you the best of both worlds: the cheap, reliable storage of Hadoop and the speed and agility of Spark.

4.2 Real-World Use Case Scenarios

Let’s explore how this synergy plays out in different industries.

Use Case 1: E-commerce Recommendation Engine

- Challenge: An e-commerce giant needs to provide real-time product recommendations to users based on their current browsing behavior and purchase history, which involves analyzing terabytes of user and product data.

- Solution:

  - Hadoop’s Role (HDFS): Serves as the data lake. It stores massive historical datasets: user profiles, past purchase records, product catalogs, and clickstream logs.

  - Spark’s Role:

    - Batch (Spark MLlib): Trains machine learning models (like collaborative filtering) overnight on the vast historical data stored in HDFS to generate baseline recommendation models.

    - Streaming (Spark Streaming): Analyzes the real-time clickstream data of a user currently on the website. It combines this live data with the pre-computed model to instantly generate and serve personalized recommendations.

- Outcome: A responsive, personalized user experience that drives sales, powered by the batch and real-time capabilities of Spark operating on data stored in HDFS.

Use Case 2: Financial Services Fraud Detection

- Challenge: A bank needs to detect fraudulent credit card transactions in near real-time to block them before they are approved, while also running deep historical analysis to improve its fraud detection models.

- Solution:

  - Hadoop’s Role (HDFS): Archives years of transaction data, including both legitimate and fraudulent transactions. This serves as the training ground for fraud models.

  - Spark’s Role:

    - Batch (Spark SQL/MLlib): Queries the historical data in HDFS to identify complex, long-term fraud patterns and retrain detection models regularly.

    - Streaming (Structured Streaming): Monitors live transaction streams. Each incoming transaction is scored against the deployed ML model in milliseconds. If the fraud probability is above a threshold, an alert is triggered, and the transaction can be halted.

- Outcome: Reduced financial losses due to fraud through a combination of deep historical analysis and lightning-fast real-time decisioning.
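The scoring step in the streaming path can be sketched in plain Python. Everything specific here is an assumption made for illustration: the logistic model, the feature names `amount_zscore` and `foreign_merchant`, the weights, and the 0.9 threshold. In a real deployment the model would come from the batch training job and the loop would be a streaming query.

```python
import math


def fraud_probability(transaction, weights, bias):
    """Logistic score; the weights stand in for a model trained offline."""
    z = bias + sum(weights[name] * transaction.get(name, 0.0) for name in weights)
    return 1.0 / (1.0 + math.exp(-z))


def score_stream(transactions, weights, bias, threshold=0.9):
    """Yield (transaction id, probability, flagged) for each incoming transaction."""
    for tx in transactions:
        probability = fraud_probability(tx, weights, bias)
        yield tx["id"], probability, probability > threshold


# Hypothetical model parameters and transactions.
weights = {"amount_zscore": 1.5, "foreign_merchant": 2.0}
bias = -4.0
incoming = [
    {"id": "tx-1", "amount_zscore": 0.2, "foreign_merchant": 0.0},
    {"id": "tx-2", "amount_zscore": 3.0, "foreign_merchant": 1.0},
]

alerts = [tx_id for tx_id, _, flagged in score_stream(incoming, weights, bias) if flagged]
```

Only the second transaction crosses the threshold here, so it is the one that would trigger an alert and could be halted.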

Use Case 3: Telecommunications Network Analytics

- Challenge: A telecom operator wants to optimize its network performance and predict infrastructure failures by analyzing terabytes of network log data generated by cell towers and routers every hour.

- Solution:

  - Hadoop’s Role (HDFS): Acts as the central repository for all network performance management (NPM) data, call detail records (CDRs), and infrastructure logs.

  - Spark’s Role:

    - ETL (Spark): Cleanses, normalizes, and enriches the raw log data from HDFS, preparing it for analysis.

    - Analytics (Spark SQL): Data scientists and engineers run complex interactive queries on the prepared data to identify coverage gaps, network congestion patterns, and potential equipment faults.

- Outcome: Improved network reliability, better customer service, and proactive maintenance, leading to lower churn rates.

Part 5: Beyond the Duo: The Evolving Big Data Landscape

While Hadoop and Spark form a powerful core, the Big Data ecosystem continues to evolve. New technologies have emerged that address specific niches, often coexisting with or even replacing parts of the traditional Hadoop stack.

5.1 Cloud Data Warehouses and Lakehouses

- Cloud Data Warehouses (e.g., Snowflake, BigQuery, Redshift): These fully-managed services have gained immense popularity. They separate storage and compute, allowing them to scale independently and offer high-performance SQL analytics without the operational overhead of managing a Hadoop cluster. They are often faster and easier for business intelligence workloads.

- Lakehouse Architecture (e.g., Databricks Lakehouse Platform): This new paradigm attempts to combine the best of data lakes (low-cost, flexible storage of all data types) and data warehouses (high-performance SQL and ACID transactions). Apache Spark is often the primary engine for this architecture, working on open data formats (Parquet, Delta Lake) stored in cloud object storage (S3, ADLS).

5.2 Other Processing Frameworks

- Apache Flink: A true stream-processing framework that processes events as they arrive, unlike Spark’s micro-batching. It offers very low latency and sophisticated state management, making it a strong competitor to Spark Streaming for complex event processing applications.

- Apache Beam: A unified programming model that allows you to write batch and streaming data processing pipelines that can run on multiple execution engines, including Spark, Flink, and Google Cloud Dataflow.

5.3 The Future of Hadoop and Spark

- Hadoop’s Future: The trend is moving away from managing on-premise Hadoop clusters towards cloud storage (S3, ADLS, GCS). While the “Hadoop distribution” market has consolidated, HDFS as a concept is being superseded by cloud object storage. However, the principles of distributed storage and the YARN resource manager are still influential. The ecosystem projects like Hive, HBase, and Sqoop remain relevant.

- Spark’s Future: Spark continues to thrive and is arguably more popular than ever. Its position as a unified analytics engine is strengthened by its central role in the Lakehouse paradigm. Continuous improvements in its Structured Streaming API, the Photon execution engine (in Databricks), and deep integration with Kubernetes ensure it remains at the forefront of data processing technology.

Conclusion: Choosing the Right Tool for the Job

So, Hadoop or Spark? The answer, as we’ve seen, is nuanced.

- Choose Hadoop if your primary need is to build a massive, reliable, and cost-effective data lake for storing petabytes of raw data in its native format for the long term, and your primary processing workloads are batch-oriented and not time-sensitive.

- Choose Spark if your primary need is high-performance data processing. You need speed for ETL, interactive analytics, machine learning, or real-time streaming, and you are willing to provision clusters with sufficient memory.

However, the most powerful and common choice in the industry is not to choose at all, but to use them together. Let HDFS (or a modern cloud equivalent) be your scalable, durable storage layer, and let Spark be your versatile, high-speed processing engine on top of it.

The world of Big Data is not about a single winner-takes-all technology. It’s about building a robust, scalable, and efficient data platform by understanding the strengths of each tool and weaving them together to solve complex business problems. By mastering both Hadoop and Spark, you equip yourself with the foundational knowledge to navigate and build the data architectures of today and tomorrow.