Curious about what data science is? This guide breaks down the definition, lifecycle, components, tools, applications, and how to become a data scientist.

Welcome to the Data-Driven World

In the 21st century, data is the new oil. This phrase, coined by mathematician Clive Humby, has become a mantra for the digital age. But just like crude oil, data in its raw form isn’t inherently valuable. Its true power is unlocked only when it is refined, processed, and transformed into actionable insights. This process of refinement and extraction is the very essence of data science.

You encounter the results of data science every day. When Netflix recommends your next favorite show, when Google Maps finds the quickest route to your destination, when your bank flags a suspicious transaction, or when Amazon suggests a product you might need—you are witnessing data science in action. It is a revolutionary field that has fundamentally changed how businesses operate, how science is conducted, and how we understand the world.

But what exactly is data science? This comprehensive guide will demystify this interdisciplinary field. We will explore its definition, trace its fascinating evolution, break down its core components, delve into its real-world applications, and provide a roadmap for anyone looking to build a career in this high-demand domain. By the end of this article, you will have a thorough understanding of what data science is, why it matters, and how it is shaping our future.

Chapter 1: Defining the Indefinable – What Exactly is Data Science?

At its core, data science is an interdisciplinary field that uses scientific methods, processes, algorithms, and systems to extract knowledge and insights from structured and unstructured data. It blends tools, algorithms, and machine learning principles with the goal of discovering hidden patterns in raw data.

1.1 A Simple Analogy: The Data Science Kitchen

Imagine you are a chef with a kitchen full of random ingredients (data). Your goal is to create a delicious, nutritious meal (actionable insights).

- Data (Ingredients): You have vegetables, spices, meats, and grains. Some are fresh and clean (structured data), while others are unwashed and need preparation (unstructured data).

- Data Preparation (Washing and Chopping): You clean the vegetables, chop the onions, and marinate the meat. This is the crucial, often tedious, step of preparing your data for analysis.

- Exploratory Data Analysis (Tasting and Smelling): You taste the spices, smell the herbs. You understand the properties of each ingredient.

- Modeling (Cooking): You follow a recipe (algorithm) to combine the ingredients. You might experiment with different cooking techniques (models)—frying, baking, steaming—to see what works best.

- Insight and Deployment (Serving the Meal): The final dish is served to the customers, who enjoy it and provide feedback. The successful recipe is added to the restaurant’s menu (deployed into production).

Data science is the entire process of running this kitchen, from managing the inventory to serving the final product.

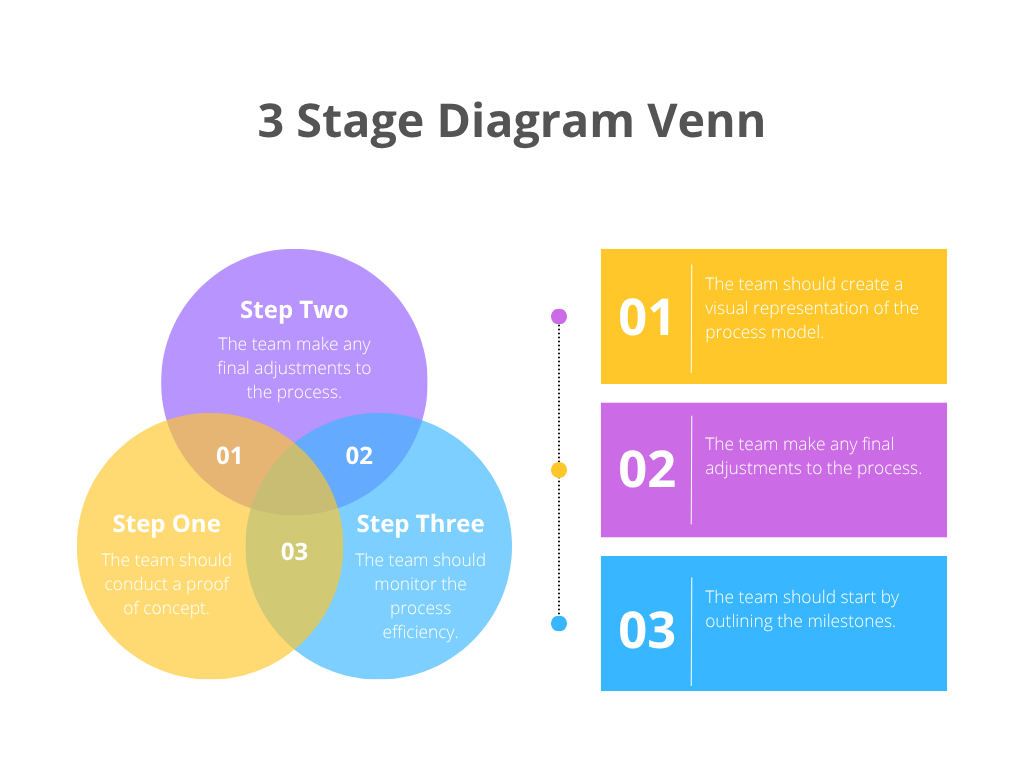

1.2 The Data Science Venn Diagram

One of the most popular ways to visualize data science is through the Venn diagram, famously articulated by Drew Conway. It illustrates data science as the intersection of three key domains:

- Hacking Skills (Computer Science/Programming): The ability to work with digital data requires proficiency in programming languages like Python or R to manipulate, process, and analyze data at scale. This also involves knowledge of databases, algorithms, and distributed computing.

- Math & Statistics Knowledge: This is the foundational bedrock. It includes expertise in statistics, probability, linear algebra, and calculus to build mathematical models, test hypotheses, and ensure that findings are statistically sound and not due to random chance.

- Substantive Expertise (Domain Knowledge): This is the often-overlooked but critical component. It refers to deep knowledge of the field you are working in—be it finance, healthcare, marketing, or astronomy. Without context, a data scientist might find a correlation that is statistically significant but practically meaningless. Domain knowledge ensures that the insights are relevant and actionable.

The sweet spot where all three circles overlap is Data Science. The intersections of only two circles represent other related fields:

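- Hacking Skills + Statistics = Machine Learning

- Statistics + Domain Knowledge = Traditional Research

- Hacking Skills + Domain Knowledge = Danger Zone (Conway’s label for people who can produce plausible-looking analyses without the statistical grounding to know whether they are valid)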

This diagram powerfully communicates that a successful data scientist is not just a programmer or a statistician but a versatile expert who can bridge these worlds.

Chapter 2: A Journey Through Time – The Evolution of Data Science

While “Data Scientist” was dubbed the “Sexiest Job of the 21st Century” by Harvard Business Review in 2012, the field’s roots run deep.

- 1960s – 1970s: The Foundations. Peter Naur used the term “datalogy” in the 1960s and later proposed “data science” as an alternative name for computer science, notably in his 1974 book Concise Survey of Computer Methods. Statistics and data analysis were already well-established fields, and John Tukey’s 1962 paper “The Future of Data Analysis” began to shift the focus from pure statistics to the broader process of analyzing data.

- 1980s – 1990s: The Rise of Data Mining and Business Intelligence. With the advent of relational databases, businesses started storing more data. The term “Knowledge Discovery in Databases” (KDD) was coined, and the field of data mining emerged, focusing on finding patterns in large datasets. Tools like Excel and early BI platforms (e.g., SAP, Oracle) made data analysis more accessible.

- 2000s: The Big Data Inflection Point. The digital explosion created massive volumes, velocities, and varieties of data—giving rise to the “3 V’s” of Big Data. Technologies like Hadoop and MapReduce were developed to store and process this data across distributed clusters of computers. In 2001, William S. Cleveland laid out a plan to “establish data science as a recognized, distinct field,” combining statistics with data computing.

- 2010s – Present: The Modern Era. The term “Data Scientist” gained widespread popularity. The confluence of cheaper computing power (cloud computing), advanced algorithms (deep learning), and the proliferation of data created the perfect storm for data science to flourish. It became a core function in most tech-first and forward-thinking companies.

Chapter 3: Deconstructing the Hype – Core Components of Data Science

To understand what a data scientist does, we must break down the data science lifecycle, often represented as a cyclical process.

3.1 The Data Science Lifecycle (CRISP-DM)

A common framework is the Cross-Industry Standard Process for Data Mining (CRISP-DM), which includes six key phases:

1. Business Understanding

This is the most critical step. Before touching any data, a data scientist must work with stakeholders to define the problem. What are the business objectives? What questions need to be answered? How will the results be measured? A project without clear business understanding is doomed to fail.

2. Data Understanding

This phase involves data collection and initial exploration. Data can come from various sources: internal databases, third-party APIs, public datasets, web scraping, or IoT sensors. The goal is to identify what data is available, its format, and its quality through descriptive statistics and visualization.
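
As a small illustration of the descriptive-statistics part of this phase, the sketch below summarizes one numeric column using only Python's standard library. The `order_values` list and the `summarize` helper are hypothetical and exist only for this example.

```python
import statistics

def summarize(values):
    """Return basic descriptive statistics, ignoring missing (None) entries."""
    present = [v for v in values if v is not None]
    return {
        "count": len(present),
        "missing": len(values) - len(present),
        "mean": statistics.mean(present),
        "median": statistics.median(present),
        "stdev": statistics.stdev(present),  # sample standard deviation
        "min": min(present),
        "max": max(present),
    }

# Hypothetical order values; None marks a missing record.
order_values = [12.0, 15.0, None, 14.0, 13.0, 250.0, None, 16.0]
summary = summarize(order_values)
for name, value in summary.items():
    print(f"{name}: {value}")
```

A mean far above the median, as in this made-up column, is the kind of signal that prompts a closer look at outliers and data quality before any modeling starts.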

3. Data Preparation (Data Wrangling/Munging)

Often cited as the most time-consuming part (up to 80%) of a data scientist’s job, this is where raw data is transformed into a clean, usable format. Tasks include:

- Handling Missing Values: Deciding whether to remove or impute missing data.

- Data Cleaning: Correcting inconsistencies, typos, and duplicates.

- Data Transformation: Normalizing, scaling, and encoding categorical variables.

- Feature Engineering: Creating new, more informative features from existing ones (e.g., creating “age” from a “date of birth” column).
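
The four tasks above can be sketched on a handful of records. This is a library-free illustration under stated assumptions: the `raw` records, the field names, and the `prepare` helper are all hypothetical, and median imputation and min-max scaling are just one reasonable choice among several. In practice a library such as Pandas would usually handle these steps.

```python
from datetime import date
from statistics import median

# Hypothetical raw records: one duplicate, one missing income, messy labels.
raw = [
    {"id": 1, "dob": "1990-05-17", "income": 52000.0, "plan": "Basic"},
    {"id": 2, "dob": "1985-11-02", "income": None, "plan": "premium"},
    {"id": 2, "dob": "1985-11-02", "income": None, "plan": "premium"},
    {"id": 3, "dob": "2001-01-30", "income": 38000.0, "plan": "basic "},
]

def prepare(records, today):
    # Data cleaning: drop duplicate ids and normalize inconsistent labels.
    seen, rows = set(), []
    for r in records:
        if r["id"] in seen:
            continue
        seen.add(r["id"])
        rows.append({**r, "plan": r["plan"].strip().lower()})

    # Missing values: impute income with the median of the observed values.
    fill = median(r["income"] for r in rows if r["income"] is not None)
    for r in rows:
        if r["income"] is None:
            r["income"] = fill

    # Transformation: min-max scale income and one-hot encode the plan.
    lo = min(r["income"] for r in rows)
    hi = max(r["income"] for r in rows)
    plans = sorted({r["plan"] for r in rows})
    for r in rows:
        r["income_scaled"] = (r["income"] - lo) / (hi - lo) if hi > lo else 0.0
        for p in plans:
            r[f"plan_{p}"] = int(r["plan"] == p)

        # Feature engineering: derive age in whole years from date of birth.
        born = date.fromisoformat(r["dob"])
        had_birthday = (today.month, today.day) >= (born.month, born.day)
        r["age"] = today.year - born.year - (0 if had_birthday else 1)
    return rows

clean = prepare(raw, today=date(2024, 6, 1))
for row in clean:
    print(row)
```

Passing `today` explicitly keeps the derived ages reproducible, which matters when the same preparation code is rerun later on new data.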

4. Modeling

Here, the “science” truly begins. Data scientists select appropriate machine learning algorithms and train them on the prepared data.

- Model Selection: Choosing the right algorithm (e.g., Linear Regression, Decision Trees, Neural Networks) based on the type of problem: regression (predicting a number), classification, or clustering.

- Training: Feeding the training data to the algorithm so it can learn the underlying patterns.

- Hyperparameter Tuning: Adjusting the model’s settings to optimize performance.
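
To make training and hyperparameter tuning concrete, here is a minimal sketch using a k-nearest-neighbors classifier written from scratch. The choice of k-NN, the toy points, and the helper names are assumptions made for illustration; the hyperparameter being tuned is `k`, the number of neighbors that vote.

```python
from collections import Counter
import math

def knn_predict(train, point, k):
    """Classify `point` by majority vote among its k nearest training examples."""
    nearest = sorted(train, key=lambda ex: math.dist(ex[0], point))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]

def accuracy(train, data, k):
    """Fraction of examples in `data` that are classified correctly."""
    hits = sum(knn_predict(train, x, k) == y for x, y in data)
    return hits / len(data)

# Hypothetical 2-D points with labels "a" and "b".
train = [((1.0, 1.0), "a"), ((1.2, 0.8), "a"), ((0.8, 1.1), "a"),
         ((3.0, 3.0), "b"), ((3.2, 2.9), "b"), ((2.9, 3.3), "b"),
         ((2.8, 3.1), "a")]  # one noisy label inside the "b" cluster
validation = [((1.1, 0.9), "a"), ((3.1, 3.1), "b"), ((2.85, 3.1), "b"),
              ((0.9, 1.0), "a")]

# Hyperparameter tuning: try several k values, keep the best on validation data.
scores = {k: accuracy(train, validation, k) for k in (1, 3, 5)}
best_k = max(scores, key=scores.get)
print(scores, best_k)
```

With `k=1` the single mislabeled training point misleads one validation prediction, while larger values of `k` outvote it. A real project would confirm the chosen setting on a separate test set that played no part in the tuning.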

5. Evaluation

Before deployment, the model must be rigorously evaluated to ensure it generalizes well to new, unseen data. This involves metrics such as accuracy, precision, recall, and F1-score for classification, or Mean Absolute Error (MAE) for regression, computed on data held out from training. The results are presented to stakeholders to verify that the model meets the business objectives defined in step one.
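
The metrics named above follow directly from counts of correct and incorrect predictions. The sketch below computes them by hand for a binary classifier and a small regression example; the labels, predictions, and function names are made up for illustration.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1 for a binary classifier."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    correct = sum(t == p for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": correct / len(pairs), "precision": precision,
            "recall": recall, "f1": f1}

def mean_absolute_error(y_true, y_pred):
    """Average absolute difference between actual and predicted values."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

# Hypothetical held-out labels and model predictions.
metrics = classification_metrics([1, 0, 1, 1, 0, 0, 1, 0],
                                 [1, 0, 0, 1, 0, 1, 1, 0])
mae = mean_absolute_error([3.0, 5.0, 2.5], [2.5, 5.0, 4.0])
print(metrics, mae)
```

Precision and recall answer different questions (how many flagged cases were right versus how many real cases were found), so which one matters more depends on the business objective set in step one.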

6. Deployment

This is where the model is integrated into the company’s decision-making systems. It could be a one-time report, a dashboard, or a real-time API that serves predictions to a live application (e.g., a fraud detection system). Deployment is not the end; the model must be continuously monitored for performance decay and retrained as new data comes in.
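
One simple way to watch for performance decay is to track accuracy over the most recent predictions once the true outcomes become known. The class below is a hypothetical sketch of that idea; the window size and threshold are arbitrary example values, not recommendations.

```python
from collections import deque

class AccuracyMonitor:
    """Track accuracy over the most recent predictions and flag decay."""

    def __init__(self, window=100, threshold=0.8):
        self.outcomes = deque(maxlen=window)  # True/False per prediction
        self.threshold = threshold

    def record(self, predicted, actual):
        self.outcomes.append(predicted == actual)

    def rolling_accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_retraining(self):
        # Only judge once the window is full, to avoid noisy early alarms.
        full = len(self.outcomes) == self.outcomes.maxlen
        return full and self.rolling_accuracy() < self.threshold

monitor = AccuracyMonitor(window=5, threshold=0.8)
for predicted, actual in [(1, 1), (0, 0), (1, 1), (1, 0), (0, 1)]:
    monitor.record(predicted, actual)
print(monitor.rolling_accuracy(), monitor.needs_retraining())
```

In a live system the "actual" labels often arrive with a delay (a fraud case may be confirmed days later), so a monitor like this typically runs on a lagged feed.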

3.2 Key Methodologies and Algorithms

A data scientist’s toolkit is filled with a variety of algorithms, broadly categorized as follows:

A. Supervised Learning

The model is trained on a labeled dataset (the “right answers” are provided).

- Use Cases: Regression (predicting continuous values) and classification (predicting categories).

- Algorithms:

- Linear/Logistic Regression: Linear regression predicts continuous values; logistic regression predicts binary outcomes.

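- Decision Trees & Random Forests: Versatile models for classification and regression. A single tree is easy to interpret; a random forest gives up some of that interpretability in exchange for the typically better accuracy of averaging many trees.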

- Support Vector Machines (SVM): Effective for complex classification tasks.

- Gradient Boosting Machines (XGBoost, LightGBM): Powerful ensemble methods that often win data science competitions.
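
As a minimal supervised-learning example, the sketch below fits a one-feature linear regression with the closed-form least-squares solution. The ad-spend and sales figures are invented, and `fit_line` is a hypothetical helper; libraries such as Scikit-learn provide the general multi-feature version.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one feature: returns (slope, intercept)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

# Labeled training data (hypothetical): ad spend and the sales that followed.
spend = [1.0, 2.0, 3.0, 4.0, 5.0]
sales = [3.1, 4.9, 7.2, 8.8, 11.0]

slope, intercept = fit_line(spend, sales)
predicted = slope * 6.0 + intercept  # prediction for an unseen input
print(slope, intercept, predicted)
```

The labels (`sales`) are what make this supervised: the fit minimizes the squared gap between the line and the known answers, and the learned line is then applied to an input it has not seen.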

B. Unsupervised Learning

The model finds patterns in unlabeled data without any guidance.

- Use Cases: Clustering and dimensionality reduction.

- Algorithms:

- K-Means Clustering: Groups data into a predefined number of clusters (e.g., customer segmentation).

- Principal Component Analysis (PCA): Reduces the number of variables in a dataset while preserving as much information as possible.
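
K-Means can be written in a few lines. The sketch below implements the standard two-step loop (assign points to the nearest centroid, then move each centroid to its cluster mean) on invented customer data. The initial centroids are chosen by hand, which is an assumption made to keep the run reproducible; real implementations usually pick them randomly or with a smarter seeding scheme.

```python
import math

def k_means(points, centroids, iterations=10):
    """Lloyd's algorithm: alternate assignment and centroid-update steps."""
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)),
                          key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster.
        centroids = [
            tuple(sum(coord) / len(c) for coord in zip(*c)) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
    return centroids, clusters

# Hypothetical customers as (visits per month, average basket value).
customers = [(1, 10), (2, 12), (1, 11), (9, 80), (10, 85), (8, 78)]
centroids, clusters = k_means(customers, centroids=[(1, 10), (9, 80)])
print(centroids)
print(clusters)
```

No labels are supplied anywhere: the two customer segments emerge purely from distances between points, which is what distinguishes this from the supervised examples.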

C. Deep Learning

A subset of machine learning using neural networks with many layers (“deep” networks).

- Use Cases: Image recognition, natural language processing, speech recognition.

- Algorithms:

- Convolutional Neural Networks (CNNs): For image and video analysis.

- Recurrent Neural Networks (RNNs) & LSTMs: For sequential data like text and time series.
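
The building block of a CNN is a small filter slid across an image. The sketch below shows only that single operation, not a full network: the image and the filter weights are hand-written for illustration, whereas a real CNN learns its filter weights during training.

```python
def convolve2d(image, kernel):
    """Valid (no padding) 2-D cross-correlation, as used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

# Hypothetical 4x4 grayscale image: dark left half, bright right half.
image = [[0, 0, 9, 9],
         [0, 0, 9, 9],
         [0, 0, 9, 9],
         [0, 0, 9, 9]]
# Hand-written vertical-edge filter; a CNN would learn such weights from data.
kernel = [[-1, 1],
          [-1, 1]]

feature_map = convolve2d(image, kernel)
for row in feature_map:
    print(row)
```

The output is nonzero only in the middle column, where the dark-to-bright edge sits. Stacking many such learned filters in successive layers is what lets a deep network move from edges to shapes to whole objects.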

Chapter 4: The Data Science Toolbox – Essential Technologies and Programming Languages

A craftsman is only as good as their tools. The modern data scientist relies on a robust ecosystem of programming languages, libraries, and platforms.

4.1 Programming Languages

- Python: The undisputed king of data science. Its simplicity, readability, and vast ecosystem of libraries (Pandas, NumPy, Scikit-learn, TensorFlow, PyTorch) make it the go-to choice for most data tasks.

- R: A language designed specifically for statistical computing and graphics. It is exceptionally powerful for exploratory data analysis and creating publication-quality visualizations.

- SQL (Structured Query Language): A non-negotiable skill. Data scientists use SQL to extract and manipulate data from relational databases.
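
Python and SQL are often used together. The sketch below runs a typical aggregation query against an in-memory SQLite database through Python's built-in `sqlite3` module; the `orders` table and its contents are hypothetical.

```python
import sqlite3

# In-memory database with a hypothetical `orders` table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (customer TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("ana", 20.0), ("ben", 35.0), ("ana", 15.0), ("cy", 5.0), ("ben", 10.0)],
)

# Aggregate spend per customer and keep only those above a threshold.
query = """
    SELECT customer, COUNT(*) AS n_orders, SUM(amount) AS total
    FROM orders
    GROUP BY customer
    HAVING SUM(amount) > ?
    ORDER BY total DESC
"""
top_customers = conn.execute(query, (30.0,)).fetchall()
conn.close()
print(top_customers)
```

Pushing the grouping and filtering into the database, rather than pulling every row into Python first, is usually the more scalable habit when tables are large.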

4.2 Libraries and Frameworks

- Data Manipulation: Pandas (Python), dplyr (R)

- Numerical Computing: NumPy (Python)

- Machine Learning: Scikit-learn (Python), caret (R)

- Deep Learning: TensorFlow, PyTorch, Keras (Python)

- Data Visualization: Matplotlib, Seaborn, Plotly (Python), ggplot2 (R)

4.3 Platforms and Environments

- Jupyter Notebook: An interactive, web-based environment that allows data scientists to write code, visualize results, and add narrative text in a single document. It is ideal for experimentation and collaboration.

- Cloud Platforms: AWS (SageMaker), Google Cloud (Vertex AI, the successor to AI Platform), and Microsoft Azure (Azure Machine Learning) provide scalable infrastructure for storing data and training complex models.

- Big Data Technologies: Apache Spark is a leading engine for processing large-scale data, while Hadoop HDFS is a distributed file system for storage.

Chapter 5: Data Science in Action – Real-World Applications Across Industries

Data science is not a theoretical discipline; its value is proven in its application. Here’s how it is transforming various sectors:

- Healthcare:

- Medical Image Analysis: Using CNNs to detect tumors in MRI scans and X-rays, in some studies with accuracy comparable to that of human radiologists.

- Drug Discovery: Accelerating the development of new drugs by predicting molecular interactions.

- Predictive Analytics: Forecasting disease outbreaks and patient readmission rates.

- Finance:

- Fraud Detection: Using anomaly detection algorithms to identify suspicious credit card transactions in real-time.

- Algorithmic Trading: Developing models that can execute trades at high speeds based on market data.

- Risk Management: Assessing the creditworthiness of loan applicants.

- E-commerce & Retail:

- Recommendation Systems: The engines behind “customers who bought this also bought…” on Amazon and the personalized viewing suggestions on Netflix.

- Customer Segmentation: Grouping customers based on purchasing behavior for targeted marketing campaigns.

- Supply Chain Optimization: Forecasting demand to optimize inventory levels and reduce costs.

- Technology:

- Search Engines: Google’s PageRank algorithm is a classic example of data science.

- Natural Language Processing (NLP): Powering virtual assistants (Siri, Alexa), sentiment analysis, and machine translation.

- Computer Vision: Enabling facial recognition on Facebook and self-driving car technology.

- Other Industries: From optimizing farming yields in agriculture (precision agriculture) to predicting mechanical failures in manufacturing (predictive maintenance), the applications are virtually limitless.
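
The fraud detection use case listed under Finance can be illustrated with a very simple anomaly detector. The sketch below flags transactions whose modified z-score, based on the median absolute deviation, exceeds a cutoff. This particular technique, the 3.5 cutoff (a commonly used default), and the transaction amounts are assumptions chosen for illustration; production systems use far richer features and models.

```python
from statistics import median

def flag_anomalies(amounts, threshold=3.5):
    """Flag values whose modified z-score (based on the median absolute
    deviation) exceeds the threshold."""
    med = median(amounts)
    mad = median(abs(a - med) for a in amounts)
    if mad == 0:
        return []  # no spread to measure against
    return [a for a in amounts if 0.6745 * abs(a - med) / mad > threshold]

# Hypothetical card transactions for one customer, in dollars.
transactions = [12.5, 9.9, 14.2, 11.0, 13.3, 10.8, 950.0, 12.1]
suspicious = flag_anomalies(transactions)
print(suspicious)
```

The median is used instead of the mean because a single extreme value barely moves it, so the outlier cannot hide itself by inflating the baseline it is compared against.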

Chapter 6: The Human Element – Roles, Responsibilities, and Team Structure

The title “Data Scientist” is often an umbrella term. In larger organizations, the work is distributed among specialized roles:

- Data Analyst: Focuses on interpreting existing data, creating reports and dashboards, and providing initial insights. Strong in SQL and visualization tools (Tableau, Power BI).

- Machine Learning Engineer: The bridge between data science and software engineering. They focus on building, deploying, and maintaining scalable machine learning models in production. Strong in software engineering and MLOps.

- Data Engineer: The data plumber. They build and maintain the data infrastructure—data pipelines, databases, and data warehouses—that allows data scientists to access clean data. Strong in distributed computing and ETL processes.

- Business Intelligence (BI) Analyst: Similar to a data analyst but with a stronger focus on business metrics and KPIs, often using pre-built BI platforms.

A Data Scientist often sits in the middle, possessing a blend of skills from all these roles, with a particularly strong emphasis on statistics and advanced modeling.

Chapter 7: The Path to Becoming a Data Scientist

The demand for data scientists is skyrocketing. If you are inspired to join this field, here is a potential roadmap:

- Build a Strong Educational Foundation:

- A bachelor’s degree in a quantitative field like Computer Science, Statistics, Mathematics, or Engineering is common. Many successful data scientists also hold Master’s or Ph.D. degrees.

- Develop Core Technical Skills:

- Programming: Master Python and its key data science libraries (Pandas, NumPy, Scikit-learn).

- Statistics & Math: Solidify your understanding of probability, linear algebra, and calculus.

- SQL: Become proficient in writing complex queries.

- Machine Learning: Understand the theory and application of key algorithms.

- Gain Practical Experience:

- Work on Projects: The best way to learn is by doing. Start with personal projects on topics that interest you. Analyze a public dataset, build a simple recommendation system, or participate in competitions on platforms like Kaggle.

- Build a Portfolio: Create a GitHub repository to showcase your code and a blog or website to explain your projects and findings. This is your tangible proof of skill for employers.

- Cultivate Soft Skills:

- Communication: You must be able to explain complex technical concepts to non-technical stakeholders.

- Curiosity: A natural inquisitiveness to ask the right questions is invaluable.

- Critical Thinking: The ability to question assumptions and interpret results logically.

- Consider Formal Certification:

- Bootcamps and online courses (from Coursera, edX, Udacity) can provide structured learning paths and credentials.

Chapter 8: The Future of Data Science and Ethical Considerations

As data science continues to evolve, several trends and challenges are coming to the forefront:

- The Rise of MLOps: The practice of streamlining and automating the end-to-end machine learning lifecycle, making model deployment and management more reliable and efficient.

- AutoML (Automated Machine Learning): Tools that automate parts of the modeling process, making data science more accessible to non-experts.

- Explainable AI (XAI): As models (especially deep learning) become more complex, there is a growing demand for transparency and interpretability. Understanding why a model made a certain decision is crucial for trust and ethics.

- Edge Computing: Running models directly on devices (like smartphones or sensors) instead of in the cloud, reducing latency and bandwidth use.

The Ethical Imperative

With great power comes great responsibility. Data scientists must be vigilant about:

- Bias and Fairness: Models can perpetuate and even amplify existing societal biases present in the training data. Ensuring fairness is an active area of research.

- Privacy: Techniques like differential privacy and federated learning are being developed to extract insights without compromising individual user data.

- Accountability: Who is responsible when an algorithm makes a mistake? Establishing clear lines of accountability is essential.
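
To make the bias and fairness concern concrete, one basic check compares how often a model gives a positive decision to different groups. The sketch below computes that gap, sometimes called the demographic parity difference. It is only one of several fairness criteria, and the groups and loan decisions here are invented for illustration.

```python
def selection_rates(decisions):
    """Share of positive decisions per group.

    `decisions` is a list of (group, approved) pairs.
    """
    totals, approved = {}, {}
    for group, ok in decisions:
        totals[group] = totals.get(group, 0) + 1
        approved[group] = approved.get(group, 0) + int(ok)
    return {g: approved[g] / totals[g] for g in totals}

# Hypothetical loan decisions produced by a model for two groups.
decisions = [("A", True), ("A", True), ("A", False), ("A", True),
             ("B", True), ("B", False), ("B", False), ("B", False)]

rates = selection_rates(decisions)
parity_gap = max(rates.values()) - min(rates.values())
print(rates, parity_gap)
```

A large gap does not prove the model is unfair on its own, since the groups may differ in relevant ways, but it is a signal that the training data and features deserve scrutiny.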

Data Science – The Lens for Understanding Our World

Data science is more than just a job title or a technical skill set; it is a fundamental shift in problem-solving. It provides us with a powerful lens to understand the complexities of our world, from the inner workings of a single cell to the global dynamics of financial markets. It turns guesswork into informed decision-making and intuition into quantifiable evidence.

While the tools and technologies will continue to change, the core mission of data science will remain: to extract signal from noise, to find meaning in chaos, and to create a smarter, more efficient, and more insightful future. The journey to mastering data science is challenging but immensely rewarding, offering the opportunity to be at the forefront of innovation in virtually every field imaginable. The data is there, waiting. The question is, what will you discover?